Setting Up a Graylog Cluster on CentOS: A Detailed Guide

1. Introduction to Graylog2

Graylog is a simple, easy-to-use and fairly full-featured log management tool. Compared with the ELK stack, its advantages are:

- Simple to deploy and maintain: it is an all-in-one solution, rather than three separate systems integrated together as in ELK.

- Its query syntax is simpler than the Elasticsearch JSON query DSL, e.g. source:mongo AND response_time_ms:>5000.

- Simple alerting is built in.

- Search conditions can be exported as JSON text, which makes it easy to write scripts that search through the Elasticsearch REST API.

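- You can write your own log collection scripts and send the logs to the Graylog server with curl/nc. The message format is Graylog's own GELF, and Fluentd and Logstash both have output plugins that emit GELF messages. Writing your own collector gives you a lot of freedom: in practice, all you need is to watch the log file for modify events with inotifywait and send the newly appended lines to the Graylog server with curl/netcat.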

- The UI is fairly friendly, and search results are highlighted.

That said, Graylog is still less extensible than ELK.
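The do-it-yourself collector from the list above can be sketched in Python. This is a minimal illustration under several assumptions, not a production collector: a GELF UDP input has already been created in Graylog and listens on port 12201 of 10.2.2.42, each message fits into a single datagram (no chunking, no compression), and the file is polled like tail -f instead of being watched with inotifywait. The log path and the source_file field are hypothetical. A GELF 1.1 message is a JSON object with version, host and short_message; custom fields must start with an underscore.

```python
import json
import socket
import time


def build_gelf(host, short_message, level=6, **extra):
    """Return a GELF 1.1 message as a dict; extra fields get the required "_" prefix."""
    message = {
        "version": "1.1",
        "host": host,
        "short_message": short_message,
        "timestamp": time.time(),  # seconds since the epoch, fractions allowed
        "level": level,            # syslog severity, 6 = informational
    }
    for key, value in extra.items():
        message["_" + key] = value
    return message


def send_gelf_udp(message, server="10.2.2.42", port=12201):
    """Send one uncompressed, unchunked GELF message as a single UDP datagram."""
    payload = json.dumps(message).encode("utf-8")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (server, port))


def follow(path, poll_seconds=1.0):
    """Yield complete lines appended to path, polling like `tail -f`."""
    with open(path, "r", encoding="utf-8", errors="replace") as f:
        f.seek(0, 2)  # skip existing content, start at end of file
        while True:
            position = f.tell()
            line = f.readline()
            if line.endswith("\n"):
                yield line.rstrip("\n")
            else:
                f.seek(position)  # nothing new, or a half-written line: retry later
                time.sleep(poll_seconds)


# Example (not executed here; the log path is hypothetical):
# for line in follow("/var/log/app.log"):
#     send_gelf_udp(build_gelf(socket.gethostname(), line, source_file="/var/log/app.log"))
```

Polling keeps the sketch dependency-free; swapping in inotify events would only change how follow() waits, not what is sent.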

Graylog consists of the following components:

- Graylog: provides Graylog's external interface; CPU-intensive.

- Elasticsearch: persistent storage and search of the log data; I/O-intensive.

- MongoDB: stores Graylog's configuration.

2. Graylog Architecture

Single-server architecture:

Graylog cluster architecture:

3. Installing Graylog

What I set up here is a cluster, but each server runs Elasticsearch, Graylog and MongoDB together.

① Prerequisites:

$ sudo yum install java-1.8.0-openjdk-headless.x86_64

$ sudo sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

$ sudo setenforce 0

Install pwgen (it comes from the EPEL repository):

$ sudo yum install epel-release

$ sudo yum install pwgen

② Installing MongoDB:

Create the file /etc/yum.repos.d/mongodb-org-3.2.repo with the following content:

[mongodb-org-3.2]

name=MongoDB Repository

baseurl=https://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/3.2/x86_64/

gpgcheck=1

enabled=1

gpgkey=https://www.mongodb.org/static/pgp/server-3.2.asc

Install MongoDB:

$ sudo yum install mongodb-org

Start the service:

$ sudo chkconfig --add mongod

$ sudo systemctl daemon-reload

$ sudo systemctl enable mongod.service

$ sudo systemctl start mongod.service

③ Installing Elasticsearch:

Graylog 2.3.x supports Elasticsearch 5.x.

First import the Elastic GPG key and create the repository file, then install with yum:

$ rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

$ cat /etc/yum.repos.d/elasticsearch.repo

[elasticsearch-5.x]

name=Elasticsearch repository for 5.x packages

baseurl=https://artifacts.elastic.co/packages/5.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

$ sudo yum install elasticsearch

Edit the Elasticsearch configuration file /etc/elasticsearch/elasticsearch.yml and add the cluster settings:

# cat /etc/elasticsearch/elasticsearch.yml

# ======================== Elasticsearch Configuration =========================

#

# NOTE: Elasticsearch comes with reasonable defaults for most settings.

# Before you set out to tweak and tune the configuration, make sure you

# understand what are you trying to accomplish and the consequences.

#

# The primary way of configuring a node is via this file. This template lists

# the most important settings you may want to configure for a production cluster.

#

# Please consult the documentation for further information on configuration options:

# https://www.elastic.co/guide/en/elasticsearch/reference/index.html

#

# ---------------------------------- Cluster -----------------------------------

#

# Use a descriptive name for your cluster:

#

cluster.name: graylog

#

# ------------------------------------ Node ------------------------------------

#

# Use a descriptive name for the node:

#

node.name: shop-log-

#

# Add custom attributes to the node:

#

#node.attr.rack: r1

#

# ----------------------------------- Paths ------------------------------------

#

# Path to directory where to store the data (separate multiple locations by comma):

#

path.data: /data/elasticsearch/db

#

# Path to log files:

#

path.logs: /data/elasticsearch/logs

#

# ----------------------------------- Memory -----------------------------------

#

# Lock the memory on startup:

#

#bootstrap.memory_lock: true

#

# Make sure that the heap size is set to about half the memory available

# on the system and that the owner of the process is allowed to use this

# limit.

#

# Elasticsearch performs poorly when the system is swapping the memory.

#

# ---------------------------------- Network -----------------------------------

#

# Set the bind address to a specific IP (IPv4 or IPv6):

#

network.host: 10.2.2.42

#

# Set a custom port for HTTP:

#

http.port: 9200

#

# For more information, consult the network module documentation.

#

# --------------------------------- Discovery ----------------------------------

#

# Pass an initial list of hosts to perform discovery when new node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

#

# List the addresses of the other two nodes here

discovery.zen.ping.unicast.hosts: ["10.2.2.41", "10.2.2.43"]

#

# Prevent the "split brain" by configuring the majority of nodes (total number of master-eligible nodes / 2 + 1):

#

discovery.zen.minimum_master_nodes: 2

#

# For more information, consult the zen discovery module documentation.

#

# ---------------------------------- Gateway -----------------------------------

#

# Block initial recovery after a full cluster restart until N nodes are started:

#

#gateway.recover_after_nodes: 3

#

# For more information, consult the gateway module documentation.

#

# ---------------------------------- Various -----------------------------------

#

# Require explicit names when deleting indices:

#

#action.destructive_requires_name: true

http.cors.enabled: true

http.cors.allow-origin: "*"

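The discovery.zen.minimum_master_nodes value follows the formula in the comment above: the number of master-eligible nodes divided by 2 (rounded down), plus 1. The small helper below just makes that arithmetic explicit. It assumes all three nodes are master-eligible, which is the Elasticsearch default. With three nodes the result is 2, so the cluster can still elect a master when one node is down, while a single isolated node cannot elect itself and cause a split brain.

```python
def minimum_master_nodes(master_eligible):
    """discovery.zen.minimum_master_nodes = (master-eligible nodes / 2) + 1, rounded down."""
    if master_eligible < 1:
        raise ValueError("a cluster needs at least one master-eligible node")
    return master_eligible // 2 + 1


for n in range(1, 6):
    print(f"{n} master-eligible node(s) -> minimum_master_nodes: {minimum_master_nodes(n)}")
```

Note that a two-node cluster also needs 2, so losing either node blocks master election; this is one reason three nodes are used here.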

Start the Elasticsearch service:

$ sudo chkconfig --add elasticsearch

$ sudo systemctl daemon-reload

$ sudo systemctl enable elasticsearch.service

$ sudo systemctl restart elasticsearch.service
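Once all three nodes are running, it is worth confirming that they formed one cluster before installing Graylog. The sketch below reads GET / (which reports version.number) and GET /_cluster/health (cluster_name, status, number_of_nodes) from one node and lists anything that does not match this setup. It assumes Elasticsearch is reachable without authentication, as in the elasticsearch.yml above; the node URLs are the ones used in this article. A yellow status is accepted because it only means some replica shards are not assigned yet.

```python
import json
import urllib.request

# Node URLs assumed from the network.host / discovery settings in this article.
ES_NODES = ["http://10.2.2.41:9200", "http://10.2.2.42:9200", "http://10.2.2.43:9200"]


def fetch_json(url, timeout=5.0):
    """GET a URL and decode the JSON body (no authentication)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def check_cluster(root_info, health, expected_name="graylog", expected_nodes=3, expected_major="5"):
    """Compare the GET / and GET /_cluster/health responses with what this setup expects."""
    problems = []
    version = root_info.get("version", {}).get("number", "")
    if version.split(".")[0] != expected_major:
        problems.append(f"version {version!r} is not {expected_major}.x")
    if health.get("cluster_name") != expected_name:
        problems.append(f"cluster_name is {health.get('cluster_name')!r}")
    if health.get("number_of_nodes") != expected_nodes:
        problems.append(f"{health.get('number_of_nodes')} nodes joined, expected {expected_nodes}")
    if health.get("status") == "red":
        problems.append("cluster status is red")
    return problems


# Example (needs network access to the cluster):
# base = ES_NODES[1]
# print(check_cluster(fetch_json(base + "/"), fetch_json(base + "/_cluster/health")) or "OK")
```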

④ Installing Graylog

$ sudo rpm -Uvh https://packages.graylog2.org/repo/packages/graylog-2.3-repository_latest.rpm

$ sudo yum install graylog-server

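Edit the Graylog configuration file /etc/graylog/server/server.conf and set password_secret and root_password_sha2 (both are required).

You can generate root_password_sha2 with the command echo -n yourpassword | sha256sum.

Set rest_listen_uri and web_listen_uri to a public IP address or public hostname so that clients can connect to Graylog.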
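The two required values can also be produced without pwgen. This Python sketch generates a 96-character alphanumeric password_secret, comparable to pwgen -N 1 -s 96, and a root_password_sha2 equal to the hex digest that echo -n yourpassword | sha256sum prints (without the trailing "-"). The password "yourpassword" is a placeholder.

```python
import hashlib
import secrets
import string


def password_secret(length=96):
    """Random alphanumeric secret, comparable to `pwgen -N 1 -s 96`."""
    alphabet = string.ascii_letters + string.digits
    return "".join(secrets.choice(alphabet) for _ in range(length))


def root_password_sha2(password):
    """Hex SHA-256 digest, the same value `echo -n password | sha256sum` prints."""
    return hashlib.sha256(password.encode("utf-8")).hexdigest()


print("password_secret =", password_secret())
# "yourpassword" is a placeholder for the admin password you want to use.
print("root_password_sha2 =", root_password_sha2("yourpassword"))
```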

# cat /etc/graylog/server/server.conf

############################

# GRAYLOG CONFIGURATION FILE

############################

#

# This is the Graylog configuration file. The file has to use ISO 8859-1/Latin-1 character encoding.

# Characters that cannot be directly represented in this encoding can be written using Unicode escapes

# as defined in https://docs.oracle.com/javase/specs/jls/se8/html/jls-3.html#jls-3.3, using the \u prefix.

# For example, \u002c.

#

# * Entries are generally expected to be a single line of the form, one of the following:

#

# propertyName=propertyValue

# propertyName:propertyValue

#

# * White space that appears between the property name and property value is ignored,

# so the following are equivalent:

#

# name=Stephen

# name = Stephen

#

# * White space at the beginning of the line is also ignored.

#

# * Lines that start with the comment characters ! or # are ignored. Blank lines are also ignored.

#

# * The property value is generally terminated by the end of the line. White space following the

# property value is not ignored, and is treated as part of the property value.

#

# * A property value can span several lines if each line is terminated by a backslash (‘\’) character.

# For example:

#

# targetCities=\

# Detroit,\

# Chicago,\

# Los Angeles

#

# This is equivalent to targetCities=Detroit,Chicago,Los Angeles (white space at the beginning of lines is ignored).

#

# * The characters newline, carriage return, and tab can be inserted with characters \n, \r, and \t, respectively.

#

# * The backslash character must be escaped as a double backslash. For example:

#

# path=c:\\docs\\doc1

# If you are running more than one instances of Graylog server you have to select one of these

# instances as master. The master will perform some periodical tasks that non-masters won't perform.

is_master = false

# The auto-generated node ID will be stored in this file and read after restarts. It is a good idea

# to use an absolute file path here if you are starting Graylog server from init scripts or similar.

node_id_file = /etc/graylog/server/node-id

# You MUST set a secret to secure/pepper the stored user passwords here. Use at least 64 characters.

# Generate one by using for example: pwgen -N 1 -s 96

password_secret = BjwAAuTEWDQNtAKhUL5lQ3TvW41saWseKpRdTSrecBFifsCJDXak4fudnACBcaMyl0I4yzJDF801Kyasdfsdfasdfasdfasd

# The default root user is named 'admin'

root_username = admin

# You MUST specify a hash password for the root user (which you only need to initially set up the

# system and in case you lose connectivity to your authentication backend)

# This password cannot be changed using the API or via the web interface. If you need to change it,

# modify it in this file.

# Create one by using for example: echo -n yourpassword | shasum -a 256

# and put the resulting hash value into the following line

root_password_sha2 = 926c00b3f65df24b65a9a7b58a989add920c81441dccd2dsfasdfasdf

# The email address of the root user.

# Default is empty

#root_email = ""

# The time zone setting of the root user. See http://www.joda.org/joda-time/timezones.html for a list of valid time zones.

# Default is UTC

root_timezone = Asia/Shanghai

# Set plugin directory here (relative or absolute)

plugin_dir = /usr/share/graylog-server/plugin

# REST API listen URI. Must be reachable by other Graylog server nodes if you run a cluster.

# When using Graylog Collectors, this URI will be used to receive heartbeat messages and must be accessible for all collectors.

rest_listen_uri = http://10.2.2.42:9000/api/

# REST API transport address. Defaults to the value of rest_listen_uri. Exception: If rest_listen_uri

# is set to a wildcard IP address (0.0.0.0) the first non-loopback IPv4 system address is used.

# If set, this will be promoted in the cluster discovery APIs, so other nodes may try to connect on

# this address and it is used to generate URLs addressing entities in the REST API. (see rest_listen_uri)

# You will need to define this, if your Graylog server is running behind a HTTP proxy that is rewriting

# the scheme, host name or URI.

# This must not contain a wildcard address (0.0.0.0).

rest_transport_uri = http://10.2.2.42:9000/api/

# Enable CORS headers for REST API. This is necessary for JS-clients accessing the server directly.

# If these are disabled, modern browsers will not be able to retrieve resources from the server.

# This is enabled by default. Uncomment the next line to disable it.

#rest_enable_cors = false

# Enable GZIP support for REST API. This compresses API responses and therefore helps to reduce

# overall round trip times. This is enabled by default. Uncomment the next line to disable it.

#rest_enable_gzip = false

# Enable HTTPS support for the REST API. This secures the communication with the REST API with

# TLS to prevent request forgery and eavesdropping. This is disabled by default. Uncomment the

# next line to enable it.

#rest_enable_tls = true

# The X.509 certificate chain file in PEM format to use for securing the REST API.

#rest_tls_cert_file = /path/to/graylog.crt

# The PKCS#8 private key file in PEM format to use for securing the REST API.

#rest_tls_key_file = /path/to/graylog.key

# The password to unlock the private key used for securing the REST API.

#rest_tls_key_password = secret

# The maximum size of the HTTP request headers in bytes.

#rest_max_header_size = 8192

# The maximal length of the initial HTTP/1.1 line in bytes.

#rest_max_initial_line_length = 4096

# The size of the thread pool used exclusively for serving the REST API.

#rest_thread_pool_size = 16

# Comma separated list of trusted proxies that are allowed to set the client address with X-Forwarded-For

# header. May be subnets, or hosts.

#trusted_proxies = 127.0.0.1/32, 0:0:0:0:0:0:0:1/128

# Enable the embedded Graylog web interface.

# Default: true

web_enable = true

# Web interface listen URI.

# Configuring a path for the URI here effectively prefixes all URIs in the web interface. This is a replacement

# for the application.context configuration parameter in pre-2.0 versions of the Graylog web interface.

web_listen_uri = http://10.2.2.42:9000/

# Web interface endpoint URI. This setting can be overriden on a per-request basis with the X-Graylog-Server-URL header.

# Default: $rest_transport_uri

web_endpoint_uri = http://42.111.111.111:9000/api

# Enable CORS headers for the web interface. This is necessary for JS-clients accessing the server directly.

# If these are disabled, modern browsers will not be able to retrieve resources from the server.

web_enable_cors = true

# Enable/disable GZIP support for the web interface. This compresses HTTP responses and therefore helps to reduce

# overall round trip times. This is enabled by default. Uncomment the next line to disable it.

#web_enable_gzip = false

# Enable HTTPS support for the web interface. This secures the communication of the web browser with the web interface

# using TLS to prevent request forgery and eavesdropping.

# This is disabled by default. Uncomment the next line to enable it and see the other related configuration settings.

#web_enable_tls = true

# The X.509 certificate chain file in PEM format to use for securing the web interface.

#web_tls_cert_file = /path/to/graylog-web.crt

# The PKCS#8 private key file in PEM format to use for securing the web interface.

#web_tls_key_file = /path/to/graylog-web.key

# The password to unlock the private key used for securing the web interface.

#web_tls_key_password = secret

# The maximum size of the HTTP request headers in bytes.

#web_max_header_size = 8192

# The maximal length of the initial HTTP/1.1 line in bytes.

#web_max_initial_line_length = 4096

# The size of the thread pool used exclusively for serving the web interface.

#web_thread_pool_size = 16

# List of Elasticsearch hosts Graylog should connect to.

# Need to be specified as a comma-separated list of valid URIs for the http ports of your elasticsearch nodes.

# If one or more of your elasticsearch hosts require authentication, include the credentials in each node URI that

# requires authentication.

#

# Default: http://127.0.0.1:9200

elasticsearch_hosts = http://grayloguser:3KKLg8294CE0@10.2.2.41:9200,http://grayloguser:3KKLg8294CE0@10.2.2.42:9200,http://grayloguser:3KKLg8294CE0@10.2.2.43:9200

# Maximum amount of time to wait for successfull connection to Elasticsearch HTTP port.

#

# Default: 10 Seconds

#elasticsearch_connect_timeout = 10s

# Maximum amount of time to wait for reading back a response from an Elasticsearch server.

#

# Default: 60 seconds

#elasticsearch_socket_timeout = 60s

# Maximum idle time for an Elasticsearch connection. If this is exceeded, this connection will

# be tore down.

#

# Default: inf

#elasticsearch_idle_timeout = -1s

# Maximum number of total connections to Elasticsearch.

#

# Default: 20

#elasticsearch_max_total_connections = 20

# Maximum number of total connections per Elasticsearch route (normally this means per

# elasticsearch server).

#

# Default: 2

#elasticsearch_max_total_connections_per_route = 2

# Maximum number of times Graylog will retry failed requests to Elasticsearch.

#

# Default: 2

#elasticsearch_max_retries = 2

# Enable automatic Elasticsearch node discovery through Nodes Info,

# see https://www.elastic.co/guide/en/elasticsearch/reference/5.4/cluster-nodes-info.html

#

# WARNING: Automatic node discovery does not work if Elasticsearch requires authentication, e. g. with Shield.

#

# Default: false

#elasticsearch_discovery_enabled = true

# Filter for including/excluding Elasticsearch nodes in discovery according to their custom attributes,

# see https://www.elastic.co/guide/en/elasticsearch/reference/5.4/cluster.html#cluster-nodes

#

# Default: empty

#elasticsearch_discovery_filter = rack:42

# Frequency of the Elasticsearch node discovery.

#

# Default: 30s

# elasticsearch_discovery_frequency = 30s

# Enable payload compression for Elasticsearch requests.

#

# Default: false

#elasticsearch_compression_enabled = true

# Graylog will use multiple indices to store documents in. You can configured the strategy it uses to determine

# when to rotate the currently active write index.

# It supports multiple rotation strategies:

# - "count" of messages per index, use elasticsearch_max_docs_per_index below to configure

# - "size" per index, use elasticsearch_max_size_per_index below to configure

# valid values are "count", "size" and "time", default is "count"

#

# ATTENTION: These settings have been moved to the database in 2.0. When you upgrade, make sure to set these

# to your previous 1.x settings so they will be migrated to the database!

rotation_strategy = count

# (Approximate) maximum number of documents in an Elasticsearch index before a new index

# is being created, also see no_retention and elasticsearch_max_number_of_indices.

# Configure this if you used 'rotation_strategy = count' above.

#

# ATTENTION: These settings have been moved to the database in 2.0. When you upgrade, make sure to set these

# to your previous 1.x settings so they will be migrated to the database!

elasticsearch_max_docs_per_index =

# (Approximate) maximum size in bytes per Elasticsearch index on disk before a new index is being created, also see

# no_retention and elasticsearch_max_number_of_indices. Default is 1GB.

# Configure this if you used 'rotation_strategy = size' above.

#

# ATTENTION: These settings have been moved to the database in 2.0. When you upgrade, make sure to set these

# to your previous 1.x settings so they will be migrated to the database!

#elasticsearch_max_size_per_index = 1073741824

# (Approximate) maximum time before a new Elasticsearch index is being created, also see

# no_retention and elasticsearch_max_number_of_indices. Default is 1 day.

# Configure this if you used 'rotation_strategy = time' above.

# Please note that this rotation period does not look at the time specified in the received messages, but is

# using the real clock value to decide when to rotate the index!

# Specify the time using a duration and a suffix indicating which unit you want:

# 1w = 1 week

# 1d = 1 day

# 12h = 12 hours

# Permitted suffixes are: d for day, h for hour, m for minute, s for second.

#

# ATTENTION: These settings have been moved to the database in 2.0. When you upgrade, make sure to set these

# to your previous 1.x settings so they will be migrated to the database!

#elasticsearch_max_time_per_index = 1d

# Disable checking the version of Elasticsearch for being compatible with this Graylog release.

# WARNING: Using Graylog with unsupported and untested versions of Elasticsearch may lead to data loss!

#elasticsearch_disable_version_check = true

# Disable message retention on this node, i. e. disable Elasticsearch index rotation.

#no_retention = false

# How many indices do you want to keep?

#

# ATTENTION: These settings have been moved to the database in 2.0. When you upgrade, make sure to set these

# to your previous 1.x settings so they will be migrated to the database!

elasticsearch_max_number_of_indices =

# Decide what happens with the oldest indices when the maximum number of indices is reached.

# The following strategies are availble:

# - delete # Deletes the index completely (Default)

# - close # Closes the index and hides it from the system. Can be re-opened later.

#

# ATTENTION: These settings have been moved to the database in 2.0. When you upgrade, make sure to set these

# to your previous 1.x settings so they will be migrated to the database!

retention_strategy = delete

# How many Elasticsearch shards and replicas should be used per index? Note that this only applies to newly created indices.

# ATTENTION: These settings have been moved to the database in Graylog 2.2.0. When you upgrade, make sure to set these

# to your previous settings so they will be migrated to the database!

elasticsearch_shards =

elasticsearch_replicas =

# Prefix for all Elasticsearch indices and index aliases managed by Graylog.

#

# ATTENTION: These settings have been moved to the database in Graylog 2.2.0. When you upgrade, make sure to set these

# to your previous settings so they will be migrated to the database!

elasticsearch_index_prefix = graylog

# Name of the Elasticsearch index template used by Graylog to apply the mandatory index mapping.

# Default: graylog-internal

#

# ATTENTION: These settings have been moved to the database in Graylog 2.2.0. When you upgrade, make sure to set these

# to your previous settings so they will be migrated to the database!

#elasticsearch_template_name = graylog-internal

# Do you want to allow searches with leading wildcards? This can be extremely resource hungry and should only

# be enabled with care. See also: http://docs.graylog.org/en/2.1/pages/queries.html

allow_leading_wildcard_searches = false

# Do you want to allow searches to be highlighted? Depending on the size of your messages this can be memory hungry and

# should only be enabled after making sure your Elasticsearch cluster has enough memory.

allow_highlighting = true

# Analyzer (tokenizer) to use for message and full_message field. The "standard" filter usually is a good idea.

# All supported analyzers are: standard, simple, whitespace, stop, keyword, pattern, language, snowball, custom

# Elasticsearch documentation: https://www.elastic.co/guide/en/elasticsearch/reference/2.3/analysis.html

# Note that this setting only takes effect on newly created indices.

#

# ATTENTION: These settings have been moved to the database in Graylog 2.2.0. When you upgrade, make sure to set these

# to your previous settings so they will be migrated to the database!

elasticsearch_analyzer = standard

# Global request timeout for Elasticsearch requests (e. g. during search, index creation, or index time-range

# calculations) based on a best-effort to restrict the runtime of Elasticsearch operations.

# Default: 1m

#elasticsearch_request_timeout = 1m

# Global timeout for index optimization (force merge) requests.

# Default: 1h

#elasticsearch_index_optimization_timeout = 1h

# Maximum number of concurrently running index optimization (force merge) jobs.

# If you are using lots of different index sets, you might want to increase that number.

# Default: 20

#elasticsearch_index_optimization_jobs = 20

# Time interval for index range information cleanups. This setting defines how often stale index range information

# is being purged from the database.

# Default: 1h

#index_ranges_cleanup_interval = 1h

# Batch size for the Elasticsearch output. This is the maximum (!) number of messages the Elasticsearch output

# module will get at once and write to Elasticsearch in a batch call. If the configured batch size has not been

# reached within output_flush_interval seconds, everything that is available will be flushed at once. Remember

# that every outputbuffer processor manages its own batch and performs its own batch write calls.

# ("outputbuffer_processors" variable)

output_batch_size =

# Flush interval (in seconds) for the Elasticsearch output. This is the maximum amount of time between two

# batches of messages written to Elasticsearch. It is only effective at all if your minimum number of messages

# for this time period is less than output_batch_size * outputbuffer_processors.

output_flush_interval =

# As stream outputs are loaded only on demand, an output which is failing to initialize will be tried over and

# over again. To prevent this, the following configuration options define after how many faults an output will

# not be tried again for an also configurable amount of seconds.

output_fault_count_threshold =

output_fault_penalty_seconds =

# The number of parallel running processors.

# Raise this number if your buffers are filling up.

processbuffer_processors =

outputbuffer_processors =

#outputbuffer_processor_keep_alive_time = 5000

#outputbuffer_processor_threads_core_pool_size = 3

#outputbuffer_processor_threads_max_pool_size = 30

# UDP receive buffer size for all message inputs (e. g. SyslogUDPInput).

#udp_recvbuffer_sizes = 1048576

# Wait strategy describing how buffer processors wait on a cursor sequence. (default: sleeping)

# Possible types:

# - yielding

# Compromise between performance and CPU usage.

# - sleeping

# Compromise between performance and CPU usage. Latency spikes can occur after quiet periods.

# - blocking

# High throughput, low latency, higher CPU usage.

# - busy_spinning

# Avoids syscalls which could introduce latency jitter. Best when threads can be bound to specific CPU cores.

processor_wait_strategy = blocking

# Size of internal ring buffers. Raise this if raising outputbuffer_processors does not help anymore.

# For optimum performance your LogMessage objects in the ring buffer should fit in your CPU L3 cache.

# Must be a power of 2. (512, 1024, 2048, ...)

ring_size =

inputbuffer_ring_size =

inputbuffer_processors =

inputbuffer_wait_strategy = blocking

# Enable the disk based message journal.

message_journal_enabled = true

# The directory which will be used to store the message journal. The directory must me exclusively used by Graylog and

# must not contain any other files than the ones created by Graylog itself.

#

# ATTENTION:

# If you create a seperate partition for the journal files and use a file system creating directories like 'lost+found'

# in the root directory, you need to create a sub directory for your journal.

# Otherwise Graylog will log an error message that the journal is corrupt and Graylog will not start.

message_journal_dir = /var/lib/graylog-server/journal

# Journal hold messages before they could be written to Elasticsearch.

# For a maximum of 12 hours or 5 GB whichever happens first.

# During normal operation the journal will be smaller.

#message_journal_max_age = 12h

#message_journal_max_size = 5gb

#message_journal_flush_age = 1m

#message_journal_flush_interval = 1000000

#message_journal_segment_age = 1h

#message_journal_segment_size = 100mb

# Number of threads used exclusively for dispatching internal events. Default is 2.

#async_eventbus_processors = 2

# How many seconds to wait between marking node as DEAD for possible load balancers and starting the actual

# shutdown process. Set to 0 if you have no status checking load balancers in front.

lb_recognition_period_seconds =

# Journal usage percentage that triggers requesting throttling for this server node from load balancers. The feature is

# disabled if not set.

#lb_throttle_threshold_percentage = 95

# Every message is matched against the configured streams and it can happen that a stream contains rules which

# take an unusual amount of time to run, for example if its using regular expressions that perform excessive backtracking.

# This will impact the processing of the entire server. To keep such misbehaving stream rules from impacting other

# streams, Graylog limits the execution time for each stream.

# The default values are noted below, the timeout is in milliseconds.

# If the stream matching for one stream took longer than the timeout value, and this happened more than "max_faults" times

# that stream is disabled and a notification is shown in the web interface.

#stream_processing_timeout = 2000

#stream_processing_max_faults = 3

# Length of the interval in seconds in which the alert conditions for all streams should be checked

# and alarms are being sent.

#alert_check_interval = 60

# Since 0.21 the Graylog server supports pluggable output modules. This means a single message can be written to multiple

# outputs. The next setting defines the timeout for a single output module, including the default output module where all

# messages end up.

#

# Time in milliseconds to wait for all message outputs to finish writing a single message.

#output_module_timeout = 10000

# Time in milliseconds after which a detected stale master node is being rechecked on startup.

#stale_master_timeout = 2000

# Time in milliseconds which Graylog is waiting for all threads to stop on shutdown.

#shutdown_timeout = 30000

# MongoDB connection string

# See https://docs.mongodb.com/manual/reference/connection-string/ for details

mongodb_uri = mongodb://graylog:75PN76Db66En@10.2.2.41:27017,10.2.2.42:27017,10.2.2.43:27017/graylog?replicaSet=rs0

# Authenticate against the MongoDB server

#mongodb_uri = mongodb://grayloguser:secret@localhost:27017/graylog

# Use a replica set instead of a single host

#mongodb_uri = mongodb://grayloguser:secret@localhost:27017,localhost:27018,localhost:27019/graylog

# Increase this value according to the maximum connections your MongoDB server can handle from a single client

# if you encounter MongoDB connection problems.

mongodb_max_connections =

# Number of threads allowed to be blocked by MongoDB connections multiplier. Default: 5

# If mongodb_max_connections is 100, and mongodb_threads_allowed_to_block_multiplier is 5,

# then 500 threads can block. More than that and an exception will be thrown.

# http://api.mongodb.com/java/current/com/mongodb/MongoOptions.html#threadsAllowedToBlockForConnectionMultiplier

mongodb_threads_allowed_to_block_multiplier =

# Drools Rule File (Use to rewrite incoming log messages)

# See: http://docs.graylog.org/en/2.1/pages/drools.html

#rules_file = /etc/graylog/server/rules.drl

# Email transport

#transport_email_enabled = false

#transport_email_hostname = mail.example.com

#transport_email_port = 587

#transport_email_use_auth = true

#transport_email_use_tls = true

#transport_email_use_ssl = true

#transport_email_auth_username = you@example.com

#transport_email_auth_password = secret

#transport_email_subject_prefix = [graylog]

#transport_email_from_email = graylog@example.com

# Specify and uncomment this if you want to include links to the stream in your stream alert mails.

# This should define the fully qualified base url to your web interface exactly the same way as it is accessed by your users.

#transport_email_web_interface_url = https://graylog.example.com

# The default connect timeout for outgoing HTTP connections.

# Values must be a positive duration (and between 1 and 2147483647 when converted to milliseconds).

# Default: 5s

#http_connect_timeout = 5s

# The default read timeout for outgoing HTTP connections.

# Values must be a positive duration (and between 1 and 2147483647 when converted to milliseconds).

# Default: 10s

#http_read_timeout = 10s

# The default write timeout for outgoing HTTP connections.

# Values must be a positive duration (and between 1 and 2147483647 when converted to milliseconds).

# Default: 10s

#http_write_timeout = 10s

# HTTP proxy for outgoing HTTP connections

#http_proxy_uri =

# Disable the optimization of Elasticsearch indices after index cycling. This may take some load from Elasticsearch

# on heavily used systems with large indices, but it will decrease search performance. The default is to optimize

# cycled indices.

#

# ATTENTION: These settings have been moved to the database in Graylog 2.2.0. When you upgrade, make sure to set these

# to your previous settings so they will be migrated to the database!

#disable_index_optimization = true

# Optimize the index down to <= index_optimization_max_num_segments. A higher number may take some load from Elasticsearch

# on heavily used systems with large indices, but it will decrease search performance. The default is 1.

#

# ATTENTION: These settings have been moved to the database in Graylog 2.2.0. When you upgrade, make sure to set these

# to your previous settings so they will be migrated to the database!

#index_optimization_max_num_segments = 1

# The threshold of the garbage collection runs. If GC runs take longer than this threshold, a system notification

# will be generated to warn the administrator about possible problems with the system. Default is 1 second.

#gc_warning_threshold = 1s

# Connection timeout for a configured LDAP server (e. g. ActiveDirectory) in milliseconds.

#ldap_connection_timeout = 2000

# Disable the use of SIGAR for collecting system stats

#disable_sigar = false

# The default cache time for dashboard widgets. (Default: 10 seconds, minimum: 1 second)

#dashboard_widget_default_cache_time = 10s

# Automatically load content packs in "content_packs_dir" on the first start of Graylog.

#content_packs_loader_enabled = true

# The directory which contains content packs which should be loaded on the first start of Graylog.

content_packs_dir = /usr/share/graylog-server/contentpacks

# A comma-separated list of content packs (files in "content_packs_dir") which should be applied on

# the first start of Graylog.

# Default: empty

content_packs_auto_load = grok-patterns.json

# For some cluster-related REST requests, the node must query all other nodes in the cluster. This is the maximum number

# of threads available for this. Increase it, if '/cluster/*' requests take long to complete.

# Should be rest_thread_pool_size * average_cluster_size if you have a high number of concurrent users.

proxied_requests_thread_pool_size =

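The last comment in this file gives a sizing rule for proxied_requests_thread_pool_size: rest_thread_pool_size multiplied by the average cluster size. A trivial sketch of that arithmetic; the helper name is hypothetical, and the inputs (16 REST threads, 3 Graylog nodes as in this article's cluster) are illustrative assumptions, so check rest_thread_pool_size in your own server.conf.

```javascript
// Sizing rule from the server.conf comment:
//   proxied_requests_thread_pool_size = rest_thread_pool_size * average_cluster_size
// The comment suggests this when many users run concurrent '/cluster/*' requests.
function proxiedRequestsThreadPoolSize(restThreadPoolSize, averageClusterSize) {
  if (!Number.isInteger(restThreadPoolSize) || restThreadPoolSize < 1) {
    throw new RangeError("restThreadPoolSize must be a positive integer");
  }
  if (!Number.isInteger(averageClusterSize) || averageClusterSize < 1) {
    throw new RangeError("averageClusterSize must be a positive integer");
  }
  return restThreadPoolSize * averageClusterSize;
}

// Hypothetical inputs: 16 REST threads per node, 3 Graylog nodes.
console.log(`proxied_requests_thread_pool_size = ${proxiedRequestsThreadPoolSize(16, 3)}`);
// prints "proxied_requests_thread_pool_size = 48"
```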

Start the Graylog service:

$ sudo chkconfig --add graylog-server

$ sudo systemctl daemon-reload

$ sudo systemctl enable graylog-server.service

$ sudo systemctl start graylog-server.service

4. Multi-node cluster configuration

① MongoDB cluster configuration:

On every MongoDB node, edit /etc/mongod.conf, add the replication setting replSetName: rs0, and restart the service.

# cat /etc/mongod.conf
# mongod.conf

# for documentation of all options, see:
#   http://docs.mongodb.org/manual/reference/configuration-options/

# where to write logging data.
systemLog:
  destination: file
  logAppend: true
  path: /var/log/mongodb/mongod.log

# Where and how to store data.
storage:
  dbPath: /var/lib/mongo
  journal:
    enabled: true
#  engine:
#  mmapv1:
#  wiredTiger:

# how the process runs
processManagement:
  fork: true  # fork and run in background
  pidFilePath: /var/run/mongodb/mongod.pid  # location of pidfile

# network interfaces
net:
  port: 27017
#  bindIp: 127.0.0.1  # Listen to local interface only, comment to listen on all interfaces.

#security:

#operationProfiling:

replication:
  replSetName: rs0

#sharding:

## Enterprise-Only Options

#auditLog:

#snmp:


$ sudo systemctl restart mongod.service

On one of the cluster nodes, open the mongo shell:

$ mongo

Initialize the replica set, using this host's hostname or IP plus the port:

> rs.initiate( {
   _id : "rs0",
   members: [ { _id : 0, host : "mongodb0.example.net:27017" } ]
})
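Because the mongo shell speaks JavaScript, the document passed to rs.initiate() can also be generated. A minimal sketch, assuming the three example hostnames used in this article and MongoDB's default port 27017; replicaSetConfig is a hypothetical helper. Initiating with all three members at once is an alternative to initiating with one member and calling rs.add() afterwards, as this article does.

```javascript
// Build the document rs.initiate() expects: { _id, members: [{ _id, host }] }.
// Member _id values only need to be unique integers; 0, 1, 2... is the usual choice.
function replicaSetConfig(setName, hosts, defaultPort = 27017) {
  if (hosts.length === 0) {
    throw new Error("a replica set needs at least one member");
  }
  return {
    _id: setName, // must match replSetName in /etc/mongod.conf
    members: hosts.map((host, i) => ({
      _id: i,
      // Append the port only when the host string has none (IPv6 literals are not handled).
      host: host.includes(":") ? host : `${host}:${defaultPort}`,
    })),
  };
}
```

In a mongo shell whose engine supports ES2015 syntax, `rs.initiate(replicaSetConfig("rs0", ["mongodb0.example.net", "mongodb1.example.net", "mongodb2.example.net"]))` would create the whole set in one step.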

Verify the replica set configuration:

rs0:PRIMARY> rs.conf()
{
   "_id" : "rs0",
   "version" : 1,
   "protocolVersion" : NumberLong(1),
   "members" : [
      {
         "_id" : 0,
         "host" : "mongodb0.example.net:27017",
         "arbiterOnly" : false,
         "buildIndexes" : true,
         "hidden" : false,
         "priority" : 1,
         "tags" : {

         },
         "slaveDelay" : NumberLong(0),
         "votes" : 1
      }
   ],
   "settings" : {
      "chainingAllowed" : true,
      "heartbeatIntervalMillis" : 2000,
      "heartbeatTimeoutSecs" : 10,
      "electionTimeoutMillis" : 10000,
      "catchUpTimeoutMillis" : 2000,
      "getLastErrorModes" : {

      },
      "getLastErrorDefaults" : {
         "w" : 1,
         "wtimeout" : 0
      },
      "replicaSetId" : ObjectId("585ab9df685f726db2c6a840")
   }
}


Add the other nodes to the replica set and check its status:

rs0:PRIMARY> rs.add("mongodb1.example.net")

rs0:PRIMARY> rs.add("mongodb2.example.net")

rs0:PRIMARY> rs.status()
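After the rs.add() calls, rs.status() should report one PRIMARY and the other members healthy. A small sketch of that check as a plain function (checkReplicaSet is a hypothetical name) that takes the document rs.status() returns; it assumes only the members[].name, health and stateStr fields of that output.

```javascript
// Summarise an rs.status() document: exactly one PRIMARY, every member with health 1.
function checkReplicaSet(status) {
  const problems = [];
  const primaries = status.members.filter((m) => m.stateStr === "PRIMARY");
  if (primaries.length !== 1) {
    problems.push(`expected 1 PRIMARY, found ${primaries.length}`);
  }
  for (const m of status.members) {
    if (m.health !== 1) {
      problems.push(`${m.name} is unhealthy (stateStr=${m.stateStr})`);
    }
  }
  return {
    ok: problems.length === 0,
    primary: primaries.length === 1 ? primaries[0].name : null,
    problems,
  };
}
```

Pasted into the mongo shell, `printjson(checkReplicaSet(rs.status()))` should print the summary.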

Create the graylog database and a graylog user with the readWrite and dbAdmin roles:

rs0:PRIMARY> use graylog

switched to db graylog

rs0:PRIMARY> db.createUser( {

user: "graylog",

pwd: "75PN76Db66En",

roles: [ { role: "readWrite", db: "graylog" } ]

});

rs0:PRIMARY> db.grantRolesToUser( "graylog" , [ { role: "dbAdmin", db: "graylog" } ])

rs0:PRIMARY> show users

rs0:PRIMARY> db.auth("graylog","75PN76Db66En")

② Elasticsearch cluster configuration:

Edit the Elasticsearch configuration file on each node and restart the service (the example below is from node 10.2.2.42):

# cat /etc/elasticsearch/elasticsearch.yml | grep cluster.name

cluster.name: graylog

# cat /etc/elasticsearch/elasticsearch.yml | grep discovery.zen.ping

discovery.zen.ping.unicast.hosts: ["10.2.2.41", "10.2.2.43"]

# cat /etc/elasticsearch/elasticsearch.yml | grep network.host

network.host: 10.2.2.42
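Each node's settings differ only in network.host and in which peers it lists, so they are easy to generate. A sketch that reproduces the 10.2.2.42 settings above from the node list; esClusterSettings is a hypothetical name, and the output covers only these three keys, not a complete elasticsearch.yml.

```javascript
// Render the cluster-related lines of elasticsearch.yml for one node:
// all nodes share cluster.name, each binds to its own IP and lists the other nodes for unicast discovery.
function esClusterSettings(clusterName, allNodes, thisNode) {
  if (!allNodes.includes(thisNode)) {
    throw new Error(`${thisNode} is not in the node list`);
  }
  const peers = allNodes.filter((ip) => ip !== thisNode);
  return [
    `cluster.name: ${clusterName}`,
    `network.host: ${thisNode}`,
    `discovery.zen.ping.unicast.hosts: [${peers.map((ip) => `"${ip}"`).join(", ")}]`,
  ].join("\n");
}
```

Calling it once per IP in `["10.2.2.41", "10.2.2.42", "10.2.2.43"]` yields the three per-node variants.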

③ Graylog cluster configuration

On the Graylog master node, set is_master = true in server.conf; set it to false on every other node. In addition, rest_listen_uri and rest_transport_uri must be reachable from all other nodes in the cluster.
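The single-master rule is easy to break when server.conf is copied between nodes. A small sketch that reads is_master from each node's server.conf text and checks that exactly one node is the master; the function names and the { name, isMaster } record shape are hypothetical, and the parser only understands a plain `is_master = true|false` line.

```javascript
// Read is_master from server.conf text; commented-out lines ("#is_master = ...") are ignored.
// Returns the first uncommented value as true/false, or null when the key is not set explicitly.
function readIsMaster(serverConf) {
  const m = /^\s*is_master\s*=\s*(true|false)\s*$/m.exec(serverConf);
  return m ? m[1] === "true" : null;
}

// Enforce the cluster rule: exactly one node with is_master = true. Returns that node's name.
function checkSingleMaster(nodes) {
  const masters = nodes.filter((n) => n.isMaster === true).map((n) => n.name);
  if (masters.length !== 1) {
    throw new Error(`expected exactly one master, found ${masters.length}: [${masters.join(", ")}]`);
  }
  return masters[0];
}
```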

Configure the MongoDB connection:

# cat /etc/graylog/server/server.conf|grep mongodb_uri

mongodb_uri = mongodb://graylog:75PN76Db66En@10.2.2.41:27017,10.2.2.42:27017,10.2.2.43:27017/graylog?replicaSet=rs0
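If the MongoDB password contains characters such as @, : or /, they must be percent-encoded in mongodb_uri. A sketch that assembles the URI used above; graylogMongoUri is a hypothetical helper, and any password passed to it here is a placeholder.

```javascript
// Build Graylog's mongodb_uri for a replica set.
// encodeURIComponent escapes '@', ':' and '/' in the credentials, which would otherwise break the URI.
function graylogMongoUri({ user, password, hosts, database, replicaSet, port = 27017 }) {
  const auth = `${encodeURIComponent(user)}:${encodeURIComponent(password)}@`;
  const hostList = hosts.map((h) => `${h}:${port}`).join(",");
  return `mongodb://${auth}${hostList}/${database}?replicaSet=${encodeURIComponent(replicaSet)}`;
}
```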

Configure the Elasticsearch connection:

# cat /etc/graylog/server/server.conf|grep elasticsearch_hosts

elasticsearch_hosts = http://grayloguser:3KKLg8sdf340@10.2.2.41:9200,http://grayloguser:3KKLg8sdf340@10.2.2.42:9200,http://grayloguser:3KKLg8sdf340@10.2.2.43:9200

Enable the web interface:

# cat /etc/graylog/server/server.conf|grep web_enable

web_enable = true

④ Create a load balancer in front of the Graylog nodes. I used an Azure load balancer, so I won't cover the details here.

Graylog is now reachable at <load balancer IP>:9000.
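The load balancer also needs a health probe for each Graylog node. Graylog documents a load balancer status endpoint, system/lbstatus under the REST API URI, that answers ALIVE (HTTP 200) or DEAD (HTTP 503); the sketch below assumes that behaviour and the Graylog 2.3 default rest_listen_uri layout (http://<node>:9000/api/). The function names are hypothetical, and probe() needs Node.js 18+ for the global fetch().

```javascript
// URL of the load balancer status endpoint for a node, given its rest_listen_uri
// (with or without a trailing slash).
function lbStatusUrl(restListenUri) {
  return restListenUri.replace(/\/?$/, "/") + "system/lbstatus";
}

// A node should receive traffic only when it answers HTTP 200 with the body "ALIVE".
function isNodeAlive(httpStatus, body) {
  return httpStatus === 200 && body.trim().toUpperCase() === "ALIVE";
}

// Never throws: network errors count as "not alive".
async function probe(restListenUri) {
  try {
    const res = await fetch(lbStatusUrl(restListenUri));
    return isNodeAlive(res.status, await res.text());
  } catch {
    return false;
  }
}
```

Pointing the load balancer's own HTTP probe at /api/system/lbstatus on port 9000 should have the same effect without any script, since a DEAD node answers with a non-200 status.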

5. Ingesting logs

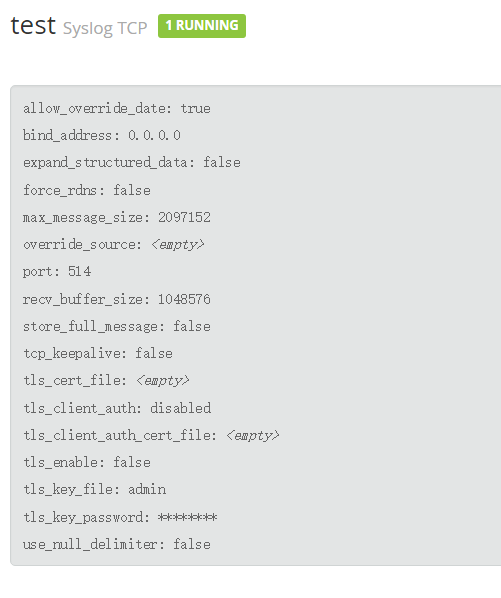

Ingesting syslog

First, create an input in the web UI:

Using rsyslog as an example:

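Add the following line to /etc/rsyslog.d/graylog.conf, where <port> is the port of the syslog input (@@ forwards over TCP; a single @ would use UDP):

*.* @@x.x.x.x:<port>;RSYSLOG_SyslogProtocol23Format

Then restart rsyslog:

$ sudo service rsyslog restart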

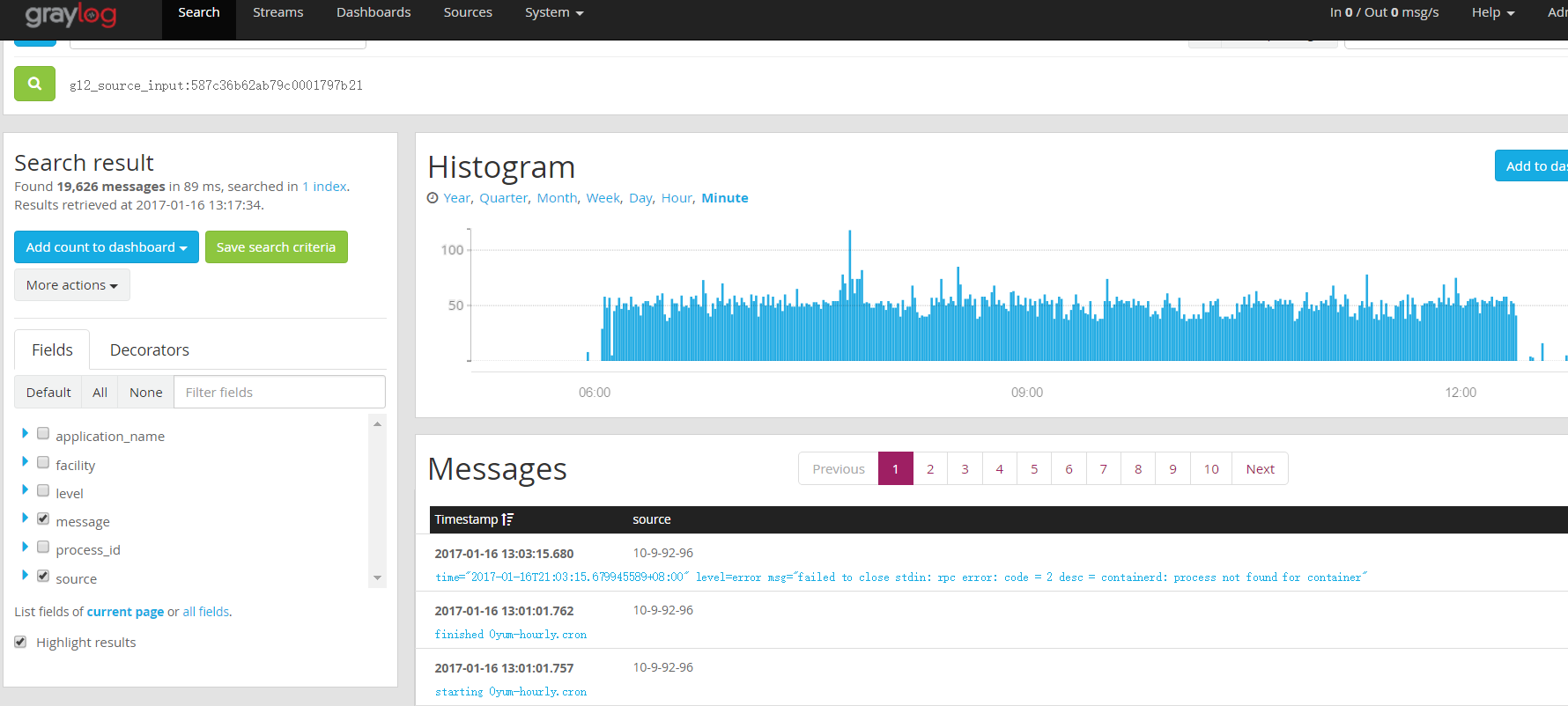

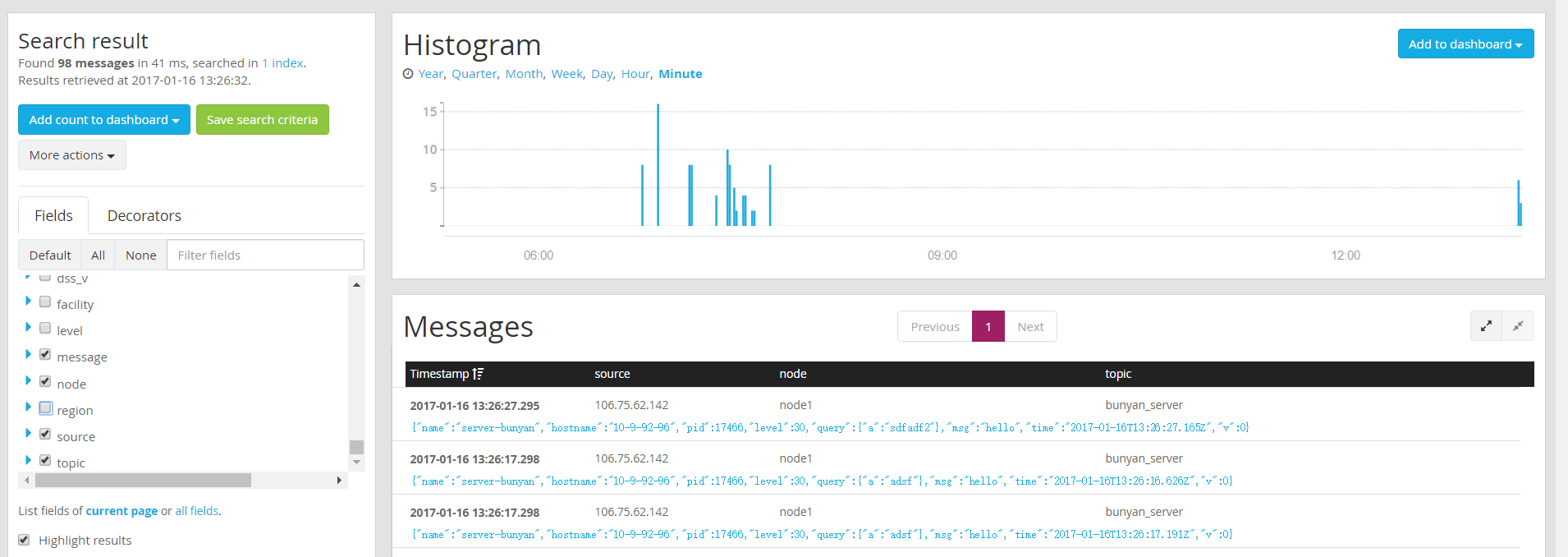

You can then view the messages received by this input:

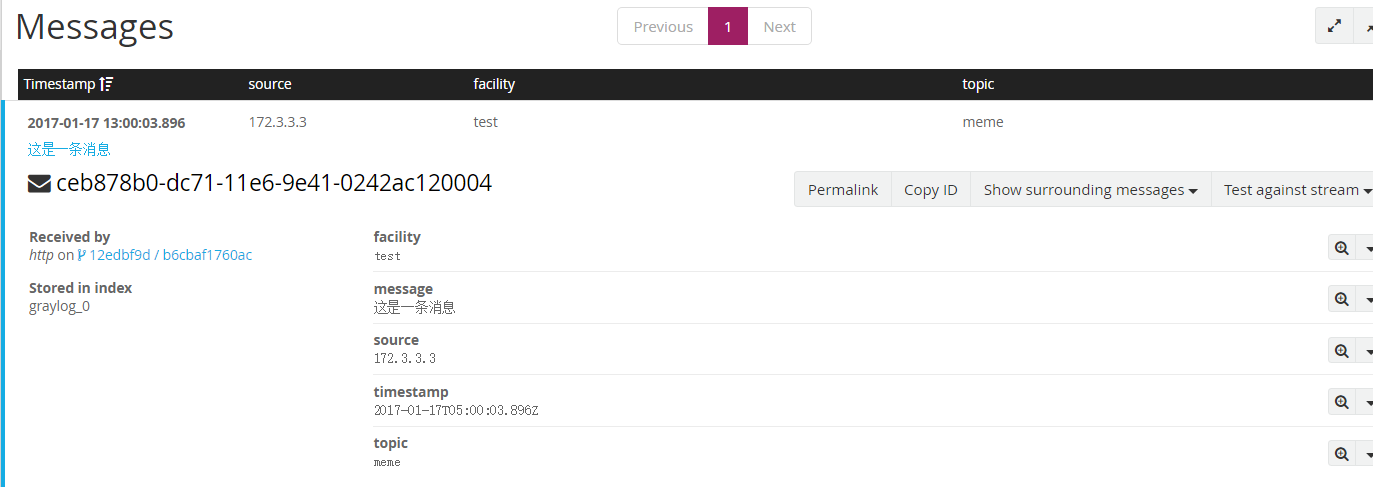
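RSYSLOG_SyslogProtocol23Format makes rsyslog send RFC 5424-style lines, which the Graylog syslog input parses into fields. A sketch of that layout, useful for seeing what the input receives or for a quick manual test; syslog5424Line is a hypothetical helper and any field values passed to it are made up. rsyslog itself may write local time with a UTC offset where this sketch uses UTC.

```javascript
// RFC 5424 severities (numeric codes 0..7).
const SEVERITY = { emerg: 0, alert: 1, crit: 2, err: 3, warning: 4, notice: 5, info: 6, debug: 7 };

// One line in the layout of rsyslog's RSYSLOG_SyslogProtocol23Format template:
//   <PRI>1 TIMESTAMP HOSTNAME APP-NAME PROCID MSGID STRUCTURED-DATA MSG
// PRI = facility * 8 + severity; "-" is the RFC 5424 NILVALUE for absent fields.
// The trailing "\n" matches newline-delimited framing, rsyslog's default for plain TCP.
function syslog5424Line({ facility, severity, timestamp, hostname, appName, procId, msgId, message }) {
  const sev = SEVERITY[severity];
  if (sev === undefined) throw new Error(`unknown severity: ${severity}`);
  if (!Number.isInteger(facility) || facility < 0 || facility > 23) {
    throw new RangeError("facility must be an integer from 0 to 23");
  }
  const nil = (v) => (v === undefined || v === null || v === "" ? "-" : String(v));
  // toISOString() yields UTC ("...Z"), a valid RFC 3339 timestamp.
  return `<${facility * 8 + sev}>1 ${timestamp.toISOString()} ${nil(hostname)} ${nil(appName)} ${nil(procId)} ${nil(msgId)} - ${message}\n`;
}
```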

GELF (HTTP as an example)

GELF (Graylog Extended Log Format) carries structured events and supports compression (GZIP or ZLIB) and chunking (chunking applies to GELF over UDP).

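GELF message fields:

- version: string (required)

- host: string (required)

- short_message: string (required)

- full_message: string

- timestamp: number (seconds since the UNIX epoch; decimals carry milliseconds)

- level: number (syslog severity)

- facility: string (deprecated in GELF 1.1)

- line: number (deprecated in GELF 1.1)

- file: string (deprecated in GELF 1.1)

- _[additional field]: string or number; custom fields are added with the _ prefix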
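A sketch of assembling a payload that follows the field rules above (GELF 1.1): the three required fields, a seconds-based timestamp, and additional fields prefixed with _. gelfMessage and its options object are hypothetical; the field-name pattern and the ban on _id come from the GELF 1.1 specification.

```javascript
// Allowed additional-field names per GELF 1.1: letters, digits, underscore, dash and dot.
const FIELD_NAME = /^[\w.\-]+$/;

// Build a GELF 1.1 payload. `timestamp` is taken in milliseconds (like Date.now()).
function gelfMessage(host, shortMessage, { fullMessage, level, timestamp, fields = {} } = {}) {
  if (!host || !shortMessage) throw new Error("host and short_message are required");
  const msg = { version: "1.1", host, short_message: shortMessage };
  if (fullMessage !== undefined) msg.full_message = fullMessage;
  if (level !== undefined) msg.level = level; // syslog severity, 0 (emerg) .. 7 (debug)
  // GELF timestamps are seconds since the epoch; decimals carry milliseconds.
  msg.timestamp = (timestamp ?? Date.now()) / 1000;
  for (const [key, value] of Object.entries(fields)) {
    const name = key.startsWith("_") ? key : `_${key}`; // custom fields get the "_" prefix
    if (name === "_id") throw new Error("_id is reserved and must not be sent");
    if (!FIELD_NAME.test(name)) throw new Error(`invalid field name: ${name}`);
    if (typeof value !== "string" && typeof value !== "number") {
      throw new TypeError(`${name} must be a string or number`);
    }
    msg[name] = value;
  }
  return msg;
}
```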

Create a new GELF HTTP input:

Push a log message:

curl -XPOST http://106.75.62.142:12201/gelf -H 'Content-Type: application/json' -d '{"version":"1.1", "host":"172.3.3.3", "short_message":"This is a message", "facility":"test", "_topic":"meme"}'
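The same push from Node.js, using only the built-in http module. A sketch: sendGelf and gelfRequestOptions are hypothetical helper names, the URL is the one from the curl example, and only plain http:// URLs are handled. Content-Length is computed with Buffer.byteLength because it counts bytes, which matters for non-ASCII text such as Chinese messages.

```javascript
const http = require("http");

// Request options for POSTing one GELF JSON document to a GELF HTTP input (plain HTTP only).
function gelfRequestOptions(inputUrl, body) {
  const url = new URL(inputUrl);
  return {
    method: "POST",
    hostname: url.hostname,
    port: Number(url.port) || 80,
    path: url.pathname,
    // Content-Length is in bytes, not characters.
    headers: { "Content-Type": "application/json", "Content-Length": Buffer.byteLength(body) },
  };
}

// Resolves with the HTTP status code of the input's response.
function sendGelf(inputUrl, message) {
  const body = JSON.stringify(message);
  return new Promise((resolve, reject) => {
    const req = http.request(gelfRequestOptions(inputUrl, body), (res) => {
      res.resume(); // drain the response so the socket is released
      resolve(res.statusCode);
    });
    req.on("error", reject);
    req.end(body);
  });
}
```

`sendGelf("http://106.75.62.142:12201/gelf", { version: "1.1", host: "172.3.3.3", short_message: "hello" }).then(console.log)` should print the status code; the GELF HTTP input normally answers 202 (Accepted).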

View the pushed message:

Collecting service logs (Node.js as an example)

Any Node.js logging framework, such as log4js, bunyan or winston, will do. Here we use bunyan because it writes each log record as JSON.

const express = require('express');
const bodyParser = require('body-parser');
const bunyan = require('bunyan');

const log = bunyan.createLogger({
  name: 'server-bunyan',
  level: 'debug',
  streams: [{
    type: 'rotating-file',
    path: '/data/logs/server-bunyan.log',
    period: '1d',  // rotate daily
    count: 10      // number of rotated files to keep (10 is bunyan's default)
  }]
});

const app = express();
app.use(bodyParser.json());
app.use(bodyParser.urlencoded({ extended: true }));

app.get('/hello', (req, res) => {
  // Written as one JSON record; the query object becomes a "query" field
  log.info({ query: req.query }, 'hello');
  res.send('hello world');
});

app.listen(5004, '0.0.0.0', () => {
  log.info('app listening on 5004');
});
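This article ships the bunyan lines through rsyslog as raw text and splits them with an Extractor. If you would rather send bunyan records as GELF (for example to a GELF HTTP input), each JSON line can be mapped to GELF fields first. A sketch under stated assumptions: bunyanToGelf and the _logger, _pid and _record_id field names are hypothetical, and the bunyan to syslog level mapping is my own choice, not something either tool defines.

```javascript
// bunyan levels (10 trace .. 60 fatal) mapped to syslog severities: an assumed mapping.
const BUNYAN_TO_SYSLOG = { 10: 7, 20: 7, 30: 6, 40: 4, 50: 3, 60: 2 };
// bunyan's core record fields; everything else in a record is user data.
const BUNYAN_CORE = new Set(["v", "level", "name", "hostname", "pid", "time", "msg"]);

function bunyanToGelf(line) {
  const rec = JSON.parse(line);
  const gelf = {
    version: "1.1",
    host: rec.hostname,
    short_message: rec.msg || "-", // GELF requires a non-empty short_message
    timestamp: Date.parse(rec.time) / 1000, // GELF wants seconds since the epoch
    level: BUNYAN_TO_SYSLOG[rec.level] ?? 6, // unknown levels fall back to info
    _logger: rec.name,
    _pid: rec.pid,
  };
  for (const [key, value] of Object.entries(rec)) {
    if (BUNYAN_CORE.has(key)) continue;
    // "_id" is reserved in GELF, so a user field named "id" is renamed.
    const field = key === "id" ? "_record_id" : `_${key}`;
    // Additional fields must be strings or numbers; anything else is serialised to JSON.
    gelf[field] = typeof value === "string" || typeof value === "number" ? value : JSON.stringify(value);
  }
  return gelf;
}
```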

rsyslog configuration:

module(load="imfile" PollingInterval="")

# input
input(type="imfile" File="/data/logs/server.log" Tag="server" ruleset="push_remote")
input(type="imfile" File="/data/logs/detail.log" Tag="detail" ruleset="push_remote")
input(type="imfile" File="/data/logs/server-bunyan.log" Tag="bunyan_server" ruleset="push_remote")

# template
template(name="mytpl" type="string" string="node1 %programname% %msg%\n")

# output
ruleset(name="push_remote") {
    action(
        type="omfwd"
        protocol="tcp"
        target="x.x.x.x"
        port="515"
        template="mytpl"
        action.resumeRetryCount="-1"
        action.resumeInterval=""
        queue.filename="push-remote"
        queue.size=""
        queue.highwatermark=""
        queue.lowwatermark=""
        queue.maxdiskspace="100g"
        queue.saveonshutdown="on"
        queue.type="LinkedList"
        queue.maxfilesize="128m"
    )
}

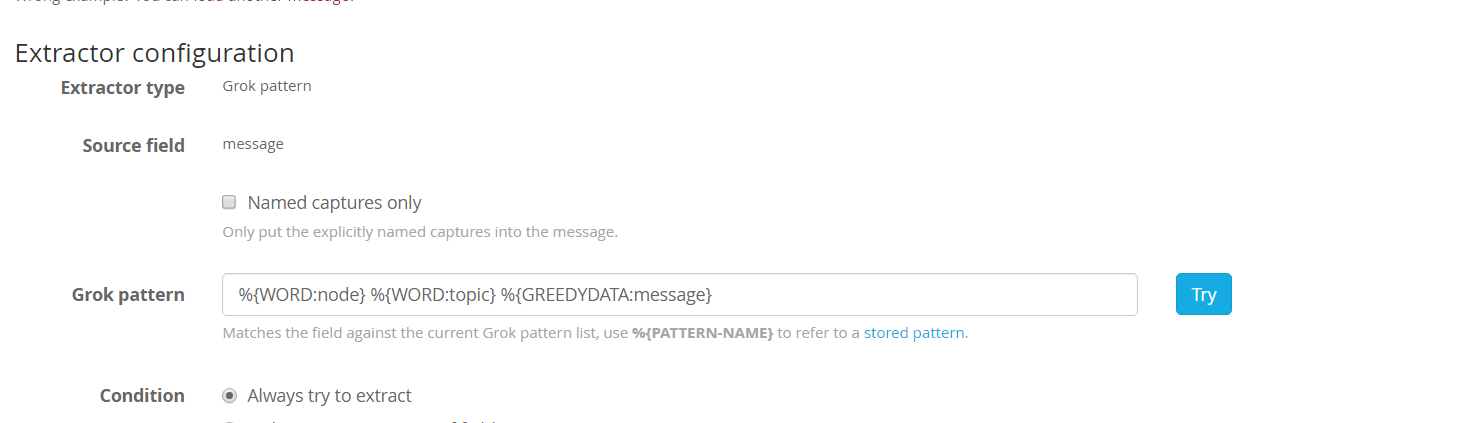

Create a new input listening on port 515. This time we also try out Graylog's Extractors by adding one to this input:

We added an extractor that pulls out two fields, node and topic.
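The rsyslog template "node1 %programname% %msg%\n" puts a node name and the imfile Tag in front of every line, and the extractor splits them back out. The extractor's actual settings are only in the screenshot, so this is a sketch assuming a regular-expression style split on the first two whitespace-separated tokens; formatLine and parseLine are hypothetical names.

```javascript
// Mimics the rsyslog template: "<node> <programname> <msg>\n".
function formatLine(node, programname, msg) {
  return `${node} ${programname} ${msg}\n`;
}

// First token -> node, second token (the imfile Tag) -> topic, the rest -> message.
const LINE = /^(\S+)\s+(\S+)\s+([\s\S]*?)\n?$/;

function parseLine(line) {
  const m = LINE.exec(line);
  if (!m) return null; // fewer than two tokens: not produced by the template
  return { node: m[1], topic: m[2], message: m[3] };
}
```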

View this input's messages in the web UI:

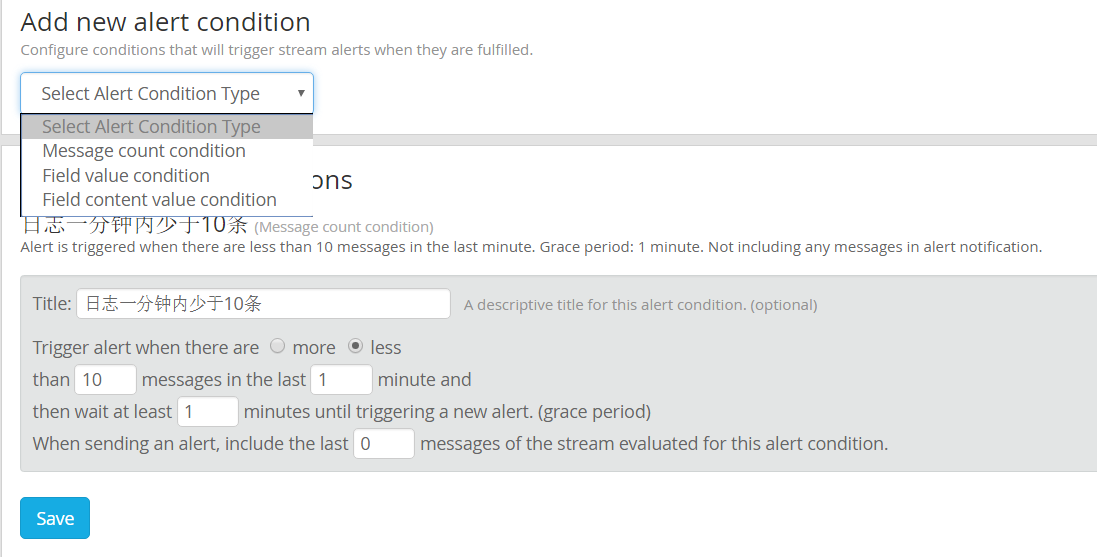

Alerts

Graylog's built-in alert conditions:

- Message count

- Field value (numeric)

- Field content
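To make the message count condition concrete, here is a sketch of its logic using the parameter names that appear in the alert payload further down (time, threshold, threshold_type, grace). It is not Graylog's code: evaluateMessageCount is a hypothetical name, strict comparisons are assumed, and counting the messages of the last `time` minutes is left to the caller.

```javascript
// `count` = messages the stream received in the last `params.time` minutes.
// nowMs / lastAlertMs are epoch milliseconds; lastAlertMs is null if no alert has fired yet.
function evaluateMessageCount(count, params, nowMs, lastAlertMs = null) {
  const type = String(params.threshold_type).toLowerCase();
  let breached;
  if (type === "less") breached = count < params.threshold;
  else if (type === "more") breached = count > params.threshold;
  else throw new Error(`unknown threshold_type: ${params.threshold_type}`);
  // For `grace` minutes after the previous alert, a new breach does not fire another alert.
  const inGrace = lastAlertMs !== null && nowMs - lastAlertMs < params.grace * 60000;
  return { breached, alert: breached && !inGrace };
}
```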

Built-in alert notifications:

- Email

- HTTP callback

Let's try out the HTTP callback.

Create a new Stream, open Manage Alerts, and add an alert condition:

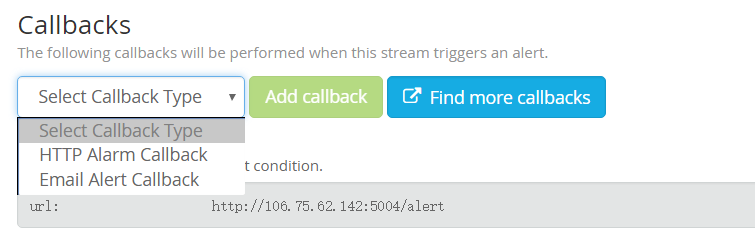

Create an HTTP callback:

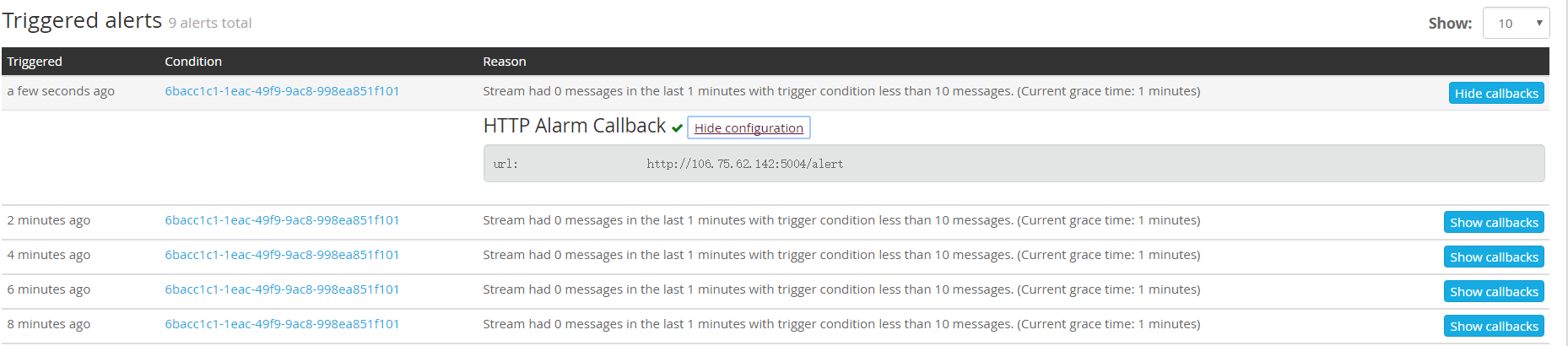

When the alert fires, Graylog sends a POST request to the callback URL with a body like this:

{
  "check_result": {
    "result_description": "Stream had 0 messages in the last 1 minutes with trigger condition less than 10 messages. (Current grace time: 1 minutes)",
    "triggered_condition": {
      "id": "6bacc1c1-1eac-49f9-9ac8-998ea851f101",
      "type": "message_count",
      "created_at": "2017-01-17T05:25:13.592Z",
      "creator_user_id": "admin",
      "title": "Fewer than 10 log messages in one minute",
      "parameters": {
        "grace": 1,
        "threshold_type": "less",
        "threshold": 10,
        "time": 1,
        "backlog":
      }
    },
    "triggered_at": "2017-01-17T05:44:11.921Z",
    "triggered": true,
    "matching_messages": []
  },
  "stream": {
    "creator_user_id": "admin",
    "outputs": [],
    "alert_receivers": {
      "emails": [
        "dongsoso@hotmail.com"
      ],
      "users": [
        "dongsoso@hotmail.com"
      ]
    },
    "matching_type": "AND",
    "description": "alert",
    "created_at": "2017-01-17T05:21:58.852Z",
    "disabled": false,
    "rules": [],
    "alert_conditions": [
      {
        "creator_user_id": "admin",
        "created_at": "2017-01-17T05:25:13.592Z",
        "id": "6bacc1c1-1eac-49f9-9ac8-998ea851f101",
        "type": "message_count",
        "title": "Fewer than 10 log messages in one minute",
        "parameters": {
          "grace": 1,
          "threshold_type": "less",
          "threshold": 10,
          "time": 1,
          "backlog":
        }
      }
    ],
    "id": "587da9f62ab79c0001352b7a",
    "title": "test",
    "content_pack": null
  }
}
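On the receiving side, the callback URL only needs to accept a POST and parse this JSON. A minimal sketch with Node's built-in http module; summarizeAlert and createAlertReceiver are hypothetical names, and only fields that appear in the sample payload above are read.

```javascript
const http = require("http");

// One-line summary built from check_result and stream.title.
function summarizeAlert(payload) {
  const result = payload.check_result;
  const condition = result.triggered_condition || {};
  return `[${result.triggered_at}] stream "${payload.stream.title}" / condition "${condition.title}": ${result.result_description}`;
}

// HTTP server for the callback URL; onAlert receives the summary and the parsed payload.
function createAlertReceiver(onAlert) {
  return http.createServer((req, res) => {
    if (req.method !== "POST") {
      res.writeHead(405);
      res.end();
      return;
    }
    let body = "";
    req.setEncoding("utf8");
    req.on("data", (chunk) => {
      body += chunk;
    });
    req.on("end", () => {
      let payload;
      let summary;
      try {
        payload = JSON.parse(body);
        summary = summarizeAlert(payload);
      } catch (err) {
        res.writeHead(400); // not JSON, or not shaped like an alert payload
        res.end(String(err.message));
        return;
      }
      onAlert(summary, payload);
      res.writeHead(200);
      res.end();
    });
  });
}
```

`createAlertReceiver((line) => console.log(line)).listen(8080)` would start it; port 8080 is an arbitrary choice and must match the URL configured in the HTTP callback.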

View the alert history:

Many more useful features are waiting to be discovered…

Official documentation: http://docs.graylog.org/en/2.3/index.html
