OceanBase初体验之部署生产标准的三节点分布式集群

前置条件

OceanBase 数据库集群至少由三个节点组成,所以先准备好3台服务器:

| IP | 配置 | 操作系统 |

|---|---|---|

| x.x.x.150 | Intel x86 12C 64G内存 1T SSD | CentOS 7.9 |

| x.x.x.155 | Intel x86 12C 64G内存 1T SSD | CentOS 7.9 |

| x.x.x.222 | Intel x86 12C 64G内存 1T SSD | CentOS 7.9 |

关于运行 OceanBase 集群对于硬件资源和系统软件的要求,大家可以参考官方建议:

https://www.oceanbase.com/docs/common-oceanbase-database-cn-1000000000508277

服务器特殊的配置项(每台都要设置):

$ vi /etc/sysctl.conf

vm.swappiness = 0

vm.max_map_count = 655360

vm.min_free_kbytes = 2097152

vm.overcommit_memory = 0

fs.file-max = 6573688

$ sysctl -p

$ echo never > /sys/kernel/mm/redhat_transparent_hugepage/enabled

$ echo never > /sys/kernel/mm/transparent_hugepage/enabled

$ systemctl disable firewalld

$ systemctl stop firewalld

如果是使用的物理机部署,建议在BIOS中开启最大性能模式,X86芯片开启超线程。三台节点保证时间一致。

OceanBase 提供了多种部署方式,我们这里采用命令行部署,官方提供了集群管理工具OBD(俗称黑屏部署)。

下载安装包,直接上全家桶 All in One 的版本:https://www.oceanbase.com/softwarecenter

初始化中控机

在三台机器中任意挑选一台当做集群的中控机,通过OBD来操作整个集群,中控机只是用于管理集群,用单独的机器部署也可以。

把安装包上传到中控机,先把OBD装好:

[ob@localhost ~]$ tar -xzf oceanbase-all-in-one-*.tar.gz

[ob@localhost ~]$ cd oceanbase-all-in-one/bin/

[ob@localhost ~]$ ./install.sh

[ob@localhost ~]$ source ~/.oceanbase-all-in-one/bin/env.sh

到这里集群管理工具 obd 和客户端连接工具 obclient 就都装好了。

[ob@localhost ~]$ which obd

~/.oceanbase-all-in-one/obd/usr/bin/obd

[ob@localhost ~]$ which obclient

~/.oceanbase-all-in-one/obclient/u01/obclient/bin/obclient

编写集群部署配置

在oceanbase-all-in-one/conf目录下放了很多配置文件示例,可根据实际部署需要来修改。我这里要部署一套标准的 OceanBase 分布式集群,包含的组件有:

- observer - 数据库核心服务

- obproxy - 数据库代理,对多节点做负载均衡

- obagent - 监控采集服务

- grafana - 监控显示服务

- prometheus - 监控数据存储

配置文件内容如下:

## Only need to configure when remote login is required

user:

username: ob

password: oceanbase

# key_file: your ssh-key file path if need

# port: your ssh port, default 22

# timeout: ssh connection timeout (second), default 30

oceanbase-ce:

servers:

- name: server1

# Please don't use hostname, only IP can be supported

ip: x.x.x.222

- name: server2

ip: x.x.x.150

- name: server3

ip: x.x.x.155

global:

# Please set devname as the network adaptor's name whose ip is in the setting of severs.

# if set severs as "127.0.0.1", please set devname as "lo"

# if current ip is 192.168.1.10, and the ip's network adaptor's name is "eth0", please use "eth0"

devname: eno1

# if current hardware's memory capacity is smaller than 50G, please use the setting of "mini-single-example.yaml" and do a small adjustment.

memory_limit: 32G # The maximum running memory for an observer

# The reserved system memory. system_memory is reserved for general tenants. The default value is 30G.

system_memory: 8G

datafile_size: 50G # Size of the data file.

log_disk_size: 20G # The size of disk space used by the clog files.

syslog_level: INFO # System log level. The default value is INFO.

enable_syslog_wf: false # Print system logs whose levels are higher than WARNING to a separate log file. The default value is true.

enable_syslog_recycle: true # Enable auto system log recycling or not. The default value is false.

max_syslog_file_count: 4 # The maximum number of reserved log files before enabling auto recycling. The default value is 0.

# observer cluster name, consistent with obproxy's cluster_name

appname: obcluster

production_mode: false

# root_password: # root user password, can be empty

# proxyro_password: # proxyro user pasword, consistent with obproxy's observer_sys_password, can be empty

# In this example , support multiple ob process in single node, so different process use different ports.

# If deploy ob cluster in multiple nodes, the port and path setting can be same.

server1:

mysql_port: 2881 # External port for OceanBase Database. The default value is 2881. DO NOT change this value after the cluster is started.

rpc_port: 2882 # Internal port for OceanBase Database. The default value is 2882. DO NOT change this value after the cluster is started.

# The working directory for OceanBase Database. OceanBase Database is started under this directory. This is a required field.

home_path: /home/ob/deploy/observer

# The directory for data storage. The default value is $home_path/store.

# data_dir: /data

# The directory for clog, ilog, and slog. The default value is the same as the data_dir value.

# redo_dir: /redo

zone: zone1

server2:

mysql_port: 2881 # External port for OceanBase Database. The default value is 2881. DO NOT change this value after the cluster is started.

rpc_port: 2882 # Internal port for OceanBase Database. The default value is 2882. DO NOT change this value after the cluster is started.

# The working directory for OceanBase Database. OceanBase Database is started under this directory. This is a required field.

home_path: /home/ob/deploy/observer

# The directory for data storage. The default value is $home_path/store.

# data_dir: /data

# The directory for clog, ilog, and slog. The default value is the same as the data_dir value.

# redo_dir: /redo

zone: zone2

server3:

mysql_port: 2881 # External port for OceanBase Database. The default value is 2881. DO NOT change this value after the cluster is started.

rpc_port: 2882 # Internal port for OceanBase Database. The default value is 2882. DO NOT change this value after the cluster is started.

# The working directory for OceanBase Database. OceanBase Database is started under this directory. This is a required field.

home_path: /home/ob/deploy/observer

# The directory for data storage. The default value is $home_path/store.

# data_dir: /data

# The directory for clog, ilog, and slog. The default value is the same as the data_dir value.

# redo_dir: /redo

zone: zone3

obproxy-ce:

# Set dependent components for the component.

# When the associated configurations are not done, OBD will automatically get the these configurations from the dependent components.

depends:

- oceanbase-ce

servers:

- x.x.x.222

global:

listen_port: 2883 # External port. The default value is 2883.

prometheus_listen_port: 2884 # The Prometheus port. The default value is 2884.

home_path: /home/ob/deploy/obproxy

# oceanbase root server list

# format: ip:mysql_port;ip:mysql_port. When a depends exists, OBD gets this value from the oceanbase-ce of the depends.

# rs_list: 192.168.1.2:2881;192.168.1.3:2881;192.168.1.4:2881

enable_cluster_checkout: false

# observer cluster name, consistent with oceanbase-ce's appname. When a depends exists, OBD gets this value from the oceanbase-ce of the depends.

# cluster_name: obcluster

skip_proxy_sys_private_check: true

enable_strict_kernel_release: false

# obproxy_sys_password: # obproxy sys user password, can be empty. When a depends exists, OBD gets this value from the oceanbase-ce of the depends.

# observer_sys_password: # proxyro user pasword, consistent with oceanbase-ce's proxyro_password, can be empty. When a depends exists, OBD gets this value from the oceanbase-ce of the depends.

obagent:

depends:

- oceanbase-ce

servers:

- name: server1

# Please don't use hostname, only IP can be supported

ip: x.x.x.222

- name: server2

ip: x.x.x.150

- name: server3

ip: x.x.x.155

global:

home_path: /home/ob/deploy/obagent

ob_monitor_status: active

prometheus:

depends:

- obagent

servers:

- x.x.x.222

global:

home_path: /home/ob/deploy/prometheus

grafana:

depends:

- prometheus

servers:

- x.x.x.222

global:

home_path: /home/ob/deploy/grafana

login_password: oceanbase

配置文件的整体格式是按组件来配置,三个节点定义成三个server,分布在三个zone,保存了三副本数据,参数定义参考注释即可。

这里要注意几个端口,observer 对外服务是用2881,对内节点间通信是用2882,obproxy是用2883。

部署集群

准备好配置文件后部署集群就两行命令的事,先执行:

[ob@localhost ~]$ obd cluster deploy obtest -c topology.yaml

这一步会把各组件需要的文件通过shh传到各个节点上,同时创建目录、服务、给权限等等。

等最后输出下面这个信息就表示部署成功了。

接下来按提示启动集群即可:

[ob@localhost ~]$ obd cluster start obtest

Get local repositories ok

Search plugins ok

Load cluster param plugin ok

Open ssh connection ok

Check before start observer ok

Check before start obproxy ok

Check before start obagent ok

Check before start prometheus ok

Check before start grafana ok

Start observer ok

observer program health check ok

Connect to observer x.x.x.222:2881 ok

Initialize oceanbase-ce ok

Start obproxy ok

obproxy program health check ok

Connect to obproxy ok

Initialize obproxy-ce ok

Start obagent ok

obagent program health check ok

Connect to Obagent ok

Start promethues ok

prometheus program health check ok

Connect to Prometheus ok

Initialize prometheus ok

Start grafana ok

grafana program health check ok

Connect to grafana ok

Initialize grafana ok

Wait for observer init ok

+-----------------------------------------------+

| observer |

+-------------+---------+------+-------+--------+

| ip | version | port | zone | status |

+-------------+---------+------+-------+--------+

| x.x.x.150 | 4.2.2.0 | 2881 | zone2 | ACTIVE |

| x.x.x.155 | 4.2.2.0 | 2881 | zone3 | ACTIVE |

| x.x.x.222 | 4.2.2.0 | 2881 | zone1 | ACTIVE |

+-------------+---------+------+-------+--------+

obclient -hx.x.x.150 -P2881 -uroot -p'KHaaKw9dcLwXNvKrT3lc' -Doceanbase -A

+-----------------------------------------------+

| obproxy |

+-------------+------+-----------------+--------+

| ip | port | prometheus_port | status |

+-------------+------+-----------------+--------+

| x.x.x.222 | 2883 | 2884 | active |

+-------------+------+-----------------+--------+

obclient -hx.x.x.222 -P2883 -uroot -p'KHaaKw9dcLwXNvKrT3lc' -Doceanbase -A

+----------------------------------------------------------------+

| obagent |

+-------------+--------------------+--------------------+--------+

| ip | mgragent_http_port | monagent_http_port | status |

+-------------+--------------------+--------------------+--------+

| x.x.x.222 | 8089 | 8088 | active |

| x.x.x.150 | 8089 | 8088 | active |

| x.x.x.155 | 8089 | 8088 | active |

+-------------+--------------------+--------------------+--------+

+-------------------------------------------------------+

| prometheus |

+-------------------------+-------+------------+--------+

| url | user | password | status |

+-------------------------+-------+------------+--------+

| http://x.x.x.222:9090 | admin | qISoDdWHRX | active |

+-------------------------+-------+------------+--------+

+------------------------------------------------------------------+

| grafana |

+-------------------------------------+-------+-----------+--------+

| url | user | password | status |

+-------------------------------------+-------+-----------+--------+

| http://x.x.x.222:3000/d/oceanbase | admin | oceanbase | active |

+-------------------------------------+-------+-----------+--------+

obtest running

Trace ID: 98204f6e-e1d5-11ee-b268-1c697a639d50

If you want to view detailed obd logs, please run: obd display-trace 98204f6e-e1d5-11ee-b268-1c697a639d50

还可以通过 list 和 display 命名查看集群状态:

[ob@localhost ~]$ obd cluster list

[ob@localhost ~]$ obd cluster display obtest

操作集群

前面启动集群的时候已经打印出了连接集群的方式,这里连接入口有两种。

一种是直连任意一台 observer,另一种是走负载均衡代理连接 obproxy,两种方式注意区别 ip 和端口号。另外连接工具用 obclient 或者 mysql 都可以。

[ob@localhost ~]$ obclient -hx.x.x.222 -P2883 -uroot -p'KHaaKw9dcLwXNvKrT3lc' -Doceanbase -A

Welcome to the OceanBase. Commands end with ; or \g.

Your OceanBase connection id is 5

Server version: OceanBase_CE 4.2.2.0 (r100010012024022719-c984fe7cb7a4cef85a40323a0d073f0c9b7b8235) (Built Feb 27 2024 19:20:54)

Copyright (c) 2000, 2018, OceanBase and/or its affiliates. All rights reserved.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

obclient [oceanbase]>

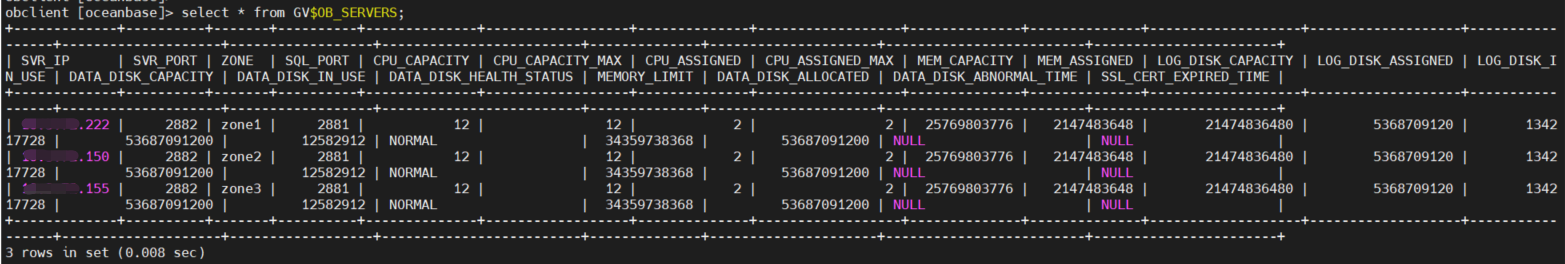

先看一下3个节点的情况:

再看三副本的分布情况:

obclient [oceanbase]> SELECT TENANT_ID,TENANT_NAME,TENANT_TYPE,PRIMARY_ZONE,LOCALITY FROM oceanbase.DBA_OB_TENANTS;

+-----------+-------------+-------------+--------------+---------------------------------------------+

| TENANT_ID | TENANT_NAME | TENANT_TYPE | PRIMARY_ZONE | LOCALITY |

+-----------+-------------+-------------+--------------+---------------------------------------------+

| 1 | sys | SYS | RANDOM | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 |

+-----------+-------------+-------------+--------------+---------------------------------------------+

1 row in set (0.012 sec)

LOCALITY字段记录了副本的分布,这里面FULL代表全量副本,可以支持读写和参与投票选举leader,后面是该副本分布在哪个zone。

尝试创建跨zone的资源池和租户,注意 UNIT_NUM 不能超过每个zone里的 observer 数量:

obclient [oceanbase]> CREATE RESOURCE UNIT uc1 MAX_CPU 1, MEMORY_SIZE '2G', LOG_DISK_SIZE '2G';

Query OK, 0 rows affected (0.009 sec)

obclient [oceanbase]> CREATE RESOURCE POOL rp1 UNIT 'uc1', UNIT_NUM 1, ZONE_LIST ('zone1', 'zone2', 'zone3');

Query OK, 0 rows affected (0.029 sec)

obclient [oceanbase]> CREATE TENANT tt resource_pool_list=('rp1') set ob_tcp_invited_nodes = '%';

Query OK, 0 rows affected (51.995 sec)

登录到新租户里面做一些数据操作(新租户里的root用户默认是空密码):

[ob@localhost ~]$ obclient -h10.3.72.222 -P2883 -uroot@tt -Doceanbase -A

Welcome to the OceanBase. Commands end with ; or \g.

Your OceanBase connection id is 59

Server version: OceanBase_CE 4.2.2.0 (r100010012024022719-c984fe7cb7a4cef85a40323a0d073f0c9b7b8235) (Built Feb 27 2024 19:20:54)

Copyright (c) 2000, 2018, OceanBase and/or its affiliates. All rights reserved.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

obclient [oceanbase]>

obclient [oceanbase]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| oceanbase |

| test |

+--------------------+

4 rows in set (0.002 sec)

obclient [oceanbase]> create database tt_db1;

Query OK, 1 row affected (0.069 sec)

obclient [oceanbase]> use tt_db1;

Database changed

obclient [tt_db1]> create table t1(id int primary key,name varchar(50),dt datetime);

Query OK, 0 rows affected (0.234 sec)

obclient [tt_db1]> select * from t1;

Empty set (0.022 sec)

obclient [tt_db1]> insert into t1 values(1,'aaa',now());

Query OK, 1 row affected (0.004 sec)

obclient [tt_db1]> select * from t1;

+----+------+---------------------+

| id | name | dt |

+----+------+---------------------+

| 1 | aaa | 2024-03-14 16:24:00 |

+----+------+---------------------+

1 row in set (0.001 sec)

下一篇尝试从 mysql 迁移数据到 OceanBase。

OceanBase初体验之部署生产标准的三节点分布式集群的更多相关文章

- 部署elasticsearch(三节点)集群+filebeat+kibana

用途 ▷ 通过各个beat实时收集日志.传输至elasticsearch集群 ▷ 通过kibana展示日志 实验架构 名称:IP地址:CPU:内存 kibana&cerebro:192.168 ...

- rancher三节点k8s集群部署例子

rancher三节点k8s集群部署例子 待办 https://rorschachchan.github.io/2019/07/25/使用Rancher2-1部署k8s/

- 理解 OpenStack Swift (1):OpenStack + 三节点Swift 集群+ HAProxy + UCARP 安装和配置

本系列文章着重学习和研究OpenStack Swift,包括环境搭建.原理.架构.监控和性能等. (1)OpenStack + 三节点Swift 集群+ HAProxy + UCARP 安装和配置 ( ...

- 部署AlwaysOn第三步:集群资源组的健康检测和故障转移

资源组是由一个或多个资源组成的组,WSFC的故障转移是以资源组为单位的,资源组中的资源是相互依赖的.一个资源所依赖的其他资源必须和该资源处于同一个资源组,跨资源组的依赖关系是不存在的.在任何时刻,每个 ...

- 三节点Hadoop集群搭建

1. 基础环境搭建 新建3个CentOS6.5操作系统的虚拟机,命名(可自定)为masternode.slavenode1和slavenode2.该过程参考上一篇博文CentOS6.5安装配置详解 2 ...

- Ceph实战入门系列(一)——三节点Ceph集群的安装与部署

安装文档:http://blog.csdn.net/u014139942/article/details/53639124

- Cassandra集群:一,搭建一个三节点的集群

环境准备 JDK1.8 http://download.oracle.com/otn/java/jdk/8u171-b11/512cd62ec5174c3487ac17c61aaa89e8/jdk-8 ...

- Solr系列二:solr-部署详解(solr两种部署模式介绍、独立服务器模式详解、SolrCloud分布式集群模式详解)

一.solr两种部署模式介绍 Standalone Server 独立服务器模式:适用于数据规模不大的场景 SolrCloud 分布式集群模式:适用于数据规模大,高可靠.高可用.高并发的场景 二.独 ...

- Storm入门教程 第三章Storm集群安装部署步骤、storm开发环境

一. Storm集群组件 Storm集群中包含两类节点:主控节点(Master Node)和工作节点(Work Node).其分别对应的角色如下: 主控节点(Master Node)上运行一个被称为N ...

- Kubernetes(k8s)集群部署(k8s企业级Docker容器集群管理)系列之flanneld网络介绍及部署(三)

0.前言 整体架构目录:ASP.NET Core分布式项目实战-目录 k8s架构目录:Kubernetes(k8s)集群部署(k8s企业级Docker容器集群管理)系列目录 一.flanneld介绍 ...

随机推荐

- electron 中如何安装或更新 vuejs-devtool 最新稳定版

手上正在开发的项目是vue3.0 通过添加 vue-cli-plugin-electron-builder 插件生成 electron 项目,项目在开发过程中发现 beta版的 vuejs-devto ...

- 修复华硕笔记本fn+f2在ubuntu下wifi不能够正常使用和WiFi Disabled (Hard-blocked) (译文)

PS:要转载请注明出处,本人版权所有. PS: 这个只是基于<我自己>的理解, 如果和你的原则及想法相冲突,请谅解,勿喷. 前置说明 本文发布于 2014-12-22 11:49:16 ...

- 反转链表——java

给定一个链表,请你将链表反转过来. 举例:原链表:1→2→3→4→5→null 反转链表:5→4→3→2→1→null 代码: package algorithm_niuke; public clas ...

- 【732. 我的日程安排表 III】差分数组

class MyCalendarThree { private TreeMap<Integer, Integer> cnt; public MyCalendarThree() { cnt ...

- Sealos 云开发:Laf 出嫁了,与 Sealos 正式结合!

千呼万唤始出来,Laf 云开发最近已正式与 Sealos 融合,入住 Sealos!大家可以登录 Sealos 公有云 体验和使用,现在正式介绍一下 Sealos 云开发. Sealos 云开发是什么 ...

- 记录--移动端 H5 Tab 如何滚动居中

这里给大家分享我在网上总结出来的一些知识,希望对大家有所帮助 移动端 H5 Tab 如何滚动居中 Tab 在 PC 端.移动端应用都上很常见,不过 Tab 在移动端 比 PC 端更复杂.为什么呢?移动 ...

- 使用Go语言开发一个短链接服务:六、链接跳转

章节 使用Go语言开发一个短链接服务:一.基本原理 使用Go语言开发一个短链接服务:二.架构设计 使用Go语言开发一个短链接服务:三.项目目录结构设计 使用Go语言开发一个短链接服务:四.生成 ...

- 一文讲透Java核心技术之高可扩展利器SPI

大家好,我是冰河~~ SPI的概念 JAVA SPI = 基于接口的编程+策略模式+配置文件 的动态加载机制 SPI的使用场景 Java是一种面向对象语言,虽然Java8开始支持函数式编程和Strea ...

- Chrome浏览器使用小技巧

前言 Notes made by IT-Pupil-Poo-Poo-Cai(IT小学生蔡坨坨). The notes are for reference only. Personal blog:www ...

- STM32中RFID模块(MFRC522)简单应用

1. 前言 此篇只是对RFID模块的简单概述以及应用,没有原理,对提供的店家提供的代码,进行注释,以及简单使用流程 2. 函数 //功 能:寻卡 //参数说明: req_code[IN]:寻卡方式 ...