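CDH 5.14.0: Notes on a Hive Bug

Recently at work, queries that use a CASE WHEN expression kept failing, and the logs showed an array index out of bounds. I could not see why at first, so I analyzed it step by step and found that it is a bug in Hive itself. I am sharing it here partly as a record and partly so that others can take a look; corrections are welcome.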

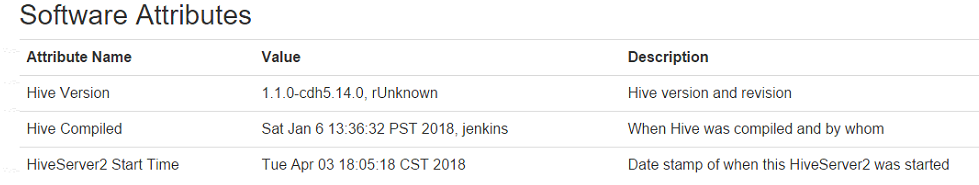

- Distribution in use: CDH 5.14.0.

- Hive version: the one bundled with CDH 5.14.0.

- A self-built log table, log:

+----------------------------------------------------+--+

| createtab_stmt |

+----------------------------------------------------+--+

| CREATE TABLE `log`( |

| `mandt` char(3), |

| `zdate` char(10), |

| `ztime` char(8), |

| `zguid` char(20), |

| `ztype` char(1), |

| `zfuncname` varchar(30), |

| `zseq` varchar(2), |

| `xmldata` string, |

| `xmldata2` string, |

| `field1` varchar(20), |

| `field2` varchar(20), |

| `field3` varchar(20), |

| `field4` varchar(20)) |

| CLUSTERED BY ( |

| zdate) |

| INTO 12 BUCKETS |

| ROW FORMAT SERDE |

| 'org.apache.hadoop.hive.ql.io.orc.OrcSerde' |

| WITH SERDEPROPERTIES ( |

| 'field.delim'='\t', |

| 'serialization.format'='\t') |

| STORED AS INPUTFORMAT |

| 'org.apache.hadoop.hive.ql.io.orc.OrcInputFormat' |

| OUTPUTFORMAT |

| 'org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat' |

| LOCATION |

| 'hdfs://hserver1n:8020/user/hive/warehouse/sap.db/log' |

| TBLPROPERTIES ( |

| 'COLUMN_STATS_ACCURATE'='true', |

| 'compactor.mapreduce.map.memory.mb'='1024', |

| 'compactorthreshold.hive.compactor.delta.num.threshold'='4', |

| 'compactorthreshold.hive.compactor.delta.pct.threshold'='0.5', |

| 'last_modified_by'='hdfs', |

| 'last_modified_time'='1522318887', |

| 'numFiles'='14', |

| 'numRows'='1000000', |

| 'rawDataSize'='0', |

| 'totalSize'='135774020', |

| 'transactional'='true', |

| 'transient_lastDdlTime'='1522393096') |

+----------------------------------------------------+--+

41 rows selected (0.105 seconds)

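The table has 13 columns, is stored as ORC (a columnar format), is clustered into 12 buckets, and has transactions enabled.

- Query:

select zdate,case zdate when '2013.07.23' then 'a' else 'b' end as zfunc from log limit 1;

- Error output (the log is long, so part of it is omitted). The logged expression is CASE (zfuncname) WHEN ('ZFI_VENDOR_TO_CRM'), so this log appears to come from a variant of the query above that switches on zfuncname instead of zdate: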

Log Type: syslog

Log Upload Time: Tue Apr 03 17:09:14 +0800 2018

Log Length: 80385

2018-04-03 17:08:36,788 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator: Initializing Self MAP[4]

2018-04-03 17:08:36,788 INFO [main] org.apache.hadoop.hive.ql.exec.TableScanOperator: Initializing Self TS[0]

2018-04-03 17:08:36,788 INFO [main] org.apache.hadoop.hive.ql.exec.TableScanOperator: Operator 0 TS initialized

2018-04-03 17:08:36,788 INFO [main] org.apache.hadoop.hive.ql.exec.TableScanOperator: Initializing children of 0 TS

2018-04-03 17:08:36,788 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator: Initializing child 1 SEL

2018-04-03 17:08:36,788 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator: Initializing Self SEL[1]

2018-04-03 17:08:36,792 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator: Operator 1 SEL initialized

2018-04-03 17:08:36,792 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator: Initializing children of 1 SEL

2018-04-03 17:08:36,792 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorLimitOperator: Initializing child 2 LIM

2018-04-03 17:08:36,792 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorLimitOperator: Initializing Self LIM[2]

2018-04-03 17:08:36,792 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorLimitOperator: Operator 2 LIM initialized

2018-04-03 17:08:36,792 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorLimitOperator: Initializing children of 2 LIM

2018-04-03 17:08:36,792 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorFileSinkOperator: Initializing child 3 FS

2018-04-03 17:08:36,792 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorFileSinkOperator: Initializing Self FS[3]

2018-04-03 17:08:36,793 INFO [main] org.apache.hadoop.conf.Configuration.deprecation: mapred.task.id is deprecated. Instead, use mapreduce.task.attempt.id

2018-04-03 17:08:36,908 INFO [main] org.apache.hadoop.hive.ql.exec.FileSinkOperator: Using serializer : class org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe[[[B@5d13d4e]:[_col0, _col1]:[char(10), string]] and formatter : org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat@7e1b4e9d

2018-04-03 17:08:36,908 INFO [main] org.apache.hadoop.conf.Configuration.deprecation: mapred.healthChecker.script.timeout is deprecated. Instead, use mapreduce.tasktracker.healthchecker.script.timeout

2018-04-03 17:08:36,909 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorFileSinkOperator: Operator 3 FS initialized

2018-04-03 17:08:36,909 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorFileSinkOperator: Initialization Done 3 FS

2018-04-03 17:08:36,909 INFO [main] org.apache.hadoop.conf.Configuration.deprecation: mapred.task.is.map is deprecated. Instead, use mapreduce.task.ismap

2018-04-03 17:08:36,909 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorLimitOperator: Initialization Done 2 LIM

2018-04-03 17:08:36,909 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator: Initialization Done 1 SEL

2018-04-03 17:08:36,909 INFO [main] org.apache.hadoop.hive.ql.exec.TableScanOperator: Initialization Done 0 TS

2018-04-03 17:08:36,909 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator: Initialization Done 4 MAP

2018-04-03 17:08:38,231 FATAL [main] org.apache.hadoop.hive.ql.exec.mr.ExecMapper: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing row

at org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator.process(VectorMapOperator.java:52)

at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.map(ExecMapper.java:170)

at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:54)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:459)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1917)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Error evaluating CASE (zfuncname) WHEN ('ZFI_VENDOR_TO_CRM') THEN ('a') ELSE ('b') END

at org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator.processOp(VectorSelectOperator.java:126)

at org.apache.hadoop.hive.ql.exec.Operator.forward(Operator.java:815)

at org.apache.hadoop.hive.ql.exec.TableScanOperator.processOp(TableScanOperator.java:98)

at org.apache.hadoop.hive.ql.exec.MapOperator$MapOpCtx.forward(MapOperator.java:157)

at org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator.process(VectorMapOperator.java:45)

... 9 more

Caused by: java.lang.ArrayIndexOutOfBoundsException: 13

at org.apache.hadoop.hive.ql.exec.vector.udf.VectorUDFAdaptor.evaluate(VectorUDFAdaptor.java:117)

at org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator.processOp(VectorSelectOperator.java:124)

... 13 more

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator: 4 finished. closing...

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator: RECORDS_IN:0

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator: DESERIALIZE_ERRORS:0

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.TableScanOperator: 0 finished. closing...

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator: 1 finished. closing...

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorLimitOperator: 2 finished. closing...

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorFileSinkOperator: 3 finished. closing...

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.FileSinkOperator: FS[3]: records written - 0

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.FileSinkOperator: Final Path: FS hdfs://hserver1n:8020/tmp/hive/hdfs/e62bf883-074a-44b2-8b04-2b69d4475a66/hive_2018-04-03_17-08-17_236_6563852616584890762-25/-mr-10000/.hive-staging_hive_2018-04-03_17-08-17_236_6563852616584890762-25/_tmp.-ext-10001/000000_0

2018-04-03 17:08:38,232 INFO [main] org.apache.hadoop.hive.ql.exec.FileSinkOperator: Writing to temp file: FS hdfs://hserver1n:8020/tmp/hive/hdfs/e62bf883-074a-44b2-8b04-2b69d4475a66/hive_2018-04-03_17-08-17_236_6563852616584890762-25/-mr-10000/.hive-staging_hive_2018-04-03_17-08-17_236_6563852616584890762-25/_task_tmp.-ext-10001/_tmp.000000_0

2018-04-03 17:08:38,232 INFO [main] org.apache.hadoop.hive.ql.exec.FileSinkOperator: New Final Path: FS hdfs://hserver1n:8020/tmp/hive/hdfs/e62bf883-074a-44b2-8b04-2b69d4475a66/hive_2018-04-03_17-08-17_236_6563852616584890762-25/-mr-10000/.hive-staging_hive_2018-04-03_17-08-17_236_6563852616584890762-25/_tmp.-ext-10001/000000_0

2018-04-03 17:08:38,623 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorFileSinkOperator: RECORDS_OUT_0:0

2018-04-03 17:08:38,623 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorFileSinkOperator: 3 Close done

2018-04-03 17:08:38,623 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorLimitOperator: 2 Close done

2018-04-03 17:08:38,623 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator: 1 Close done

2018-04-03 17:08:38,623 INFO [main] org.apache.hadoop.hive.ql.exec.TableScanOperator: 0 Close done

2018-04-03 17:08:38,623 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator: 4 Close done

2018-04-03 17:08:38,624 WARN [main] org.apache.hadoop.mapred.YarnChild: Exception running child : java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing row

at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.map(ExecMapper.java:179)

at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:54)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:459)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1917)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing row

at org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator.process(VectorMapOperator.java:52)

at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.map(ExecMapper.java:170)

... 8 more

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Error evaluating CASE (zfuncname) WHEN ('ZFI_VENDOR_TO_CRM') THEN ('a') ELSE ('b') END

at org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator.processOp(VectorSelectOperator.java:126)

at org.apache.hadoop.hive.ql.exec.Operator.forward(Operator.java:815)

at org.apache.hadoop.hive.ql.exec.TableScanOperator.processOp(TableScanOperator.java:98)

at org.apache.hadoop.hive.ql.exec.MapOperator$MapOpCtx.forward(MapOperator.java:157)

at org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator.process(VectorMapOperator.java:45)

... 9 more

Caused by: java.lang.ArrayIndexOutOfBoundsException: 13

at org.apache.hadoop.hive.ql.exec.vector.udf.VectorUDFAdaptor.evaluate(VectorUDFAdaptor.java:117)

at org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator.processOp(VectorSelectOperator.java:124)

... 13 more

2018-04-03 17:08:39,369 INFO [main] org.apache.hadoop.mapred.Task: Runnning cleanup for the task

2018-04-03 17:08:39,496 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Stopping MapTask metrics system...

2018-04-03 17:08:39,496 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: MapTask metrics system stopped.

2018-04-03 17:08:39,496 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: MapTask metrics system shutdown complete.

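Error analysis:

The log attributes the failure to index 13 being outside the bounds of an array. My first guess was that, because the table has 12 buckets, Hive treated 13 as exceeding the bucket count; the tests below check that guess.

To rule out causes one at a time, I first created a simple table: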

0: jdbc:hive2://localhost:10000> create table log2(

. . . . . . . . . . . . . . . .> mandt char(3),

. . . . . . . . . . . . . . . .> zdate char(10),

. . . . . . . . . . . . . . . .> ztime char(8),

. . . . . . . . . . . . . . . .> zguid char(20),

. . . . . . . . . . . . . . . .> ztype char(1),

. . . . . . . . . . . . . . . .> zfuncname varchar(30),

. . . . . . . . . . . . . . . .> zseq varchar(2),

. . . . . . . . . . . . . . . .> xmldata string,

. . . . . . . . . . . . . . . .> xmldata2 string,

. . . . . . . . . . . . . . . .> field1 varchar(20),

. . . . . . . . . . . . . . . .> field2 varchar(20),

. . . . . . . . . . . . . . . .> field3 varchar(20),

. . . . . . . . . . . . . . . .> field4 varchar(20))

. . . . . . . . . . . . . . . .> row format delimited fields terminated by '\t';

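I then loaded part of the data from the log table into this table and reran the query above (with the table name changed to the new table, log2). It returned the correct result.

Next, on top of that, I stored the table as ORC; the result was still correct.

Then, on top of ORC, I added bucketing; the result was still correct, so bucketing is not the cause.

Finally, on top of bucketing, I made the table transactional, and the error appeared. The error is therefore triggered by enabling transactions on the table.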
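The last elimination step can be sketched in HiveQL roughly as follows. This is only an illustration under assumptions: the table name log2_acid is hypothetical, and the three SET statements are the session settings usually needed for transactional tables on Hive 1.x, which the post does not show. Removing the TBLPROPERTIES clause gives the bucketed ORC variant, and also removing CLUSTERED BY gives the plain ORC variant.

```sql
-- Assumed session settings for ACID tables on Hive 1.x (not shown in the post).
SET hive.support.concurrency=true;
SET hive.txn.manager=org.apache.hadoop.hive.ql.lockmgr.DbTxnManager;
SET hive.enforce.bucketing=true;

-- log2_acid is a hypothetical name; the columns are the same as in log2.
CREATE TABLE log2_acid (
  mandt     char(3),
  zdate     char(10),
  ztime     char(8),
  zguid     char(20),
  ztype     char(1),
  zfuncname varchar(30),
  zseq      varchar(2),
  xmldata   string,
  xmldata2  string,
  field1    varchar(20),
  field2    varchar(20),
  field3    varchar(20),
  field4    varchar(20)
)
CLUSTERED BY (zdate) INTO 12 BUCKETS      -- bucketing alone did not trigger the error
STORED AS ORC                             -- ORC alone did not trigger the error
TBLPROPERTIES ('transactional'='true');   -- adding this is what triggered it

-- Copy the sample rows from the plain-text table, then rerun the failing query.
INSERT INTO TABLE log2_acid SELECT * FROM log2;

SELECT zdate,
       CASE zdate WHEN '2013.07.23' THEN 'a' ELSE 'b' END AS zfunc
FROM log2_acid
LIMIT 1;
```

Keeping the column list and data identical across the variants means the only difference between a passing and a failing run is the table property under test.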

To check whether later Hive versions have fixed this, I downloaded Apache Hive 2.3.0 and ran the same test; the problem no longer occurs there.
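One further experiment, not tried in the original post, may help narrow the bug down on an affected cluster. The stack trace goes through VectorSelectOperator and VectorUDFAdaptor, which belong to Hive's vectorized execution path, and the table has 13 columns (indices 0 to 12), so index 13 is one past the last table column. That would be consistent with the vectorized batch lacking an extra output column for the CASE result, though this is an assumption, not something the post confirms. Turning vectorization off for the session and rerunning the query would show whether the failure is specific to that path:

```sql
-- Untested suggestion: disable vectorized execution for this session only.
SET hive.vectorized.execution.enabled=false;

-- Rerun the failing query against the transactional table.
SELECT zdate,
       CASE zdate WHEN '2013.07.23' THEN 'a' ELSE 'b' END AS zfunc
FROM log
LIMIT 1;
```

If the query succeeds with vectorization off, the setting could serve as a stopgap until an upgrade, at the cost of slower scans on ORC tables.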
