[Repost] Deep Neural Networks in Kaldi

Reposted from: http://blog.csdn.net/wbgxx333/article/details/41019453

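Deep neural networks (DNNs) are currently the hottest topic in speech recognition. Since 2010, many papers on deep neural networks have been published in this field, and large technology companies such as Google and Microsoft have started using DNNs in their production systems. (Translator's note: for Google this is presumably Google Now; for Microsoft, presumably the speech recognition built into Windows 7 and Windows 8 and its SDK.)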

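However, such an active research area is hard for a toolkit like Kaldi to support well: the state of the art changes constantly, so the code has to keep up with it and the architecture of the code may need to be rethought.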

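Kaldi currently provides two separate DNN codebases. One lives in nnet/ and nnetbin/ under the source directory and is maintained by Karel Vesely. The other lives in nnet-cpu/ and nnet-cpubin/ and is maintained by Daniel Povey (it started from an earlier version of Karel's code and was then rewritten). Both codebases are official, and both will continue to be developed.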

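Neural network example scripts can be found in the example directories, such as egs/wsj/s5/, egs/rm/s5, egs/swbd/s5 and egs/hkust/s5b. Karel's example scripts are local/run_dnn.sh or local/run_nnet.sh, and Dan's example script is local/run_nnet_cpu.sh. Before running any of these scripts, run.sh must be run first to build the system used for alignment.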
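The run order described above can be sketched as a small helper that only assembles the commands. The function name `dnn_commands`, the labels `"karel"` and `"dan"`, and the `./run.sh` invocation style are assumptions made for illustration; which of the scripts actually exists varies by recipe, so this shows the ordering only and is not a launcher.

```python
from pathlib import PurePosixPath
from typing import List

# Example scripts named in the text, keyed by a hypothetical label.
DNN_SCRIPTS = {
    "karel": ["local/run_dnn.sh", "local/run_nnet.sh"],
    "dan": ["local/run_nnet_cpu.sh"],
}


def dnn_commands(recipe_dir: str, impl: str, script_index: int = 0) -> List[str]:
    """Return the shell commands, in order, for one recipe directory."""
    if impl not in DNN_SCRIPTS:
        raise ValueError(f"unknown implementation: {impl!r}")
    script = DNN_SCRIPTS[impl][script_index]
    recipe = PurePosixPath(recipe_dir)
    # run.sh comes first; the DNN script is then run from the same directory.
    return [f"cd {recipe} && ./run.sh", f"cd {recipe} && {script}"]


for cmd in dnn_commands("egs/wsj/s5", "dan"):
    print(cmd)
```

Returning the commands as strings instead of executing them keeps the sketch safe to run on a machine without Kaldi installed.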

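We will publish detailed documentation for both neural network setups soon. For now, here is a summary of the most important differences between them:

1. Karel's code uses GPU-accelerated, single-threaded SGD training, whereas Dan's code uses multiple threads across multiple CPUs;

2. Karel's code supports discriminative training, whereas Dan's code does not.

Beyond these, there are many smaller differences in architecture.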

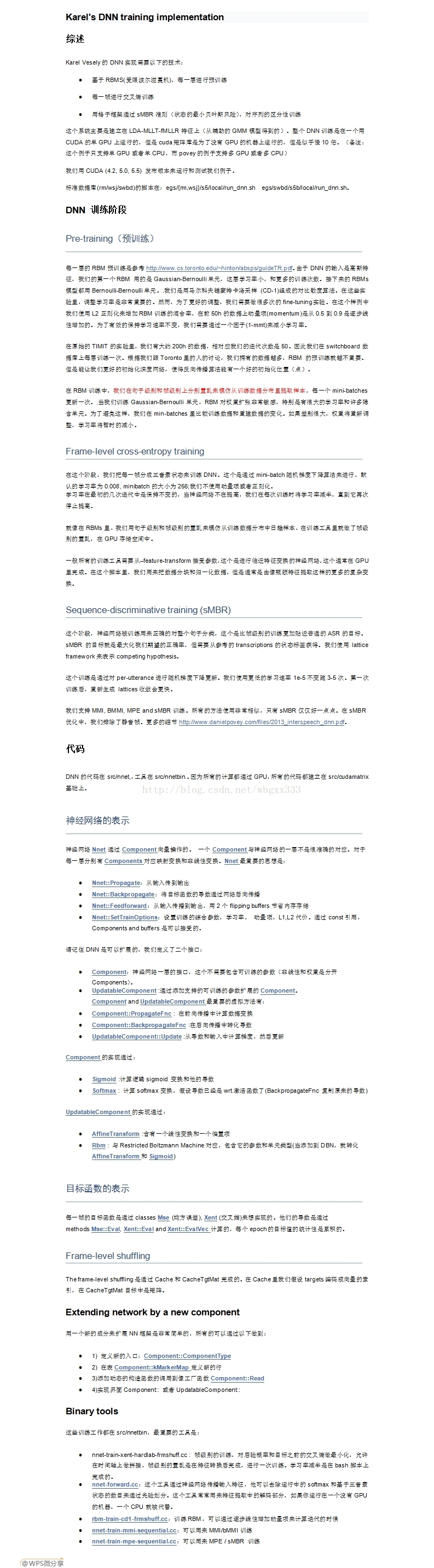

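We hope to add more documentation for these libraries. For Karel's version there is some slightly outdated documentation at "Karel's DNN training implementation".

A Chinese translation is available at: http://blog.csdn.net/wbgxx333/article/details/24438405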

-------------------------------------------------------------------------------------------------------------------------------------------------------

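I stumbled on this last night: thanks to the contribution of Dr. Yajie Miao (苗亚杰) at CMU, there is another deep learning module we can use with Kaldi. My earlier attempts to use a DBN with HTK have not succeeded yet, and I hope to get that working soon. Below is the introduction to Kaldi+PDNN from Dr. Miao's home page. I hope everyone will contribute what they can, so that those of us who are students can learn more.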

Kaldi+PDNN -- Implementing DNN-based ASR Systems with Kaldi and PDNN

Overview

Kaldi+PDNN contains a set of fully-fledged Kaldi ASR recipes, which realize DNN-based acoustic modeling using the PDNN toolkit. The overall pipeline has 3 stages:

1. The initial GMM model is built with the existing Kaldi recipes
2. DNN acoustic models are trained by PDNN
3. The trained DNN model is ported back to Kaldi for hybrid decoding or further tandem system building

Highlights

- Model diversity. Deep Neural Networks (DNNs); Deep Bottleneck Features (DBNFs); Deep Convolutional Networks (DCNs)
- PDNN toolkit. Easy and fast to implement new DNN ideas
- Open license. All the code is released under Apache 2.0, the same license as Kaldi
- Consistency with Kaldi. Recipes follow the Kaldi style and can be integrated seamlessly with the existing setups

Release Log

- Dec 2013 --- version 1.0 (the initial release)
- Feb 2014 --- version 1.1 (clean up the scripts, add the dnn+fbank recipe run-dnn-fbank.sh, enrich PDNN)

Requirements

1. A GPU card should be available on your computing machine.
2. Initial model building should be run, ideally up to train_sat and align_fmllr
3. Software Requirements:
   * Theano. For information about Theano installation on Ubuntu Linux, refer to this document edited by Wonkyum Lee from CMU.
   * pfile_utils. This script (that is, kaldi-trunk/tools/install_pfile_utils.sh) installs pfile_utils automatically.

Download

Kaldi+PDNN is hosted on Sourceforge. You can enter your Kaldi Switchboard setup (such as egs/swbd/s5b) and download the latest version via svn:

Now the new run-*.sh scripts appear in your setup. You can run them directly.

Recipes

Experiments & Results

The recipes are developed based on the Kaldi 110-hour Switchboard setup. This is the standard system you can get if you run egs/swbd/s5b/run.sh. Our experiments follow configurations similar to those described in this paper. We have the following data partitions. The "validation" set is used to measure frame accuracy and determine termination in DNN fine-tuning.

- training -- train_100k_nohup (110 hours)
- validation -- train_dev_nohup
- testing -- eval2000 (HUB5'00)

Our hybrid recipe run-dnn.sh is giving WER comparable with this paper (Table 5 for fMLLR features). We are confident that our recipes perform comparably with the Kaldi internal DNN setups.
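The role of the validation set in fine-tuning can be illustrated with a small sketch. The stopping rule used here (stop once validation frame accuracy improves by less than a threshold between epochs) and the names `frame_accuracy`, `should_stop` and `min_gain` are assumptions for illustration only; they are not taken from PDNN, whose actual termination criterion may differ.

```python
from typing import Sequence


def frame_accuracy(predicted: Sequence[int], reference: Sequence[int]) -> float:
    """Fraction of frames whose predicted label matches the reference alignment."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    if not reference:
        raise ValueError("no frames to score")
    correct = sum(p == r for p, r in zip(predicted, reference))
    return correct / len(reference)


def should_stop(val_accuracies: Sequence[float], min_gain: float = 0.001) -> bool:
    """Hypothetical rule: stop when the latest epoch gained less than min_gain."""
    if len(val_accuracies) < 2:
        return False
    return val_accuracies[-1] - val_accuracies[-2] < min_gain


# Toy usage: per-epoch validation frame accuracy (made-up numbers).
history = []
for acc in [0.40, 0.45, 0.47, 0.4705]:
    history.append(acc)
    if should_stop(history):
        break
print(len(history))
```

Measuring on held-out frames, not on the training frames, is what lets the rule detect that further fine-tuning has stopped helping.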

Want to Contribute?

We look forward to your contributions. Improvement can be made on the following aspects (but not limited to):

1. Optimization to the above recipes
2. New recipes
3. Porting the recipes to other datasets
4. Experiments and results
5. Contributions to the PDNN toolkit

Contact Yajie Miao (ymiao@cs.cmu.edu) if you have any questions or suggestions.

That is Dr. Miao's introduction. For details, see http://www.cs.cmu.edu/~ymiao/kaldipdnn.html

It is fairly involved; I will look into it in more depth when I have time.
