Self-Taught Learning

the promise of self-taught learning and unsupervised feature learning is that if we can get our algorithms to learn from unlabeled data, then we can easily obtain and learn from massive amounts of it.Even though a single unlabeled example is less informative than a single labeled example, if we can get tons of the former---for example, by downloading random unlabeled images/audio clips/text documents off the internet---and if our algorithms can exploit this unlabeled data effectively, then we might be able to achieve better performance than the massive hand-engineering and massive hand-labeling approaches.

Learning features

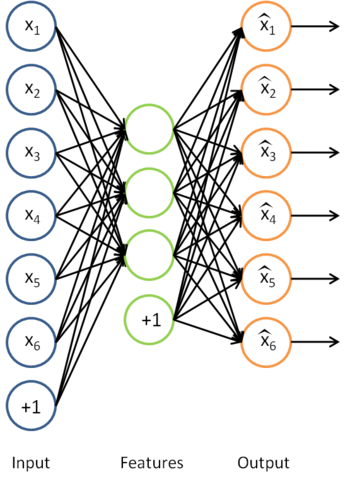

We have already seen how an autoencoder can be used to learn features from unlabeled data. Concretely, suppose we have an unlabeled training set

with

unlabeled examples. (The subscript "u" stands for "unlabeled.") We can then train a sparse autoencoder on this data (perhaps with appropriate whitening or other pre-processing):

Having trained the parameters

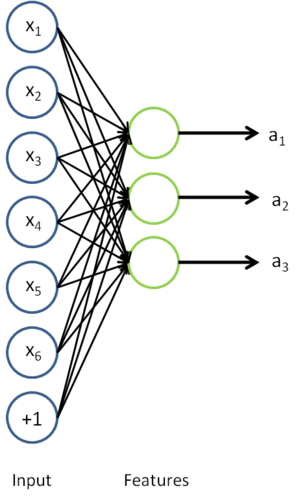

of this model, given any new input

, we can now compute the corresponding vector of activations

of the hidden units. As we saw previously, this often gives a better representation of the input than the original raw input

. We can also visualize the algorithm for computing the features/activations

as the following neural network:

This is just the sparse autoencoder that we previously had, with with the final layer removed.

Now, suppose we have a labeled training set

of

examples. (The subscript "l" stands for "labeled.") We can now find a better representation for the inputs. In particular, rather than representing the first training example as

, we can feed

as the input to our autoencoder, and obtain the corresponding vector of activations

. To represent this example, we can either just replace the original feature vector with

. Alternatively, we can concatenate the two feature vectors together, getting a representation

.

Thus, our training set now becomes

(if we use the replacement representation, and use

to represent the

-th training example), or

(if we use the concatenated representation). In practice, the concatenated representation often works better; but for memory or computation representations, we will sometimes use the replacement representation as well.

Finally, we can train a supervised learning algorithm such as an SVM, logistic regression, etc. to obtain a function that makes predictions on the

values. Given a test example

, we would then follow the same procedure: For feed it to the autoencoder to get

. Then, feed either

or

to the trained classifier to get a prediction.

On pre-processing the data

During the feature learning stage where we were learning from the unlabeled training set

, we may have computed various pre-processing parameters. For example, one may have computed a mean value of the data and subtracted off this mean to perform mean normalization, or used PCA to compute a matrix

to represent the data as

(or used PCA whitening or ZCA whitening). If this is the case, then it is important to save away these preprocessing parameters, and to use the same parameters during the labeled training phase and the test phase, so as to make sure we are always transforming the data the same way to feed into the autoencoder. In particular, if we have computed a matrix

using the unlabeled data and PCA, we should keep the same matrix

and use it to preprocess the labeled examples and the test data. We should not re-estimate a different

matrix (or data mean for mean normalization, etc.) using the labeled training set, since that might result in a dramatically different pre-processing transformation, which would make the input distribution to the autoencoder very different from what it was actually trained on.

On the terminology of unsupervised feature learning

There are two common unsupervised feature learning settings, depending on what type of unlabeled data you have. The more general and powerful setting is the self-taught learning setting, which does not assume that your unlabeled data xu has to be drawn from the same distribution as your labeled data xl. The more restrictive setting where the unlabeled data comes from exactly the same distribution as the labeled data is sometimes called the semi-supervised learning setting. This distinctions is best explained with an example, which we now give.

Suppose your goal is a computer vision task where you'd like to distinguish between images of cars and images of motorcycles; so, each labeled example in your training set is either an image of a car or an image of a motorcycle. Where can we get lots of unlabeled data? The easiest way would be to obtain some random collection of images, perhaps downloaded off the internet. We could then train the autoencoder on this large collection of images, and obtain useful features from them. Because here the unlabeled data is drawn from a different distribution than the labeled data (i.e., perhaps some of our unlabeled images may contain cars/motorcycles, but not every image downloaded is either a car or a motorcycle), we call this self-taught learning.

In contrast, if we happen to have lots of unlabeled images lying around that are all images of either a car or a motorcycle, but where the data is just missing its label (so you don't know which ones are cars, and which ones are motorcycles), then we could use this form of unlabeled data to learn the features. This setting---where each unlabeled example is drawn from the same distribution as your labeled examples---is sometimes called the semi-supervised setting. In practice, we often do not have this sort of unlabeled data (where would you get a database of images where every image is either a car or a motorcycle, but just missing its label?), and so in the context of learning features from unlabeled data, the self-taught learning setting is more broadly applicable.

自学习 VS 半监督学习

半监督学习假设,未标记数据和已标记数据拥有相同的数据分布

Self-Taught Learning的更多相关文章

- 一个Self Taught Learning的简单例子

idea: Concretely, for each example in the the labeled training dataset xl, we forward propagate the ...

- The Brain vs Deep Learning Part I: Computational Complexity — Or Why the Singularity Is Nowhere Near

The Brain vs Deep Learning Part I: Computational Complexity — Or Why the Singularity Is Nowhere Near ...

- What is machine learning?

What is machine learning? One area of technology that is helping improve the services that we use on ...

- How do I learn machine learning?

https://www.quora.com/How-do-I-learn-machine-learning-1?redirected_qid=6578644 How Can I Learn X? ...

- (转) Ensemble Methods for Deep Learning Neural Networks to Reduce Variance and Improve Performance

Ensemble Methods for Deep Learning Neural Networks to Reduce Variance and Improve Performance 2018-1 ...

- A Brief Overview of Deep Learning

A Brief Overview of Deep Learning (This is a guest post by Ilya Sutskever on the intuition behind de ...

- 5 Techniques To Understand Machine Learning Algorithms Without the Background in Mathematics

5 Techniques To Understand Machine Learning Algorithms Without the Background in Mathematics Where d ...

- 深度学习Deep learning

In the last chapter we learned that deep neural networks are often much harder to train than shallow ...

- 【转】The most comprehensive Data Science learning plan for 2017

I joined Analytics Vidhya as an intern last summer. I had no clue what was in store for me. I had be ...

- Neural Networks and Deep Learning

Neural Networks and Deep Learning This is the first course of the deep learning specialization at Co ...

随机推荐

- 安装meteor运行基本demo发生错误。

bogon:~ paul$ curl https://install.meteor.com/ | sh % Total % Received % Xferd Average Speed Time Ti ...

- VS2013+PTVS,python编码问题

1.调试,input('中文'),乱码2.调试,print('中文'),正常3.不调试,input('中文'),正常4.不调试,print('中文'),正常 页面编码方式已经加了"# -- ...

- XML与Plist文件转换

由于工作需要,要解析xml,举一个简单的例子,例如地址,如果是plist的话我们会很好的解析,但是如果已知一个xml的话,当然用原生的xml解析也能解析的出来,但是解析xml的话会是根据标签的头来解析 ...

- DefaultView 的作用(对DataSet查询出的来数据进行排序)

DefaultView 的作用 收藏 一直以来在对数据进行排序, 条件查询都是直接重复构建SQL来进行, 在查询次数和数据量不多的情况下倒没觉得什么, 但慢慢得, 当程序需要对大量数据椐不同条件 ...

- PostgreSQL Replication之第八章 与pgbouncer一起工作(2)

8.2 安装pgbouncer 在我们深入细节之前,我们将看看如何安装pgbouncer.正如PostgreSQL一样,您可以采取两种途径.您可以安装二进制包或者直接从源代码编译.在我们的例子中,我们 ...

- 排序算法(Apex 语言)

/* Code function : 冒泡排序算法 冒泡排序的优点:每进行一趟排序,就会少比较一次,因为每进行一趟排序都会找出一个较大值 时间复杂度:O(n*n) 空间复杂度:1 */ List< ...

- Java获取电脑硬件信息

package com.szht.gpy.util; import java.applet.Applet; import java.awt.Graphics; import java.io.Buffe ...

- Nginx的日志管理

vim /usr/local/nginx/conf/nginx.conf #编辑 nginx 配置文件 server{ ...

- NodeJS学习笔记 (17)集群-cluster(ok)

cluster模块概览 node实例是单线程作业的.在服务端编程中,通常会创建多个node实例来处理客户端的请求,以此提升系统的吞吐率.对这样多个node实例,我们称之为cluster(集群). 借助 ...

- 洛谷1462 通往奥格瑞玛的道路 最短路&&二分

SPFA和二分的使用 跑一下最短路看看能不能回到奥格瑞玛,二分收费最多的点 #include<iostream> #include<cstdio> #include<cs ...