drbd+nfs+keepalived

Very detailed write-up on the theory:

https://www.cnblogs.com/kevingrace/p/5740940.html

Very detailed write-up on the load-balancing setup:

https://www.cnblogs.com/kevingrace/p/5740953.html

The actual steps are as follows:

1. Set the hostnames

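11.11.11.2 primary server, hostname: Primary

11.11.11.3 backup server, hostname: Secondary

11.11.11.4 VIP (most of the work is done on the two machines above; the two below only help with testing)

11.11.11.8 web server running IIS (used for the NFS mount test)

11.11.11.9 nginx reverse proxy with caching, hostname: LB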

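2. The firewalls on the two machines must allow traffic from each other. It is best to disable SELinux and the iptables firewall (same steps on both machines).

[root@Primary ~]# setenforce 0    # temporary; to disable it permanently, set SELINUX=disabled in /etc/sysconfig/selinux

[root@Primary ~]# /etc/init.d/iptables stop    # CentOS 6 style; on CentOS 7 the default firewall is firewalld: systemctl stop firewalld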

3. Set up the hosts file (same on both machines)

[root@primary ~]# cat /etc/hosts

**********

11.11.11.2 primary

11.11.11.3 secondary
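The hosts entries above can also be added idempotently, so running this step twice on a machine does not duplicate lines. This is a minimal sketch that assumes bash and awk. `ensure_host_entry` is a hypothetical helper name, and the optional third argument lets you try it on a scratch file instead of /etc/hosts:

```shell
# Sketch: append "IP NAME" to a hosts-style file unless NAME is already mapped.
# Third argument defaults to /etc/hosts; pass a scratch file to try it safely.
ensure_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # Look for NAME as a whole field (hostname or alias) on any non-comment line.
    if [ -f "$file" ] && awk -v n="$name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        return 0   # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

On both machines you would run `ensure_host_entry 11.11.11.2 primary` and `ensure_host_entry 11.11.11.3 secondary`.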

4. Synchronize the time on both machines

[root@Primary ~]# yum install -y ntpdate

[root@Primary ~]# ntpdate -u ntp1.aliyun.com

5. Install DRBD (same steps on both machines)

rpm -ivh https://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

yum list drbd*

yum install -y drbd84-utils kmod-drbd84

Load the module:

[root@Primary ~]# modprobe drbd

Check that the module is loaded

[root@Primary ~]# lsmod |grep drbd

drbd
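`lsmod | grep drbd` also matches any module whose name merely contains "drbd". A stricter check reads /proc/modules, where the first field of every line is the module name. This is a minimal sketch. `module_loaded` is a hypothetical helper, and its second argument exists only so the function can be tried on sample text:

```shell
# Sketch: succeed if a kernel module is currently loaded.
# /proc/modules lists one module per line, with the name in the first field.
module_loaded() {
    local mod="$1" table="${2:-/proc/modules}"
    awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$table"
}
```

Typical use: `module_loaded drbd || modprobe drbd`.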

6. Configure DRBD (same steps on both machines)

[root@primary ~]# cat /etc/drbd.conf

# You can find an example in /usr/share/doc/drbd.../drbd.conf.example

include "drbd.d/global_common.conf";    # the two main config files; this one defines the global policy

include "drbd.d/*.res";    # this one defines the disks (resources) to use

[root@primary ~]# cp /etc/drbd.d/global_common.conf /etc/drbd.d/global_common.conf.bak

[root@primary ~]# cat /etc/drbd.d/global_common.conf

global {

usage-count no;

udev-always-use-vnr;

}

common {

protocol C;

handlers { }

startup {

wfc-timeout 12;

degr-wfc-timeout 12;

outdated-wfc-timeout 120;

}

disk {

on-io-error detach;

}

net {

cram-hmac-alg md5;

sndbuf-size 512k;

shared-secret "testdrbd";

}

syncer {

rate 300M;

}

}

[root@primary ~]#

[root@primary ~]# cat /etc/drbd.d/r0.res

resource r0 {

on primary {

device /dev/drbd0;

disk /dev/sdb1;    # the disk must be partitioned but not formatted

address 11.11.11.2:;

meta-disk internal;

}

on secondary {

device /dev/drbd0;

disk /dev/sdb1;

address 11.11.11.3:;

meta-disk internal;

}

}

[root@primary ~]#
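DRBD uses the `on` section whose name matches the node's hostname (the output of `uname -n`). This article uses both "Primary" and "primary" in its prompts, so it is worth checking the names before running `drbdadm create-md`. This small sketch assumes the `on <name> {` layout used in r0.res above. `res_hosts` and `res_has_this_host` are hypothetical helpers, and they compare names exactly, including case:

```shell
# Sketch: print the host names from the "on <name> {" lines of a DRBD .res file.
res_hosts() {
    awk '$1 == "on" && $3 == "{" { print $2 }' "$1"
}

# Succeed if the given name (default: this machine's uname -n) has an "on" section.
# The comparison is exact, including upper/lower case.
res_has_this_host() {
    local res="$1" me="${2:-$(uname -n)}"
    res_hosts "$res" | grep -qxF -- "$me"
}
```

Typical use: `res_has_this_host /etc/drbd.d/r0.res || echo "no 'on $(uname -n)' section in r0.res"`.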

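7. Add the DRBD disk on both machines

On the Primary machine, add a 30 GB disk for DRBD and create one partition on it, /dev/sdb1. Do not format it. Create a local /data directory, but do not mount anything on it.

[root@Primary ~]# fdisk -l

......

[root@Primary ~]# fdisk /dev/sdb

Enter, in order: n -> p -> 1 -> Enter (default first sector) -> Enter (default last sector, i.e. the whole disk) -> w. After the partition is created, run "fdisk /dev/sdb" again and enter p to see the new partition, /dev/sdb1.

On the Secondary machine, do the same: add a 30 GB disk for DRBD, create the partition /dev/sdb1 without formatting it, and create a local /data directory without mounting anything on it.

[root@Secondary ~]# fdisk -l

......

[root@Secondary ~]# fdisk /dev/sdb

Enter, in order: n -> p -> 1 -> Enter -> Enter -> w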

8. Create the DRBD device and initialize resource r0 on both machines (run the following on both)

[root@Primary ~]# mknod /dev/drbd0 b 147 0

mknod: `/dev/drbd0': File exists

[root@Primary ~]# drbdadm create-md r0

Writing meta data...

initializing activity log

NOT initialized bitmap

New drbd meta data block successfully created.

Running create-md a second time asks before overwriting the existing metadata:

[root@Primary ~]# drbdadm create-md r0

You want me to create a v08 style flexible-size internal meta data block.

There appears to be a v08 flexible-size internal meta data block

already in place on /dev/vdd1 at byte offset

Do you really want to overwrite the existing v08 meta-data?

[need to type 'yes' to confirm] yes    # type "yes" here

Writing meta data...

initializing activity log

NOT initialized bitmap

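New drbd meta data block successfully created.

Start the DRBD service (note: it must be started on both the primary and the secondary before it takes effect)

service drbd start

Check the status (on both machines)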

[root@primary ~]# cat /proc/drbd

version: 8.4.11-1 (api:1/proto:86-101)

GIT-hash: 66145a308421e9c124ec391a7848ac20203bb03c build by mockbuild@, 2018-11-03 01:26:55

0: cs:SyncSource ro:Secondary/Secondary ds:UpToDate/Inconsistent C r-----    # both nodes show Secondary; the data is still syncing, and once the sync finishes the first node is promoted to Primary

ns:3472496 nr:0 dw:0 dr:3472496 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:1594256

[============>.......] sync'ed: 68.6% (1556/4948)M

finish: 0:00:55 speed: 28,840 (27,776) K/sec

[root@primary ~]#

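In the DRBD status of the two hosts above, ro:Secondary/Secondary means both hosts are in the secondary role. ds is the disk state. It shows Inconsistent because DRBD cannot tell which side is the primary, i.e. whose disk data should be taken as the reference.

9. Next, make the Primary host the DRBD primary node

[root@Primary ~]# drbdsetup /dev/drbd0 primary --force    # with DRBD 8.4 the usual form is: drbdadm primary --force r0

Check the DRBD status on the primary and the secondary: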

[root@primary ~]# cat /proc/drbd

version: 8.4.- (api:/proto:-)

GIT-hash: 66145a308421e9c124ec391a7848ac20203bb03c build by mockbuild@, -- ::

: cs:Connected ro:Primary/Secondary ds:UpToDate/UpToDate C r-----

ns: nr: dw: dr: al: bm: lo: pe: ua: ap: ep: wo:f oos:

[root@secondary ~]# cat /proc/drbd

version: 8.4.- (api:/proto:-)

GIT-hash: 66145a308421e9c124ec391a7848ac20203bb03c build by mockbuild@, -- ::

: cs:Connected ro:Secondary/Primary ds:UpToDate/UpToDate C r-----

ns: nr: dw: dr: al: bm: lo: pe: ua: ap: ep: wo:f oos:

[root@secondary ~]#

ro shows Primary/Secondary on the primary and Secondary/Primary on the secondary,

and ds shows UpToDate/UpToDate, which means the primary/secondary setup succeeded.
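Instead of reading /proc/drbd by eye, a script (for example a health check) can extract the cs/ro/ds fields. This is a minimal sketch for the DRBD 8.x /proc/drbd layout shown above: a line starting with `<minor>:` followed by `key:value` fields. `drbd_field` is a hypothetical helper:

```shell
# Sketch: print one key:value field (cs, ro, ds, ...) of a DRBD minor from
# /proc/drbd-style text (DRBD 8.x layout).
# Args: key, minor (default 0), source file (default /proc/drbd).
drbd_field() {
    local key="$1" minor="${2:-0}" src="${3:-/proc/drbd}"
    awk -v k="$key:" -v m="$minor:" '
        $1 == m {
            for (i = 2; i <= NF; i++)
                if (index($i, k) == 1) { print substr($i, length(k) + 1); exit }
        }' "$src"
}
```

For example, `[ "$(drbd_field ds)" = "UpToDate/UpToDate" ] && echo "both disks are UpToDate"`.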

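10. Mount DRBD (on the Primary node)

The DRBD status of the Primary node shows that the device is not mounted yet (the mounted and fstype fields are empty), so this step mounts DRBD on a local directory.

First format /dev/drbd0

[root@Primary ~]# mkfs.ext4 /dev/drbd0

Create the mount point, then mount DRBD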

[root@Primary ~]# mkdir /data

[root@Primary ~]# mount /dev/drbd0 /data

[root@primary ~]# df -h

文件系统 容量 已用 可用 已用% 挂载点

/dev/mapper/centos-root 17G .4G 16G % /

devtmpfs 899M 899M % /dev

/dev/sda1 1014M 145M 870M % /boot

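/dev/drbd0 30G .7G 27G % /data

Important:

No operation of any kind is allowed on the DRBD device on the Secondary node, not even read-only access. All reads and writes can only be done on the Primary node.

The Secondary node can be promoted to Primary only when the Primary node has gone down (or has been demoted, as in the test below).

11. DRBD primary/backup failover test

Simulate a failure of the Primary node: the Secondary takes over and is promoted to Primary.

The following was run on the Primary node: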

[root@Primary ~]# cd /data

[root@Primary data]# touch wangshibo wangshibo1 wangshibo2 wangshibo3

[root@Primary data]# cd ../

[root@Primary /]# umount /data

[root@Primary /]# drbdsetup /dev/drbd0 secondary    # demote the Primary host to DRBD secondary. In a real production failure you would simply promote the Secondary host (make it the primary) directly.

[root@Primary /]# /etc/init.d/drbd status

drbd driver loaded OK; device status:

version: 8.3. (api:/proto:-)

GIT-hash: a798fa7e274428a357657fb52f0ecf40192c1985 build by phil@Build64R6, -- ::

m:res cs ro ds p mounted fstype

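:r0 Connected Secondary/Secondary UpToDate/UpToDate C

Note: in a real production environment, if the Primary node goes down, ro in the Secondary's status shows Secondary/Unknown. All you need to do then is promote DRBD on the Secondary.

The following was run on the Secondary (backup) node:

First promote it, i.e. manually upgrade the Secondary to DRBD primary node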

[root@Secondary ~]# drbdsetup /dev/drbd0 primary

[root@Secondary ~]# /etc/init.d/drbd status

drbd driver loaded OK; device status:

version: 8.3. (api:/proto:-)

GIT-hash: a798fa7e274428a357657fb52f0ecf40192c1985 build by phil@Build64R6, -- ::

m:res cs ro ds p mounted fstype

:r0 Connected Primary/Secondary UpToDate/UpToDate C

Then mount DRBD

[root@Secondary ~]# mkdir /data

[root@Secondary ~]# mount /dev/drbd0 /data

[root@Secondary ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup00-LogVol00

156G 13G 135G % /

tmpfs .9G .9G % /dev/shm

/dev/vda1 190M 89M 92M % /boot

/dev/vdd .8G 23M .2G % /data2

/dev/drbd0 .8G 23M .2G % /data

The DRBD mount point already contains the files written earlier on the remote Primary host

[root@Secondary ~]# cd /data

[root@Secondary data]# ls

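wangshibo wangshibo1 wangshibo2 wangshibo3

Keep writing data on the Secondary node

[root@Secondary data]# touch huanqiu huanqiu1 huanqiu2 huanqiu3

Then simulate a failure of the Secondary node and promote the Primary back to DRBD primary (same steps as above, omitted here).

This completes the deployment of the DRBD primary/secondary environment. The steps above, however, are a manual switchover. To make DRBD fail over automatically for high availability, you also need Keepalived or Heartbeat (when the DRBD primary goes down, it automatically promotes the secondary and mounts the /data partition).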
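The manual switchover from step 11 can be written down as two small functions, so the order is always the same: unmount then demote, promote then mount. This is a sketch under stated assumptions. Resource r0, /dev/drbd0 and /data come from this article, `run`/`demote`/`promote` are hypothetical names, and setting DRY_RUN=1 only prints the commands instead of running them:

```shell
# Sketch of the manual switchover in step 11. RES, DEV and MNT default to the
# values used in this article; run/demote/promote are hypothetical helper names.
RES=${RES:-r0}
DEV=${DEV:-/dev/drbd0}
MNT=${MNT:-/data}

# Execute a command, or only print it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# On the node giving up the primary role: unmount first, then demote.
demote() {
    run umount "$MNT" && run drbdadm secondary "$RES"
}

# On the node taking over: promote first, then mount.
promote() {
    run drbdadm primary "$RES" && run mkdir -p "$MNT" && run mount "$DEV" "$MNT"
}
```

Run `demote` on the current primary first, then `promote` on the other node. `DRY_RUN=1 promote` shows what would be executed. Because the steps are chained with &&, /data is not mounted if DRBD refuses the promotion.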

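12. Install NFS

Approach:

1) Install keepalived on both machines; the VIP is 11.11.11.4.

2) Use the DRBD mount point /data as the NFS export directory. Remote clients mount NFS through the VIP.

3) When the Primary host goes down or NFS fails, the Secondary host is promoted to DRBD primary, and the VIP moves over to it as well.

When the Primary host recovers, it becomes the DRBD primary again and takes the VIP back. This provides failover.

The Primary host (11.11.11.2) is the DRBD primary node by default; the DRBD mount point is /data.

The Secondary host (11.11.11.3) is the DRBD backup node.

Install NFS on both the Primary and Secondary hosts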

[root@Primary ~]# yum install rpcbind nfs-utils

[root@Primary ~]# vim /etc/exports

/data 11.11.11.0/(rw,sync,no_root_squash)

service rpcbind start

service nfs start

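Stop the iptables firewall on both hosts

It is best to turn the firewall off; otherwise clients may fail to mount NFS!

If you keep the firewall on, open the NFS-related ports in iptables and allow the VRRP multicast address.

[root@Primary ~]# /etc/init.d/iptables stop

SELinux must be disabled on both machines!

Otherwise the scripts configured in keepalived.conf below, such as notify_master.sh, will fail to run. This is a pitfall I ran into myself.

[root@Primary ~]# setenforce 0    # temporary; to disable it permanently, also set SELINUX to disabled in /etc/sysconfig/selinux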

[root@Primary ~]# getenforce

Permissive

13. Install Keepalived on both hosts to provide automatic failover

13.1 Primary-side configuration

Install Keepalived

[root@Primary ~]# yum install -y openssl-devel popt-devel

[root@Primary ~]# cd /usr/local/src/

[root@Primary src]# wget http://www.keepalived.org/software/keepalived-1.3.5.tar.gz

[root@Primary src]# tar -zvxf keepalived-1.3.5.tar.gz

[root@Primary src]# cd keepalived-1.3.5

[root@Primary keepalived-1.3.5]# ./configure --prefix=/usr/local/keepalived

[root@Primary keepalived-1.3.5]# make && make install

[root@Primary keepalived-1.3.5]# cp /usr/local/src/keepalived-1.3.5/keepalived/etc/init.d/keepalived /etc/rc.d/init.d/

[root@Primary keepalived-1.3.5]# cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

[root@Primary keepalived-1.3.5]# mkdir /etc/keepalived/

[root@Primary keepalived-1.3.5]# cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

[root@Primary keepalived-1.3.5]# cp /usr/local/keepalived/sbin/keepalived /usr/sbin/

[root@Primary keepalived-1.3.5]# echo "/etc/init.d/keepalived start" >> /etc/rc.local

[root@Primary keepalived-1.3.5]# chmod +x /etc/rc.d/init.d/keepalived    # make it executable

[root@Primary keepalived-1.3.5]# chkconfig keepalived on    # start at boot

[root@Primary keepalived-1.3.5]# service keepalived start    # start

[root@Primary keepalived-1.3.5]# service keepalived stop    # stop

[root@Primary keepalived-1.3.5]# service keepalived restart    # restart

The steps above compile Keepalived from source. Alternatively, install it with yum:

yum install -y openssl-devel popt-devel

yum install keepalived

----------- keepalived.conf on the Primary host

[root@Primary ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf-bak

[root@primary ~]# cat /etc/keepalived/keepalived.conf

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout

router_id DRBD_HA_MASTER

}

vrrp_script chk_nfs {

script "/etc/keepalived/check_nfs.sh"

interval

}

vrrp_instance VI_1 {

state MASTER

# change ens33 to the name of your own network interface

interface ens33

virtual_router_id

priority

advert_int

authentication {

auth_type PASS

auth_pass

}

track_script {

chk_nfs

}

virtual_ipaddress {

11.11.11.4

}

nopreempt

notify_stop "/etc/keepalived/notify_stop.sh"

notify_master "/etc/keepalived/notify_master.sh"

}

Start the keepalived service

[root@primary ~]# service keepalived start

Redirecting to /bin/systemctl start keepalived.service

[root@primary ~]# ps -ef|grep keepalived

root 7314 1 0 14:22 ? 00:00:00 /usr/sbin/keepalived -D

root 7315 7314 0 14:22 ? 00:00:00 /usr/sbin/keepalived -D

root 7316 7314 0 14:22 ? 00:00:00 /usr/sbin/keepalived -D

root 7431 7119 0 14:22 pts/1 00:00:00 grep --color=auto keepalived

[root@primary ~]#

Check the VIP (for example with "ip addr")

1. Scripts on the primary

1) This script is configured only on the Primary machine

[root@primary ~]# cat /etc/keepalived/check_nfs.sh

#!/bin/bash

/sbin/service nfs status &>/dev/null

if [ $? -ne 0 ];then

### if the service is not healthy, first try restarting it

/sbin/service nfs restart

/sbin/service nfs status &>/dev/null

if [ $? -ne 0 ];then

### if nfs is still unhealthy after the restart:

### unmount the drbd device

umount /dev/drbd0

### demote drbd from primary to secondary

drbdadm secondary r0

# stop keepalived

/sbin/service keepalived stop

fi

fi

[root@Primary ~]# chmod 755 /etc/keepalived/check_nfs.sh
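The decision logic in check_nfs.sh (check, restart once, check again) can be pulled into a function so it can be tried without a real NFS server. This is a sketch that assumes bash. `nfs_status`, `nfs_restart` and `nfs_ok_after_retry` are hypothetical names, and the first two are meant to be redefined when testing:

```shell
# Sketch of check_nfs.sh's decision logic. nfs_status/nfs_restart wrap the
# commands from the article and can be redefined to test the logic.
nfs_status()  { /sbin/service nfs status; }
nfs_restart() { /sbin/service nfs restart; }

# Succeed if NFS is up, restarting it once if the first check fails.
nfs_ok_after_retry() {
    nfs_status >/dev/null 2>&1 && return 0
    nfs_restart >/dev/null 2>&1
    nfs_status >/dev/null 2>&1
}
```

Inside the script this would be used as `nfs_ok_after_retry || { umount /dev/drbd0; drbdadm secondary r0; /sbin/service keepalived stop; }`, which keeps the same behavior as the original.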

2) This script is configured only on the Primary machine

[root@Primary ~]# mkdir /etc/keepalived/logs

[root@primary ~]# cat /etc/keepalived/notify_stop.sh

#!/bin/bash

time=`date "+%F %H:%M:%S"`

echo -e "$time ------notify_stop------\n" >> /etc/keepalived/logs/notify_stop.log

/sbin/service nfs stop &>> /etc/keepalived/logs/notify_stop.log

/bin/umount /dev/drbd0 &>> /etc/keepalived/logs/notify_stop.log

/sbin/drbdadm secondary r0 &>> /etc/keepalived/logs/notify_stop.log

echo -e "\n" >> /etc/keepalived/logs/notify_stop.log

[root@primary ~]#

[root@Primary ~]# chmod 755 /etc/keepalived/notify_stop.sh

2. This script must be configured on both machines

[root@primary ~]# cat /etc/keepalived/notify_master.sh

#!/bin/bash

time=`date "+%F %H:%M:%S"`

echo -e "$time ------notify_master------\n" >> /etc/keepalived/logs/notify_master.log

/sbin/drbdadm primary r0 &>> /etc/keepalived/logs/notify_master.log

/bin/mount /dev/drbd0 /data &>> /etc/keepalived/logs/notify_master.log

/sbin/service nfs restart &>> /etc/keepalived/logs/notify_master.log

echo -e "\n" >> /etc/keepalived/logs/notify_master.log

[root@primary ~]#

[root@Primary ~]# chmod 755 /etc/keepalived/notify_master.sh
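The notify scripts all repeat the same pattern of appending each command's output to a log. A small helper can write a timestamp for each command and return that command's exit status, so the steps can be chained with &&. For example, /data is then not mounted if `drbdadm primary` failed. This is a sketch: `log_run` is a hypothetical name and is not part of keepalived:

```shell
# Sketch: append a timestamped header and the command's output to a log file,
# then return the command's own exit status.
log_run() {
    local log="$1"; shift
    printf '%s %s\n' "$(date '+%F %H:%M:%S')" "$*" >> "$log"
    "$@" >> "$log" 2>&1
}
```

In notify_master.sh this could read: `LOG=/etc/keepalived/logs/notify_master.log; log_run "$LOG" /sbin/drbdadm primary r0 && log_run "$LOG" /bin/mount /dev/drbd0 /data && log_run "$LOG" /sbin/service nfs restart`. The original script, by contrast, runs every step even if an earlier one failed.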

13.2 Secondary-side configuration

----------- keepalived.conf on the Secondary host

[root@Secondary ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf-bak

[root@secondary ~]# cat /etc/keepalived/keepalived.conf

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout

router_id DRBD_HA_BACKUP

}

vrrp_instance VI_1 {

state BACKUP

# change ens33 to the name of your own network interface

interface ens33

virtual_router_id

priority

advert_int

authentication {

auth_type PASS

auth_pass

}

nopreempt

# run this script when this machine becomes the keepalived MASTER

notify_master "/etc/keepalived/notify_master.sh"

# run this script when this machine becomes the keepalived BACKUP

notify_backup "/etc/keepalived/notify_backup.sh"

virtual_ipaddress {

11.11.11.4

}

}

Start the keepalived service

[root@Secondary ~]# chmod 755 /etc/keepalived/notify_master.sh

[root@Secondary ~]# chmod 755 /etc/keepalived/notify_backup.sh

[root@Secondary ~]# service keepalived start

Redirecting to /bin/systemctl start keepalived.service

1. This script is configured only on the Secondary machine

[root@Secondary ~]# mkdir /etc/keepalived/logs

[root@Secondary ~]# vim /etc/keepalived/notify_backup.sh

#!/bin/bash

time=`date "+%F %H:%M:%S"`

echo -e "$time ------notify_backup------\n" >> /etc/keepalived/logs/notify_backup.log

/sbin/service nfs stop &>> /etc/keepalived/logs/notify_backup.log

/bin/umount /dev/drbd0 &>> /etc/keepalived/logs/notify_backup.log

/sbin/drbdadm secondary r0 &>> /etc/keepalived/logs/notify_backup.log

echo -e "\n" >> /etc/keepalived/logs/notify_backup.log

[root@Secondary ~]# chmod 755 /etc/keepalived/notify_backup.sh

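14. Mount NFS on the remote clients

A client only needs the rpcbind service installed and running (the command below also installs nfs-utils)

[root@huanqiu ~]# yum install rpcbind nfs-utils

[root@huanqiu ~]# /etc/init.d/rpcbind start

Linux client:

umount /web/

mount -t nfs 11.11.11.4:/data /web

Windows Server 2012 client:

mount \\11.11.11.4\data -o nolock,rsize=,wsize=,timeo= z:

Works well: the share is barely interrupted during a switchover.

Recovering when the primary/secondary status shows UpToDate/DUnknown:

https://blog.csdn.net/kjsayn/article/details/52958282

On Windows, edit the registry if you have no write permission after mounting: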

https://jingyan.baidu.com/article/c910274bfd6800cd361d2df3.html

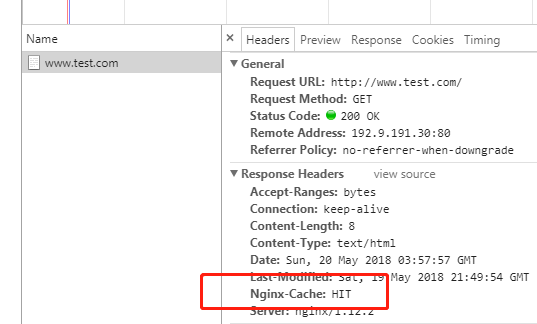

15. Front-end nginx cache

Create the cache directory

mkdir /cache

nginx -t

nginx -s reload

[root@localhost conf.d]# pwd

/etc/nginx/conf.d

[root@localhost conf.d]# cat web.conf

upstream node {

server 11.11.11.9:;

}

proxy_cache_path /cache levels=: keys_zone=cache:10m max_size=10g inactive=60m use_temp_path=off;

server {

listen ;

server_name www.test.com;

index index.html;

location / {

proxy_pass http://node;

proxy_cache cache;

proxy_cache_valid 12h;

proxy_cache_valid any 10m;

add_header Nginx-Cache "$upstream_cache_status";

proxy_next_upstream error timeout invalid_header http_500 http_502 http_503 http_504;

}

}

[root@localhost conf.d]#
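Because web.conf adds the Nginx-Cache header from $upstream_cache_status, you can check caching by requesting the same page twice and reading that header. For a cacheable response it typically shows MISS on the first request and HIT afterwards. This sketch extracts the header from a raw block of response headers. `cache_status` is a hypothetical helper. The usage line assumes the proxy listens on port 80, since the port is missing from the config above:

```shell
# Sketch: print the value of the Nginx-Cache response header (set from
# $upstream_cache_status in web.conf) from raw HTTP headers on stdin.
cache_status() {
    tr -d '\r' | awk -F': *' 'tolower($1) == "nginx-cache" { print $2; exit }'
}
```

Usage: `curl -s -o /dev/null -D - -H 'Host: www.test.com' http://11.11.11.9/ | cache_status`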

For more on nginx caching, see my other blog post.

15.2 Clearing the cache

Delete the cached data with rm

rm -rf /cache/*
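`rm -rf /cache/*` leaves hidden files behind. A path typed wrongly, or one taken from an empty variable, could also delete far more than intended. The following is a sketch of a guarded variant. `clear_cache` is a hypothetical helper, it assumes GNU find (for -mindepth and -delete), and /cache is the proxy_cache_path from web.conf:

```shell
# Sketch: delete everything inside the nginx cache directory (including
# hidden files) but keep the directory itself. Requires GNU find.
clear_cache() {
    local dir="${1:-/cache}"
    # Refuse anything that is not an existing directory, and never touch "/".
    if [ ! -d "$dir" ] || [ "$dir" = "/" ]; then
        echo "refusing to clear '$dir'" >&2
        return 1
    fi
    find "$dir" -mindepth 1 -delete
}
```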

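16. Testing

1)

First stop the keepalived service on the Primary host. The VIP moves to the Secondary host.

At the same time, NFS on the Primary is stopped automatically, and the Secondary is promoted to DRBD primary.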

[root@Primary ~]# /etc/init.d/keepalived stop

Stopping keepalived: [ OK ]

[root@Primary ~]# ip addr

: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN

link/loopback ::::: brd :::::

inet 127.0.0.1/ scope host lo

inet6 ::/ scope host

valid_lft forever preferred_lft forever

: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP qlen

link/ether fa::3e::d1:d6 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.151/ brd 192.168.1.255 scope global eth0

inet6 fe80::f816:3eff:fe35:d1d6/ scope link

valid_lft forever preferred_lft forever

The system log also shows the VIP moving:

[root@Primary ~]# tail - /var/log/messages

........

May :: localhost Keepalived_vrrp[]: Sending gratuitous ARP on eth0 for 192.168.1.200

May :: localhost Keepalived_vrrp[]: Sending gratuitous ARP on eth0 for 192.168.1.200

May :: localhost Keepalived_vrrp[]: Sending gratuitous ARP on eth0 for 192.168.1.200

May :: localhost Keepalived_vrrp[]: Sending gratuitous ARP on eth0 for 192.168.1.200

May :: localhost Keepalived[]: Stopping

May :: localhost Keepalived_vrrp[]: VRRP_Instance(VI_1) sent priority

May :: localhost Keepalived_vrrp[]: VRRP_Instance(VI_1) removing protocol VIPs.

[root@Primary ~]# ps -ef|grep nfs

root : pts/ :: grep --color nfs

[root@Primary ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup00-LogVol00

156G 36G 112G % /

tmpfs .9G .9G % /dev/shm

/dev/vda1 190M 98M 83M % /boot

[root@Primary ~]# /etc/init.d/drbd status

drbd driver loaded OK; device status:

version: 8.3. (api:/proto:-)

GIT-hash: a798fa7e274428a357657fb52f0ecf40192c1985 build by phil@Build64R6, -- ::

m:res cs ro ds p mounted fstype

:r0 Connected Secondary/Secondary UpToDate/UpToDate C

Log in to the Secondary machine: the VIP has moved over

[root@Secondary ~]# ip addr

: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN

link/loopback ::::: brd :::::

inet 127.0.0.1/ scope host lo

inet6 ::/ scope host

valid_lft forever preferred_lft forever

: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP qlen

link/ether fa::3e:4c:7e: brd ff:ff:ff:ff:ff:ff

inet 192.168.1.152/ brd 192.168.1.255 scope global eth0

inet 192.168.1.200/ scope global eth0

inet6 fe80::f816:3eff:fe4c:7e88/ scope link

valid_lft forever preferred_lft forever

[root@Secondary ~]# tail - /var/log/messages

........

May :: localhost Keepalived_vrrp[]: Sending gratuitous ARP on eth0 for 192.168.1.200

May :: localhost Keepalived_vrrp[]: Sending gratuitous ARP on eth0 for 192.168.1.200

May :: localhost Keepalived_vrrp[]: Sending gratuitous ARP on eth0 for 192.168.1.200

May :: localhost Keepalived_vrrp[]: Sending gratuitous ARP on eth0 for 192.168.1.200

May :: localhost Keepalived_vrrp[]: Sending gratuitous ARP on eth0 for 192.168.1.200

May :: localhost Keepalived_vrrp[]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth0 for 192.168.1.200

[root@Secondary ~]# ip addr

: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN

link/loopback ::::: brd :::::

inet 127.0.0.1/ scope host lo

inet6 ::/ scope host

valid_lft forever preferred_lft forever

: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP qlen

link/ether fa::3e:4c:7e: brd ff:ff:ff:ff:ff:ff

inet 192.168.1.152/ brd 192.168.1.255 scope global eth0

inet 192.168.1.200/ scope global eth0

inet6 fe80::f816:3eff:fe4c:7e88/ scope link

valid_lft forever preferred_lft forever

[root@Secondary ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup00-LogVol00

156G 13G 135G % /

tmpfs .9G .9G % /dev/shm

/dev/vda1 190M 89M 92M % /boot

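/dev/drbd0 .8G 23M .2G % /data

When keepalived on the Primary machine starts again, it takes the VIP back (see the /var/log/messages system log),

and the Primary becomes the DRBD primary node again.

2)

Stop the nfs service on the Primary host. The monitoring script tries to start nfs again. Only if that fails does it demote the DRBD primary to secondary and stop keepalived,

which then fails over in the same way as above.

Conclusion:

During the primary/backup switchovers above, clients that had mounted NFS could keep using it, with only a short delay.

This also confirms the data consistency DRBD provides (including the open and modification state of files, etc.). To a client, the whole switchover looks like "one NFS restart" (NFS stops on the primary and starts on the backup).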
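During the tests above, the quickest way to see which node holds the VIP is to look for it in the `ip` output. This sketch reads `ip -o addr show` output on stdin, where each line describes one address and the fourth field is address/prefix. `has_vip` is a hypothetical helper:

```shell
# Sketch: succeed if the given IPv4 address appears in `ip -o addr show`
# output read from stdin (field 4 of each line is address/prefix).
has_vip() {
    awk -v v="$1" '{ split($4, a, "/"); if (a[1] == v) found = 1 } END { exit !found }'
}
```

Usage: `ip -o -4 addr show | has_vip 11.11.11.4 && echo "this node holds the VIP"`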

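Tip:

1. About split-brain:

Suppose you take down eth0 on the Primary host, then promote the Secondary host to DRBD primary directly and mount DRBD there. You will find that the data files written earlier on the Primary host were indeed replicated.

Then bring eth0 on the Primary host back up and check whether the primary/secondary relationship recovers by itself. It does not: DRBD detects a split-brain, and both nodes are in StandAlone state.

The error reported is: Split-Brain detected, dropping connection! This is the notorious "split brain". The manual recovery procedure recommended by DRBD:

1) On the Secondary host:

# drbdadm secondary r0

# drbdadm disconnect all

# drbdadm --discard-my-data connect r0    (or "drbdadm -- --discard-my-data connect r0"; with DRBD 8.4 the documented form is "drbdadm connect --discard-my-data r0")

2) On the Primary host: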

# drbdadm disconnect all

# drbdadm connect r0

# drbdsetup /dev/drbd0 primary
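You can detect the symptom described above, a node in StandAlone, from /proc/drbd before recovering by hand. StandAlone also appears after a deliberate `drbdadm disconnect`, so treat a match only as a prompt to check the kernel log for "Split-Brain detected". This is a sketch for the DRBD 8.x /proc/drbd format. `drbd_standalone` is a hypothetical helper:

```shell
# Sketch: succeed if the connection state of a DRBD minor in /proc/drbd-style
# text (DRBD 8.x layout) is StandAlone.
drbd_standalone() {
    local minor="${1:-0}" src="${2:-/proc/drbd}"
    awk -v m="$minor:" '
        $1 == m { for (i = 2; i <= NF; i++) if ($i == "cs:StandAlone") found = 1 }
        END { exit !found }' "$src"
}
```

Usage: `drbd_standalone && dmesg | grep -i split-brain`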
