Lessons learned from manually classifying CIFAR-10

Lessons learned from manually classifying CIFAR-10

Apr 27, 2011

CIFAR-10

Note, this post is from 2011 and slightly outdated in some places.

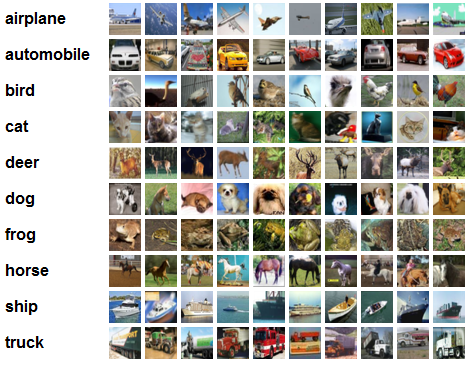

Statistics. CIFAR-10 consists of 50,000 training images, all of them in 1 of 10 categories (displayed left). The test set consists of 10,000 novel images from the same categories, and the task is to classify each to its category. The state of the art is currently at about 80% classification accuracy (4000 centroids), achieved by Adam Coates et al. (PDF). This paper achieved the accuracy by using whitening, k-means to learn many centroids, and then using a soft activation function as features.

State of the Art performance. By the way, running their method with 1600 centroids gives 77% classification accuracy. If you set the clusters to be random the accuracy becomes 70%, and if you set the clusters to be random patches from the training set, the accuracy goes up to 74%. It seems like the whole purpose of k-means is to nicely spread out the clusters around the data. I'm guessing that the 70% random clusters performance might be because many of the clusters are relatively too far away from data manifolds, and never become activated -- it's as if you had much fewer clusters to begin with.

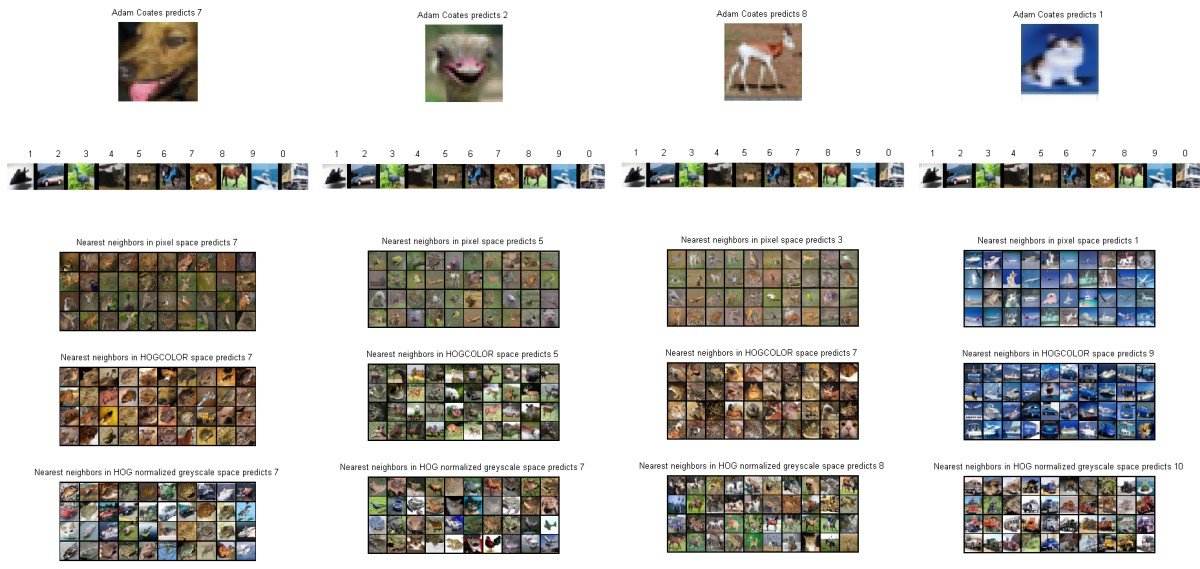

Human Accuracy. Over the weekend I wanted to see what kind of classification accuracy a human would achieve on this dataset. I set out to write some quick MATLAB code that would provide the interface to do this. It showed one image at a time and allowed me to press a key from 0-9 indicating my belief about its class category. My classification accuracy ended up at about 94% on 400 images. Why not 100%? Because some images are really unfair! To give you an idea, here are some questionable images from CIFAR-10:

CIFAR-10 human accuracy is approximately 94%

Observations

A few observations I derived from this exercise:

The objects within classes in this dataset can be extremely varied. For example the "bird" class contains many different types of bird (both big birds and small). Not only are there many types of bird, but the occur at many possible magnifications, all possible angles and all possible poses. Sometimes only parts of the bird are shown. The poses problem is even worse for the dog/cat category, because these animals occur at many many different types of poses, and sometimes only the head is shown. Or left part of the body, etc.

My classification method felt strangely dichotomous. Sometimes you can clearly see the animal or object and classify it based very highly-informative distinct parts (for example, you find ears of a cat). Other times, my recognition was purely based on context and the overall cues in the image such as the colors.

The CIFAR-10 dataset is too small to properly contain examples of everything that it is asking for in the test set. I base this conclusion at least on my multiple ways of visualizing the nearest image in the training set.

I don't quite understand how Adam Coates et al. perform so well on this dataset (80%) with their method. My guess is that it works along the following lines: looking at the image squinting your eyes you can almost always narrow down the category to about 2 or 3. The final disambiguation probably comes from finding very good specific informative patches (like a patch of some kind of fur, or pointy ear part, etc.). The k-means dictionary must be catching these cases and the SVM likely picks up on them.

My impression from this exercise is that it will be hard to go above 80%, but I suspect improvements might be possible up to range of about 85-90%, depending on how wrong I am about the lack of training data. (2015 update: Obviously this prediction was way off, with state of the art now in 95%, as seen in this Kaggle competition leaderboard. I'm impressed!)

I encourage people to try this for themselves (see my code, above), as it is very interesting and fun! I have trouble exactly articulating what I learned, but overall I feel like I gained more intuition for image classification tasks and more appreciation for the difficulty of the problem at hand.

Finally, here is an example of my debugging interface:

The Matlab code used to generate these results can be found here

Lessons learned from manually classifying CIFAR-10的更多相关文章

- Lessons Learned from Developing a Data Product

Lessons Learned from Developing a Data Product For an assignment I was asked to develop a visual ‘da ...

- Lessons learned developing a practical large scale machine learning system

原文:http://googleresearch.blogspot.jp/2010/04/lessons-learned-developing-practical.html Lessons learn ...

- 翻译 | Improving Distributional Similarity with Lessons Learned from Word Embeddings

翻译 | Improving Distributional Similarity with Lessons Learned from Word Embeddings 叶娜老师说:"读懂论文的 ...

- 【翻译】TensorFlow卷积神经网络识别CIFAR 10Convolutional Neural Network (CNN)| CIFAR 10 TensorFlow

原网址:https://data-flair.training/blogs/cnn-tensorflow-cifar-10/ by DataFlair Team · Published May 21, ...

- Elasticsearch Mantanence Lessons Learned Today

Today I troubleshooted an Elasticsearch-cluster-down issue. Several lessons were learned: When many ...

- 【神经网络与深度学习】基于Windows+Caffe的Minst和CIFAR—10训练过程说明

Minst训练 我的路径:G:\Caffe\Caffe For Windows\examples\mnist 对于新手来说,初步完成环境的配置后,一脸茫然.不知如何跑Demo,有么有!那么接下来的教 ...

- DL Practice:Cifar 10分类

Step 1:数据加载和处理 一般使用深度学习框架会经过下面几个流程: 模型定义(包括损失函数的选择)——>数据处理和加载——>训练(可能包括训练过程可视化)——>测试 所以自己写代 ...

- Lessons Learned 1(敏捷项目中的变更影响分析)

问题/现象: 业务信息流转的某些环节,会向相关人员发送通知邮件,邮件中附带有链接,供相关人员进入察看或处理业务.客户要求邮件中的链接,需要进行限制,只有特定人员才能进入处理或察看.总管想了想,应道没问 ...

- Paper Reading - Show and Tell: Lessons learned from the 2015 MSCOCO Image Captioning Challenge

Link of the Paper: https://arxiv.org/abs/1609.06647 A Correlative Paper: Show and Tell: A Neural Ima ...

随机推荐

- PHP mysql 事务处理实例

事务是必须满足4个条件(ACID):原子性(Autmic).一致性(Consistency).隔离性(Isolation).持久性(Durability) 原子性(Autmic):事务在执行性,要做到 ...

- php循环创建目录

代码取自thinkphp中: function mk_dir($dir, $mod = 0777) { if(!is_dir($dir) || mkdir($dir, $mod)) { if(!mk_ ...

- 【easuyi】---easyui中的验证validatebox自定义

这里比较简单的使用就不再多说,主要说一下自定义的validatebox. 1.验证密码是否相等,这个直接参考给定的列子就行,这里主要学习这种灵活使用的方式和方法. <input id=" ...

- xml文件对应的DTD学习

DTD文件: 1.DTD文档主要由(元素,属性,实体,PCDATA,CDATA) 2.声明一个元素:<!ELEMENT 元素名称 (元素内容)> eg: <!ELEMENT pers ...

- STM32F0xx_TIM基本延时配置详细过程

前言 关于定时器大家都应该不会陌生,因为处理器都有这个功能.今天总结的F0系列芯片的定时器根据芯片型号不同,数量也不同.定时器分类:基本定时器.通用定时器和高级定时器.计数位数也有不同,有16位的,有 ...

- 第六章 类型(class)和成员基础

1. 概述 本章讲述如何在一个类型中定义不同种类的成员. 2. 名词解释 3. 主要内容 3.1 类型的各种成员 在一个类型中,可以定义0个或多个以下种类的成员: ① 常量:常量就是指出数据值恒定不变 ...

- javascript arguments

此文为转载文章: 什么是arguments arguments 是是JavaScript里的一个内置对象,它很古怪,也经常被人所忽视,但实际上是很重要的.所有主要的js函数库都利用了arguments ...

- 编译android程序时DEX过程出现错误

今天编译高级设置时出现了错误,这好坑爹啊~ 于是我开始检查代码,发现代码没有错误啊,然后观察MAKE的步骤才发现是DEX时出现了问题!! 下面是错误的LOG: Information:Using ja ...

- 刀哥多线程之并发队列gcd-05-dispatch_queue_concurrent

并发队列 特点 以先进先出的方式,并发调度队列中的任务执行 如果当前调度的任务是同步执行的,会等待任务执行完成后,再调度后续的任务 如果当前调度的任务是异步执行的,同时底层线程池有可用的线程资源,会再 ...

- MongoDB复制机制实例

MongoDB的主从复制是一个主可以多从已从又可以为主进行主从复制.在这里就是实现一主一从一个仲裁服务器使用一个数据库服务器通过提供不同的端口. 一.启动一个MongoDB服务名字是applicati ...