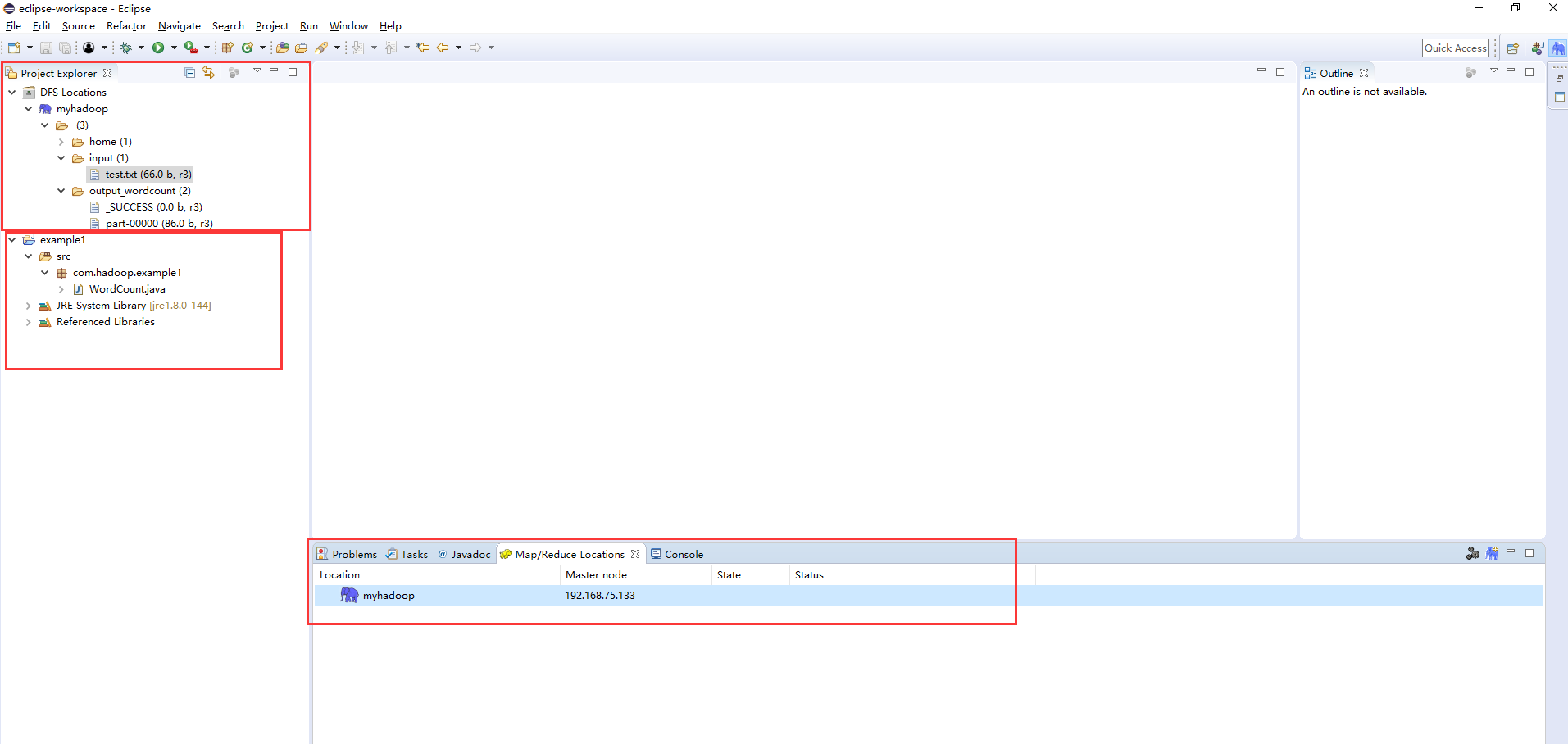

windows下eclipse远程连接hadoop集群开发mapreduce

.png)

.png)

.png)

.png)

.png)

.png)

.png)

liang ni hao ma

wo hen hao

ha

qwe

asasa

xcxc vbv xxxx aaa eee

package com.hadoop.example1;

import java.io.IOException;

import java.util.Iterator;

import java.util.StringTokenizer;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapred.FileInputFormat;

import org.apache.hadoop.mapred.FileOutputFormat;

import org.apache.hadoop.mapred.JobClient;

import org.apache.hadoop.mapred.JobConf;

import org.apache.hadoop.mapred.MapReduceBase;

import org.apache.hadoop.mapred.Mapper;

import org.apache.hadoop.mapred.OutputCollector;

import org.apache.hadoop.mapred.Reducer;

import org.apache.hadoop.mapred.Reporter;

import org.apache.hadoop.mapred.TextInputFormat;

import org.apache.hadoop.mapred.TextOutputFormat;

public class WordCount {

public static class Map extends MapReduceBase implements

Mapper<LongWritable, Text, Text, IntWritable> {

private final static IntWritable one = new IntWritable(1);

private Text word = new Text();

public void map(LongWritable key, Text value,

OutputCollector<Text, IntWritable> output, Reporter reporter)

throws IOException {

String line = value.toString();

StringTokenizer tokenizer = new StringTokenizer(line);

while (tokenizer.hasMoreTokens()) {

word.set(tokenizer.nextToken());

output.collect(word, one);

}

}

}

public static class Reduce extends MapReduceBase implements

Reducer<Text, IntWritable, Text, IntWritable> {

public void reduce(Text key, Iterator<IntWritable> values,

OutputCollector<Text, IntWritable> output, Reporter reporter)

throws IOException {

int sum = 0;

while (values.hasNext()) {

sum += values.next().get();

}

output.collect(key, new IntWritable(sum));

}

}

public static void main(String[] args) throws Exception {

JobConf conf = new JobConf(WordCount.class);

conf.setJobName("wordcount");

conf.setOutputKeyClass(Text.class);

conf.setOutputValueClass(IntWritable.class);

conf.setMapperClass(Map.class);

conf.setCombinerClass(Reduce.class);

conf.setReducerClass(Reduce.class);

conf.setInputFormat(TextInputFormat.class);

conf.setOutputFormat(TextOutputFormat.class);

FileInputFormat.setInputPaths(conf, new Path(args[0]));

FileOutputFormat.setOutputPath(conf, new Path(args[1]));

JobClient.runJob(conf);

}

}

.png)

.png)

14/05/29 13:49:16 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

14/05/29 13:49:16 ERROR security.UserGroupInformation: PriviledgedActionException as:ISCAS cause:java.io.IOException: Failed to set permissions of path: \tmp\hadoop-ISCAS\mapred\staging\ISCAS1655603947\.staging to 0700

Exception in thread "main" java.io.IOException: Failed to set permissions of path: \tmp\hadoop-ISCAS\mapred\staging\ISCAS1655603947\.staging to 0700

at org.apache.hadoop.fs.FileUtil.checkReturnValue(FileUtil.java:691)

at org.apache.hadoop.fs.FileUtil.setPermission(FileUtil.java:664)

at org.apache.hadoop.fs.RawLocalFileSystem.setPermission(RawLocalFileSystem.java:514)

at org.apache.hadoop.fs.RawLocalFileSystem.mkdirs(RawLocalFileSystem.java:349)

at org.apache.hadoop.fs.FilterFileSystem.mkdirs(FilterFileSystem.java:193)

at org.apache.hadoop.mapreduce.JobSubmissionFiles.getStagingDir(JobSubmissionFiles.java:126)

at org.apache.hadoop.mapred.JobClient$2.run(JobClient.java:942)

at org.apache.hadoop.mapred.JobClient$2.run(JobClient.java:936)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Unknown Source)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1190)

at org.apache.hadoop.mapred.JobClient.submitJobInternal(JobClient.java:936)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:550)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:580)

at org.apache.hadoop.examples.WordCount.main(WordCount.java:82)

private static void checkReturnValue(boolean rv, File p,

FsPermission permission)

throws IOException

{

/**

* comment the following, disable this function if (!rv)

{

throw new IOException("Failed to set permissions of path: " + p +

" to " +

String.format("%04o", permission.toShort()));

}

*/

}

%60LV47(~(BZWGV.png)

windows下eclipse远程连接hadoop集群开发mapreduce的更多相关文章

- windows下在eclipse上远程连接hadoop集群调试mapreduce错误记录

第一次跑mapreduce,记录遇到的几个问题,hadoop集群是CDH版本的,但我windows本地的jar包是直接用hadoop2.6.0的版本,并没有特意找CDH版本的 1.Exception ...

- windows下eclipse远程连接hadoop错误“Exception in thread"main"java.io.IOException: Call to Master.Hadoop/172.20.145.22:9000 failed ”

在VMware虚拟机下搭建了hadoop集群,ubuntu-12.04,一台master,三台slave.hadoop-0.20.2版本.在 master机器上利用eclipse-3.3连接hadoo ...

- Eclipse远程提交hadoop集群任务

文章概览: 1.前言 2.Eclipse查看远程hadoop集群文件 3.Eclipse提交远程hadoop集群任务 4.小结 1 前言 Hadoop高可用品台搭建完备后,参见<Hadoop ...

- Win7下通过eclipse远程连接CDH集群来执行相应的程序以及错误说明

最近尝试这用用eclipse连接CDH的集群,由于之前尝试过很多次都没连上,有一次发现Cloudera Manager是将连接的端口修改了,所以才导致连接不上CDH的集群,之前Apache hadoo ...

- windows下Eclipse远程连接linux hadoop远程调试 经验(一)

环境 Windows 7 64bit JDK 1.6.0_45 (i586) JDK 1.7.0_51 (i586) Eclipse Kepler Eclipse -plugin-1.2.1.ja ...

- Eclipse/MyEclipse连接Hadoop集群出现:Unable to ... ... org.apache.hadoop.security.AccessControlExceptiom:Permission denied问题

问题详细如下: 解决办法: <property> <name>dfs.premissions</name> <value>false</value ...

- eclipse连接远程hadoop集群开发时权限不足问题解决方案

转自:http://blog.csdn.net/shan9liang/article/details/9734693 eclipse连接远程hadoop集群开发时报错 Exception in t ...

- eclipse连接远程hadoop集群开发时0700问题解决方案

eclipse连接远程hadoop集群开发时报错 错误信息: Exception in thread "main" java.io.IOException:Failed to se ...

- 【hadoop】——window下elicpse连接hadoop集群基础超详细版

1.Hadoop开发环境简介 1.1 Hadoop集群简介 Java版本:jdk-6u31-linux-i586.bin Linux系统:CentOS6.0 Hadoop版本:hadoop-1.0.0 ...

随机推荐

- Celery-4.1 用户指南: Task(任务)

任务是构建 celery 应用的基础块. 任务是可以在任何除可调用对象外的地方创建的一个类.它扮演着双重角色,它定义了一个任务被调用时会发生什么(发送一个消息),以及一个工作单元获取到消息之后将会做什 ...

- bash姿势-没有管道符执行结果相同于管道符

听起来比较别口: 直接看代码: shell如下: [root@sevck_linux ~]# </etc/passwd grep root root:x:::root:/root:/bin/ba ...

- UML在实践中的现状和一些建议

本文是我在csdn上看到的文章,由于认识中的共鸣,摘抄至此. 原文地址:http://blog.csdn.net/vrman/article/details/280157 UML在国内不少地方获得了应 ...

- WebView三个方法区别(解决乱码问题)

最近使用WebView加载中文网页的时候出现乱码问题,网上整理下基本解决方法: 其实我发现这不管是在线还是离线显示都可以使用LoadUrl方法!联网时好像是默认utf-8,离线读取本地时需要设置默认编 ...

- C++面向对象类的实例题目八

题目描述: 编写一个程序输入3个学生的英语和计算机成绩,并按照总分从高到低排序.要求设计一个学生类Student,其定义如下: 程序代码: #include<iostream> using ...

- ==, equals, hashcode的理解

一.java对象的比较 等号(==): 对比对象实例的内存地址(也即对象实例的ID),来判断是否是同一对象实例:又可以说是判断对象实例是否物理相等: equals(): 对比两个对象实例是否相等. 当 ...

- 面试题: 1天的java面试题 已看1

1,自我介绍下,我直接说的项目经历,(哪年在哪个公司呆过) 2,问是否有带过团队的经历,我说去年带过一次. 3,Struts是单例模式还是多例模式?我先说单例模式,后说多例模式. Struts1是单例 ...

- 利用General框架开发RDLC报表

RDLC是微软推出的自家的报表软件,虽然没有一些第三方的报表软件强大好用,但是作为VisualStudio集成的报表工具,在客户要求不高的情况下还是非常值得一用的,本文将介绍通过General代码生成 ...

- php学习笔记-超级全局变量

超级全局变量,超级在哪里呢?相对于global类型的变量,超级全局变量的作用域是没有限制的,函数外.函数内.随便一个PHP文件都可以引用超级全局变量.在PHP中有很多超级全局变量, 常用的有_SERV ...

- Jtabbedpane设置透明、Jpanel设置透明

摘自 https://zhidao.baidu.com/question/983204331427010139.html java中如何设置Jtabbedpane为透明 20 在Jtabbedpane ...