(转) Deep learning architecture diagrams

FastML

Machine learning made easy

Deep learning architecture diagrams

2016-09-30

Like a wild stream after a wet season in African savanna diverges into many smaller streams forming lakes and puddles, deep learning has diverged into a myriad of specialized architectures. Each architecture has a diagram. Here are some of them.

Neural networks are conceptually simple, and that’s their beauty. A bunch of homogenous, uniform units, arranged in layers, weighted connections between them, and that’s all. At least in theory. Practice turned out to be a bit different. Instead of feature engineering, we now have architecture engineering, as described by Stephen Merrity:

The romanticized description of deep learning usually promises that the days of hand crafted feature engineering are gone - that the models are advanced enough to work this out themselves. Like most advertising, this is simultaneously true and misleading.

Whilst deep learning has simplified feature engineering in many cases, it certainly hasn’t removed it. As feature engineering has decreased, the architectures of the machine learning models themselves have become increasingly more complex. Most of the time, these model architectures are as specific to a given task as feature engineering used to be.

To clarify, this is still an important step. Architecture engineering is more general than feature engineering and provides many new opportunities. Having said that, however, we shouldn’t be oblivious to the fact that where we are is still far from where we intended to be.

Not quite as bad as doings of architecture astronauts, but not too good either.

An example of architecture specific to a given task

LSTM diagrams

How to explain those architectures? Naturally, with a diagram. A diagram will make it all crystal clear.

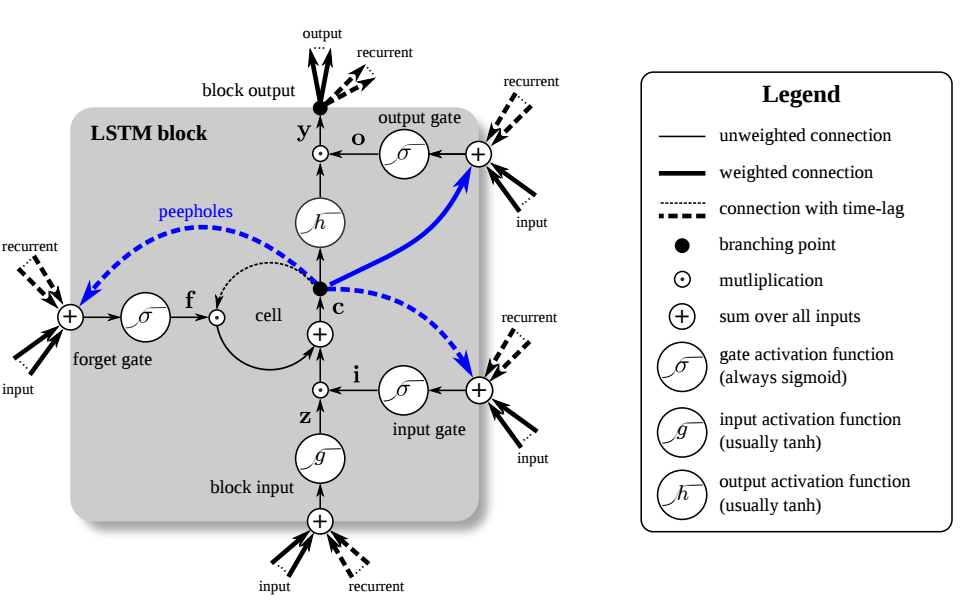

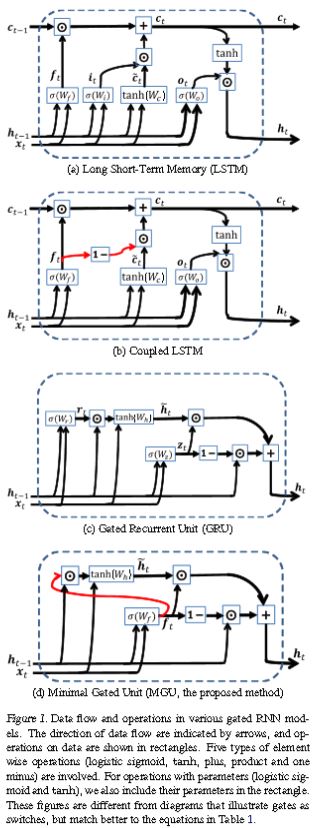

Let’s first inspect the two most popular types of networks these days, CNN and LSTM. You’ve already seen a convnet diagram, so turning to the iconic LSTM:

It’s easy, just take a closer look:

As they say, in mathematics you don’t understand things, you just get used to them.

Fortunately, there are good explanations, for example Understanding LSTM Networks and Written Memories: Understanding, Deriving and Extending the LSTM.

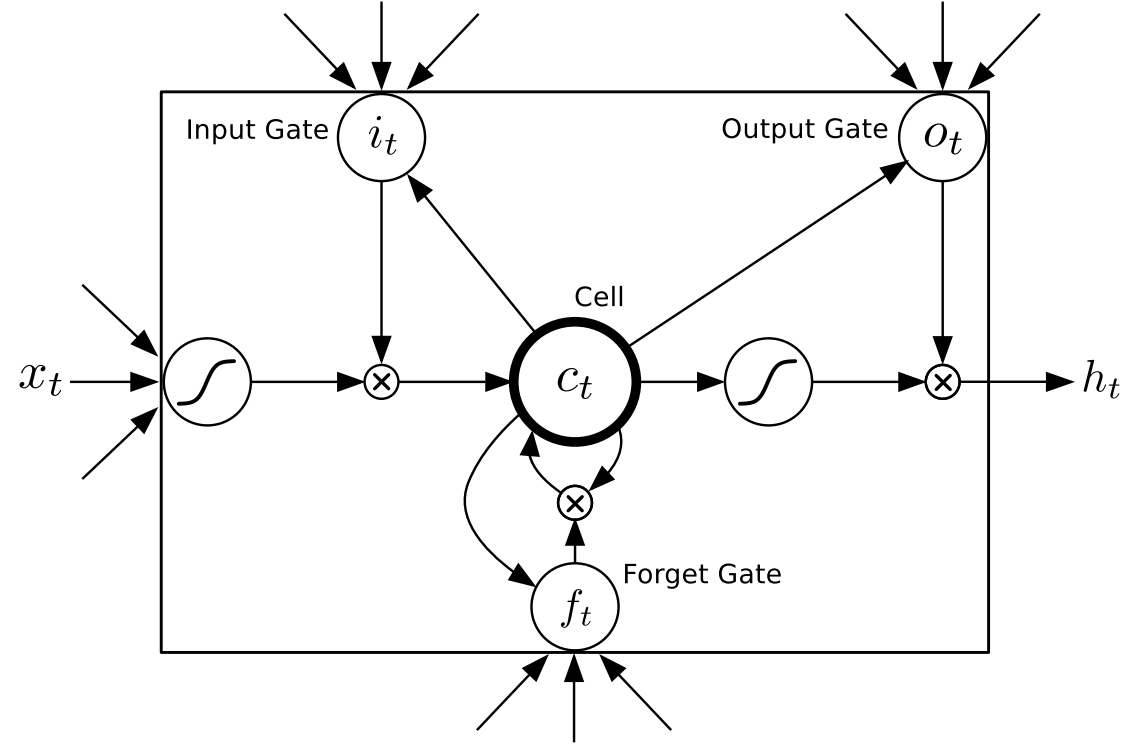

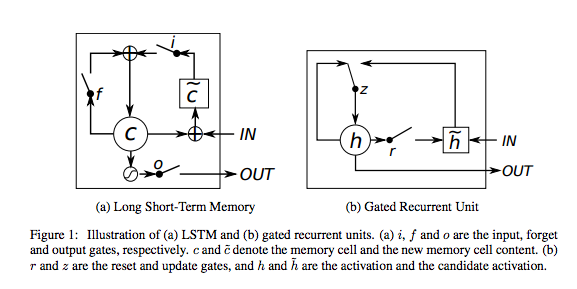

LSTM still too complex? Let’s try a simplified version, GRU (Gated Recurrent Unit). Trivial, really.

Especially this one, called minimal GRU.

More diagrams

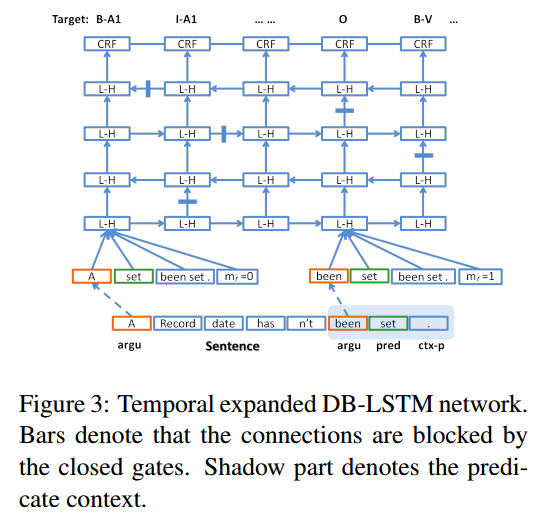

Various modifications of LSTM are now common. Here’s one, called deep bidirectional LSTM:

DB-LSTM, PDF

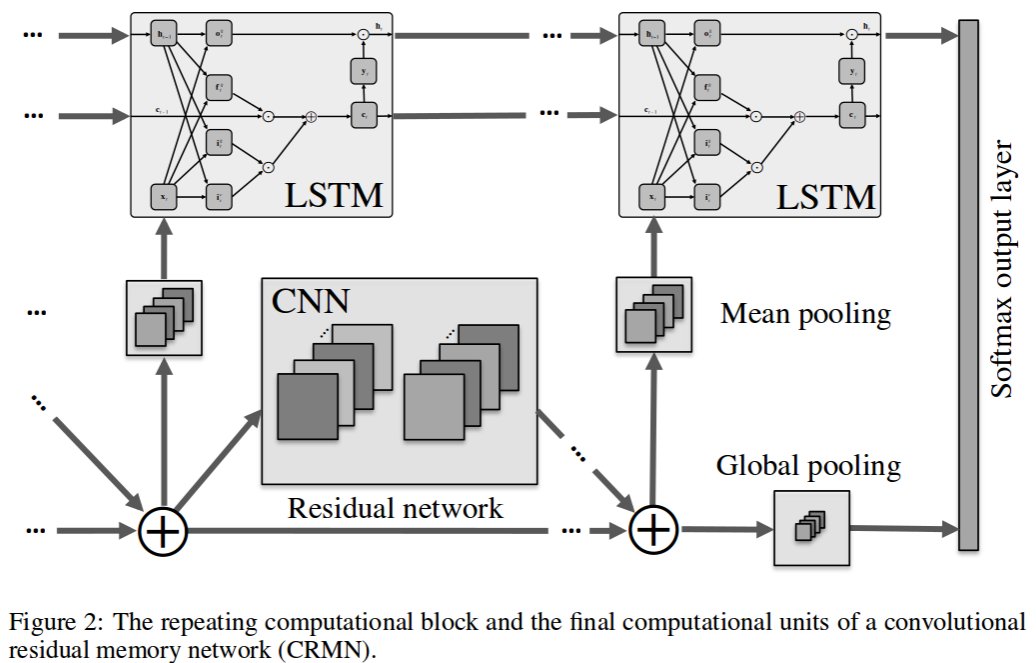

The rest are pretty self-explanatory, too. Let’s start with a combination of CNN and LSTM, since you have both under your belt now:

Convolutional Residual Memory Network, 1606.05262

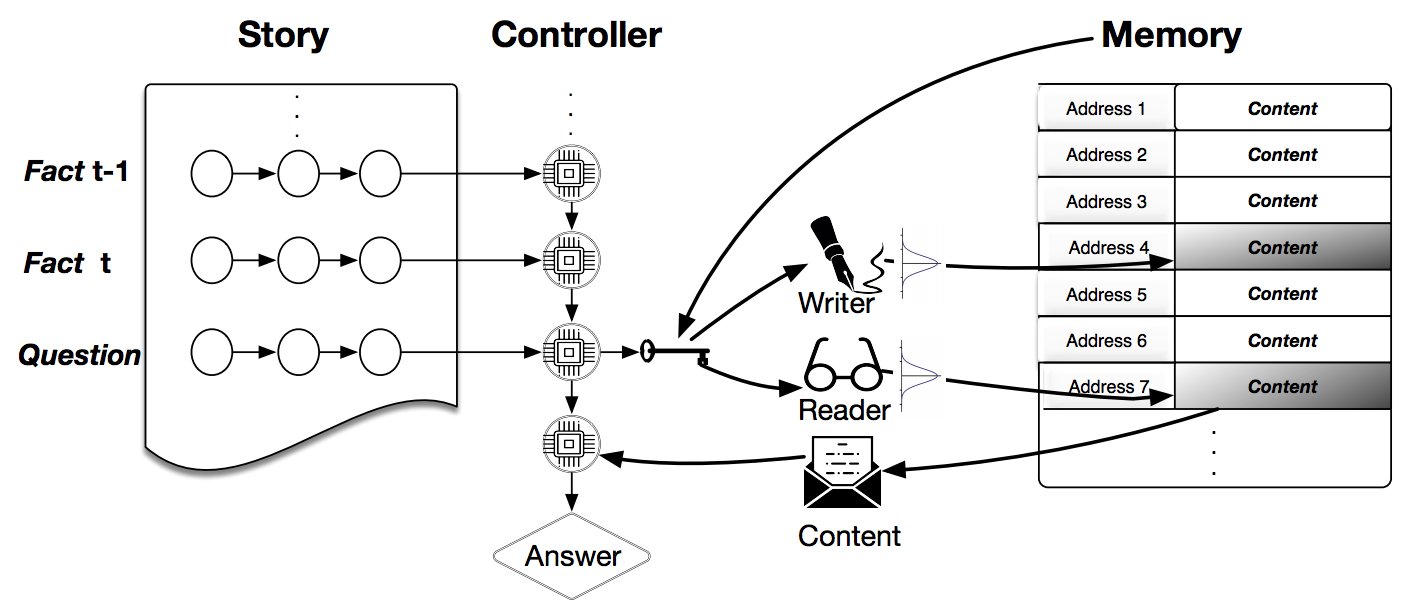

Dynamic NTM, 1607.00036

Evolvable Neural Turing Machines, PDF

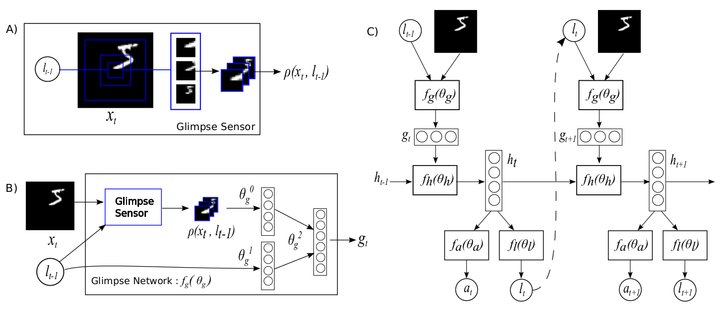

Recurrent Model Of Visual Attention, 1406.6247

Unsupervised Domain Adaptation By Backpropagation, 1409.7495

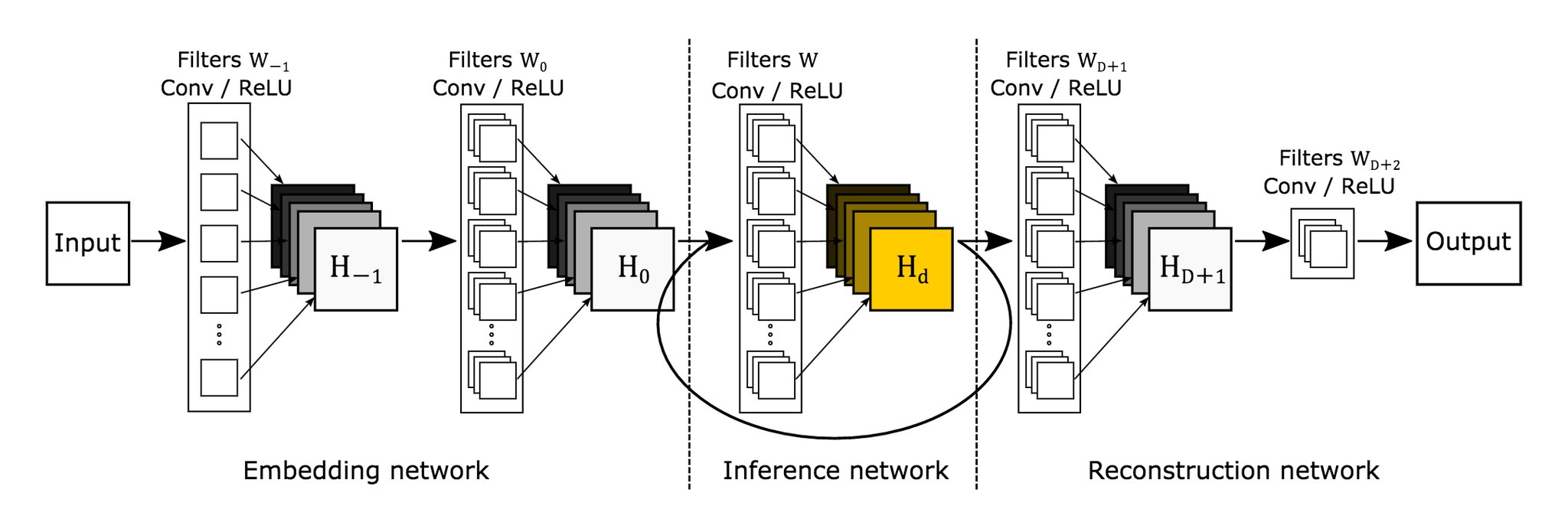

Deeply Recursive CNN For Image Super-Resolution, 1511.04491

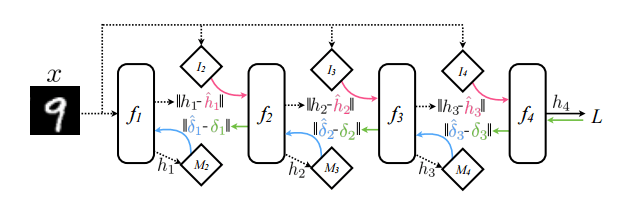

This diagram of multilayer perceptron with synthetic gradients scores high on clarity:

MLP with synthetic gradients, 1608.05343

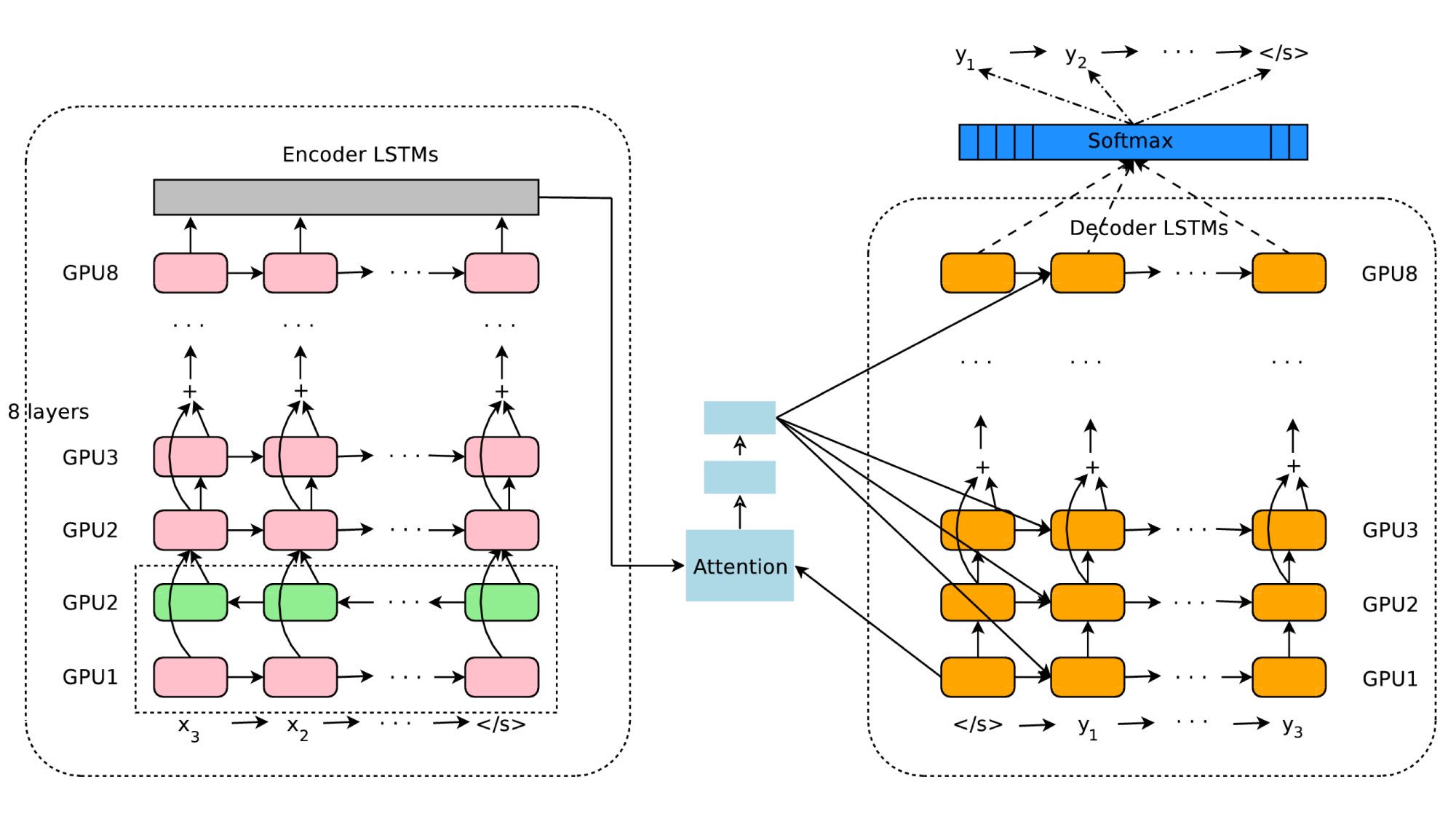

Every day brings more. Here’s a fresh one, again from Google:

Google’s Neural Machine Translation System, 1609.08144

And Now for Something Completely Different

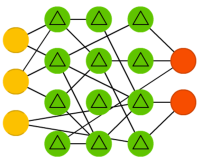

Drawings from the Neural Network ZOO are pleasantly simple, but, unfortunately, serve mostly as eye candy. For example:

ESM, ESN and ELM

These look like not-fully-connected perceptrons, but are supposed to represent a Liquid State Machine, an Echo State Network, and an Extreme Learning Machine.

How does LSM differ from ESN? That’s easy, it has green neuron with triangles. But how does ESN differ from ELM? Both have blue neurons.

Seriously, while similar, ESN is a recursive network and ELM is not. And this kind of thing should probably be visible in an architecture diagram.

Posted by Zygmunt Z. 2016-09-30 basics, neural-networks

« Factorized convolutional neural networks, AKA separable convolutions

Comments

Recent Posts

- Deep learning architecture diagrams

- Factorized convolutional neural networks, AKA separable convolutions

- How to make those 3D data visualizations

- Adversarial validation, part two

- ^one weird trick for training char-^r^n^ns

- Adversarial validation, part one

- Coming out

Follow @fastml for notifications about new posts.

- Status updating...

Also check out @fastml_extra for things related to machine learning and data science in general.

GitHub

Most articles come with some code. We push it to Github.

Cubert

Visualize your data in interactive 3D, as described here.

Copyright © 2016 - Zygmunt Z. - Powered by Octopress

(转) Deep learning architecture diagrams的更多相关文章

- 15 cvpr An Improved Deep Learning Architecture for Person Re-Identification

http://www.umiacs.umd.edu/~ejaz/ * 也是同时学习feature和metric * 输入一对图片,输出是否是同一个人 * 包含了一个新的层: include a lay ...

- Deep Learning in a Nutshell: History and Training

Deep Learning in a Nutshell: History and Training This series of blog posts aims to provide an intui ...

- 深度学习材料:从感知机到深度网络A Deep Learning Tutorial: From Perceptrons to Deep Networks

In recent years, there’s been a resurgence in the field of Artificial Intelligence. It’s spread beyo ...

- 【Deep Learning】genCNN: A Convolutional Architecture for Word Sequence Prediction

作者:Mingxuan Wang.李航,刘群 单位:华为.中科院 时间:2015 发表于:acl 2015 文章下载:http://pan.baidu.com/s/1bnBBVuJ 主要内容: 用de ...

- Why GEMM is at the heart of deep learning

Why GEMM is at the heart of deep learning I spend most of my time worrying about how to make deep le ...

- 【深度学习Deep Learning】资料大全

最近在学深度学习相关的东西,在网上搜集到了一些不错的资料,现在汇总一下: Free Online Books by Yoshua Bengio, Ian Goodfellow and Aaron C ...

- (转) Awesome - Most Cited Deep Learning Papers

转自:https://github.com/terryum/awesome-deep-learning-papers Awesome - Most Cited Deep Learning Papers ...

- (转) Deep Learning Research Review Week 2: Reinforcement Learning

Deep Learning Research Review Week 2: Reinforcement Learning 转载自: https://adeshpande3.github.io/ad ...

- deep learning 的综述

从13年11月初开始接触DL,奈何boss忙or 各种问题,对DL理解没有CSDN大神 比如 zouxy09等 深刻,主要是自己觉得没啥进展,感觉荒废时日(丢脸啊,这么久....)开始开文,即为记录自 ...

随机推荐

- VMware技巧01

1.20160930 VMware® Workstation 10.0.4 build-2249910,使用中遇到问题(WinXP sp3):网卡 桥接模式,NAT模式 都连不上网... 今天,尝试了 ...

- java高薪之路__009_网络

1. InetAddress类2. Socket: IP地址和端口号的结合,socket允许程序把网络连接当成一个流,数据在两个socket间通过IO传输, 通信的两端都要有socket. 主动发起通 ...

- 匿名函数和Lamda

不是本人所写!网络收集 C#中的匿名函数和Lamda是很有意思的东东,那么我们就来介绍一下,这到底是什么玩意,有什么用途了? 打开visual studio 新建一个控制台程序. 我们利用委托来写一个 ...

- 【转】关于 Web GIS

以下部分选自2015-03-01出版的<Web GIS从基础到开发实践(基于ArcGIS API for JavaScript)>一书中的前言部分: Web GIS 概念于1994 年首次 ...

- GetProcAddress 宏

#define FUNC_ADDR(hDll, func) pf##func func = \ (pf##func)GetProcAddress(hDll,#func);\ if(! func) {\ ...

- NGINX怎样处理惊群的

写在前面 写NGINX系列的随笔,一来总结学到的东西,二来记录下疑惑的地方,在接下来的学习过程中去解决疑惑. 也希望同样对NGINX感兴趣的朋友能够解答我的疑惑,或者共同探讨研究. 整个NGINX系列 ...

- (转)js activexobject调用客户机exe文件

原文地址:http://blog.csdn.net/jiafugui/article/details/5364210 function Run(strPath) { try { var objShel ...

- cmake gcc等安装备案

cmake安装,参照 http://www.cnblogs.com/voyagflyer/p/5323748.html cmake2.8以上 安装后的是/usr/local/bin/cmake -ve ...

- Useful blogs

Unofficial Windows Binaries for Python Extension Packages:http://www.lfd.uci.edu/~gohlke/pythonlibs ...

- PHP过滤外部链接及外部图片 添加rel="nofollow"属性

原来站内很多文章都是摘录的外部文章,文章里很多链接要么是时间久了失效了,要么就是一些测试的网址,如:http://localhost/ 之类的,链接多了的话,就形成站内很多死链接,这对SEO优化是很不 ...