2.3 AutoEncoder

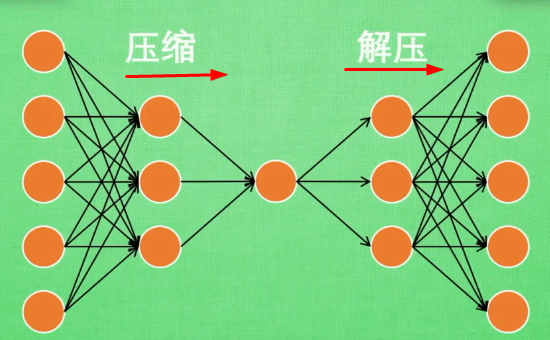

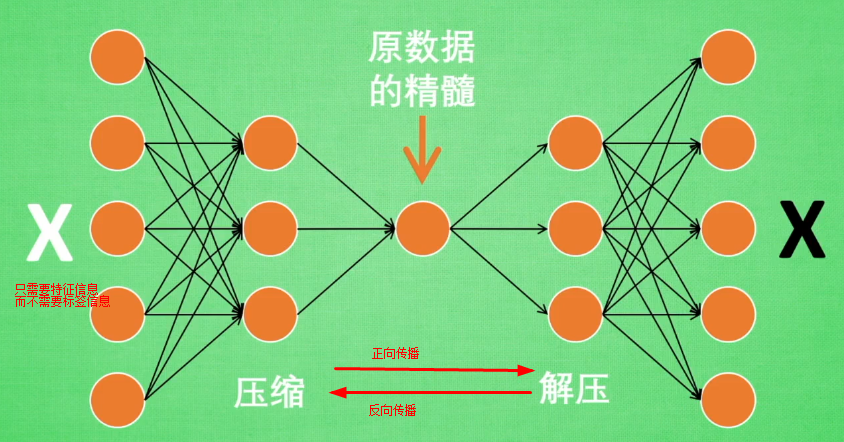

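An AutoEncoder consists of a compression (encoding) step followed by a decompression (decoding) step. It is an unsupervised dimensionality-reduction technique.

A neural network sometimes has to take in a very large amount of information; the input can reach tens of millions of values. Compression extracts the most representative information from the original image and reduces the size of the input. The reduced data is then fed into the neural network, which makes learning much easier.

This is where an autoencoder is used. It can be classed as unsupervised learning and, like PCA, it can reduce the dimensionality of the features.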

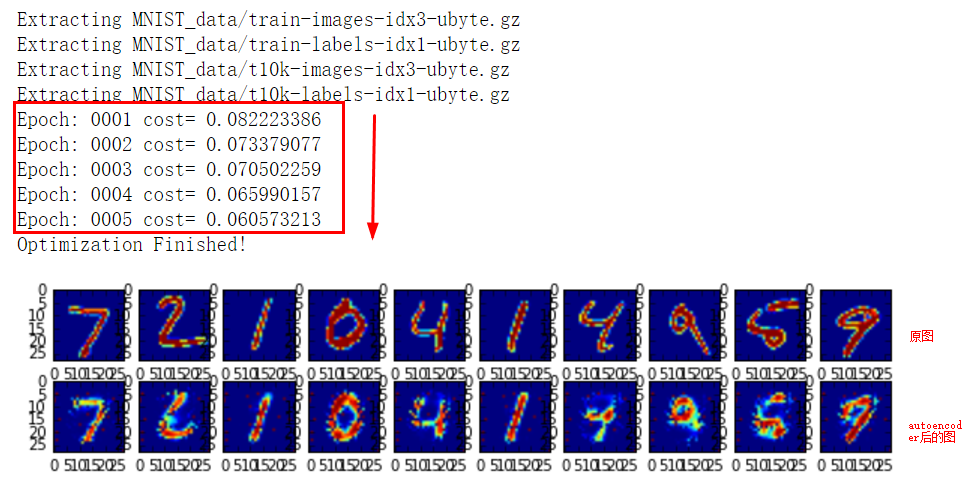
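
To make the compress-then-decompress idea concrete, here is a minimal NumPy sketch of the PCA analogue mentioned above: a linear "encoder" projects the data onto its top k principal directions, and a linear "decoder" maps the codes back. The helper names (`pca_fit`, `encode`, `decode`, `reconstruction_error`) and the random toy data are assumptions made for illustration; they are not part of the original tutorial.

```python
import numpy as np

def pca_fit(data, k):
    """Return the data mean and the top-k principal directions (k x d)."""
    mean = data.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(data - mean, full_matrices=False)
    return mean, vt[:k]

def encode(data, mean, components):
    """Compress: project d-dimensional rows onto the k components."""
    return (data - mean) @ components.T

def decode(codes, mean, components):
    """Decompress: map k-dimensional codes back to d dimensions."""
    return codes @ components + mean

def reconstruction_error(data, k):
    """Mean squared error after compressing to k dimensions and back."""
    mean, components = pca_fit(data, k)
    restored = decode(encode(data, mean, components), mean, components)
    return float(np.mean((data - restored) ** 2))

# Hypothetical toy data: 200 samples in 10 dimensions that are driven
# by only 3 latent factors, plus a little noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 3))
mixing = rng.normal(size=(3, 10))
toy = latent @ mixing + 0.01 * rng.normal(size=(200, 10))

for k in (1, 2, 3, 10):
    print(k, reconstruction_error(toy, k))
```

The error should drop sharply once k reaches the number of latent factors. An autoencoder follows the same encode/decode pattern but learns nonlinear mappings by gradient descent instead of using a closed-form projection.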

AutoEncoder code for handwritten digits (MNIST)

    # Written for TensorFlow 1.x (tf.placeholder, tf.Session).
    from __future__ import division, print_function, absolute_import

    import tensorflow as tf
    import numpy as np
    import matplotlib.pyplot as plt

    # Import MNIST data
    from tensorflow.examples.tutorials.mnist import input_data
    mnist = input_data.read_data_sets('MNIST_data', one_hot=False)

    # Visualize decoder setting
    # Parameters
    learning_rate = 0.01
    training_epochs = 5
    batch_size = 256
    display_step = 1
    examples_to_show = 10

    # Network Parameters
    n_input = 784  # MNIST data input (img shape: 28*28)

    # tf Graph input (only pictures)
    X = tf.placeholder("float", [None, n_input])

    # hidden layer settings
    n_hidden_1 = 256  # 1st layer num features
    n_hidden_2 = 128  # 2nd layer num features
    weights = {
        'encoder_h1': tf.Variable(tf.random_normal([n_input, n_hidden_1])),
        'encoder_h2': tf.Variable(tf.random_normal([n_hidden_1, n_hidden_2])),
        'decoder_h1': tf.Variable(tf.random_normal([n_hidden_2, n_hidden_1])),
        'decoder_h2': tf.Variable(tf.random_normal([n_hidden_1, n_input])),
    }
    biases = {
        'encoder_b1': tf.Variable(tf.random_normal([n_hidden_1])),
        'encoder_b2': tf.Variable(tf.random_normal([n_hidden_2])),
        'decoder_b1': tf.Variable(tf.random_normal([n_hidden_1])),
        'decoder_b2': tf.Variable(tf.random_normal([n_input])),
    }

    # Building the encoder
    def encoder(x):
        # Encoder hidden layer with sigmoid activation #1
        layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['encoder_h1']),
                                       biases['encoder_b1']))
        # Encoder hidden layer with sigmoid activation #2
        layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['encoder_h2']),
                                       biases['encoder_b2']))
        return layer_2

    # Building the decoder
    def decoder(x):
        # Decoder hidden layer with sigmoid activation #1
        layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['decoder_h1']),
                                       biases['decoder_b1']))
        # Decoder hidden layer with sigmoid activation #2
        layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['decoder_h2']),
                                       biases['decoder_b2']))
        return layer_2

    """
    # Visualize encoder setting
    # Parameters
    learning_rate = 0.01    # 0.01 this learning rate will be better! Tested
    training_epochs = 10
    batch_size = 256
    display_step = 1

    # Network Parameters
    n_input = 784  # MNIST data input (img shape: 28*28)

    # tf Graph input (only pictures)
    X = tf.placeholder("float", [None, n_input])

    # hidden layer settings
    n_hidden_1 = 128
    n_hidden_2 = 64
    n_hidden_3 = 10
    n_hidden_4 = 2  # 2D show
    weights = {
        'encoder_h1': tf.Variable(tf.truncated_normal([n_input, n_hidden_1])),
        'encoder_h2': tf.Variable(tf.truncated_normal([n_hidden_1, n_hidden_2])),
        'encoder_h3': tf.Variable(tf.truncated_normal([n_hidden_2, n_hidden_3])),
        'encoder_h4': tf.Variable(tf.truncated_normal([n_hidden_3, n_hidden_4])),
        'decoder_h1': tf.Variable(tf.truncated_normal([n_hidden_4, n_hidden_3])),
        'decoder_h2': tf.Variable(tf.truncated_normal([n_hidden_3, n_hidden_2])),
        'decoder_h3': tf.Variable(tf.truncated_normal([n_hidden_2, n_hidden_1])),
        'decoder_h4': tf.Variable(tf.truncated_normal([n_hidden_1, n_input])),
    }
    biases = {
        'encoder_b1': tf.Variable(tf.random_normal([n_hidden_1])),
        'encoder_b2': tf.Variable(tf.random_normal([n_hidden_2])),
        'encoder_b3': tf.Variable(tf.random_normal([n_hidden_3])),
        'encoder_b4': tf.Variable(tf.random_normal([n_hidden_4])),
        'decoder_b1': tf.Variable(tf.random_normal([n_hidden_3])),
        'decoder_b2': tf.Variable(tf.random_normal([n_hidden_2])),
        'decoder_b3': tf.Variable(tf.random_normal([n_hidden_1])),
        'decoder_b4': tf.Variable(tf.random_normal([n_input])),
    }

    def encoder(x):
        layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['encoder_h1']),
                                       biases['encoder_b1']))
        layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['encoder_h2']),
                                       biases['encoder_b2']))
        layer_3 = tf.nn.sigmoid(tf.add(tf.matmul(layer_2, weights['encoder_h3']),
                                       biases['encoder_b3']))
        layer_4 = tf.add(tf.matmul(layer_3, weights['encoder_h4']),
                         biases['encoder_b4'])
        return layer_4

    def decoder(x):
        layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['decoder_h1']),
                                       biases['decoder_b1']))
        layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['decoder_h2']),
                                       biases['decoder_b2']))
        layer_3 = tf.nn.sigmoid(tf.add(tf.matmul(layer_2, weights['decoder_h3']),
                                       biases['decoder_b3']))
        layer_4 = tf.nn.sigmoid(tf.add(tf.matmul(layer_3, weights['decoder_h4']),
                                       biases['decoder_b4']))
        return layer_4
    """

    # Construct model
    encoder_op = encoder(X)
    decoder_op = decoder(encoder_op)

    # Prediction
    y_pred = decoder_op
    # Targets (Labels) are the input data.
    y_true = X

    # Define loss and optimizer, minimize the squared error
    cost = tf.reduce_mean(tf.pow(y_true - y_pred, 2))
    optimizer = tf.train.AdamOptimizer(learning_rate).minimize(cost)

    # Launch the graph
    with tf.Session() as sess:
        # tf.initialize_all_variables() is no longer valid from
        # 2017-03-02 if using tensorflow >= 0.12
        if int((tf.__version__).split('.')[1]) < 12 and int((tf.__version__).split('.')[0]) < 1:
            init = tf.initialize_all_variables()
        else:
            init = tf.global_variables_initializer()
        sess.run(init)
        total_batch = int(mnist.train.num_examples/batch_size)
        # Training cycle
        for epoch in range(training_epochs):
            # Loop over all batches
            for i in range(total_batch):
                batch_xs, batch_ys = mnist.train.next_batch(batch_size)  # max(x) = 1, min(x) = 0
                # Run optimization op (backprop) and cost op (to get loss value)
                _, c = sess.run([optimizer, cost], feed_dict={X: batch_xs})
            # Display logs per epoch step
            if epoch % display_step == 0:
                print("Epoch:", '%04d' % (epoch+1),
                      "cost=", "{:.9f}".format(c))

        print("Optimization Finished!")

        # Applying encode and decode over test set
        encode_decode = sess.run(
            y_pred, feed_dict={X: mnist.test.images[:examples_to_show]})
        # Compare original images with their reconstructions
        f, a = plt.subplots(2, 10, figsize=(10, 2))
        for i in range(examples_to_show):
            a[0][i].imshow(np.reshape(mnist.test.images[i], (28, 28)))
            a[1][i].imshow(np.reshape(encode_decode[i], (28, 28)))
        plt.show()

        # encoder_result = sess.run(encoder_op, feed_dict={X: mnist.test.images})
        # plt.scatter(encoder_result[:, 0], encoder_result[:, 1], c=mnist.test.labels)
        # plt.colorbar()
        # plt.show()
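
The shape bookkeeping in the listing above (784 → 256 → 128 → 256 → 784) and its mean-squared-error cost can be checked without TensorFlow. The sketch below mirrors the forward pass in plain NumPy with randomly initialised, untrained weights. The helper names (`sigmoid`, `init_layers`, `forward`) and the random stand-in batch are assumptions for illustration only; no training happens here.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_layers(sizes, rng):
    """One (weight, bias) pair per consecutive pair of layer sizes."""
    return [(rng.normal(size=(n_in, n_out)), rng.normal(size=n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def forward(x, layers):
    """Apply sigmoid(x @ W + b) layer by layer, as encoder() and decoder() do."""
    for w, b in layers:
        x = sigmoid(x @ w + b)
    return x

rng = np.random.default_rng(0)
encoder_layers = init_layers([784, 256, 128], rng)
decoder_layers = init_layers([128, 256, 784], rng)

# Stand-in for one batch of flattened MNIST images with pixels in [0, 1).
batch = rng.random(size=(256, 784))
codes = forward(batch, encoder_layers)            # compressed representation
reconstruction = forward(codes, decoder_layers)   # decompressed output
cost = np.mean((batch - reconstruction) ** 2)     # same formula as the cost op

print(codes.shape, reconstruction.shape, cost)
```

Because the final decoder layer is a sigmoid, the reconstruction lies in the same 0 to 1 range as the MNIST pixel values, which is why a plain squared error between input and output is a workable training target.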

Using an AutoEncoder for PCA-like dimensionality reduction

Code:

    # Written for TensorFlow 1.x (tf.placeholder, tf.Session).
    from __future__ import division, print_function, absolute_import

    import tensorflow as tf
    import numpy as np
    import matplotlib.pyplot as plt

    # Import MNIST data
    from tensorflow.examples.tutorials.mnist import input_data
    mnist = input_data.read_data_sets('MNIST_data', one_hot=False)

    """
    # Visualize decoder setting
    # Parameters
    learning_rate = 0.01
    training_epochs = 5
    batch_size = 256
    display_step = 1
    examples_to_show = 10

    # Network Parameters
    n_input = 784  # MNIST data input (img shape: 28*28)

    # tf Graph input (only pictures)
    X = tf.placeholder("float", [None, n_input])

    # hidden layer settings
    n_hidden_1 = 256  # 1st layer num features
    n_hidden_2 = 128  # 2nd layer num features
    weights = {
        'encoder_h1': tf.Variable(tf.random_normal([n_input, n_hidden_1])),
        'encoder_h2': tf.Variable(tf.random_normal([n_hidden_1, n_hidden_2])),
        'decoder_h1': tf.Variable(tf.random_normal([n_hidden_2, n_hidden_1])),
        'decoder_h2': tf.Variable(tf.random_normal([n_hidden_1, n_input])),
    }
    biases = {
        'encoder_b1': tf.Variable(tf.random_normal([n_hidden_1])),
        'encoder_b2': tf.Variable(tf.random_normal([n_hidden_2])),
        'decoder_b1': tf.Variable(tf.random_normal([n_hidden_1])),
        'decoder_b2': tf.Variable(tf.random_normal([n_input])),
    }

    # Building the encoder
    def encoder(x):
        # Encoder hidden layer with sigmoid activation #1
        layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['encoder_h1']),
                                       biases['encoder_b1']))
        # Encoder hidden layer with sigmoid activation #2
        layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['encoder_h2']),
                                       biases['encoder_b2']))
        return layer_2

    # Building the decoder
    def decoder(x):
        # Decoder hidden layer with sigmoid activation #1
        layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['decoder_h1']),
                                       biases['decoder_b1']))
        # Decoder hidden layer with sigmoid activation #2
        layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['decoder_h2']),
                                       biases['decoder_b2']))
        return layer_2
    """

    # Visualize encoder setting
    # Parameters
    learning_rate = 0.01    # 0.01 this learning rate will be better! Tested
    training_epochs = 10
    batch_size = 256
    display_step = 1

    # Network Parameters
    n_input = 784  # MNIST data input (img shape: 28*28)

    # tf Graph input (only pictures)
    X = tf.placeholder("float", [None, n_input])

    # hidden layer settings
    n_hidden_1 = 128
    n_hidden_2 = 64
    n_hidden_3 = 10
    n_hidden_4 = 2  # 2D show
    weights = {
        'encoder_h1': tf.Variable(tf.truncated_normal([n_input, n_hidden_1])),
        'encoder_h2': tf.Variable(tf.truncated_normal([n_hidden_1, n_hidden_2])),
        'encoder_h3': tf.Variable(tf.truncated_normal([n_hidden_2, n_hidden_3])),
        'encoder_h4': tf.Variable(tf.truncated_normal([n_hidden_3, n_hidden_4])),
        'decoder_h1': tf.Variable(tf.truncated_normal([n_hidden_4, n_hidden_3])),
        'decoder_h2': tf.Variable(tf.truncated_normal([n_hidden_3, n_hidden_2])),
        'decoder_h3': tf.Variable(tf.truncated_normal([n_hidden_2, n_hidden_1])),
        'decoder_h4': tf.Variable(tf.truncated_normal([n_hidden_1, n_input])),
    }
    biases = {
        'encoder_b1': tf.Variable(tf.random_normal([n_hidden_1])),
        'encoder_b2': tf.Variable(tf.random_normal([n_hidden_2])),
        'encoder_b3': tf.Variable(tf.random_normal([n_hidden_3])),
        'encoder_b4': tf.Variable(tf.random_normal([n_hidden_4])),
        'decoder_b1': tf.Variable(tf.random_normal([n_hidden_3])),
        'decoder_b2': tf.Variable(tf.random_normal([n_hidden_2])),
        'decoder_b3': tf.Variable(tf.random_normal([n_hidden_1])),
        'decoder_b4': tf.Variable(tf.random_normal([n_input])),
    }

    def encoder(x):
        layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['encoder_h1']),
                                       biases['encoder_b1']))
        layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['encoder_h2']),
                                       biases['encoder_b2']))
        layer_3 = tf.nn.sigmoid(tf.add(tf.matmul(layer_2, weights['encoder_h3']),
                                       biases['encoder_b3']))
        # No activation on the 2-D bottleneck, so the codes are unbounded
        layer_4 = tf.add(tf.matmul(layer_3, weights['encoder_h4']),
                         biases['encoder_b4'])
        return layer_4

    def decoder(x):
        layer_1 = tf.nn.sigmoid(tf.add(tf.matmul(x, weights['decoder_h1']),
                                       biases['decoder_b1']))
        layer_2 = tf.nn.sigmoid(tf.add(tf.matmul(layer_1, weights['decoder_h2']),
                                       biases['decoder_b2']))
        layer_3 = tf.nn.sigmoid(tf.add(tf.matmul(layer_2, weights['decoder_h3']),
                                       biases['decoder_b3']))
        layer_4 = tf.nn.sigmoid(tf.add(tf.matmul(layer_3, weights['decoder_h4']),
                                       biases['decoder_b4']))
        return layer_4

    # Construct model
    encoder_op = encoder(X)
    decoder_op = decoder(encoder_op)

    # Prediction
    y_pred = decoder_op
    # Targets (Labels) are the input data.
    y_true = X

    # Define loss and optimizer, minimize the squared error
    cost = tf.reduce_mean(tf.pow(y_true - y_pred, 2))
    optimizer = tf.train.AdamOptimizer(learning_rate).minimize(cost)

    # Launch the graph
    with tf.Session() as sess:
        # tf.initialize_all_variables() is no longer valid from
        # 2017-03-02 if using tensorflow >= 0.12
        if int((tf.__version__).split('.')[1]) < 12 and int((tf.__version__).split('.')[0]) < 1:
            init = tf.initialize_all_variables()
        else:
            init = tf.global_variables_initializer()
        sess.run(init)
        total_batch = int(mnist.train.num_examples/batch_size)
        # Training cycle
        for epoch in range(training_epochs):
            # Loop over all batches
            for i in range(total_batch):
                batch_xs, batch_ys = mnist.train.next_batch(batch_size)  # max(x) = 1, min(x) = 0
                # Run optimization op (backprop) and cost op (to get loss value)
                _, c = sess.run([optimizer, cost], feed_dict={X: batch_xs})
            # Display logs per epoch step
            if epoch % display_step == 0:
                print("Epoch:", '%04d' % (epoch+1),
                      "cost=", "{:.9f}".format(c))

        print("Optimization Finished!")

        # # Applying encode and decode over test set
        # encode_decode = sess.run(
        #     y_pred, feed_dict={X: mnist.test.images[:examples_to_show]})
        # # Compare original images with their reconstructions
        # f, a = plt.subplots(2, 10, figsize=(10, 2))
        # for i in range(examples_to_show):
        #     a[0][i].imshow(np.reshape(mnist.test.images[i], (28, 28)))
        #     a[1][i].imshow(np.reshape(encode_decode[i], (28, 28)))
        # plt.show()

        # Plot the 2-D codes of the test images, coloured by digit label
        encoder_result = sess.run(encoder_op, feed_dict={X: mnist.test.images})
        plt.scatter(encoder_result[:, 0], encoder_result[:, 1], c=mnist.test.labels)
        plt.colorbar()
        plt.show()

The result is displayed as follows:
