记一次cdh6.3.2版本spark写入phoniex的错误:Incompatible jars detected between client and server. Ensure that phoenix-[version]-server.jar is put on the classpath of HBase in every region server:

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.wanmi.sbc.dw.spark.app.RecommendAnalysisApp$$anonfun$run$2.apply(RecommendAnalysisApp.scala:33)

at com.wanmi.sbc.dw.spark.app.RecommendAnalysisApp$$anonfun$run$2.apply(RecommendAnalysisApp.scala:33)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at scala.collection.mutable.HashSet.foreach(HashSet.scala:78)

at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)

at scala.collection.mutable.AbstractSet.scala$collection$SetLike$$super$map(Set.scala:46)

at scala.collection.SetLike$class.map(SetLike.scala:92)

at scala.collection.mutable.AbstractSet.map(Set.scala:46)

at com.wanmi.sbc.dw.spark.app.RecommendAnalysisApp$.run(RecommendAnalysisApp.scala:33)

at com.wanmi.sbc.dw.spark.app.RecommendAnalysisApp.run(RecommendAnalysisApp.scala)

... 11 more

Caused by: java.sql.SQLException: ERROR 2006 (INT08): Incompatible jars detected between client and server. Ensure that phoenix-[version]-server.jar is put on the classpath of HBase in every region server: Can't find method newStub in org.apache.phoenix.coprocessor.generated.MetaDataProtos$MetaDataService!

at org.apache.phoenix.exception.SQLExceptionCode$Factory$1.newException(SQLExceptionCode.java:494)

at org.apache.phoenix.exception.SQLExceptionInfo.buildException(SQLExceptionInfo.java:150)

at org.apache.phoenix.query.ConnectionQueryServicesImpl.checkClientServerCompatibility(ConnectionQueryServicesImpl.java:1326)

at org.apache.phoenix.query.ConnectionQueryServicesImpl.ensureTableCreated(ConnectionQueryServicesImpl.java:1162)

at org.apache.phoenix.query.ConnectionQueryServicesImpl.createTable(ConnectionQueryServicesImpl.java:1501)

at org.apache.phoenix.query.DelegateConnectionQueryServices.createTable(DelegateConnectionQueryServices.java:119)

at org.apache.phoenix.schema.MetaDataClient.createTableInternal(MetaDataClient.java:2721)

at org.apache.phoenix.schema.MetaDataClient.createTable(MetaDataClient.java:1114)

at org.apache.phoenix.compile.CreateTableCompiler$1.execute(CreateTableCompiler.java:192)

at org.apache.phoenix.jdbc.PhoenixStatement$2.call(PhoenixStatement.java:408)

at org.apache.phoenix.jdbc.PhoenixStatement$2.call(PhoenixStatement.java:391)

at org.apache.phoenix.call.CallRunner.run(CallRunner.java:53)

at org.apache.phoenix.jdbc.PhoenixStatement.executeMutation(PhoenixStatement.java:390)

at org.apache.phoenix.jdbc.PhoenixStatement.executeMutation(PhoenixStatement.java:378)

at org.apache.phoenix.jdbc.PhoenixStatement.executeUpdate(PhoenixStatement.java:1806)

at org.apache.phoenix.query.ConnectionQueryServicesImpl$12.call(ConnectionQueryServicesImpl.java:2569)

at org.apache.phoenix.query.ConnectionQueryServicesImpl$12.call(ConnectionQueryServicesImpl.java:2532)

at org.apache.phoenix.util.PhoenixContextExecutor.call(PhoenixContextExecutor.java:76)

at org.apache.phoenix.query.ConnectionQueryServicesImpl.init(ConnectionQueryServicesImpl.java:2532)

at org.apache.phoenix.jdbc.PhoenixDriver.getConnectionQueryServices(PhoenixDriver.java:255)

at org.apache.phoenix.jdbc.PhoenixEmbeddedDriver.createConnection(PhoenixEmbeddedDriver.java:150)

at org.apache.phoenix.jdbc.PhoenixDriver.connect(PhoenixDriver.java:221)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:208)

at org.apache.phoenix.mapreduce.util.ConnectionUtil.getConnection(ConnectionUtil.java:113)

at org.apache.phoenix.mapreduce.util.ConnectionUtil.getInputConnection(ConnectionUtil.java:58)

at org.apache.phoenix.mapreduce.util.PhoenixConfigurationUtil.getSelectColumnMetadataList(PhoenixConfigurationUtil.java:354)

at org.apache.phoenix.spark.PhoenixRDD.toDataFrame(PhoenixRDD.scala:118)

at org.apache.phoenix.spark.SparkSqlContextFunctions.phoenixTableAsDataFrame(SparkSqlContextFunctions.scala:39)

at com.wanmi.sbc.dw.spark.recommend.db.PhoenixDb.select(PhoenixDb.scala:40)

at com.wanmi.sbc.dw.spark.recommend.CommonDimensionData.user_recommend_goods_data$lzycompute(CommonDimensionData.scala:222)

at com.wanmi.sbc.dw.spark.recommend.CommonDimensionData.user_recommend_goods_data(CommonDimensionData.scala:222)

at com.wanmi.sbc.dw.spark.recommend.CommonDimensionData.<init>(CommonDimensionData.scala:254)

at com.wanmi.sbc.dw.spark.recommend.DataAnalysis.<init>(DataAnalysis.scala:16)

at com.wanmi.sbc.dw.spark.recommend.hot.HotGoodsRecommend.<init>(HotGoodsRecommend.scala:19)

... 26 more

Caused by: java.lang.IllegalArgumentException: Can't find method newStub in org.apache.phoenix.coprocessor.generated.MetaDataProtos$MetaDataService!

at org.apache.hadoop.hbase.util.Methods.call(Methods.java:47)

at org.apache.hadoop.hbase.protobuf.ProtobufUtil.newServiceStub(ProtobufUtil.java:1537)

at org.apache.hadoop.hbase.client.HTable$12.call(HTable.java:996)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.NoSuchMethodException: org.apache.phoenix.coprocessor.generated.MetaDataProtos$MetaDataService.newStub(org.apache.hadoop.hbase.shaded.com.google.protobuf.RpcChannel)

at java.lang.Class.getMethod(Class.java:1786)

at org.apache.hadoop.hbase.util.Methods.call(Methods.java:39)

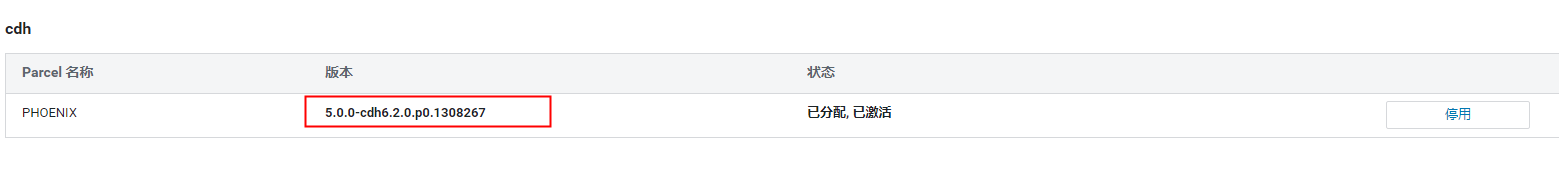

使用parall包安装的phoniex

在cdh集群执行spark读取phoniex数据是报上面错误

spark-shell --jars hdfs://hdfs地址:8020/spark/yarn/phoenix-core-5.0.0-HBase-2.0.jar,hdfs://hdfs地址:8020/spark/yarn/phoenix-spark-5.0.0-HBase-2.0.jar,hdfs://hdfs地址:8020/spark/yarn/hppc-0.7.2.jar,hdfs://hdfs地址:8020/spark/yarn/htrace-core-3.0.4.jar,hdfs://hdfs地址:8020/spark/yarn/htrace-core-3.1.0-incubating.jar,hdfs://hdfs地址:8020/spark/yarn/htrace-core-3.2.0-incubating.jar,hdfs://hdfs地址:8020/spark/yarn/htrace-core4-4.2.0-incubating.jar,hdfs://hdfs地址:8020/spark/yarn/tephra-api-0.6.0.jar,hdfs://hdfs地址:8020/spark/yarn/tephra-core-0.6.0.jar,hdfs://hdfs地址:8020/spark/yarn/tephra-api-0.14.0-incubating.jar,hdfs://hdfs地址:8020/spark/yarn/tephra-core-0.14.0-incubating.jar,hdfs://hdfs地址:8020/spark/yarn/twill-zookeeper-0.8.0.jar,hdfs://hdfs地址:8020/spark/yarn/twill-api-0.8.0.jar,hdfs://hdfs地址:8020/spark/yarn/twill-common-0.8.0.jar,hdfs://hdfs地址:8020/spark/yarn/twill-core-0.8.0.jar,hdfs://hdfs地址:8020/spark/yarn/twill-discovery-api-0.8.0.jar,hdfs://hdfs地址:8020/spark/yarn/twill-discovery-core-0.8.0.jar,hdfs://hdfs地址:8020/spark/yarn/disruptor-3.3.6.jar,hdfs://hdfs地址:8020/spark/yarn/antlr4-runtime-4.7.jar,hdfs://hdfs地址:8020/spark/yarn/antlr-2.7.7.jar,hdfs://hdfs地址:8020/spark/yarn/antlr-runtime-3.4.jar,hdfs://hdfs地址:8020/spark/yarn/antlr-runtime-3.5.2.jar

使用idea开发环境没问题

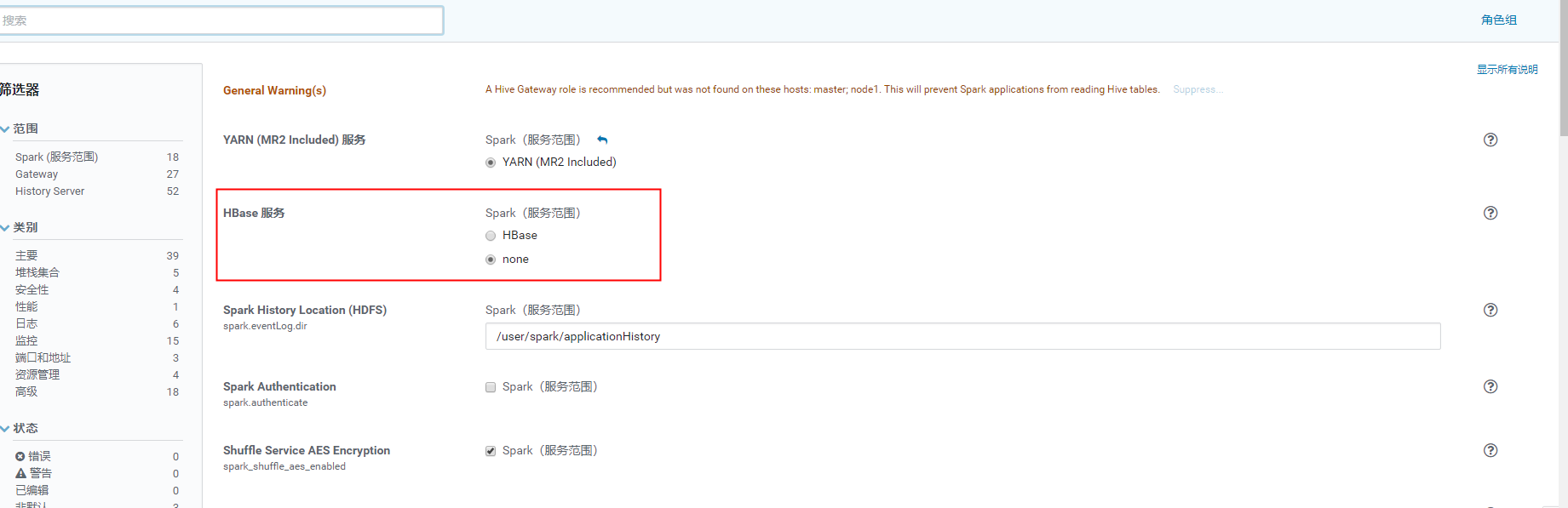

排查了1天,最大可能是cdh的 hbase的jar包依赖冲突的问题

选择将hbase服务依赖去掉,spark启动不加载hbase的classpath

将hbase相关jar copy到spark的classpath ,在运行代码成功了

cp $HBASE_HOME/lib/hbase-* $SPARK_HOME/jars

val df= spark.read.format("org.apache.phoenix.spark").options(Map("table" -> "RECOMMEND.HOT_GOODS_RECOMMEND", "zkUrl" -> "master:2181", "skipNormalizingIdentifier" -> "true")).load()

成功读取

太南了!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

记一次cdh6.3.2版本spark写入phoniex的错误:Incompatible jars detected between client and server. Ensure that phoenix-[version]-server.jar is put on the classpath of HBase in every region server:的更多相关文章

- Hbase Region Server整体架构

Region Server的整体架构 本文主要介绍Region的整体架构,后续再慢慢介绍region的各部分具体实现和源码 RegionServer逻辑架构图 RegionServer职责 1. ...

- habse Region server挂掉

2019-04-28 15:57:28,355 INFO org.apache.hadoop.hbase.regionserver.HeapMemoryManager: heapOccupancyPe ...

- hbase源码系列(三)Client如何找到正确的Region Server

客户端在进行put.delete.get等操作的时候,它都需要数据到底存在哪个Region Server上面,这个定位的操作是通过HConnection.locateRegion方法来完成的. loc ...

- region xx not deployed on any region server

ERROR: Region { meta => month_hotstatic,860010-2288000000_201405_5_exit_00000047486,1400144486405 ...

- 三 Client 如何找到正确的 Region Server

客户端在进行put.delete.get等操作的时候,它都需要数据到底存在哪个Region Server上面,这个定位的操作是通过 Connection.locateRegion方法来完成的. loc ...

- 码农飞升记-02-OracleJDK是什么?OracleJDK的版本怎么选择?

目录 1.Oracle JDK 是什么? 2.Oracle JDK 版本如何选择? 1.Java SE 发布节奏以及不同版本的差距 1.Java SE 8 以及之前版本的发布节奏和不同版本的差距 1. ...

- The server does not support version 3.0 of the J2EE Web module specification

1.错误: 在eclipse中使用run->run on server的时候,选择tomcat6会报错误:The server does not support version 3.0 of t ...

- Solution(项目部署):The server does not support version 3.0 of the J2EE Web module specification

1.错误: 在eclipse中使用run->run on server的时候,选择tomcat6会报错误:The server does not support version 3.0 of t ...

- HBase原理–所有Region切分的细节都在这里了

本文由 网易云发布. 作者:范欣欣(本篇文章仅限内部分享,如需转载,请联系网易获取授权.) Region自动切分是HBase能够拥有良好扩张性的最重要因素之一,也必然是所有分布式系统追求无限 ...

- SQL Server ->> Memory Allocation Mechanism and Performance Analysis(内存分配机制与性能分析)之 -- Minimum server memory与Maximum server memory

Minimum server memory与Maximum server memory是SQL Server下配置实例级别最大和最小可用内存(注意不等于物理内存)的服务器配置选项.它们是管理SQL S ...

随机推荐

- 外部工具连接SaaS模式云数据仓库MaxCompute实战——商业BI分析工具篇

简介: MaxCompute 是面向分析的企业级 SaaS 模式云数据仓库,以 Serverless 架构提供快速.全托管的在线数据仓库服务,消除了传统数据平台在资源扩展性和弹性方面的限制,最小化用户 ...

- 技术干货 | jsAPI 方式下的导航栏的动态化修改

简介: 操作指导:通过 jsAPI 实现导航栏的动态修改. 很多开发同学在接入 H5 容器后都会对容器的导航栏进行深度定制,除了 Native 的定制化之外,还有很多场景是使用到 jsAPI 的 ...

- Quick BI V4.0功能“炸弹”来袭,重磅推出即席分析、模板市场、企业微信免密登录等强势功能

简介: 2021年7月,Quick BI公共云版本迭代新功能:重磅推出即席分析.模板市场,分析门槛再降低:推出企业微信无缝对接,移动端类目个性配置及管理提升多端能力:数据建模配置交互升级至拖拽模式提升 ...

- [TP5] ThinkPHP 默认模块和单模块的设置方式

由于默认是采用多模块的支持,所以多个模块的情况下必须在URL地址中标识当前模块, 如果只有一个模块的话,可以进行模块绑定,方法是应用的入口文件中添加如下代码: // 绑定当前访问到index模块 de ...

- VisualStudio 禁用移动文件到文件夹自动修改命名空间功能

在 VisualStudio 2022 里的某个版本开始,将会在移动文件到其他文件夹时,自动修改命名空间,使用匹配文件夹路径的命名空间.如果这个功能能顺手将其他引用此类型的全部符号同时变更,那自然是很 ...

- Fast Möbius Transform 学习笔记 | FMT

小 Tips:在计算机语言中 \(\cap\) = & / and, \(\cup\) = | / or First. 定义 定义长度为 \(2^n\) 的序列的 and 卷积 \(A = B ...

- 异构数据源同步之表结构同步 → 通过 jdbc 实现,没那么简单

开心一刻 今天坐沙发上看电视,旁边的老婆拿着手机贴了过来 老婆:老公,这次出门旅游,机票我准备买了哈 我:嗯 老婆:你.我.你爸妈.我爸妈,一共六张票 老婆:这上面还有意外保险,要不要买? 我:都特么 ...

- gin框架获取参数

目录 httpext包 获取header头里的参数: scene := httpext.GetHeaderByName(ctx, "scene") // online / dev ...

- 04 elasticsearch学习笔记-Rest风格说明

目录 Rest风格说明 关于文档的基本操作 添加数据PUT 查询 修改文档 删除索引或者文档 Rest风格说明 Rest风格说明 method url地址 描述 PUT localhost:9200/ ...

- cesium基础知识汇总PPT版

以上教程来自火星科技,原视频教程地址如下: https://ke.qq.com/course/468292/3985600802137412#term_id=100560563