LOGIT REGRESSION

Version info: Code for this page was tested in SPSS 20.

Logistic regression, also called a logit model, is used to model dichotomous outcome variables. In the logit model the log odds of the outcome is modeled as a linear combination of the predictor variables.
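
Written out, the model described above has the following form. The notation is our own and is not part of the original page: p is the probability that the outcome equals 1, x_1 through x_k are the predictors, and the betas are the coefficients to be estimated.

$$
\log\left(\frac{p}{1-p}\right) = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_k x_k
$$

The left-hand side is the log odds (logit) of the outcome, so each coefficient describes a change on the log odds scale rather than on the probability scale.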

Please note: The purpose of this page is to show how to use various data analysis commands. It does not cover all aspects of the research process that researchers are expected to carry out. In particular, it does not cover data cleaning and checking, verification of assumptions, model diagnostics, or potential follow-up analyses.

Examples

Example 1: Suppose that we are interested in the factors that influence whether a political candidate wins an election. The outcome (response) variable is binary (0/1): win or lose. The predictor variables of interest are the amount of money spent on the campaign, the amount of time spent campaigning negatively, and whether or not the candidate is an incumbent.

Example 2: A researcher is interested in how variables such as GRE (Graduate Record Exam) scores, GPA (grade point average), and prestige of the undergraduate institution affect admission into graduate school. The response variable, admit/don’t admit, is a binary variable.

Description of the data

For our data analysis below, we are going to expand on Example 2 about getting into graduate school. We have generated hypothetical data, which can be obtained from our website by clicking on binary.sav. You can store this anywhere you like, but the syntax below assumes it has been stored in the directory c:\data. This dataset has a binary response (outcome, dependent) variable called admit, which is equal to 1 if the individual was admitted to graduate school, and 0 otherwise. There are three predictor variables: gre, gpa, and rank. We will treat the variables gre and gpa as continuous. The variable rank takes on the values 1 through 4. Institutions with a rank of 1 have the highest prestige, while those with a rank of 4 have the lowest. We start out by opening the dataset and looking at some descriptive statistics.

get file = "c:\data\binary.sav".

descriptives /variables=gre gpa.

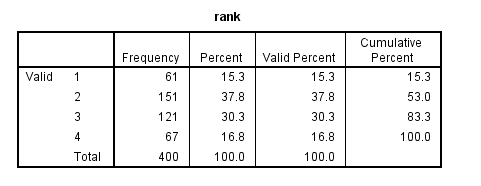

frequencies /variables = rank.

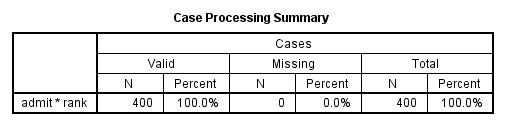

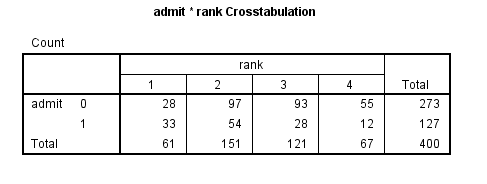

crosstabs /tables = admit by rank.

Analysis methods you might consider

Below is a list of some analysis methods you may have encountered. Some of the methods listed are quite reasonable while others have either fallen out of favor or have limitations.

- Logistic regression, the focus of this page.

- Probit regression. Probit analysis will produce results similar to logistic regression. The choice of probit versus logit depends largely on individual preferences.

- OLS regression. When used with a binary response variable, this model is known as a linear probability model and can be used as a way to describe conditional probabilities. However, the errors (i.e., residuals) from the linear probability model violate the homoskedasticity and normality of errors assumptions of OLS regression: for a binary outcome with predicted probability p, the error can take only two values and its variance, p(1 - p), changes with p. This results in invalid standard errors and hypothesis tests. For a more thorough discussion of these and other problems with the linear probability model, see Long (1997, pp. 38-40).

- Two-group discriminant function analysis. A multivariate method for dichotomous outcome variables.

- Hotelling’s T². The 0/1 outcome is turned into the grouping variable, and the former predictors are turned into outcome variables. This will produce an overall test of significance but will not give individual coefficients for each variable, and it is unclear the extent to which each "predictor" is adjusted for the impact of the other "predictors."

Logistic regression

Below we use the logistic regression command to run a model predicting the outcome variable admit, using gre, gpa, and rank. The categorical option specifies that rank is a categorical rather than continuous variable. The output is shown in sections, each of which is discussed below.

logistic regression admit with gre gpa rank

/categorical = rank.

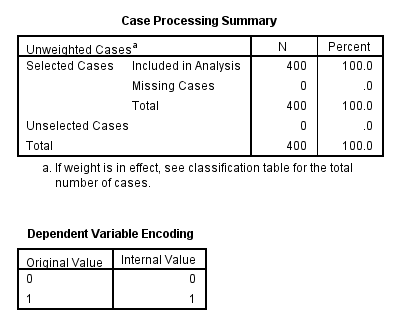

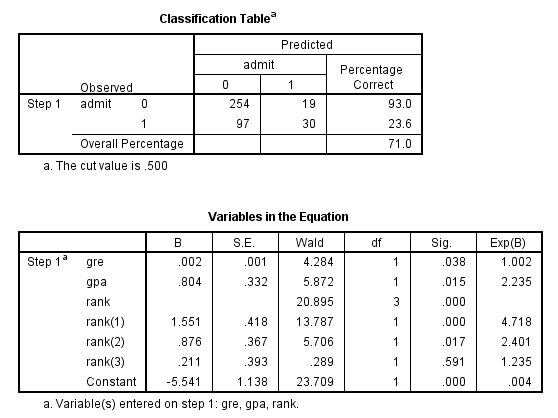

The first table above shows a breakdown of the number of cases used and not used in the analysis. The second table above gives the coding for the outcome variable, admit.

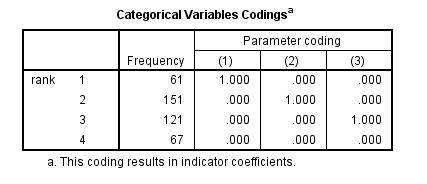

The table above shows how the values of the categorical variable rank were handled. There are terms (essentially dummy variables) in the model for rank=1, rank=2, and rank=3; rank=4 is the omitted category.

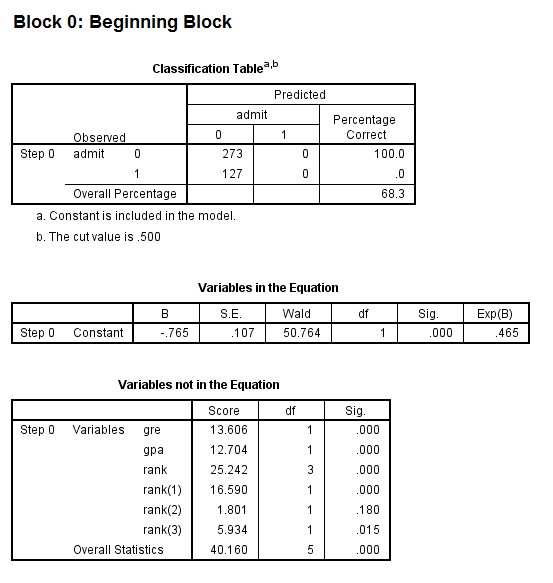

- The first model in the output is a null model, that is, a model with no predictors.

- The constant in the table labeled Variables in the Equation gives the unconditional log odds of admission (i.e., admit=1).

- The table labeled Variables not in the Equation gives the results of a score test, also known as a Lagrange multiplier test. The column labeled Score gives the estimated change in model fit if the term is added to the model. The other two columns give the degrees of freedom and the p-value (labeled Sig.) for the estimated change. Based on the table above, all three of the predictors, gre, gpa, and rank, are expected to improve the fit of the model.
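
To make the idea of "unconditional log odds" concrete, here is a minimal Python sketch (Python is not part of the original SPSS workflow). The counts are hypothetical and chosen only for illustration; they are not taken from binary.sav. In a model with no predictors, the constant is simply the log odds of the overall proportion with admit=1.

```python
import math

def log_odds(p):
    """Convert a probability to log odds (the logit)."""
    return math.log(p / (1.0 - p))

def probability(logit):
    """Convert log odds back to a probability (the inverse logit)."""
    return 1.0 / (1.0 + math.exp(-logit))

# Hypothetical counts, for illustration only (NOT from binary.sav).
n_admitted = 30
n_total = 100

p_admit = n_admitted / n_total

# The constant of a null (intercept-only) logit model estimates this quantity.
null_constant = log_odds(p_admit)

print(f"proportion admitted: {p_admit:.3f}")
print(f"unconditional log odds: {null_constant:.3f}")
print(f"back-transformed probability: {probability(null_constant):.3f}")
```

A negative constant therefore means that fewer than half of the cases have admit=1, and applying the inverse logit to the constant recovers the observed proportion.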

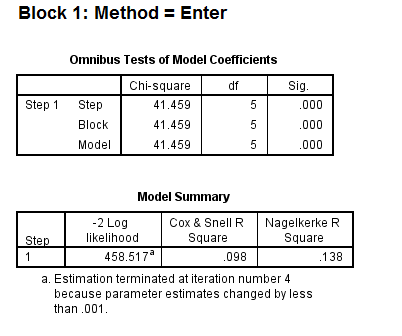

- The first table above gives the overall test for the model that includes the predictors. The chi-square value of 41.46 with a p-value of less than 0.0005 tells us that our model as a whole fits significantly better than an empty model (i.e., a model with no predictors).

- The -2*log likelihood (458.517) in the Model Summary table can be used in comparisons of nested models, but we won’t show an example of that here. This table also gives two measures of pseudo R-square.

- In the table labeled Variables in the Equation we see the coefficients, their standard errors, the Wald test statistic with associated degrees of freedom and p-values, and the exponentiated coefficient (also known as an odds ratio). Both gre and gpa are statistically significant. The overall (i.e., multiple degree of freedom) test for rank is given first, followed by the terms for rank=1, rank=2, and rank=3. The overall effect of rank is statistically significant, as are the terms for rank=1 and rank=2. The logistic regression coefficients give the change in the log odds of the outcome for a one unit increase in the predictor variable.

- For every one unit change in gre, the log odds of admission (versus non-admission) increases by 0.002.

- For a one unit increase in gpa, the log odds of being admitted to graduate school increases by 0.804.

- The indicator variables for rank have a slightly different interpretation. For example, having attended an undergraduate institution with rank of 1, versus an institution with a rank of 4, increases the log odds of admission by 1.551.
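
The exponentiated coefficients (odds ratios) mentioned above can be reproduced by hand from the log odds coefficients. The short Python sketch below is an illustration, not part of the SPSS output. It uses the rounded coefficients quoted in the text, so the results may differ slightly from the values SPSS computes from unrounded estimates. The 100-point change in gre is our own choice of example.

```python
import math

# Coefficients on the log odds scale, as quoted (rounded) in the text above.
coefficients = {
    "gre": 0.002,
    "gpa": 0.804,
    "rank=1 vs rank=4": 1.551,
}

def odds_ratio(coef, units=1.0):
    """Multiplicative change in the odds for a change of `units` in the predictor."""
    return math.exp(coef * units)

# Odds ratio for a one unit increase in each predictor.
odds_ratios = {name: odds_ratio(b) for name, b in coefficients.items()}
for name, value in odds_ratios.items():
    print(f"{name}: odds ratio = {value:.3f}")

# A one-point change in gre is tiny, so a larger step can be easier to read.
# The choice of 100 points is an assumption made for illustration.
gre_100 = odds_ratio(coefficients["gre"], units=100)
print(f"gre, 100-point increase: odds ratio = {gre_100:.3f}")
```

An odds ratio above 1 means the odds of admission increase as the predictor increases. For the rank indicators, the comparison is always with the omitted category, rank=4.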

Things to consider

- Empty cells or small cells: You should check for empty or small cells by doing a crosstab between categorical predictors and the outcome variable. If a cell has very few cases (a small cell), the model may become unstable or it might not run at all.

- Separation or quasi-separation (also called perfect prediction): This is a condition in which the outcome does not vary at some levels of the independent variables. See our page FAQ: What is complete or quasi-complete separation in logistic/probit regression and how do we deal with them? for information on models with perfect prediction.

- Sample size: Both logit and probit models require more cases than OLS regression because they use maximum likelihood estimation techniques. It is also important to keep in mind that when the outcome is rare, even if the overall dataset is large, it can be difficult to estimate a logit model.

- Pseudo-R-squared: Many different measures of pseudo-R-squared exist. They all attempt to provide information similar to that provided by R-squared in OLS regression; however, none of them can be interpreted exactly as R-squared in OLS regression is interpreted. For a discussion of various pseudo-R-squareds see Long and Freese (2006) or our FAQ page What are pseudo R-squareds?

- Diagnostics: The diagnostics for logistic regression are different from those for OLS regression. For a discussion of model diagnostics for logistic regression, see Hosmer and Lemeshow (2000, Chapter 5). Note that diagnostics done for logistic regression are similar to those done for probit regression.
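
The empty-cell check in the first item above amounts to counting cases in every combination of a categorical predictor and the outcome, which is what the crosstabs command shown earlier does in SPSS. The Python sketch below shows the same logic. The records, the function name small_cells, and the min_count threshold are all hypothetical and introduced here for illustration only.

```python
from collections import Counter

# Hypothetical (rank, admit) pairs, for illustration only (NOT from binary.sav).
records = [
    (1, 1), (1, 0),
    (2, 1), (2, 0), (2, 0),
    (3, 0), (3, 1),
    (4, 0), (4, 0),
]

def small_cells(pairs, predictor_levels, outcome_levels, min_count):
    """Return every predictor-by-outcome cell with fewer than min_count cases."""
    counts = Counter(pairs)  # missing cells count as 0
    return {
        (level, outcome): counts[(level, outcome)]
        for level in predictor_levels
        for outcome in outcome_levels
        if counts[(level, outcome)] < min_count
    }

# min_count=1 flags only empty cells; a larger threshold flags small cells too.
flagged = small_cells(
    records,
    predictor_levels=[1, 2, 3, 4],
    outcome_levels=[0, 1],
    min_count=1,
)
print(flagged)
```

Listing the levels explicitly matters: a cell with no cases never appears in the data, so it can only be detected by checking every expected combination.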

See also

- Annotated Output for Logistic Regression

- Textbook Example: Applied Logistic Regression (2nd Edition) by David Hosmer and Stanley Lemeshow

- SPSS Frequently Asked Questions

- SPSS Code Fragments

- Stat Books for Loan, Logistic Regression and Limited Dependent Variables

References

- Hosmer, D. & Lemeshow, S. (2000). Applied Logistic Regression (Second Edition). New York: John Wiley & Sons, Inc.

- Long, J. S. (1997). Regression Models for Categorical and Limited Dependent Variables. Thousand Oaks, CA: Sage Publications.
