Repost: Why Using Single Root I/O Virtualization (SR-IOV) Can Help Improve I/O Performance and Reduce Costs

Introduction

While server virtualization is being widely deployed to reduce costs and optimize data center resource usage, another key area where virtualization can shine is I/O performance and its role in enabling more efficient application execution. The Single Root I/O Virtualization (SR-IOV) specification, introduced by the PCI-SIG, is a step toward making virtualization easier to implement within the PCI bus itself. SR-IOV adds definitions to the PCI Express® (PCIe®) specification that enable multiple Virtual Machines (VMs) to share PCI hardware resources.

Using virtualization provides several important benefits to system designers:

- It makes it possible to run a large number of virtual machines per server, which reduces the need for hardware and the resultant costs of space and power required by hardware devices

- It makes it possible to start, stop, add, or remove servers independently, increasing flexibility and scalability

- It adds the capability to run different operating systems on the same host machine, again reducing the need for discrete hardware

In this paper, we explore why building systems natively on SR-IOV-enabled hardware may be the most cost-effective way to improve I/O performance, and how to implement SR-IOV easily in PCIe devices.

Traditional Virtualized System Overview

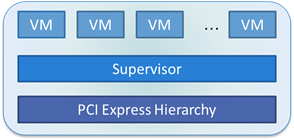

A virtualized system (Figure 1) consists of:

- Several virtual machines (VMs)

- A supervisor, also referred to as the Virtual Machine Manager (VMM)

- A PCI Express hierarchy

Within this virtualized system, the Supervisor plays a crucial role: it provides the interface between the hardware and the virtual machines, is responsible for security, and ensures that virtual machines cannot interact with one another.

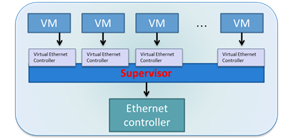

Any system can be virtualized without dedicated SR-IOV technology. In a more traditional virtualization scenario, the Supervisor must emulate virtual devices and perform resource sharing on their behalf, for example by instantiating a virtual Ethernet controller for each virtual machine (Figure 2). Because every I/O request has to pass through the Supervisor's software, this creates an I/O bottleneck and often results in poor performance. It also forces a tradeoff between the number of virtual machines a physical server can realistically support and the system's I/O performance: adding more VMs aggravates the bottleneck.

Providing a Better Way – The Benefits of SR-IOV Hardware Implementation

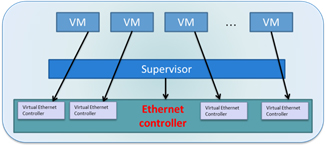

Designing systems with hardware that incorporates SR-IOV support allows virtual devices to be implemented in hardware and enables resource sharing to be handled by a PCI Express® device such as an Ethernet controller (Figure 3).

The benefit of SR-IOV over more traditional network virtualization is that the VM talks directly to the network adapter through Direct Memory Access (DMA). Using DMA allows the VM to bypass virtualization transports such as the VM Bus and avoids any processing in the management partition. Because traffic no longer passes through a virtual switch, the system attains the best possible performance, close to “bare-metal” performance.

Implementing SR-IOV in an Adapter Card

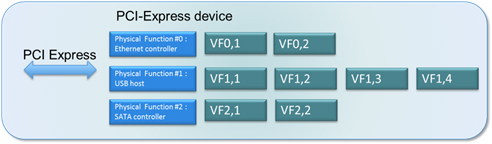

The SR-IOV specification enables a hardware provider to modify their PCI card so that it presents itself to a VMM as multiple independent devices. To achieve this, the SR-IOV architecture distinguishes two types of functions (Figure 4):

Physical Functions (PFs):

Physical Functions (PFs) are full-featured PCIe functions: they are discovered, managed, and manipulated like any other PCIe device, and they have a full configuration space. The PCIe device can be configured and controlled through a PF, and the PF can also move data in and out of the device. Each PCI Express device can have from one (1) up to eight (8) PFs. Each PF is independent and is seen by software as a separate PCI Express device, which allows several devices to be integrated in the same chip and makes software development easier and less costly.

Virtual Functions (VFs):

Virtual Functions (VFs) are “lightweight” PCIe functions designed solely to move data in and out. Each VF is attached to an underlying PF, and each PF can have zero (0) or more VFs. PFs within the same device can each have a different number of VFs. While VFs are similar to PFs, they intentionally have a reduced configuration space because they inherit most of their settings from their PF.

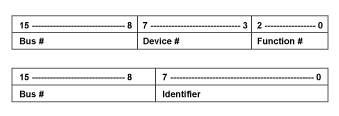

To implement SR-IOV effectively, the host must be able to access the configuration space of each VF.

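With traditional routing, the 16-bit Routing ID is split into an 8-bit bus number, a 5-bit device number, and a 3-bit function number. Because the device number is always “0” in PCIe, a device can expose at most 8 functions.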

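Alternate routing (Alternative Routing-ID Interpretation, ARI) therefore reinterprets the same standard fields to extend the number of functions: the device and function fields are merged into a single 8-bit function number, allowing up to 256 functions (Figure 5).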
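To make the difference between traditional routing and alternate routing concrete, the Python sketch below splits a 16-bit Routing ID both ways, assuming the standard PCIe layout: bus number in bits 15:8, then either a 5-bit device number and a 3-bit function number (traditional) or a single 8-bit function number (Alternative Routing-ID Interpretation, ARI). The helper names are illustrative, not part of any library.

```python
from __future__ import annotations


def decode_routing_id(rid: int, ari: bool = False) -> tuple[int, int, int]:
    """Split a 16-bit PCIe Routing ID into (bus, device, function)."""
    if not 0 <= rid <= 0xFFFF:
        raise ValueError("a Routing ID is 16 bits wide")
    bus = rid >> 8
    if ari:
        # ARI: the device field disappears and all 8 low bits are the function.
        return bus, 0, rid & 0xFF
    # Traditional: 5-bit device number, 3-bit function number.
    return bus, (rid >> 3) & 0x1F, rid & 0x07


def functions_reachable_on_bus(ari: bool) -> int:
    """Count Routing IDs on one bus that land on device 0, the only
    device number a PCIe endpoint answers to."""
    return sum(1 for low in range(256) if decode_routing_id(low, ari)[1] == 0)


print(decode_routing_id(0x0312))            # (3, 2, 2)
print(decode_routing_id(0x0312, ari=True))  # (3, 0, 18)
print(functions_reachable_on_bus(ari=False), functions_reachable_on_bus(ari=True))  # 8 256
```

The count of 8 versus 256 is exactly the limit described above: without ARI, only Routing IDs whose device bits are zero reach the endpoint.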

In addition, SR-IOV extends this further by allowing the use of several consecutive bus numbers for a single device, enabling more than 256 functions.
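Each VF's Routing ID follows from two fields the PF advertises in its SR-IOV capability, First VF Offset and VF Stride: VF n (counting from 1) sits at PF Routing ID + First VF Offset + (n - 1) × VF Stride, modulo 2^16. The Python sketch below applies that rule. The offset, stride, and VF count in the example are hypothetical values chosen to show VFs spilling onto a second bus number, which the host must reserve when it enumerates the hierarchy.

```python
from __future__ import annotations


def vf_routing_ids(pf_rid: int, first_vf_offset: int, vf_stride: int,
                   num_vfs: int) -> list[int]:
    """Routing IDs of VF 1..num_vfs, per the First VF Offset / VF Stride rule."""
    return [
        (pf_rid + first_vf_offset + (n - 1) * vf_stride) & 0xFFFF
        for n in range(1, num_vfs + 1)
    ]


def bus_numbers(rids: list[int]) -> list[int]:
    """Distinct bus numbers (bits 15:8) that a set of Routing IDs occupies."""
    return sorted({rid >> 8 for rid in rids})


# Hypothetical device: PF 0 at bus 1 (Routing ID 0x0100), VFs start 8 IDs
# after the PF, consecutive VFs are 1 ID apart, and 300 VFs are enabled.
rids = vf_routing_ids(pf_rid=0x0100, first_vf_offset=8, vf_stride=1, num_vfs=300)
print(hex(rids[0]), hex(rids[-1]))  # 0x108 0x233
print(bus_numbers(rids))            # [1, 2]
```

With 300 VFs the IDs run past 0x01FF, so the device occupies bus numbers 1 and 2, which is how SR-IOV exceeds the 256-function limit of a single bus.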

VFs are lightweight functions, and their configuration space differs significantly from that of PFs. The main differences are:

- Most registers are hardwired: they read as “0”, “1”, or the same value as their PF.

- No Base Address Registers (BARs) are implemented.

- Only a few RW or RWC registers are implemented in each VF:

  - A few PCI/PCI Express “enable” and “status” bits

  - MSI/MSI-X registers

  - Optionally, some capability registers such as AER

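To give access to the VF memory spaces, the PF's SR-IOV capability implements up to 6 VF BARs. They are similar to normal BARs, except that each VF BAR's settings apply to all VFs: the VF BAR describes the region of a single VF, and the regions of all VFs are placed back to back starting at the programmed address.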
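As a rough illustration of how one VF BAR serves every VF, the Python sketch below assumes the layout the SR-IOV specification uses: sizing a VF BAR yields the size of a single VF's region, and the regions for VF 1 through NumVFs are packed back to back starting at the address software programs into that VF BAR. The base address and sizes in the example are hypothetical.

```python
from __future__ import annotations


def _check_size(per_vf_size: int) -> None:
    # Like ordinary BARs, VF BAR sizes are powers of two.
    if per_vf_size <= 0 or per_vf_size & (per_vf_size - 1):
        raise ValueError("BAR sizes are powers of two")


def vf_bar_aperture(per_vf_size: int, num_vfs: int) -> int:
    """Total address space one VF BAR claims: one region per enabled VF."""
    _check_size(per_vf_size)
    return per_vf_size * num_vfs


def vf_bar_region(vf_bar_base: int, per_vf_size: int, num_vfs: int,
                  vf: int) -> tuple[int, int]:
    """[start, end) of VF number `vf` (1-based) inside a VF BAR aperture."""
    _check_size(per_vf_size)
    if not 1 <= vf <= num_vfs:
        raise ValueError("VF numbers run from 1 to NumVFs")
    start = vf_bar_base + (vf - 1) * per_vf_size
    return start, start + per_vf_size


# Hypothetical: 16 KiB per VF, 64 VFs, VF BAR0 programmed to 0xF0000000.
print(hex(vf_bar_aperture(0x4000, 64)))  # 0x100000 (1 MiB)
print([hex(a) for a in vf_bar_region(0xF0000000, 0x4000, 64, 64)])
# ['0xf00fc000', '0xf0100000']
```

This is why the host only programs one address per VF BAR: the per-VF addresses follow arithmetically, and the aperture grows linearly with the number of enabled VFs.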

During initial SR-IOV setup and initialization, VFs are not enabled and are invisible. The Supervisor then detects the device and configures the PFs. If the host system and device driver detect the SR-IOV capability, they then:

- Configure the number of VFs

- Assign addresses to VF BARs

- Enable VFs
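The three steps above map onto a handful of registers in the PF's SR-IOV Extended Capability. The Python sketch below runs them against an in-memory stand-in for that capability (`FakeSriovCap` is hypothetical). The register offsets and control bits match the `PCI_SRIOV_*` constants in Linux `pci_regs.h`, but a real driver would use the operating system's configuration-space accessors, handle 64-bit VF BARs, and set the System Page Size, all of which this sketch leaves out.

```python
from __future__ import annotations

import struct

# Offsets within the SR-IOV Extended Capability (same values as the
# PCI_SRIOV_* constants in Linux include/uapi/linux/pci_regs.h).
SRIOV_CTRL = 0x08
SRIOV_TOTAL_VF = 0x0E
SRIOV_NUM_VF = 0x10
SRIOV_VF_BAR0 = 0x24      # VF BAR0..VF BAR5 are 4 bytes apart
CTRL_VF_ENABLE = 0x0001   # VF Enable
CTRL_VF_MSE = 0x0008      # VF Memory Space Enable


class FakeSriovCap:
    """Hypothetical in-memory model of a PF's SR-IOV capability registers."""

    def __init__(self, total_vfs: int) -> None:
        self.regs = bytearray(0x40)
        struct.pack_into("<H", self.regs, SRIOV_TOTAL_VF, total_vfs)

    def read16(self, off: int) -> int:
        return struct.unpack_from("<H", self.regs, off)[0]

    def write16(self, off: int, val: int) -> None:
        struct.pack_into("<H", self.regs, off, val)

    def read32(self, off: int) -> int:
        return struct.unpack_from("<I", self.regs, off)[0]

    def write32(self, off: int, val: int) -> None:
        struct.pack_into("<I", self.regs, off, val)


def enable_sriov(cap: FakeSriovCap, num_vfs: int, vf_bar_addrs: list[int]) -> None:
    """Configure NumVFs, program 32-bit VF BARs, then enable the VFs."""
    ctrl = cap.read16(SRIOV_CTRL)
    if ctrl & CTRL_VF_ENABLE:
        raise RuntimeError("VFs are already enabled; disable them first")
    total = cap.read16(SRIOV_TOTAL_VF)
    if not 1 <= num_vfs <= total:
        raise ValueError(f"NumVFs must be between 1 and TotalVFs ({total})")
    if len(vf_bar_addrs) > 6:
        raise ValueError("the capability has at most 6 VF BARs")

    # 1. Configure the number of VFs (NumVFs may only change while VF Enable is clear).
    cap.write16(SRIOV_NUM_VF, num_vfs)
    # 2. Assign an address to each VF BAR; each one covers num_vfs regions.
    for i, addr in enumerate(vf_bar_addrs):
        cap.write32(SRIOV_VF_BAR0 + 4 * i, addr)
    # 3. Enable the VFs and let them decode memory accesses.
    cap.write16(SRIOV_CTRL, ctrl | CTRL_VF_ENABLE | CTRL_VF_MSE)


cap = FakeSriovCap(total_vfs=64)
enable_sriov(cap, num_vfs=64, vf_bar_addrs=[0xF0000000])
print(cap.read16(SRIOV_NUM_VF), hex(cap.read16(SRIOV_CTRL)))  # 64 0x9
```

The ordering matters: NumVFs and the VF BARs are programmed first, and VF Enable is set last, which is the point at which the VFs become visible to software.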

Once set up, each VM can be assigned a virtual device and can access it directly through its VF driver. Each VM needs at least one VF; otherwise the Supervisor must perform some or all of the sharing management, reducing the benefits of SR-IOV. The current market trend is 64 VMs per server, so an SR-IOV-capable device should support 64 VFs.
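On a Linux host, the PF driver usually exposes this choice through sysfs: `sriov_totalvfs` reports how many VFs the device supports, and writing a count to `sriov_numvfs` enables that many. The Python sketch below applies the one-VF-per-VM rule against those two files. The device path in the docstring is a hypothetical example, writing requires root and a PF driver with SR-IOV support, and because the kernel refuses to change a non-zero VF count in place, the sketch writes 0 first.

```python
from pathlib import Path


def provision_vfs(device_dir: str, num_vms: int) -> int:
    """Enable one VF per VM on the PF at `device_dir`, for example the
    hypothetical /sys/bus/pci/devices/0000:03:00.0. Returns the VF count."""
    dev = Path(device_dir)
    total = int((dev / "sriov_totalvfs").read_text())
    if num_vms > total:
        raise ValueError(
            f"device supports {total} VFs but {num_vms} VMs were requested; "
            "the Supervisor would have to share the remainder in software"
        )
    numvfs = dev / "sriov_numvfs"
    current = int(numvfs.read_text())
    if current == num_vms:
        return current  # already provisioned
    if current != 0:
        numvfs.write_text("0")  # disable first; a non-zero count can't change in place
    numvfs.write_text(str(num_vms))
    return num_vms
```

For the 64-VM case above, `provision_vfs(path, 64)` succeeds only if the device reports at least 64 in `sriov_totalvfs`.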

Choosing the Right Partners for SR-IOV Success

Many PCI card designers are realizing that the PCI IP they choose is crucial to the success of their SR-IOV implementation. When designers choose PCI IP vendors that understand SR-IOV and design for it from the start, the resulting PCI cards integrate more seamlessly with the host system.

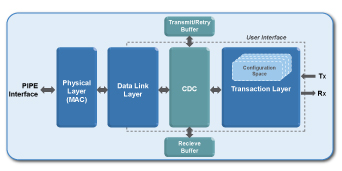

PLDA, a long-time leader in PCIe IP innovation, provides its XpressRICH3® PCIe Gen 3 IP (Figure 6) to many of the leading PCI Express hardware vendors. The IP provides native SR-IOV support and delivers industry-leading specifications that fully enable SR-IOV functionality.

PLDA’s XpressRICH3 PCIe 3.0 IP delivers:

- The ability for each Physical Function (PF) to support up to 64 Virtual Functions (VFs)

- Native support for up to 8 PFs

- Up to 512 functions in total

- VFs share the same configuration access, status and error reporting interface as their PF

- VFs are mapped after PFs, using several bus numbers if necessary

- The application checks tl_rx_bardec to determine which VF is receiving a packet

- Same RTL for ASIC and high-end FPGA applications

- x16, x8, x4, x2, and x1 link widths at Gen3 (8 GT/s) speed

- Backward compatible with Gen2 (5 GT/s) and Gen1 (2.5 GT/s)

Because PLDA is the industry leader in PCI Express IP, with over 2,500 designs in working silicon, its IP also offers assurance of easy integration and first-time-right functionality. In addition, PLDA offers a free evaluation so that customers can try the IP hands-on before purchase, and provides a comprehensive SR-IOV Reference Design that enables quick implementation and reduces time-to-market. To schedule a demo of the XpressRICH3 IP running SR-IOV, in which the IP performs both “Read DMA” and “Write DMA”, visit PLDA at www.plda.com.

Summary

The key benefits of using SR-IOV to achieve virtualization include:

- Enabling efficient sharing of PCIe devices, optimizing performance and capacity

- Creating hundreds of VFs associated with a single PF, extending the capacity of a device and lowering hardware costs

- Dynamic control by the PF through registers designed to turn on the SR-IOV capability, eliminating the need for time-intensive integration

- Increased performance via direct access to hardware from the virtual machine environment
