Stream Processing for Everyone with SQL and Apache Flink

Where did we come from?

With the 0.9.0-milestone1 release, Apache Flink added an API, called the Table API, to process relational data with SQL-like expressions. The central concept of this API is a Table, a structured data set or stream on which relational operations can be applied. The Table API is tightly integrated with the DataSet and DataStream APIs. A Table can easily be created from a DataSet or DataStream and can also be converted back into a DataSet or DataStream, as the following example shows.

Starting with version 0.9, Flink introduced the Table API to support relational operations.

```scala
// package names as of Flink 1.1
import org.apache.flink.api.scala._
import org.apache.flink.api.scala.table._
import org.apache.flink.api.table.{Row, Table, TableEnvironment}

val execEnv = ExecutionEnvironment.getExecutionEnvironment
val tableEnv = TableEnvironment.getTableEnvironment(execEnv)

// obtain a DataSet from somewhere (the source is left open here)
val tempData: DataSet[(String, Long, Double)] = ???

// convert the DataSet to a Table
val tempTable: Table = tempData.toTable(tableEnv, 'location, 'time, 'tempF)

// compute your result
val avgTempCTable: Table = tempTable
  .where('location.like("room%"))
  .select(
    ('time / (3600 * 24)) as 'day,
    'location as 'room,
    (('tempF - 32) * 0.556) as 'tempC
  )
  .groupBy('day, 'room)
  .select('day, 'room, 'tempC.avg as 'avgTempC)

// convert result Table back into a DataSet and print it
avgTempCTable.toDataSet[Row].print()
```

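As you can see, a DataSet can easily be converted into a Table; you only need to specify its schema (the field names). You can then apply all kinds of relational operations to the Table, and finally convert the Table back into a DataSet.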
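To make the semantics of that query concrete, here is a minimal sketch in plain Scala collections (no Flink involved) that computes the same result on an in-memory `Seq`. It assumes `time` is in seconds, as the division by `3600 * 24` suggests, and models `LIKE 'room%'` as a prefix test; `AvgRoomTemp` and `avgTempC` are hypothetical names used only for illustration.

```scala
// Plain Scala, no Flink: mirrors the Table API query step by step.
// Assumption: time is in seconds; LIKE 'room%' is modeled as startsWith("room").
object AvgRoomTemp {
  // (location, time in seconds, temperature in degrees Fahrenheit)
  type Reading = (String, Long, Double)

  def avgTempC(readings: Seq[Reading]): Map[(Long, String), Double] =
    readings
      // where('location.like("room%"))
      .filter { case (location, _, _) => location.startsWith("room") }
      // select(day, room, tempC)
      .map { case (location, time, tempF) => (time / (3600 * 24), location, (tempF - 32) * 0.556) }
      // groupBy('day, 'room)
      .groupBy { case (day, room, _) => (day, room) }
      // select('day, 'room, 'tempC.avg as 'avgTempC)
      .map { case (key, rows) => key -> rows.map(_._3).sum / rows.size }
}
```

For example, `AvgRoomTemp.avgTempC(Seq(("room1", 0L, 50.0), ("room1", 3600L, 68.0), ("hall", 10L, 90.0)))` drops the `hall` reading and averages the two `room1` readings of day 0, giving roughly 15.01 °C for the key `(0, "room1")`.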

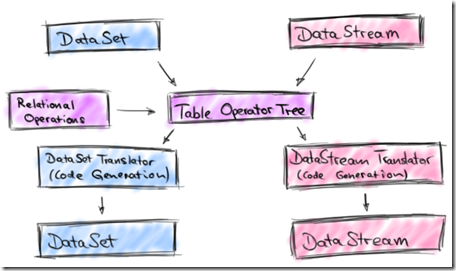

Although the example shows Scala code, there is also an equivalent Java version of the Table API. The following picture depicts the original architecture of the Table API.

对于table的关系型操作,最终通过code generation还是会转换为dataset的逻辑

Table API joining forces with SQL

The community was also well aware of the multitude of dedicated “SQL-on-Hadoop” solutions in the open-source landscape (Apache Hive, Apache Drill, Apache Impala, Apache Tajo, just to name a few).

Given these alternatives, we figured that time would be better spent improving Flink in other ways than implementing yet another SQL-on-Hadoop solution.

What we came up with was a revised architecture for a Table API that supports SQL (and Table API) queries on streaming and static data sources.

We did not want to reinvent the wheel and decided to build the new Table API on top of Apache Calcite, a popular SQL parser and optimizer framework. Apache Calcite is used by many projects, including Apache Hive, Apache Drill, Cascading, and many more. Moreover, the Calcite community put SQL on streams on their roadmap, which makes it a perfect fit for Flink’s SQL interface.

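Although the open-source community already offers many SQL-on-Hadoop solutions, Flink would rather spend its time where it adds more value than build yet another one.

However, the demand for SQL is currently very strong, so SQL support is being built on top of a revised Table API.

SQL support relies on Calcite, which already has SQL on streams on its roadmap and has a chance of becoming the standard for streaming SQL.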

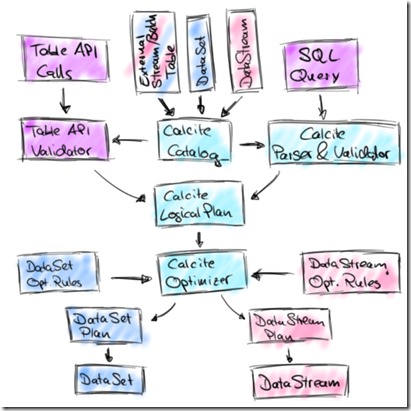

Calcite is central in the new design as the following architecture sketch shows:

The new architecture features two integrated APIs to specify relational queries, the Table API and SQL.

Queries of both APIs are validated against a catalog of registered tables and converted into Calcite’s representation for logical plans.

In this representation, stream and batch queries look exactly the same.

Next, Calcite’s cost-based optimizer applies transformation rules and optimizes the logical plans.

Depending on the nature of the sources (streaming or static) we use different rule sets.

Finally, the optimized plan is translated into a regular Flink DataStream or DataSet program. This step again involves code generation to compile relational expressions into Flink functions.

Here, both Table API and SQL queries are uniformly converted into Calcite logical plans, optimized by the Calcite optimizer, and finally turned into Flink functions through code generation.
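The rule-based optimization step can be illustrated with a toy model. The sketch below is not Calcite’s API: `ToyPlanner`, its plan nodes, and both rewrite rules are hypothetical. It represents a query as a logical plan tree and applies rewrite rules bottom-up until none fires; the caller passes the rule list explicitly, standing in for Flink choosing a streaming or a batch rule set. Calcite’s real optimizer is cost-based and far richer.

```scala
// Toy illustration only: these types and rules are NOT Calcite's API.
object ToyPlanner {
  // A tiny logical plan: the same tree shape describes stream and batch queries.
  sealed trait Plan
  final case class Scan(table: String) extends Plan
  final case class Filter(cond: String, input: Plan) extends Plan
  final case class Project(fields: List[String], input: Plan) extends Plan

  // A rewrite rule matches some plan shapes and returns an equivalent plan.
  type Rule = PartialFunction[Plan, Plan]

  // Hypothetical rule: collapse two stacked filters into one conjunction.
  val mergeFilters: Rule = {
    case Filter(outer, Filter(inner, in)) => Filter(s"$inner AND $outer", in)
  }

  // Hypothetical rule: collapse stacked projections, keeping the outer fields.
  // Valid here only because fields are plain column names and the outer list
  // is assumed to be a subset of the inner one.
  val mergeProjects: Rule = {
    case Project(outer, Project(_, in)) => Project(outer, in)
  }

  // Optimize the inputs first, then rewrite this node until no rule applies.
  // Every rule removes one node, so the recursion terminates.
  def optimize(plan: Plan, rules: Seq[Rule]): Plan = {
    val withOptimizedInputs = plan match {
      case Filter(c, in)  => Filter(c, optimize(in, rules))
      case Project(f, in) => Project(f, optimize(in, rules))
      case s: Scan        => s
    }
    rules.find(_.isDefinedAt(withOptimizedInputs)) match {
      case Some(rule) => optimize(rule(withOptimizedInputs), rules)
      case None       => withOptimizedInputs
    }
  }
}
```

Because stream and batch queries share one logical representation, the same rewrite machinery serves both; only the rule list handed to `optimize` changes.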

With this effort, we are adding SQL support for both streaming and static data to Flink.

However, we do not want to see this as a competing solution to dedicated, high-performance SQL-on-Hadoop solutions, such as Impala, Drill, and Hive.

Instead, we see the sweet spot of Flink’s SQL integration primarily in providing access to streaming analytics to a wider audience.

In addition, it will facilitate integrated applications that use Flink’s APIs as well as SQL while being executed on a single runtime engine.

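To reiterate: SQL support is not meant to create yet another dedicated SQL-on-Hadoop solution, but to make Flink usable by more people. Put plainly, this area is not the current strategic focus.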

What will Flink’s SQL on streams look like?

So far, we have discussed the motivation for, and the architecture of, Flink’s stream SQL interface, but what will it actually look like?

```scala
// get environments
val execEnv = StreamExecutionEnvironment.getExecutionEnvironment
val tableEnv = TableEnvironment.getTableEnvironment(execEnv)

// configure Kafka connection
val kafkaProps = ...

// define a JSON encoded Kafka topic as external table
val sensorSource = new KafkaJsonSource[(String, Long, Double)](
  "sensorTopic",
  kafkaProps,
  ("location", "time", "tempF"))

// register external table
tableEnv.registerTableSource("sensorData", sensorSource)

// define query in external table
val roomSensors: Table = tableEnv.sql(
  "SELECT STREAM time, location AS room, (tempF - 32) * 0.556 AS tempC " +
  "FROM sensorData " +
  "WHERE location LIKE 'room%'"
)

// define a JSON encoded Kafka topic as external sink
val roomSensorSink = new KafkaJsonSink(...)

// define sink for room sensor data and execute query
roomSensors.toSink(roomSensorSink)
execEnv.execute()
```

Compared with the Table API, the same operations can be expressed in pure SQL.
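Selection, filtering, and projection are stateless: each record is handled on its own, so a result can be emitted as soon as a record arrives. The following is a minimal plain-Scala sketch of what the query above computes, assuming an `Iterator` stands in for the unbounded Kafka topic; `RoomSensorStream`, `SensorReading`, and `RoomTemp` are hypothetical names, and no Flink or Kafka APIs are used.

```scala
// Plain Scala sketch: an Iterator stands in for the (possibly unbounded) Kafka topic.
object RoomSensorStream {
  final case class SensorReading(location: String, time: Long, tempF: Double)
  final case class RoomTemp(time: Long, room: String, tempC: Double)

  // Each record is filtered and projected independently; no state is kept.
  def roomSensors(input: Iterator[SensorReading]): Iterator[RoomTemp] =
    input
      // WHERE location LIKE 'room%' (modeled as a prefix test)
      .filter(_.location.startsWith("room"))
      // SELECT STREAM time, location AS room, (tempF - 32) * 0.556 AS tempC
      .map(r => RoomTemp(r.time, r.location, (r.tempF - 32) * 0.556))
}
```

Because `Iterator` is lazy, this also works on an infinite input: taking the first two results reads only as many records as needed to produce them.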

Flink SQL does not support window aggregations yet, but Calcite’s streaming SQL does, for example:

```sql
SELECT STREAM
  TUMBLE_END(time, INTERVAL '1' DAY) AS day,
  location AS room,
  AVG((tempF - 32) * 0.556) AS avgTempC
FROM sensorData
WHERE location LIKE 'room%'
GROUP BY TUMBLE(time, INTERVAL '1' DAY), location
```

The same aggregation could be expressed with the Table API as follows:

```scala
// proposed syntax: windowed aggregates on streams are planned after Flink 1.1
val avgRoomTemp: Table = tableEnv.ingest("sensorData")
  .where('location.like("room%"))
  .partitionBy('location)
  .window(Tumbling every Days(1) on 'time as 'w)
  .select('w.end, 'location, (('tempF - 32) * 0.556).avg as 'avgTempC)
```
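What the tumbling window adds can be seen in a plain-Scala sketch (no Flink): each record is assigned to exactly one fixed-size, non-overlapping window, and the average is computed per (window end, location), mirroring `TUMBLE_END` and `GROUP BY TUMBLE(...), location`. The sketch assumes `time` is a non-negative count of seconds and processes a finite batch, whereas a streaming engine would emit each window’s result once that window is complete; `TumblingAvg` and its methods are hypothetical.

```scala
// Plain Scala sketch of a tumbling-window average; not Flink or Calcite code.
// Assumption: time is a non-negative number of seconds, so one day is 86400.
object TumblingAvg {
  val DaySeconds: Long = 24L * 3600

  // A record at time t falls into the window [start, start + size);
  // this returns the exclusive end, like TUMBLE_END.
  def windowEnd(time: Long, size: Long): Long = time - (time % size) + size

  def avgTempC(readings: Seq[(String, Long, Double)],
               size: Long = DaySeconds): Map[(Long, String), Double] =
    readings
      // WHERE location LIKE 'room%' (modeled as a prefix test)
      .filter(_._1.startsWith("room"))
      // GROUP BY TUMBLE(time, INTERVAL '1' DAY), location
      .groupBy { case (location, time, _) => (windowEnd(time, size), location) }
      // AVG((tempF - 32) * 0.556)
      .map { case (key, rows) =>
        key -> rows.map { case (_, _, tempF) => (tempF - 32) * 0.556 }.sum / rows.size
      }
}
```

Unlike grouping by a computed day column, the window assignment tells a streaming engine when a group is finished: once time passes a window’s end, that window’s average can be emitted.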

What’s up next?

The Flink community is actively working on SQL support for the next minor version Flink 1.1.0. In the first version, SQL (and Table API) queries on streams will be limited to selection, filter, and union operators. Compared to Flink 1.0.0, the revised Table API will support many more scalar functions and be able to read tables from external sources and write them back to external sinks. A lot of work went into reworking the architecture of the Table API and integrating Apache Calcite.

In Flink 1.2.0, the feature set of SQL on streams will be significantly extended. Among other things, we plan to support different types of window aggregates and maybe also streaming joins. For this effort, we want to collaborate closely with the Apache Calcite community and help extend Calcite’s support for relational operations on streaming data when necessary.

Flink 1.2 is expected to bring window aggregates and possibly streaming joins, which is well worth looking forward to.
