[Paper] Selection and replacement algorithm for memory performance improvement in Spark

Summary

Spark lacks a good mechanism for choosing which RDDs to cache in limited memory. --> The paper proposes a selection algorithm that lets Spark automatically choose the RDDs whose partitions are cached in memory, based on how many times each RDD is used. --> This speeds up iterative computations.

Spark uses the least recently used (LRU) replacement algorithm to evict RDDs, which considers only how recently they were used. --> The paper proposes a weight replacement (WR) algorithm, which jointly considers each partition's computation cost, number of uses, and size.

Preliminary Information

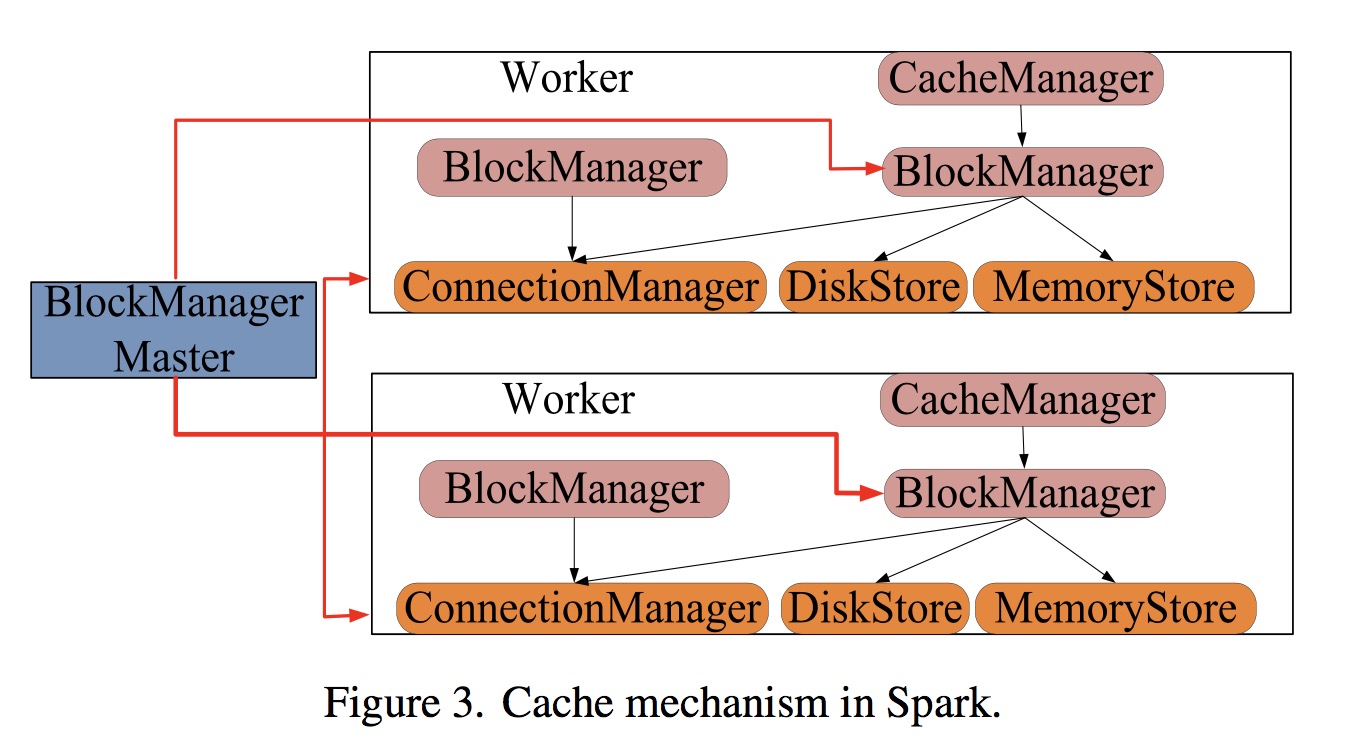

Cache mechanism in Spark

- When RDD partitions have been cached in memory during an iterative computation, an operation that needs those partitions gets them through CacheManager.

- All CacheManager operations, including reading and caching, rely mainly on the BlockManager API. BlockManager decides whether partitions are fetched from memory or from disk.

Scheduling model

- The LRU algorithm considers only whether partitions were recently used and ignores their computation cost and size.

- The number of uses of each partition can be determined from the DAG before tasks run.
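As a rough illustration of reading use counts off a DAG, the Python sketch below treats the DAG as a plain dictionary from each RDD to the RDDs it reads from. The representation, the function name `count_rdd_uses`, and the sample RDD names are assumptions made for this example; they are not Spark's internal DAG structures.

```python
from collections import Counter


def count_rdd_uses(dag):
    """Count how many times each RDD is read as an input in the DAG.

    `dag` maps an RDD name to the list of RDD names it is computed from
    (a hypothetical, simplified lineage representation).
    """
    uses = Counter()
    for parents in dag.values():
        uses.update(parents)
    return dict(uses)


# Two PageRank-style iterations: `links` is read again in every iteration.
dag = {
    "links": [],
    "ranks0": ["links"],
    "contribs1": ["links", "ranks0"],
    "ranks1": ["contribs1"],
    "contribs2": ["links", "ranks1"],
    "ranks2": ["contribs2"],
}
uses = count_rdd_uses(dag)
print(uses)
```

In this toy DAG `links` is read three times while every intermediate RDD is read once, which is the kind of information a use-count-based policy can exploit before any task runs.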

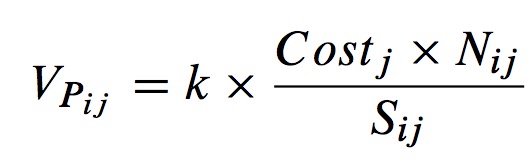

Let Nij be the number of uses of the j-th partition of RDDi.

Let Sij be the size of the j-th partition of RDDi.

- Computation time is also an important factor. --> The starting time STij and finishing time FTij of each partition of RDDi roughly capture its execution and communication time.

- The computation cost of partition Pij is therefore Costij = FTij - STij.

- From these quantities, a scheduling model gives the weight of Pij, which can be expressed as:

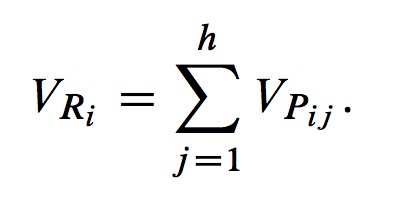

where k is a correction parameter, set to a constant.

- Finally, assuming RDDi has h partitions, the weight of RDDi is:
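The weight formulas themselves appear only in the figures of these notes, so the following Python sketch is an illustration under explicit assumptions rather than the paper's exact definition. It assumes a partition's weight grows with its cost and number of uses and shrinks with its size (`k * cost * uses / size`), and that an RDD's weight is the sum over its h partitions. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Partition:
    start_time: float   # ST_ij
    finish_time: float  # FT_ij
    uses: int           # N_ij
    size: float         # S_ij


def partition_weight(p, k=1.0):
    """ASSUMED form: weight = k * Cost_ij * N_ij / S_ij."""
    cost = p.finish_time - p.start_time  # Cost_ij = FT_ij - ST_ij
    return k * cost * p.uses / p.size


def rdd_weight(partitions, k=1.0):
    """ASSUMED form: an RDD's weight is the sum of its partitions' weights."""
    return sum(partition_weight(p, k) for p in partitions)


p0 = Partition(start_time=0.0, finish_time=4.0, uses=3, size=2.0)
p1 = Partition(start_time=1.0, finish_time=3.0, uses=1, size=4.0)
print(partition_weight(p0), partition_weight(p1), rdd_weight([p0, p1]))
```

Under these assumptions, a partition that is expensive to recompute, reused often, and small gets a high weight and is therefore a poor eviction candidate.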

Proposed Algorithm

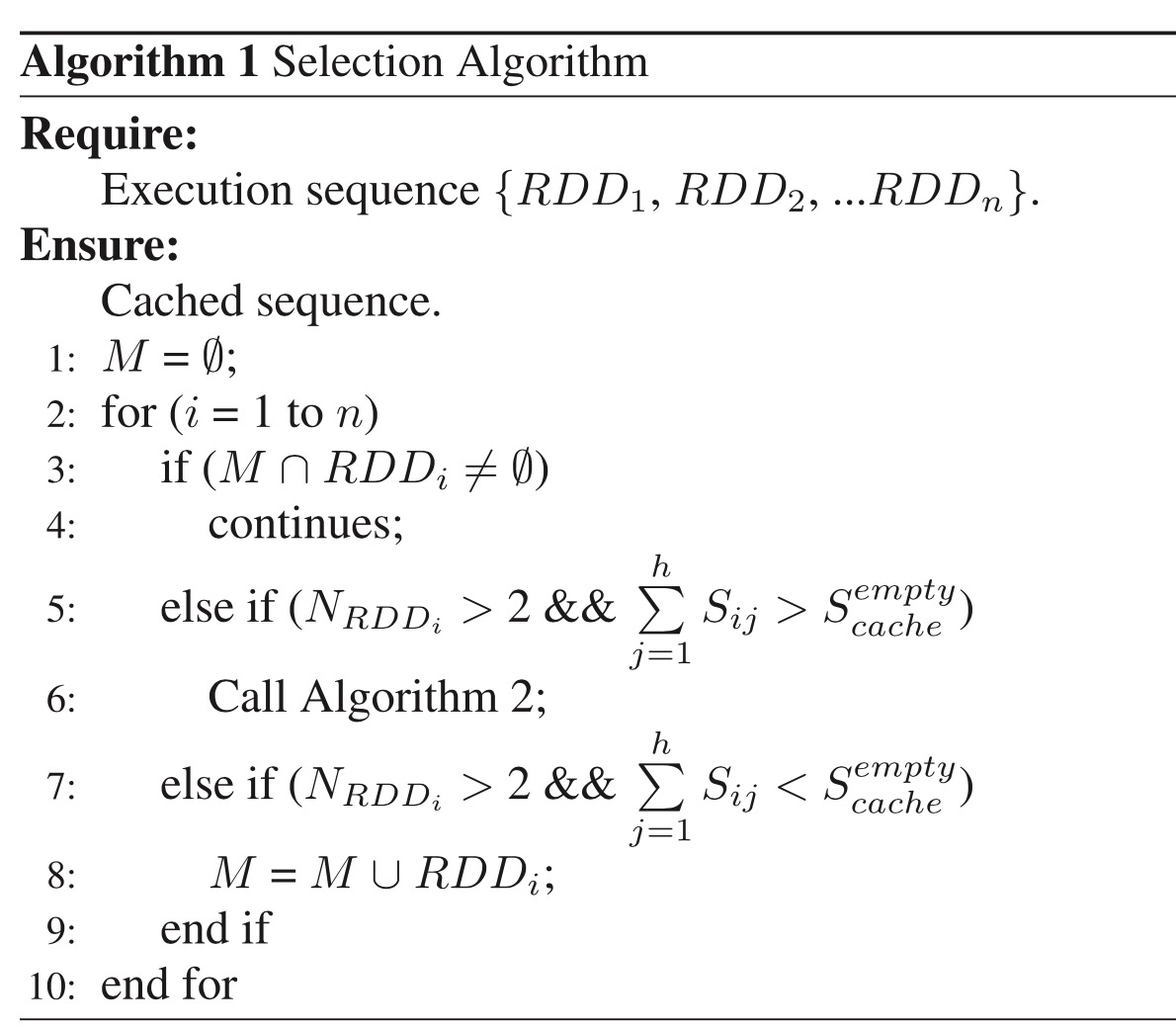

Selection algorithm

- For a given DAG, we can obtain the number of uses of each RDD, denoted NRDDi.

- The pseudocode:

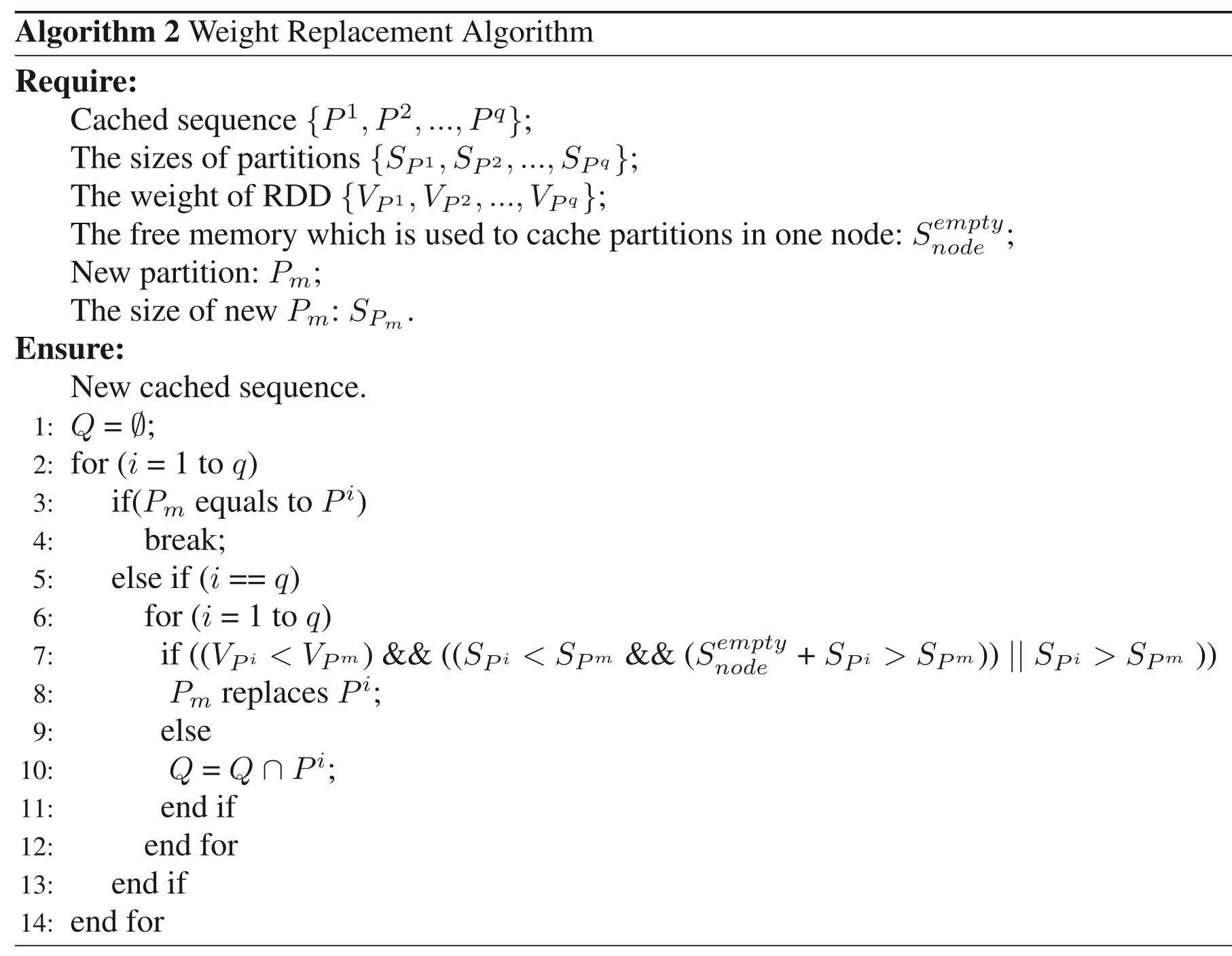
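One plausible reading of a use-count-based selection step is sketched below in Python. It assumes that an RDD is worth caching when it is used at least twice and that candidates are ordered by descending use count. The threshold `min_uses`, the function name, and the sample data are assumptions for illustration, not the paper's pseudocode.

```python
def select_rdds_to_cache(rdd_uses, min_uses=2):
    """Return the names of RDDs used at least `min_uses` times, most-used first.

    `rdd_uses` maps an RDD name to its number of uses (N_RDDi) taken from the DAG.
    """
    candidates = [(name, n) for name, n in rdd_uses.items() if n >= min_uses]
    # Most frequently used RDDs come first; ties keep their input order.
    candidates.sort(key=lambda item: item[1], reverse=True)
    return [name for name, _ in candidates]


selected = select_rdds_to_cache(
    {"links": 3, "ranks0": 1, "contribs1": 1, "lookup": 2}
)
print(selected)
```

RDDs that are read only once gain nothing from caching, so this sketch leaves them out and keeps memory for the reused ones.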

Replacement algorithm

- In this paper, the weight of a partition is used to evaluate its importance.

- When many partitions are cached in memory, they are sorted by weight using the QuickSort algorithm.

- The pseudocode:
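A minimal Python sketch of weight-based eviction follows, assuming partitions are evicted in ascending order of weight until the incoming partition fits. It uses Python's built-in `sorted` instead of a hand-written QuickSort, and the function name, the `(weight, size)` tuple layout, and the sample values are hypothetical.

```python
def choose_victims(cached, needed, free):
    """Pick partitions to evict, lowest weight first, until `needed` space is free.

    `cached` maps a partition id to a (weight, size) tuple.
    Returns the list of ids to evict, or None if even evicting
    everything would not free enough space.
    """
    victims = []
    # Sort cached partitions by weight, least important first.
    for pid, (weight, size) in sorted(cached.items(), key=lambda kv: kv[1][0]):
        if free >= needed:
            break
        victims.append(pid)
        free += size
    return victims if free >= needed else None


cached = {
    "rdd1_p0": (6.0, 2.0),
    "rdd1_p1": (0.5, 4.0),
    "rdd2_p0": (2.0, 1.0),
}
victims = choose_victims(cached, needed=5.0, free=0.5)
print(victims)
```

Unlike LRU, the order of eviction here does not depend on when a partition was last touched, only on its weight.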

Experiments

- Five servers hosting six virtual machines; each VM has a 100 GB disk and a 2.5 GHz CPU and runs Ubuntu 12.04. Memory is varied across runs: 1 GB, 2 GB, or 4 GB.

- Hadoop 2.10.4 and Spark-1.1.0.

- Ganglia is used to observe memory usage.

- The PageRank algorithm is used for the experiments because it is iterative.
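To show why PageRank is a natural benchmark for caching, here is a small pure-Python version (not the Spark job used in the paper). The graph, the damping factor 0.85, and the iteration count are assumptions chosen for illustration. Note that `links` is read again in every iteration, which mirrors the RDD reuse pattern that caching targets.

```python
def pagerank(links, iterations=10, damping=0.85):
    """Iterative PageRank on a dict mapping each page to its outgoing links."""
    pages = list(links)
    ranks = {p: 1.0 for p in pages}
    for _ in range(iterations):
        contribs = {p: 0.0 for p in pages}
        # `links` is re-read on every pass; in Spark this is the reused RDD.
        for page, outs in links.items():
            for dest in outs:
                contribs[dest] += ranks[page] / len(outs)
        ranks = {p: (1 - damping) + damping * contribs[p] for p in pages}
    return ranks


graph = {"a": ["b", "c"], "b": ["c"], "c": ["a"]}
ranks = pagerank(graph)
print(ranks)
```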
