Auto-scaling scikit-learn with Apache Spark

Source: https://databricks.com/blog/2016/02/08/auto-scaling-scikit-learn-with-apache-spark.html

Data scientists often spend hours or days tuning models to get the highest accuracy. This tuning typically involves running a large number of independent Machine Learning (ML) tasks coded in Python or R. Following some work presented at Spark Summit Europe 2015, we are excited to release a scikit-learn integration package for Apache Spark that dramatically simplifies the life of data scientists using Python. This package automatically distributes the most repetitive tasks of model tuning on a Spark cluster, without impacting the workflow of data scientists:

- When used on a single machine, Spark can serve as a substitute for the default multithreading framework used by scikit-learn (Joblib).

- If the need arises to spread the work across multiple machines, no code change is required between the single-machine case and the cluster case.

Scale data science effortlessly

Python is one of the most popular programming languages for data exploration and data science, in no small part thanks to high-quality libraries such as pandas for data exploration and scikit-learn for machine learning. Scikit-learn provides fast and robust implementations of standard ML algorithms such as clustering, classification, and regression.

Scikit-learn’s strength has typically been in the realm of computing on a single node, though. For some common scenarios, such as parameter tuning, a large number of small tasks can be run in parallel. These scenarios are perfect use cases for Spark.
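The pattern can be sketched with nothing but Python's standard library. The example below is an illustration, not part of the package: `evaluate` is a hypothetical stand-in for fitting and scoring one model, and a local thread pool plays the role that Joblib or a Spark cluster would play in practice.

```python
from concurrent.futures import ThreadPoolExecutor


def evaluate(setting):
    """Hypothetical stand-in for training and scoring one model.

    Returns a made-up score that peaks at max_depth == 5 so the
    example has a well-defined best setting.
    """
    return -abs(setting["max_depth"] - 5)


# Each setting is an independent task: no task needs another's result.
settings = [{"max_depth": d} for d in range(1, 11)]

# Run the tasks on a small pool of workers; map() preserves input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    scores = list(pool.map(evaluate, settings))

# Pick the setting with the highest score.
best_score, best_setting = max(zip(scores, settings), key=lambda pair: pair[0])
print(best_setting, best_score)
```

Because the tasks share no state, the same loop could be handed to any executor, local or distributed, without changing `evaluate`.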

We explored how to integrate Spark with scikit-learn, and the result is the Scikit-learn integration package for Spark. It combines the strengths of Spark and scikit-learn with no changes to users’ code. It re-implements some components of scikit-learn that benefit the most from distributed computing. Users will find a Spark-based cross-validator class that is fully compatible with scikit-learn’s cross-validation tools. By swapping out a single class import, users can distribute cross-validation for their existing scikit-learn workflows.

Distribute tuning of Random Forests

Consider a classical example of identifying digits in images. Here are a few examples of images taken from the popular digits dataset, with their labels:

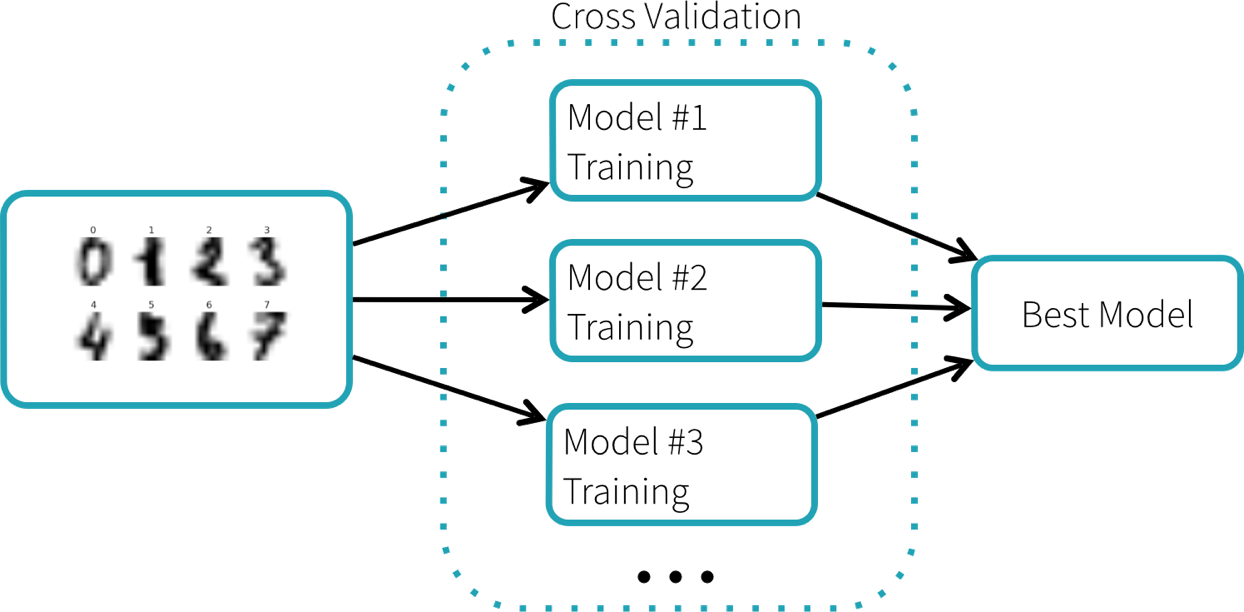

We are going to train a random forest classifier to recognize the digits. This classifier has a number of parameters to adjust, and there is no easy way to know which parameters work best, other than trying out many different combinations. Scikit-learn provides GridSearchCV, a search algorithm that explores many parameter settings automatically. GridSearchCV uses selection by cross-validation, illustrated below. Each parameter setting produces one model, and the best-performing model is selected.

The original code, using only scikit-learn, is as follows:

from sklearn import grid_search, datasets

from sklearn.ensemble import RandomForestClassifier

from sklearn.grid_search import GridSearchCV

digits = datasets.load_digits()

X, y = digits.data, digits.target

param_grid = {"max_depth": [3, None],

"max_features": [1, 3, 10],

"min_samples_split": [1, 3, 10],

"min_samples_leaf": [1, 3, 10],

"bootstrap": [True, False],

"criterion": ["gini", "entropy"],

"n_estimators": [10, 20, 40, 80]}

gs = grid_search.GridSearchCV(RandomForestClassifier(), param_grid=param_grid)

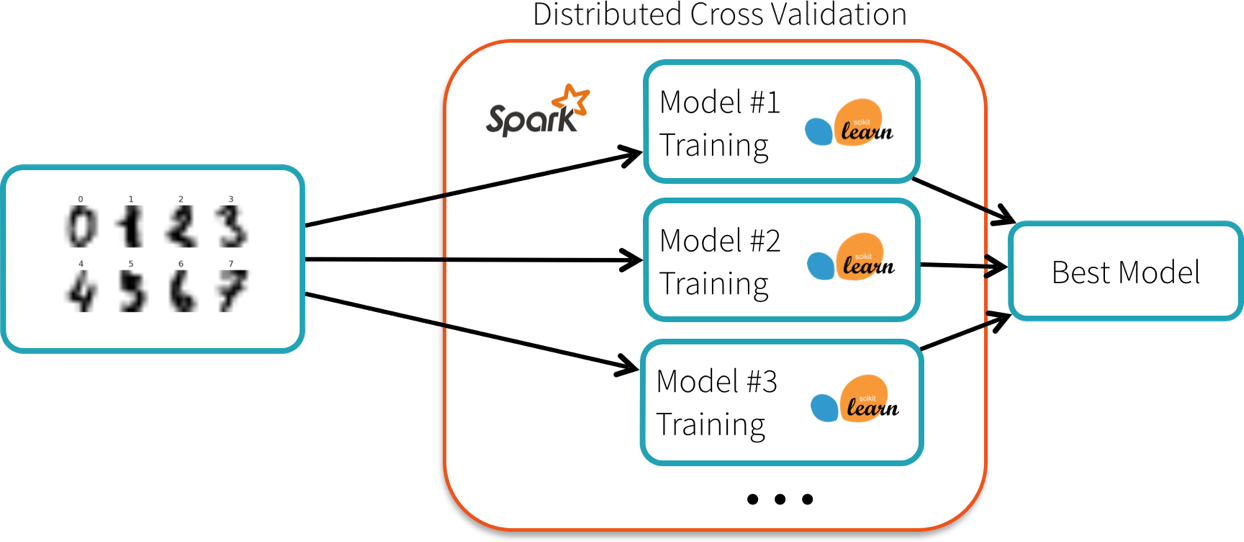

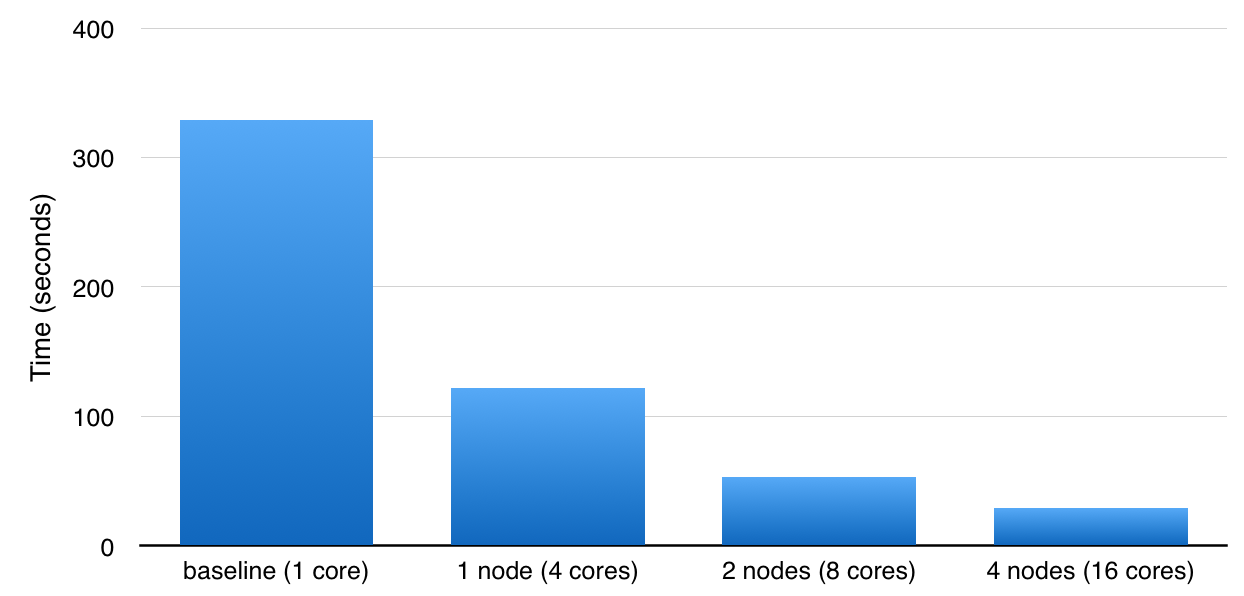

gs.fit(X, y)The dataset is small (in the hundreds of kilobytes), but exploring all the combinations takes about 5 minutes on a single core. The scikit-learn package for Spark provides an alternative implementation of the cross-validation algorithm that distributes the workload on a Spark cluster. Each node runs the training algorithm using a local copy of the scikit-learn library, and reports the best model back to the master:
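To get a feel for why the search takes minutes even on a small dataset, the sketch below counts the work implied by the parameter grid above, using only the standard library. The fold count `n_folds = 3` is an assumption (it was the GridSearchCV default in scikit-learn releases of that period), and the round-robin split across 16 workers only illustrates how independent fits can be divided; it is not a description of how the Spark package schedules them.

```python
from itertools import product

param_grid = {"max_depth": [3, None],
              "max_features": [1, 3, 10],
              "min_samples_split": [1, 3, 10],
              "min_samples_leaf": [1, 3, 10],
              "bootstrap": [True, False],
              "criterion": ["gini", "entropy"],
              "n_estimators": [10, 20, 40, 80]}

# Every combination of values is one candidate parameter setting.
names = list(param_grid)
combinations = [dict(zip(names, values))
                for values in product(*(param_grid[n] for n in names))]

n_folds = 3  # assumption: 3-fold cross-validation
# Each (setting, fold) pair is one independent model fit.
tasks = [(setting, fold) for setting in combinations for fold in range(n_folds)]

n_workers = 16  # illustrative worker count
# Round-robin split: worker i takes tasks i, i + 16, i + 32, ...
per_worker = [tasks[i::n_workers] for i in range(n_workers)]

print(len(combinations), "parameter settings")
print(len(tasks), "model fits in total")
print(len(per_worker[0]), "fits on the first worker")
```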

The code is the same as before, except that GridSearchCV now comes from spark_sklearn and receives the SparkContext as its first argument:

```python
from sklearn import datasets
from sklearn.ensemble import RandomForestClassifier
# Use spark_sklearn's grid search instead:
from spark_sklearn import GridSearchCV

digits = datasets.load_digits()
X, y = digits.data, digits.target

param_grid = {"max_depth": [3, None],
              "max_features": [1, 3, 10],
              "min_samples_split": [1, 3, 10],
              "min_samples_leaf": [1, 3, 10],
              "bootstrap": [True, False],
              "criterion": ["gini", "entropy"],
              "n_estimators": [10, 20, 40, 80]}

# `sc` is assumed to be an existing SparkContext, as predefined in the
# PySpark shell or in a Databricks notebook.
gs = GridSearchCV(sc, RandomForestClassifier(), param_grid=param_grid)
gs.fit(X, y)
```

This example runs in under 30 seconds on a 4-node cluster (which has 16 CPUs). For larger datasets and more parameter settings, the difference is even more dramatic.

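As a rough check on these figures, the quoted timings can be turned into a speedup estimate. The sketch below takes "about 5 minutes" as 300 seconds and "under 30 seconds" as an upper bound of 30 seconds; both roundings are assumptions, so the results are lower bounds rather than measurements.

```python
single_core_seconds = 5 * 60   # "about 5 minutes" on a single core
cluster_seconds = 30           # "under 30 seconds", taken as an upper bound
n_cpus = 16                    # 4-node cluster with 16 CPUs

# Speedup is the ratio of the two running times.
speedup = single_core_seconds / cluster_seconds

# Parallel efficiency is the speedup divided by the number of CPUs.
efficiency = speedup / n_cpus

print(f"speedup of at least {speedup:.0f}x")
print(f"parallel efficiency of at least {efficiency:.1%}")
```
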
Get started

If you would like to try out this package yourself, it is available as a Spark package and as a PyPI library. To get started, check out this example notebook on Databricks.

In addition to distributing ML tasks in Python across a cluster, the scikit-learn integration package for Spark provides additional tools to export data from Spark to Python and vice versa. You can find methods to convert Spark DataFrames to pandas DataFrames and NumPy arrays. More details can be found in this Spark Summit Europe presentation and in the API documentation.
