Hadoop 2.7.1 High-Availability Installation and Deployment

0. System environment setup

1. Synchronize machine time

yum install -y ntp         # install the time service
ntpdate us.pool.ntp.org    # synchronize the time

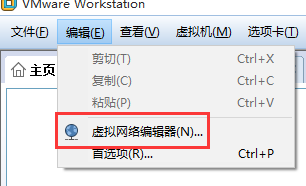

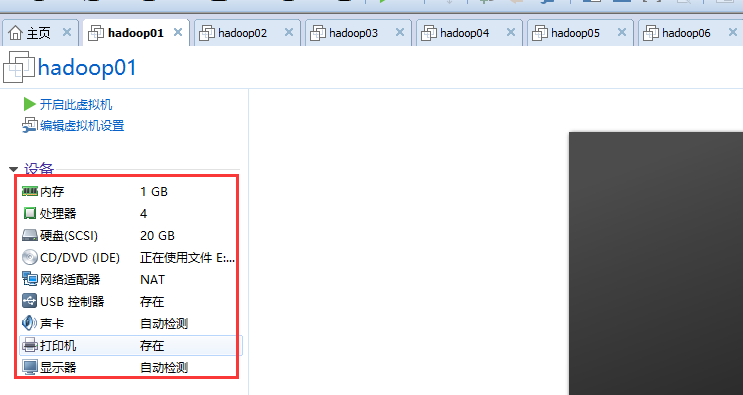

2. Configure the IP address

vi /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0
IPADDR=192.168.8.101
NETMASK=255.255.255.0
GATEWAY=192.168.8.2
HWADDR=00:0C:29:56:63:A1
TYPE=Ethernet
UUID=ecb7f947-8a93-488c-a118-ffb011421cac
ONBOOT=yes
NM_CONTROLLED=yes
BOOTPROTO=none

service network restart

ifconfig eth0

eth0      Link encap:Ethernet  HWaddr 00:0C:29:6C:20:2B
          inet addr:192.168.8.101  Bcast:192.168.8.255  Mask:255.255.255.0
          inet6 addr: fe80::20c:29ff:fe6c:202b/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:777 errors:0 dropped:0 overruns:0 frame:0
          TX packets:316 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:70611 (68.9 KiB)  TX bytes:49955 (48.7 KiB)

rm -f /etc/udev/rules.d/70-persistent-net.rules

vim /etc/sysconfig/network-scripts/ifcfg-eth0

Step 3: Restart the server

reboot

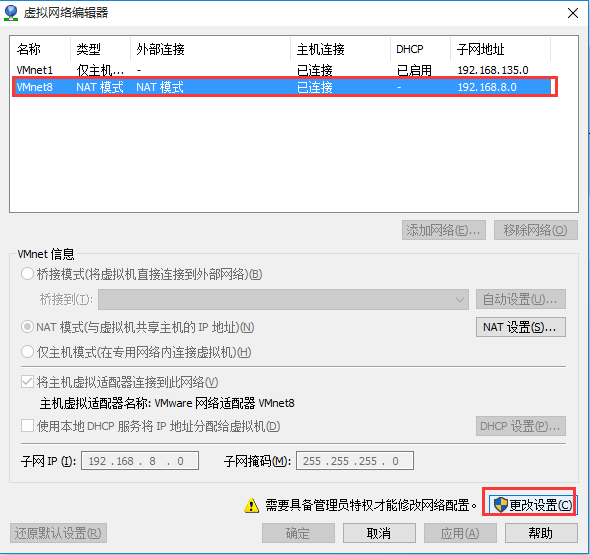

3. Set the hostname

vi /etc/sysconfig/network

NETWORKING=yes
HOSTNAME=hadoop01
NETWORKING_IPV6=no

vi /etc/hosts

127.0.0.1 localhost
192.168.8.101 hadoop01
192.168.8.102 hadoop02
192.168.8.103 hadoop03
192.168.8.104 hadoop04
192.168.8.105 hadoop05
192.168.8.106 hadoop06
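
Because the six entries follow a regular pattern, they can also be generated rather than typed on each machine. The following is a minimal sketch, assuming node N has the address 192.168.8.(100+N) and the hostname hadoop0N as in the listing above; `gen_hosts` is a hypothetical helper name, not an existing tool.

```shell
#!/bin/sh
# Print one "IP hostname" line per cluster node.
# Assumption: node N (1..6) is 192.168.8.(100+N) with hostname hadoop0N.
# gen_hosts is a hypothetical helper name.
gen_hosts() {
    i=1
    while [ "$i" -le 6 ]; do
        printf '192.168.8.%d hadoop%02d\n' "$((100 + i))" "$i"
        i=$((i + 1))
    done
}

# Print the entries for review.
gen_hosts
# Appending them to the real file requires root:
#   gen_hosts >> /etc/hosts
```

Reviewing the printed lines before appending them avoids duplicate entries in /etc/hosts.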

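Disable IPv6

1. Check whether IPv6 is enabled

a) Check the network interface properties

Command: ifconfig

Note: a line containing "inet6 addr: ..." means IPv6 is enabled.

b) Check the loaded kernel modules

Command: lsmod | grep ipv6

2. How to disable IPv6

Append the following to the end of /etc/modprobe.d/dist.conf:

alias net-pf-10 off
alias ipv6 off

You can do this with an editor such as vi, or with the following command: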

cat <<EOF >>/etc/modprobe.d/dist.conf
alias net-pf-10 off
alias ipv6 off
EOF
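
Running the cat command a second time appends the two alias lines again. A minimal sketch of an idempotent alternative follows; `append_once` is a hypothetical helper name, and the sketch assumes a grep that supports the -q, -x and -F options.

```shell
#!/bin/sh
# append_once FILE LINE: append LINE to FILE unless an identical line exists.
# append_once is a hypothetical helper name, not part of any package.
append_once() {
    file=$1
    line=$2
    # -x matches whole lines, -F treats the pattern as a fixed string,
    # -q suppresses output; "--" protects lines that start with a dash.
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage (as root):
#   append_once /etc/modprobe.d/dist.conf 'alias net-pf-10 off'
#   append_once /etc/modprobe.d/dist.conf 'alias ipv6 off'
```

Calling the helper repeatedly leaves a single copy of each line in the file.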

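Disable the firewall

service iptables stop     # stop the firewall now
chkconfig iptables off    # keep it disabled after a reboot

After making these changes, restart the server: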

reboot

4. Install the JDK

vi /etc/profile

export JAVA_HOME=/soft/jdk1.7.0_80/
export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH

source /etc/profile

5. Passwordless SSH login

cd /root/.ssh
ssh-keygen -t rsa    # press Enter four times

id_rsa id_rsa.pub

ssh-copy-id -i hadoop01
ssh-copy-id -i hadoop02
ssh-copy-id -i hadoop03
ssh-copy-id -i hadoop04
ssh-copy-id -i hadoop05
ssh-copy-id -i hadoop06

[root@hadoop01 .ssh]# ssh hadoop05
Last login: Tue Nov 10 17:43:41 2015 from 192.168.8.1
[root@hadoop05 ~]#

ssh-keygen -t rsa    # press Enter four times

ssh-copy-id -i hadoop01
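
The six ssh-copy-id calls differ only in the hostname, so they can be produced by a loop. The sketch below only prints the commands and opens no connection; it assumes the hostnames hadoop01 to hadoop06 from /etc/hosts, and `copy_key_cmds` is a hypothetical helper name.

```shell
#!/bin/sh
# Print the ssh-copy-id command for every node in the cluster.
# Assumption: the nodes are hadoop01..hadoop06, as listed in /etc/hosts.
# copy_key_cmds is a hypothetical helper; it prints and never connects.
copy_key_cmds() {
    for host in hadoop01 hadoop02 hadoop03 hadoop04 hadoop05 hadoop06; do
        printf 'ssh-copy-id -i %s\n' "$host"
    done
}

# Dry run: show what would be executed.
copy_key_cmds
```

After reviewing the output, run the printed lines one by one; each asks for the remote root password once.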

6. Install ZooKeeper

tar -zxvf zookeeper-3.4.6.tar.gz -C /soft

mv zoo_sample.cfg zoo.cfg

vi zoo.cfg

[root@hadoop04 conf]# vi zoo.cfg
# The number of milliseconds of each tick
tickTime=2000
# The number of ticks that the initial
# synchronization phase can take
initLimit=10
# The number of ticks that can pass between
# sending a request and getting an acknowledgement
syncLimit=5
# the directory where the snapshot is stored.
# do not use /tmp for storage, /tmp here is just
# example sakes.
dataDir=/soft/zookeeper-3.4.6/data
# the port at which the clients will connect
clientPort=2181
# the maximum number of client connections.
# increase this if you need to handle more clients
#maxClientCnxns=60
#
# Be sure to read the maintenance section of the
# administrator guide before turning on autopurge.
#
# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance
#
# The number of snapshots to retain in dataDir
#autopurge.snapRetainCount=3
# Purge task interval in hours
# Set to "0" to disable auto purge feature
#autopurge.purgeInterval=1
server.1=192.168.8.104:2888:3888
server.2=192.168.8.105:2888:3888
server.3=192.168.8.106:2888:3888

vi myid
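
ZooKeeper reads the myid file from dataDir, and the file must contain only the number N of the server.N line that describes the local machine. A minimal sketch that derives this number from zoo.cfg follows; `myid_for` is a hypothetical helper name, and the paths in the usage comment assume the dataDir shown in zoo.cfg.

```shell
#!/bin/sh
# myid_for CFG ADDRESS: print N for the line "server.N=ADDRESS:..." in CFG.
# myid_for is a hypothetical helper name.
myid_for() {
    cfg=$1
    addr=$2
    # Split on "=" and ":" so that $1 is "server.N" and $2 is the address.
    awk -F'[=:]' -v a="$addr" '
        $1 ~ /^server\.[0-9]+$/ && $2 == a { sub(/^server\./, "", $1); print $1 }
    ' "$cfg"
}

# Usage on hadoop04 (192.168.8.104):
#   mkdir -p /soft/zookeeper-3.4.6/data
#   myid_for /soft/zookeeper-3.4.6/conf/zoo.cfg 192.168.8.104 \
#       > /soft/zookeeper-3.4.6/data/myid
```

The helper prints nothing when the address is not listed, so an empty myid file signals a wrong address or a missing server line.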

bin/zkServer.sh start     # start the server
bin/zkServer.sh status    # check its status

[root@hadoop04 zookeeper-3.4.6]# bin/zkServer.sh status
JMX enabled by default
Using config: /soft/zookeeper-3.4.6/bin/../conf/zoo.cfg
Mode: leader

[root@hadoop05 zookeeper-3.4.6]# bin/zkServer.sh status
JMX enabled by default
Using config: /soft/zookeeper-3.4.6/bin/../conf/zoo.cfg
Mode: follower

[root@hadoop06 zookeeper-3.4.6]# bin/zkServer.sh status
JMX enabled by default
Using config: /soft/zookeeper-3.4.6/bin/../conf/zoo.cfg
Mode: follower

bin/zkServer.sh stop

[root@hadoop04 zookeeper-3.4.6]# bin/zkServer.sh status
JMX enabled by default
Using config: /soft/zookeeper-3.4.6/bin/../conf/zoo.cfg
Error contacting service. It is probably not running.
[root@hadoop04 zookeeper-3.4.6]#

[root@hadoop05 zookeeper-3.4.6]# bin/zkServer.sh status
JMX enabled by default
Using config: /soft/zookeeper-3.4.6/bin/../conf/zoo.cfg
Mode: follower

[root@hadoop06 zookeeper-3.4.6]# bin/zkServer.sh status
JMX enabled by default
Using config: /soft/zookeeper-3.4.6/bin/../conf/zoo.cfg
Mode: leader
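
The outputs above show that after the leader on hadoop04 is stopped, one of the remaining nodes (here hadoop06) becomes the leader. When checking this repeatedly, only the Mode line matters. A minimal sketch that extracts it follows; `zk_mode` is a hypothetical helper name.

```shell
#!/bin/sh
# zk_mode: read "zkServer.sh status" output on stdin and print the mode
# (for example leader or follower). Prints nothing if there is no
# "Mode:" line, which is the case when the server is not running.
# zk_mode is a hypothetical helper name.
zk_mode() {
    sed -n 's/^Mode: *//p'
}

# Usage from the ZooKeeper directory on any of the three nodes:
#   bin/zkServer.sh status 2>&1 | zk_mode
```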

7. Install Hadoop

[root@hadoop01 hadoop-2.7.1]# ls
bin etc include journal lib libexec LICENSE.txt logs NOTICE.txt README.txt sbin share

7.1. Add the Hadoop directory to the environment variables

export JAVA_HOME=/soft/jdk1.7.0_80/
export HADOOP_HOME=/soft/hadoop-2.7.1
export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin

source /etc/profile

[root@hadoop01 hadoop-2.7.1]# which hadoop
/soft/hadoop-2.7.1/bin/hadoop

7.2. Configure hadoop-env.sh

vim hadoop-env.sh

export JAVA_HOME=/soft/jdk1.7.0_80/

7.3. Configure core-site.xml

<configuration>
    <!-- Set the HDFS nameservice to ns1 -->
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://ns1</value>
    </property>
    <!-- Hadoop temporary directory -->
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/soft/hadoop-2.7.1/tmp</value>
    </property>
    <!-- ZooKeeper quorum addresses -->
    <property>
        <name>ha.zookeeper.quorum</name>
        <value>hadoop04:2181,hadoop05:2181,hadoop06:2181</value>
    </property>
</configuration>

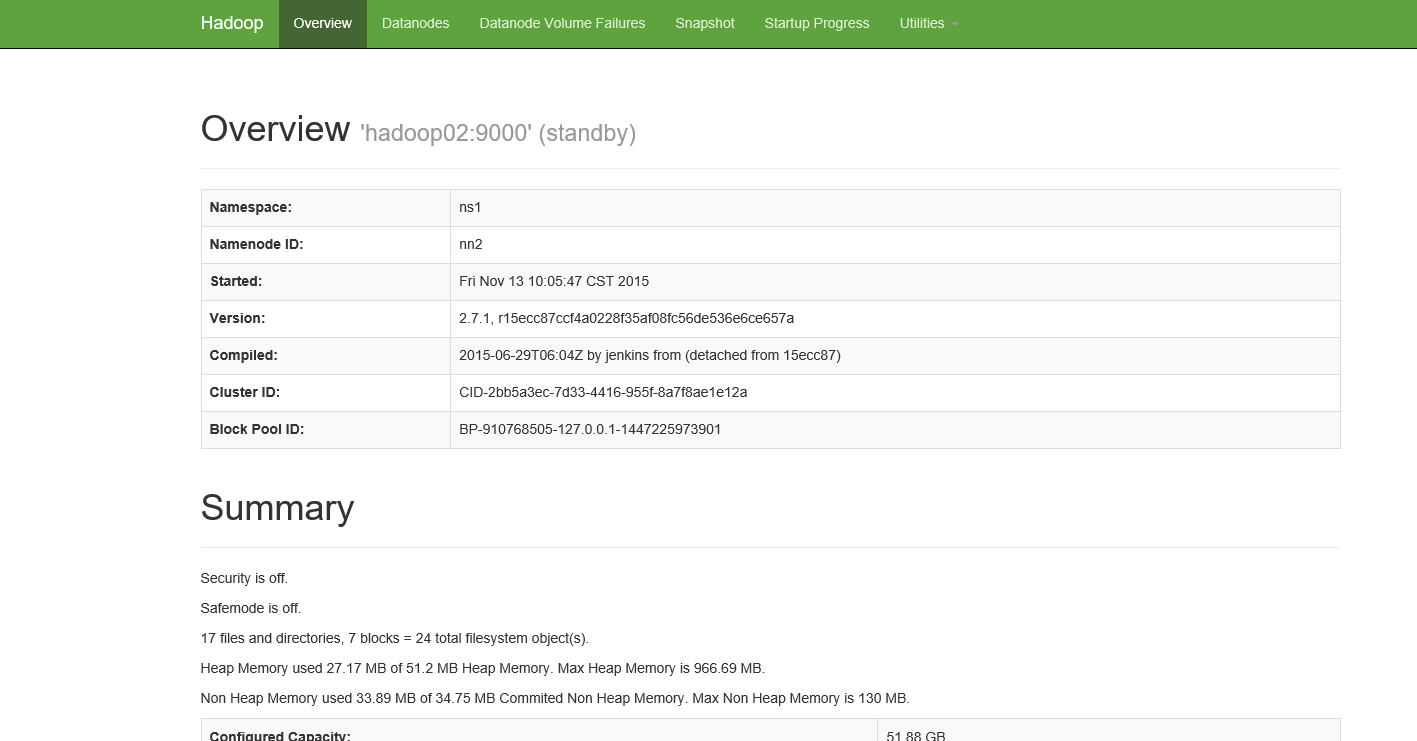

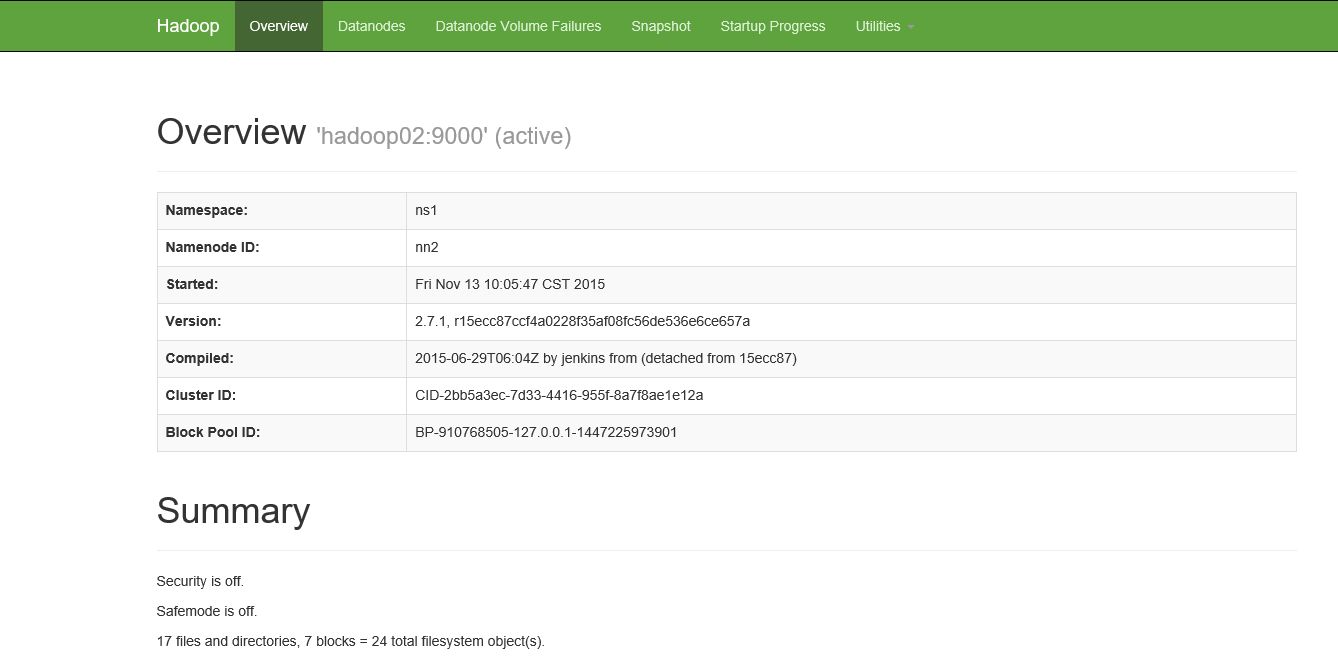

7.4. Configure hdfs-site.xml

<configuration>
    <!-- The HDFS nameservice is ns1; it must match the value in core-site.xml -->
    <property>
        <name>dfs.nameservices</name>
        <value>ns1</value>
    </property>
    <!-- ns1 has two NameNodes: nn1 and nn2 -->
    <property>
        <name>dfs.ha.namenodes.ns1</name>
        <value>nn1,nn2</value>
    </property>
    <!-- RPC address of nn1 -->
    <property>
        <name>dfs.namenode.rpc-address.ns1.nn1</name>
        <value>hadoop01:9000</value>
    </property>
    <!-- HTTP address of nn1 -->
    <property>
        <name>dfs.namenode.http-address.ns1.nn1</name>
        <value>hadoop01:50070</value>
    </property>
    <!-- RPC address of nn2 -->
    <property>
        <name>dfs.namenode.rpc-address.ns1.nn2</name>
        <value>hadoop02:9000</value>
    </property>
    <!-- HTTP address of nn2 -->
    <property>
        <name>dfs.namenode.http-address.ns1.nn2</name>
        <value>hadoop02:50070</value>
    </property>
    <!-- Where the NameNode metadata (shared edits) is stored on the JournalNodes -->
    <property>
        <name>dfs.namenode.shared.edits.dir</name>
        <value>qjournal://hadoop04:8485;hadoop05:8485;hadoop06:8485/ns1</value>
    </property>
    <!-- Local directory where each JournalNode stores its data -->
    <property>
        <name>dfs.journalnode.edits.dir</name>
        <value>/soft/hadoop-2.7.1/journal</value>
    </property>
    <!-- Enable automatic NameNode failover -->
    <property>
        <name>dfs.ha.automatic-failover.enabled</name>
        <value>true</value>
    </property>
    <!-- Implementation class used for automatic failover -->
    <property>
        <name>dfs.client.failover.proxy.provider.ns1</name>
        <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
    </property>
    <!-- Fencing method -->
    <property>
        <name>dfs.ha.fencing.methods</name>
        <value>sshfence</value>
    </property>
    <!-- sshfence requires passwordless SSH; this is the private key it uses -->
    <property>
        <name>dfs.ha.fencing.ssh.private-key-files</name>
        <value>/root/.ssh/id_rsa</value>
    </property>
</configuration>
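
The nameservice in dfs.nameservices has to agree with fs.defaultFS in core-site.xml, and a mismatch is easy to miss by eye. The sketch below reads a single property from a Hadoop-style XML file so the two values can be compared. It works on the text level and is not a real XML parser: it assumes each value element directly follows its name element. `get_prop` is a hypothetical helper name.

```shell
#!/bin/sh
# get_prop FILE NAME: print the <value> that follows <name>NAME</name>.
# Text-level sketch, not an XML parser. Assumptions: the <value> element
# directly follows its <name> (whitespace allowed), and NAME contains no
# regex-special characters other than ".".
# get_prop is a hypothetical helper name.
get_prop() {
    file=$1
    name=$2
    # Join the file into a single line so <name> and <value> may sit on
    # separate lines, then capture the text between the <value> tags.
    { tr -d '\n' < "$file"; echo; } |
        sed -n "s|.*<name>$name</name>[[:space:]]*<value>\([^<]*\)</value>.*|\1|p"
}

# Usage from the Hadoop configuration directory:
#   ns=$(get_prop hdfs-site.xml dfs.nameservices)
#   fs=$(get_prop core-site.xml fs.defaultFS)
#   [ "$fs" = "hdfs://$ns" ] && echo "nameservice matches" || echo "mismatch: $fs vs $ns"
```

On a node where Hadoop is already installed, hdfs getconf -confKey dfs.nameservices reports the value that Hadoop itself resolves.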

7.4. Configure the DataNode list file slaves

vi slaves

hadoop04
hadoop05
hadoop06

7.5. Configure the MapReduce file mapred-site.xml

mv mapred-site.xml.template mapred-site.xml

<configuration>
    <!-- Run MapReduce on YARN -->
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>

7.6. Configure yarn-site.xml

<configuration>
    <!-- ResourceManager host -->
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>hadoop03</value>
    </property>
    <!-- Auxiliary service loaded by each NodeManager: the MapReduce shuffle server -->
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
</configuration>

scp -r hadoop-2.7.1 hadoop02:/soft/
scp -r hadoop-2.7.1 hadoop03:/soft/
scp -r hadoop-2.7.1 hadoop04:/soft/
scp -r hadoop-2.7.1 hadoop05:/soft/
scp -r hadoop-2.7.1 hadoop06:/soft/

7.7. Start the ZooKeeper service

[root@hadoop04 zookeeper-3.4.6]# bin/zkServer.sh start
JMX enabled by default
Using config: /soft/zookeeper-3.4.6/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED

7.8. Start the JournalNodes from hadoop01

[root@hadoop01 hadoop-2.7.1]# sbin/hadoop-daemons.sh start journalnode

[root@hadoop04 zookeeper-3.4.6]# jps
1532 JournalNode
1796 Jps
1470 QuorumPeerMain

7.9. Format HDFS on hadoop01

hadoop namenode -format

scp -r tmp/ hadoop02:/soft/hadoop-2.7.1/

7.10. Format ZK on hadoop01

hdfs zkfc -formatZK

7.11. Start HDFS on hadoop01

sbin/start-dfs.sh

7.12. Start YARN on hadoop01

sbin/start-yarn.sh

8. Test the Hadoop cluster

192.168.8.101 hadoop01
192.168.8.102 hadoop02
192.168.8.103 hadoop03
192.168.8.104 hadoop04
192.168.8.105 hadoop05
192.168.8.106 hadoop06

[root@hadoop01 hadoop-2.7.1]# jps
1614 NameNode
2500 Jps
1929 DFSZKFailoverController
[root@hadoop01 hadoop-2.7.1]# kill -9 1614
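
Killing the NameNode process is how failover is triggered here, and the PID changes on every start. A minimal sketch that looks the PID up from jps output instead of copying it by hand follows; `pid_of` is a hypothetical helper name.

```shell
#!/bin/sh
# pid_of NAME: read jps output ("PID ClassName" per line) on stdin and
# print the PID of the process whose class name equals NAME.
# pid_of is a hypothetical helper name.
pid_of() {
    awk -v n="$1" '$2 == n { print $1 }'
}

# Usage on the host running the active NameNode:
#   kill -9 "$(jps | pid_of NameNode)"
# Then check the state of the other NameNode (nn2 is the ID from hdfs-site.xml):
#   hdfs haadmin -getServiceState nn2
```

If automatic failover works, the second command should report active for the surviving NameNode shortly after the kill.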
