[转帖]如何理解 kernel.pid_max & kernel.threads-max & vm.max_map_count

https://www.cnblogs.com/apink/p/15728381.html

背景说明

运行环境信息,Kubernetes + docker 、应用系统java程序

问题描述

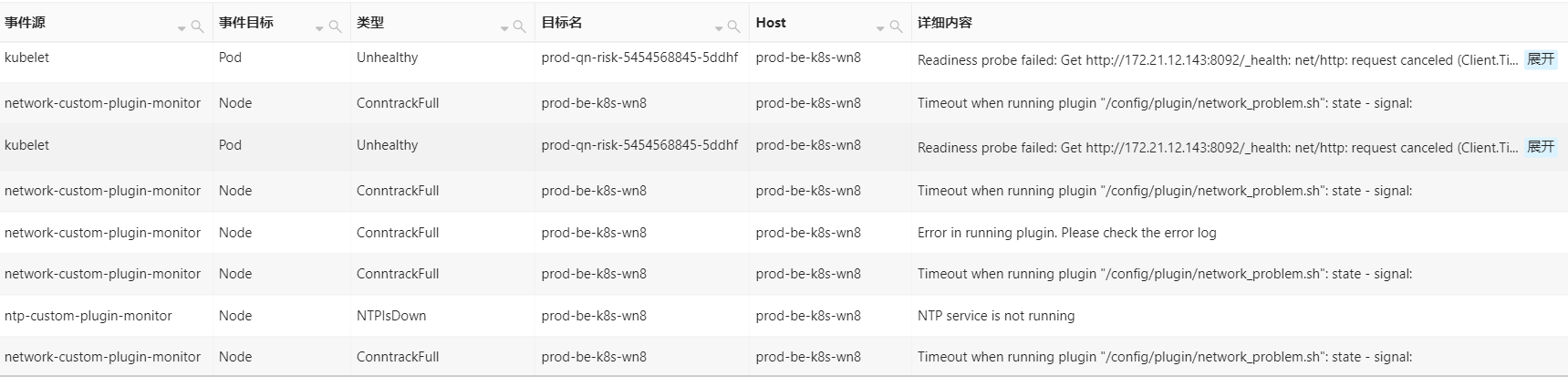

- 首先从Kubernetes事件中心告警信息如下,该告警集群常规告警事件(其实从下面这些常规告警信息是无法判断是什么故障问题)

最初怀疑是docker服务有问题,切换至节点上查看docker & kubelet 日志,如下

kubelet日志,kubelet无法初始化线程,需要增加所处运行用户的进程限制,大致意思就是需要调整ulimit -u(具体分析如下,此处先描述问题)

<root@PROD-BE-K8S-WN8 ~># journalctl -u "kubelet" --no-pager --follow-- Logs begin at Wed 2019-12-25 11:30:13 CST. --Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: encoding/json.(*decodeState).unmarshal(0xc000204580, 0xcafe00, 0xc00048f440, 0xc0002045a8, 0x0)Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/encoding/json/decode.go:180 +0x1eaDec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: encoding/json.Unmarshal(0xc00025e000, 0x9d38, 0xfe00, 0xcafe00, 0xc00048f440, 0x0, 0x0)Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/encoding/json/decode.go:107 +0x112Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: github.com/go-openapi/spec.Swagger20Schema(0xc000439680, 0x0, 0x0)Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/go-openapi/spec/spec.go:82 +0xb8Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: github.com/go-openapi/spec.MustLoadSwagger20Schema(...)Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/go-openapi/spec/spec.go:66Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: github.com/go-openapi/spec.init.4()Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/go-openapi/spec/spec.go:38 +0x57Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime: failed to create new OS thread (have 15 already; errno=11)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime: may need to increase max user processes (ulimit -u)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: fatal error: newosprocDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: goroutine 1 [running, locked to thread]:Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.throw(0xcbf07e, 0x9)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/panic.go:1116 +0x72 fp=0xc00099fe20 sp=0xc00099fdf0 pc=0x4376d2Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.newosproc(0xc000600800)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/os_linux.go:161 +0x1c5 fp=0xc00099fe80 sp=0xc00099fe20 pc=0x433be5Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.newm1(0xc000600800)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/proc.go:1843 +0xdd fp=0xc00099fec0 sp=0xc00099fe80 pc=0x43dcbdDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.newm(0xcf1010, 0x0, 0xffffffffffffffff)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/proc.go:1822 +0x9b fp=0xc00099fef8 sp=0xc00099fec0 pc=0x43db3bDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.startTemplateThread()Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/proc.go:1863 +0xb2 fp=0xc00099ff28 sp=0xc00099fef8 pc=0x43ddb2Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.LockOSThread()Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/proc.go:3845 +0x6b fp=0xc00099ff48 sp=0xc00099ff28 pc=0x44300bDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: main.init.0()Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/plugins/cilium-cni/cilium-cni.go:66 +0x30 fp=0xc00099ff58 sp=0xc00099ff48 pc=0xb2fa50Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.doInit(0x11c73a0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/proc.go:5652 +0x8a fp=0xc00099ff88 sp=0xc00099ff58 pc=0x44720aDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.main()Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/proc.go:191 +0x1c5 fp=0xc00099ffe0 sp=0xc00099ff88 pc=0x439e85Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.goexit()Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/asm_amd64.s:1374 +0x1 fp=0xc00099ffe8 sp=0xc00099ffe0 pc=0x46fc81Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: goroutine 11 [chan receive]:Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: k8s.io/klog/v2.(*loggingT).flushDaemon(0x121fc40)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/k8s.io/klog/v2/klog.go:1131 +0x8bDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: created by k8s.io/klog/v2.init.0Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/k8s.io/klog/v2/klog.go:416 +0xd8Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: goroutine 12 [select]:Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: io.(*pipe).Read(0xc000422780, 0xc00034b000, 0x1000, 0x1000, 0xba4480, 0x1, 0xc00034b000)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/io/pipe.go:57 +0xe7Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: io.(*PipeReader).Read(0xc00000e380, 0xc00034b000, 0x1000, 0x1000, 0x0, 0x0, 0x0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/io/pipe.go:134 +0x4cDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: bufio.(*Scanner).Scan(0xc00052ef38, 0x0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/bufio/scan.go:214 +0xa9Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: github.com/sirupsen/logrus.(*Entry).writerScanner(0xc00016e1c0, 0xc00000e380, 0xc000516300)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/sirupsen/logrus/writer.go:59 +0xb4Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: created by github.com/sirupsen/logrus.(*Entry).WriterLevelDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/sirupsen/logrus/writer.go:51 +0x1b7Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: goroutine 13 [select]:Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: io.(*pipe).Read(0xc0004227e0, 0xc000180000, 0x1000, 0x1000, 0xba4480, 0x1, 0xc000180000)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/io/pipe.go:57 +0xe7Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: io.(*PipeReader).Read(0xc00000e390, 0xc000180000, 0x1000, 0x1000, 0x0, 0x0, 0x0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/io/pipe.go:134 +0x4cDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: bufio.(*Scanner).Scan(0xc00020af38, 0x0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/bufio/scan.go:214 +0xa9Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: github.com/sirupsen/logrus.(*Entry).writerScanner(0xc00016e1c0, 0xc00000e390, 0xc000516320)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/sirupsen/logrus/writer.go:59 +0xb4Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: created by github.com/sirupsen/logrus.(*Entry).WriterLevelDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/sirupsen/logrus/writer.go:51 +0x1b7Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: goroutine 14 [select]:Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: io.(*pipe).Read(0xc000422840, 0xc0004c2000, 0x1000, 0x1000, 0xba4480, 0x1, 0xc0004c2000)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/io/pipe.go:57 +0xe7Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: io.(*PipeReader).Read(0xc00000e3a0, 0xc0004c2000, 0x1000, 0x1000, 0x0, 0x0, 0x0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/io/pipe.go:134 +0x4cDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: bufio.(*Scanner).Scan(0xc00052af38, 0x0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/bufio/scan.go:214 +0xa9Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: github.com/sirupsen/logrus.(*Entry).writerScanner(0xc00016e1c0, 0xc00000e3a0, 0xc000516340)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/sirupsen/logrus/writer.go:59 +0xb4Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: created by github.com/sirupsen/logrus.(*Entry).WriterLevelDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/sirupsen/logrus/writer.go:51 +0x1b7Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: goroutine 15 [select]:Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: io.(*pipe).Read(0xc0004228a0, 0xc000532000, 0x1000, 0x1000, 0xba4480, 0x1, 0xc000532000)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/io/pipe.go:57 +0xe7Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: io.(*PipeReader).Read(0xc00000e3b0, 0xc000532000, 0x1000, 0x1000, 0x0, 0x0, 0x0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/io/pipe.go:134 +0x4cDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: bufio.(*Scanner).Scan(0xc00052ff38, 0x0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/bufio/scan.go:214 +0xa9Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: github.com/sirupsen/logrus.(*Entry).writerScanner(0xc00016e1c0, 0xc00000e3b0, 0xc000516360)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/sirupsen/logrus/writer.go:59 +0xb4Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: created by github.com/sirupsen/logrus.(*Entry).WriterLevelDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/sirupsen/logrus/writer.go:51 +0x1b7

<root@PROD-BE-K8S-WN8 ~># journalctl -u "kubelet" --no-pager --follow-- Logs begin at Wed 2019-12-25 11:30:13 CST. --Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: encoding/json.(*decodeState).unmarshal(0xc000204580, 0xcafe00, 0xc00048f440, 0xc0002045a8, 0x0)Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/encoding/json/decode.go:180 +0x1eaDec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: encoding/json.Unmarshal(0xc00025e000, 0x9d38, 0xfe00, 0xcafe00, 0xc00048f440, 0x0, 0x0)Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/encoding/json/decode.go:107 +0x112Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: github.com/go-openapi/spec.Swagger20Schema(0xc000439680, 0x0, 0x0)Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/go-openapi/spec/spec.go:82 +0xb8Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: github.com/go-openapi/spec.MustLoadSwagger20Schema(...)Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/go-openapi/spec/spec.go:66Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: github.com/go-openapi/spec.init.4()Dec 22 14:21:51 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/go-openapi/spec/spec.go:38 +0x57Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime: failed to create new OS thread (have 15 already; errno=11)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime: may need to increase max user processes (ulimit -u)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: fatal error: newosprocDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: goroutine 1 [running, locked to thread]:Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.throw(0xcbf07e, 0x9)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/panic.go:1116 +0x72 fp=0xc00099fe20 sp=0xc00099fdf0 pc=0x4376d2Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.newosproc(0xc000600800)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/os_linux.go:161 +0x1c5 fp=0xc00099fe80 sp=0xc00099fe20 pc=0x433be5Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.newm1(0xc000600800)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/proc.go:1843 +0xdd fp=0xc00099fec0 sp=0xc00099fe80 pc=0x43dcbdDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.newm(0xcf1010, 0x0, 0xffffffffffffffff)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/proc.go:1822 +0x9b fp=0xc00099fef8 sp=0xc00099fec0 pc=0x43db3bDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.startTemplateThread()Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/proc.go:1863 +0xb2 fp=0xc00099ff28 sp=0xc00099fef8 pc=0x43ddb2Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.LockOSThread()Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/proc.go:3845 +0x6b fp=0xc00099ff48 sp=0xc00099ff28 pc=0x44300bDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: main.init.0()Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/plugins/cilium-cni/cilium-cni.go:66 +0x30 fp=0xc00099ff58 sp=0xc00099ff48 pc=0xb2fa50Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.doInit(0x11c73a0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/proc.go:5652 +0x8a fp=0xc00099ff88 sp=0xc00099ff58 pc=0x44720aDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.main()Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/proc.go:191 +0x1c5 fp=0xc00099ffe0 sp=0xc00099ff88 pc=0x439e85Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: runtime.goexit()Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/runtime/asm_amd64.s:1374 +0x1 fp=0xc00099ffe8 sp=0xc00099ffe0 pc=0x46fc81Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: goroutine 11 [chan receive]:Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: k8s.io/klog/v2.(*loggingT).flushDaemon(0x121fc40)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/k8s.io/klog/v2/klog.go:1131 +0x8bDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: created by k8s.io/klog/v2.init.0Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/k8s.io/klog/v2/klog.go:416 +0xd8Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: goroutine 12 [select]:Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: io.(*pipe).Read(0xc000422780, 0xc00034b000, 0x1000, 0x1000, 0xba4480, 0x1, 0xc00034b000)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/io/pipe.go:57 +0xe7Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: io.(*PipeReader).Read(0xc00000e380, 0xc00034b000, 0x1000, 0x1000, 0x0, 0x0, 0x0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/io/pipe.go:134 +0x4cDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: bufio.(*Scanner).Scan(0xc00052ef38, 0x0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/bufio/scan.go:214 +0xa9Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: github.com/sirupsen/logrus.(*Entry).writerScanner(0xc00016e1c0, 0xc00000e380, 0xc000516300)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/sirupsen/logrus/writer.go:59 +0xb4Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: created by github.com/sirupsen/logrus.(*Entry).WriterLevelDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/sirupsen/logrus/writer.go:51 +0x1b7Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: goroutine 13 [select]:Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: io.(*pipe).Read(0xc0004227e0, 0xc000180000, 0x1000, 0x1000, 0xba4480, 0x1, 0xc000180000)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/io/pipe.go:57 +0xe7Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: io.(*PipeReader).Read(0xc00000e390, 0xc000180000, 0x1000, 0x1000, 0x0, 0x0, 0x0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/io/pipe.go:134 +0x4cDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: bufio.(*Scanner).Scan(0xc00020af38, 0x0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/bufio/scan.go:214 +0xa9Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: github.com/sirupsen/logrus.(*Entry).writerScanner(0xc00016e1c0, 0xc00000e390, 0xc000516320)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/sirupsen/logrus/writer.go:59 +0xb4Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: created by github.com/sirupsen/logrus.(*Entry).WriterLevelDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/sirupsen/logrus/writer.go:51 +0x1b7Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: goroutine 14 [select]:Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: io.(*pipe).Read(0xc000422840, 0xc0004c2000, 0x1000, 0x1000, 0xba4480, 0x1, 0xc0004c2000)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/io/pipe.go:57 +0xe7Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: io.(*PipeReader).Read(0xc00000e3a0, 0xc0004c2000, 0x1000, 0x1000, 0x0, 0x0, 0x0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/io/pipe.go:134 +0x4cDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: bufio.(*Scanner).Scan(0xc00052af38, 0x0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/bufio/scan.go:214 +0xa9Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: github.com/sirupsen/logrus.(*Entry).writerScanner(0xc00016e1c0, 0xc00000e3a0, 0xc000516340)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/sirupsen/logrus/writer.go:59 +0xb4Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: created by github.com/sirupsen/logrus.(*Entry).WriterLevelDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/sirupsen/logrus/writer.go:51 +0x1b7Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: goroutine 15 [select]:Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: io.(*pipe).Read(0xc0004228a0, 0xc000532000, 0x1000, 0x1000, 0xba4480, 0x1, 0xc000532000)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/io/pipe.go:57 +0xe7Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: io.(*PipeReader).Read(0xc00000e3b0, 0xc000532000, 0x1000, 0x1000, 0x0, 0x0, 0x0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/io/pipe.go:134 +0x4cDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: bufio.(*Scanner).Scan(0xc00052ff38, 0x0)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /usr/local/go/src/bufio/scan.go:214 +0xa9Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: github.com/sirupsen/logrus.(*Entry).writerScanner(0xc00016e1c0, 0xc00000e3b0, 0xc000516360)Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/sirupsen/logrus/writer.go:59 +0xb4Dec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: created by github.com/sirupsen/logrus.(*Entry).WriterLevelDec 22 14:22:06 PROD-BE-K8S-WN8 kubelet[3124]: /go/src/github.com/cilium/cilium/vendor/github.com/sirupsen/logrus/writer.go:51 +0x1b7

- 于是查看系统日志,如下(本来想追踪当前时间的系统日志,但当时系统反应超级慢,但是当时的系统load是很低并没有很高,而且CPU & MEM利用率也不高,具体在此是系统为什么反应慢,后面再分析 “问题一”)

在执行查看系统命令,提示无法创建进程

<root@PROD-BE-K8S-WN8 ~># dmesg -TL

-bash: fork: retry: No child processes

[Fri Sep 17 18:25:53 2021] Linux version 5.11.1-1.el7.elrepo.x86_64 (mockbuild@Build64R7) (gcc (GCC) 9.3.1 20200408 (Red Hat 9.3.1-2), GNU ld version 2.32-16.el7) #1 SMP Mon Feb 22 17:30:33 EST 2021

[Fri Sep 17 18:25:53 2021] Command line: BOOT_IMAGE=/boot/vmlinuz-5.11.1-1.el7.elrepo.x86_64 root=UUID=8770013a-4455-4a77-b023-04d04fa388c8 ro crashkernel=auto spectre_v2=retpoline net.ifnames=0 console=tty0 console=ttyS0,115200n8 noibrs

[Fri Sep 17 18:25:53 2021] x86/fpu: Supporting XSAVE feature 0x001: 'x87 floating point registers'

[Fri Sep 17 18:25:53 2021] x86/fpu: Supporting XSAVE feature 0x002: 'SSE registers'

[Fri Sep 17 18:25:53 2021] x86/fpu: Supporting XSAVE feature 0x004: 'AVX registers'

[Fri Sep 17 18:25:53 2021] x86/fpu: Supporting XSAVE feature 0x008: 'MPX bounds registers'

[Fri Sep 17 18:25:53 2021] x86/fpu: Supporting XSAVE feature 0x010: 'MPX CSR'

[Fri Sep 17 18:25:53 2021] x86/fpu: Supporting XSAVE feature 0x020: 'AVX-512 opmask'

[Fri Sep 17 18:25:53 2021] x86/fpu: Supporting XSAVE feature 0x040: 'AVX-512 Hi256'

- 尝试在该节点新建Container,如下

提示初始化线程失败,资源不够

<root@PROD-BE-K8S-WN8 ~># docker run -it --rm tomcat bash

runtime/cgo: runtime/cgo: pthread_create failed: Resource temporarily unavailable

pthread_create failed: Resource temporarily unavailable

SIGABRT: abort

PC=0x7f34d16023d7 m=3 sigcode=18446744073709551610 goroutine 0 [idle]:

runtime: unknown pc 0x7f34d16023d7

stack: frame={sp:0x7f34cebb8988, fp:0x0} stack=[0x7f34ce3b92a8,0x7f34cebb8ea8)

00007f34cebb8888: 000055f2b345a7bf <runtime.(*mheap).scavengeLocked+559> 00007f34cebb88c0

00007f34cebb8898: 000055f2b3450e0e <runtime.(*mTreap).end+78> 0000000000000000

故障分析

根据以上的故障问题初步分析,第一反应是ulimi -u值太小,已经被hit(触及到,突破该参数的上限),于是查看各用户的ulimi -u,官方描述就是max user processes(该参数的值上限应该小于user.max_pid_namespace的值,该参数是内核初始化分配的)

监控信息

- 查看用户的max processes的上限,如下

<root@PROD-BE-K8S-WN8 ~># ulimit -u

249047

<root@PROD-BE-K8S-WN8 ~># ulimit -a

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 249047

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 65535

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 249047

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

- 因为ulimit是针对于每用户而言的,具体还要验证每个用户的limit的配置,如下

根据以下配置判断,并没有超出设定的范围

# 默认limits.conf的配置

# End of file

root soft nofile 65535

root hard nofile 65535

* soft nofile 65535

* hard nofile 65535 # limits.d/20.nproc.conf的配置,如下

# Default limit for number of user's processes to prevent

# accidental fork bombs.

# See rhbz #432903 for reasoning. * soft nproc 65536

root soft nproc unlimited

- 查看节点运行的进程

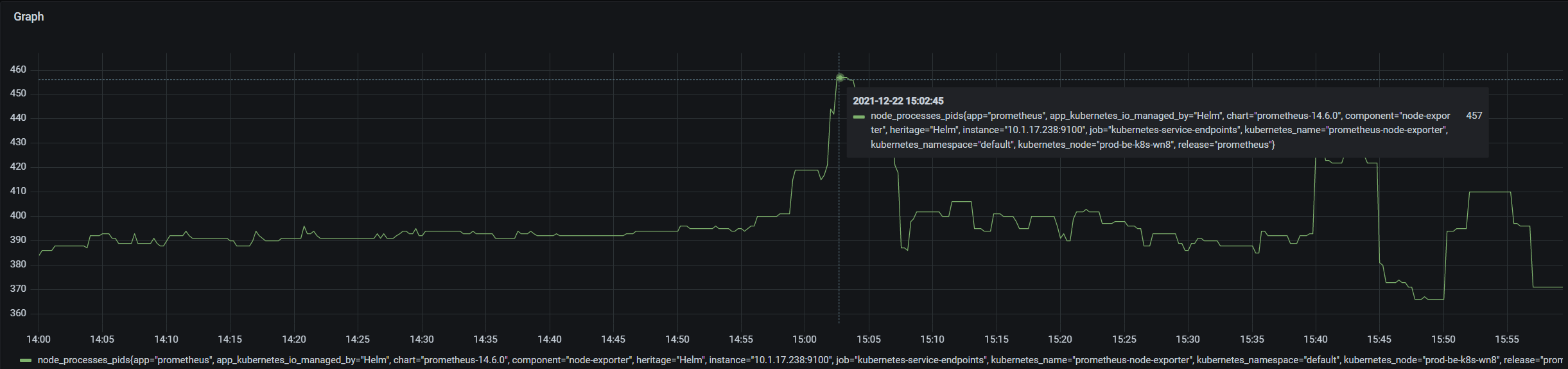

从监控信息可以看到在故障最高使用457个进程

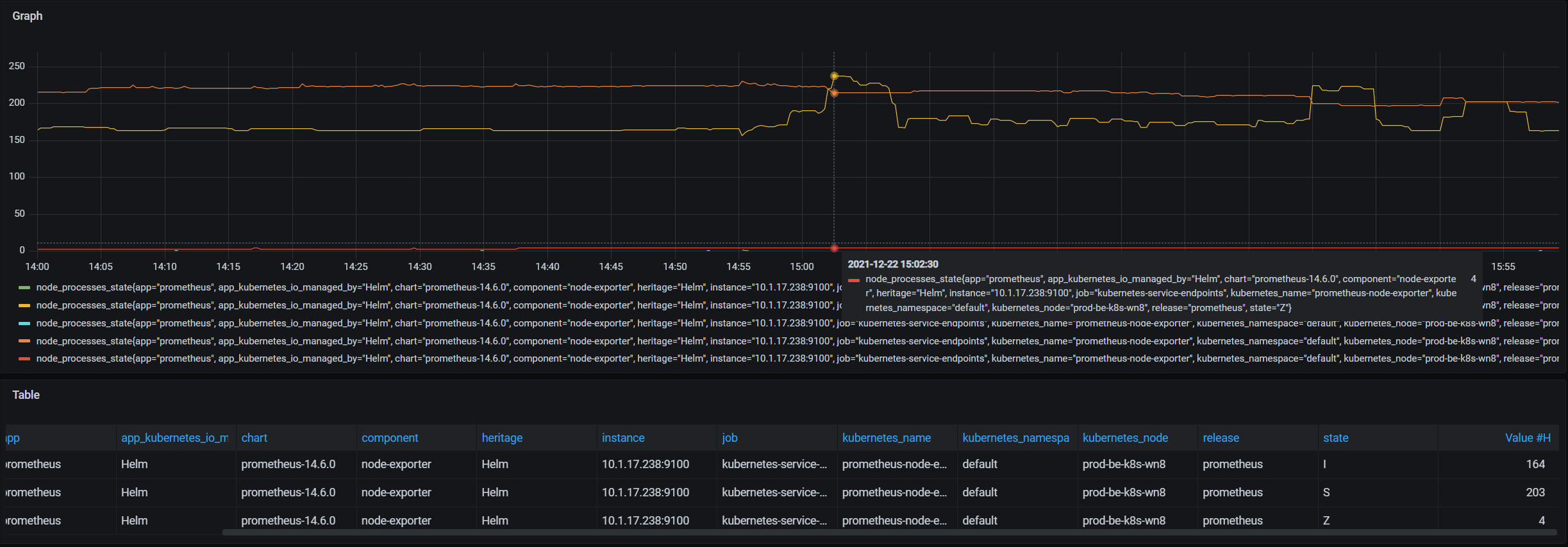

- 查看系统中的进程状态,如下

虽然说有个Z状的进程,但是也不影响当前系统运行

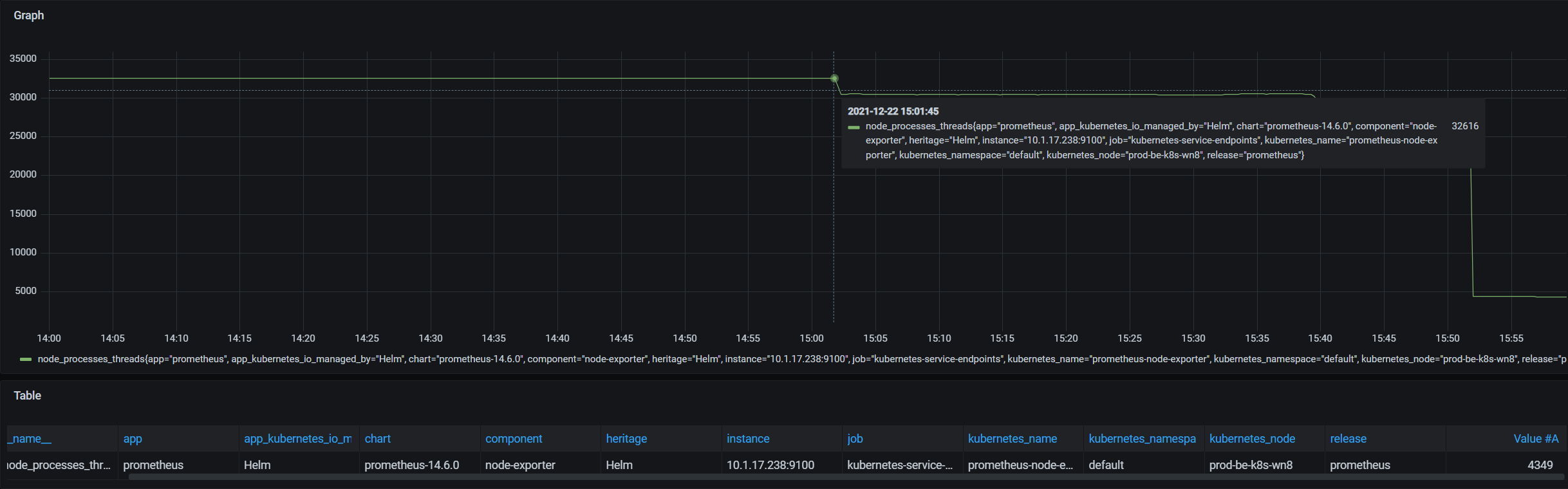

- 查看系统create的线程数,如下

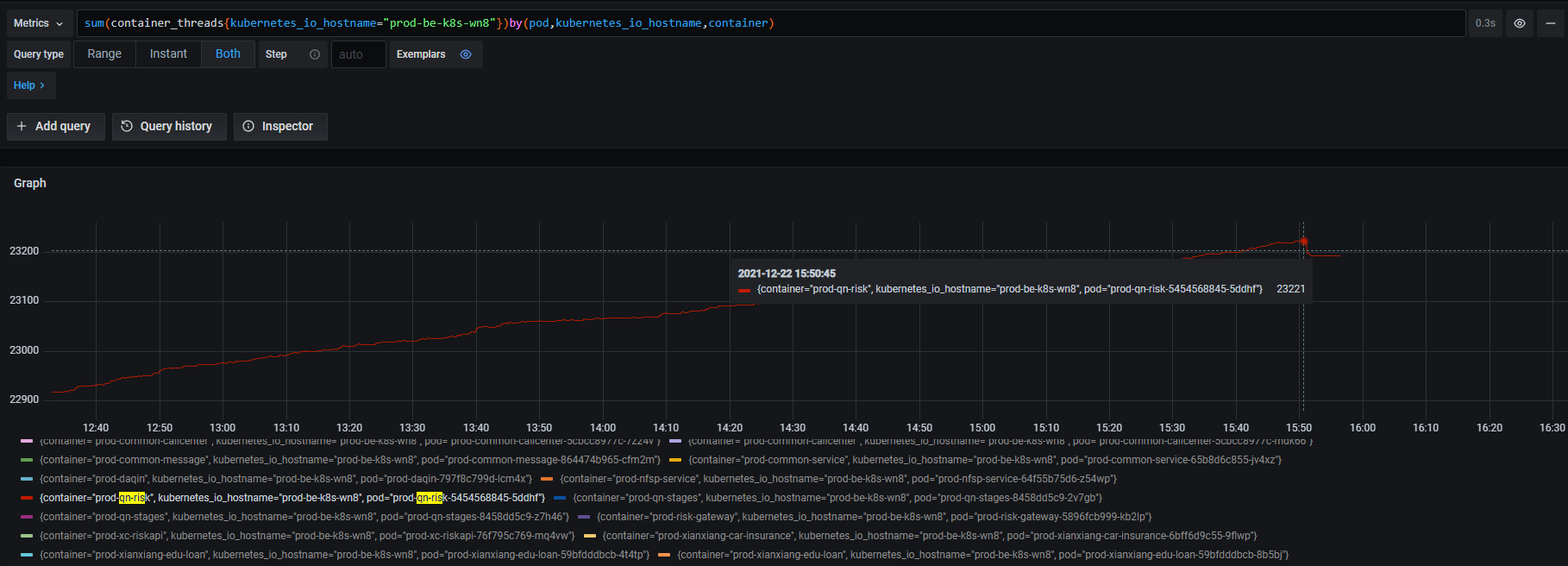

从下监控图表,当时最大线程数是 32616

分析过程

从以上监控信息分析,故障时间区间,系统运行的线程略高 31616,但是该值却没有超过当前用户的ulimit -u的值,初步排除该线索

- 根据系统抛出的错误提示,Google一把 fork: Resource temporarily unavailable

https://github.com/awslabs/amazon-eks-ami/issues/239

在整个帖子看到一条这样的提示

One possible cause is running out of process IDs. Check you don't have 40.000 defunct processes or similar on nodes with problems

- 于是根据该线索,翻阅linux内核文档,搜索PID相关字段,其中找到如下相关的PID参数

kernel.core_uses_pid = 1

引用官方文档

https://www.kernel.org/doc/html/latest/admin-guide/sysctl/kernel.html#core-uses-pid

参数大致意思是为系统coredump文件命名,实际生成的名字为 “core.PID”,则排除该参数引起的问题

kernel.ns_last_pid = 23068

引用官方文档

https://www.kernel.org/doc/html/latest/admin-guide/sysctl/kernel.html#ns-last-pid

参数大致意思是,记录当前系统最后分配的PID identifiy,当kernel fork执行下一个task时,kernel将从此pid分配identify

kernel.pid_max = 32768

引用官方文档

https://www.kernel.org/doc/html/latest/admin-guide/sysctl/kernel.html#pid-max

参数大致意思是,kernel允许当前系统分配的最大PID identify,如果kernel 在fork时hit到这个值时,kernel会wrap back到内核定义的minimum PID identify,意思就是不能分配大于该参数设定的值+1,该参数边界范围是全局的,属于系统全局边界

通过该参数的阐述,大致问题定位到了,在linux中其实thread & process 的创建都会被该参数束缚,因为无论是线程还是进程结构体基本上一样的,都需要PID来标识

user.max_pid_namespaces = 253093

引用官方文档

https://www.kernel.org/doc/html/latest/admin-guide/sysctl/user.html#max-pid-namespaces

参数大致意思是,在当前所属用户namespace下允许该用户创建的最大的PID,意思应该是最大进程吧,等同于参数ulimit -u的值,由内核初始化而定义的,具体算法应该是(init_task.signal->rlim[RLIMIT_NPROC].rlim_max = max_threads/2)

kernel.cad_pid = 1

引用官方文档

https://www.kernel.org/doc/html/latest/admin-guide/sysctl/kernel.html#cad-pid

参数大致意思是,向系统发送reboot信号,特别针对于ctrl+alt+del,对于该参数不需要理解太多,用不到

- 查看系统内核参数kernel.pid_max,如下

关于该参数的初始值是如何计算的,下面会分析的

<root@PROD-BE-K8S-WN8 ~># sysctl -a | grep pid_max

kernel.pid_max = 32768 - 返回系统中,需要定位是哪个应用系统create如此之多的线程,如下(推荐安装监控系统,用于记录监控数据信息)

- 通常网上的教程都是盲目的调整对应的内核参数值,个人认为运维所有操作都是被动的,不具备根治问题,需要从源头解决问题,最好是抛给研发,在应用系统初始化,create适当的线程量

具体如何优化内核参数,下面来分析

参数分析

相关内核参数详细说明,及如何调整,及相互关系,及计算方式,参数边界,如下说明

kernel.pid_max

概念就不详述了,参考上文(大致意思就是,系统最大可分配的PID identify,理解有点抽象,严格意义是最大标识,每个进程的标识符,当然也代表最大进程吧)

话不多说,分析源代码,如有误,请指出

- 代码地址

int pid_max = PID_MAX_DEFAULT; #define RESERVED_PIDS 300 int pid_max_min = RESERVED_PIDS + 1;

int pid_max_max = PID_MAX_LIMIT;上面代表表示,pid_max默认赋值等于PID_MAX_DEFAULT的值,但是初始创建的PID identify是RESERVD_PIDS + 1,也就是等于301,小于等于300是系统内核保留值(可能是特殊使用吧)

那么PID_MAX_DEFAULT的值是如何计算的及初始化时是如何定义的及默认值、最大值,及LIMIT的值

具体PID_MAX_DEFAULT代码地址,如下

linux/threads.h at v5.11-rc1 · torvalds/linux · GitHub

/*

* This controls the default maximum pid allocated to a process

* 大致意思就是,如果在编译内核时指定或者为CONFIG_BASE_SMALl赋值了,那么默认值就是4096,反而就是32768

*/

#define PID_MAX_DEFAULT (CONFIG_BASE_SMALL ? 0x1000 : 0x8000) /*

* A maximum of 4 million PIDs should be enough for a while.

* [NOTE: PID/TIDs are limited to 2^30 ~= 1 billion, see FUTEX_TID_MASK.]

* 如果CONFIG_BASE_SMALL被赋值了,则最大值就是32768,如果条件不成立,则判断long的类型通常应该是操作系统版本,如果大于4字节,取值范围大约就是4 million,精确计算就是4,194,304,如果条件还不成立则只能取值最被设置的PID_MAX_DEFAULT的值

*/ #define PID_MAX_LIMIT (CONFIG_BASE_SMALL ? PAGE_SIZE * 8 : \

(sizeof(long) > 4 ? 4 * 1024 * 1024 : PID_MAX_DEFAULT))

但是翻阅man proc的官方文档,明确说明:如果OS为64位系统PID_MAX_LIMIT的边界值为2的22次方,精确计算就是2*1024*1024*1024等于1,073,741,824,10亿多。而32BIT的操作系统默认就是32768

如何查看CONFIG_BASE_SMALL的值,如下

<root@HK-K8S-WN1 ~># cat /boot/config-5.11.1-1.el7.elrepo.x86_64 | grep CONFIG_BASE_SMALL

CONFIG_BASE_SMALL=00代表未被赋值

kernel.threads-max

Documentation for /proc/sys/kernel/ — The Linux Kernel documentation

- 参数解释:

该参数大致意思是,系统内核fork()允许创建的最大线程数,在内核初始化时已经设定了此值,但是即使设定了该值,但是线程结构只能占用可用RAM page的一部分,约1/8(注意是可用内存,即Available memory page),如果超出此值1/8则threads-max的值会减少

内核初始化时,默认指定最小值为MIN_THREADS = 20,MAX_THREADS的最大边界值是由FUTEX_TID_MASK值而约束,但是在内核初始化时,kernel.threads-max的值是根据系统实际的物理内存计算出来的,如下代码

linux/fork.c at v5.16-rc1 · torvalds/linux · GitHub

/*

* set_max_threads

*/

static void set_max_threads(unsigned int max_threads_suggested)

{

u64 threads;

unsigned long nr_pages = totalram_pages(); /*

* The number of threads shall be limited such that the thread

* structures may only consume a small part of the available memory.

*/

if (fls64(nr_pages) + fls64(PAGE_SIZE) > 64)

threads = MAX_THREADS;

else

threads = div64_u64((u64) nr_pages * (u64) PAGE_SIZE,

(u64) THREAD_SIZE * 8UL); if (threads > max_threads_suggested)

threads = max_threads_suggested; max_threads = clamp_t(u64, threads, MIN_THREADS, MAX_THREADS);

}

kernel.threads-max该参数一般不需要手动更改,因为在内核根据现在有的内存已经算好了,不建议修改

那么kernel.threads-max由FUTEX_TID_MASK常量所约束,那它的具体值是多少呢,如下

linux/futex.h at v5.16-rc1 · torvalds/linux · GitHub

#define FUTEX_TID_MASK 0x3fffffff

vm.max_map_count

Documentation for /proc/sys/vm/ — The Linux Kernel documentation

- 参数解释

大致意思是,允许系统进程最大分配的内存MAP区域,一般应用程序占用少于1000个map,但是个别程序,特别针对于被malloc分配,可能会大量消耗,每个allocation会占用一到二个map,默认值为65530

通过设定此值可以限制进程使用VMA(虚拟内存区域)的数量。虚拟内存区域是一个连续的虚拟地址空间区域。在进程的生命周期中,每当程序尝试在内存中映射文件,链接到共享内存段,或者分配堆空间的时候,这些区域将被创建。调优这个值将限制进程可拥有VMA的数量。限制一个进程拥有VMA的总数可能导致应用程序出错,因为当进程达到了VMA上线但又只能释放少量的内存给其他的内核进程使用时,操作系统会抛出内存不足的错误。如果你的操作系统在NORMAL区域仅占用少量的内存,那么调低这个值可以帮助释放内存给内核用参数大致作用就是这样的。

可以总结一下什么情况下,适当的增加

- 压力测试,压测应用程序最大create的线程数量

- 高并发的应用系统,单进程并发非常高

参考文档

http://www.kegel.com/c10k.html#limits.threads

https://listman.redhat.com/archives/phil-list/2003-August/msg00005.html

https://bugzilla.redhat.com/show_bug.cgi?id=1459891

配置建议

参数边界

| 参数名称 | 范围边界 |

| kernel.pid_max | 系统全局限制 |

| kernel.threads-max | 系统全局限制 |

| vm.max_map_count | 进程级别限制 |

| /etc/security/limits.conf | 用户级别限制 |

总结建议

- kernel.pid_max约束整个系统最大create的线程与进程数量,无论是线程还是进程,都不能hit到此设定的值,错误有二种(create接近抛出Resource temporarily unavailable,create大于抛出No more processes...);可以根据实际应用场景及应用平台修改此值,比如Kubernetes平台,一个节点可能运行上百Container instance,或者是高并发,多线程的应用

- kernel.threads-max只针对事个系统所有用户的最大他create的线程数量,就大于系统所有用户设定的ulimit -u的值,最好ulimit -u 精确的计算一下(不推荐手动修改该参数,该参数是由在内核初始化系统算出来的结果,如果将其放大可以会造成内存溢出,一般系统默认值不会被hit到)

- vm.max_map_count是针对系统单个进程允许被分配的VMA区域,如果在压测时,会有二种情况抛出(线程不够11=no more threads allowed,资源不够12 = out of mem.)但是此值了不能设置的太大,会造成内存开销,回收慢;此值的调整,需要根据实际压测结果而定(常指可以被create多少个线程达到饱和)

- limits.conf针对用户级别的,在设置此值时,需要考虑到上面二个全局参数的值,用户的total值(不管是nproc 还是nofile)不能大于与之对应的kernel.pid_max & kernel.threads-max & fs.file-max

- Linux通常不会对单个CPU的create线程数做上限,过于复杂,个人认为内存不好精确计算吧

[转帖]如何理解 kernel.pid_max & kernel.threads-max & vm.max_map_count的更多相关文章

- 测试kernel.pid_max值

# sysctl kernel.pid_max kernel.pid_max = # sysctl - kernel.pid_max = #include <unistd.h> #incl ...

- 嵌入式 uboot引导kernel,kernel引导fs

1.uboot引导kernel: u-boot中有个bootm命令,它可以引导内存中的应用程序映像(Kernel),bootm命令对应 common/cmd_bootm.c中的do_bootm()函数 ...

- 在新版linux上编译老版本的kernel出现kernel/timeconst.h] Error 255

在使用ubuntu16.4编译Linux-2.6.31内核时出现这样的错误 可以修改timeconst.pl的内容后正常编译. 以下是编译错误提示的内容: Can't use 'defined(@ar ...

- 嵌入式 uboot引导kernel,kernel引导fs【转】

转自:http://www.cnblogs.com/lidabo/p/5383934.html#3639633 1.uboot引导kernel: u-boot中有个bootm命令,它可以引导内存中的应 ...

- 【java】基于Tomcat的WebSocket转帖 + 自己理解

网址:http://redstarofsleep.iteye.com/blog/1488639 原帖时间是2012-5-8,自己书写时间是2013年6月21日10:39:06 Java代码 packa ...

- 【转帖】理解 Linux 的虚拟内存

理解 Linux 的虚拟内存 https://www.cnblogs.com/zhenbianshu/p/10300769.html 段页式内存 文章了里面讲了 页表 没讲段表 记得最开始的时候 学习 ...

- [转帖]深入理解latch: cache buffers chains

深入理解latch: cache buffers chains http://blog.itpub.net/12679300/viewspace-1244578/ 原创 Oracle 作者:wzq60 ...

- [转帖]彻底理解cookie,session,token

彻底理解cookie,session,token https://www.cnblogs.com/moyand/p/9047978.html 发展史 1.很久很久以前,Web 基本上就是文档的浏览而已 ...

- [转帖]深入理解 MySQL—锁、事务与并发控制

深入理解 MySQL—锁.事务与并发控制 http://www.itpub.net/2019/04/28/1723/ 跟oracle也类似 其实所有的数据库都有相同的机制.. 学习了机制才能够更好的工 ...

- 解决Unable to create new native thread

两种类型的Out of Memory java.lang.OutOfMemoryError: Java heap space error 当JVM尝试在堆中分配对象,堆中空间不足时抛出.一般通过设定J ...

随机推荐

- Windows Server 2012 R2 无法更新问题

Windows Server 2012 R2 无法更新问题 新安装的ISO镜像由于年久失修,原先的Update服务器可能已经失效,需要安装更新补丁,才可以正常指向新的更新服务器,甚至连系统激活(输入正 ...

- 浅谈树形DP

树形DP是动态规划中最难也最常考的内容.具有DP和图论相结合的特点. 但从本质上来说,树形DP只不过是一种线性DP,只是将它与搜索结合起来了而已. 树形DP的基本步骤 读图 树形DP的题目中,通常会给 ...

- Tailscale 基础教程:Headscale 的部署方法和使用教程

Tailscale 是一种基于 WireGuard 的虚拟组网工具,它在用户态实现了 WireGuard 协议,相比于内核态 WireGuard 性能会有所损失,但在功能和易用性上下了很大功夫: 开箱 ...

- P1990-覆盖墙壁

分情况: \[\left\{ \begin{aligned} & 条形 \left\{ \begin{aligned} 横着\\ 竖着\\ \end{aligned}\right. \\ &a ...

- 开源云原生网关Linux Traefik本地部署结合内网穿透远程访问

开源云原生网关Linux Traefik本地部署结合内网穿透远程访问 前言 Træfɪk 是一个云原生的新型的 HTTP 反向代理.负载均衡软件,能轻易的部署微服务.它支持多种后端 (Docker ...

- openGemini内核源码正式对外开源

摘要:openGemini是一个开源的分布式时序数据库系统,可广泛应用于物联网.车联网.运维监控.工业互联网等业务场景,具备卓越的读写性能和高效的数据分析能力. 本文分享自华为云社区<华为云面向 ...

- 一文带你熟知ForkJoin

摘要:ForkJoin将复杂的计算当做一个任务,而分解的多个计算则是当做一个个子任务来并行执行. 本文分享自华为云社区<[高并发]什么是ForkJoin?看这一篇就够了!>,作者:冰 河. ...

- PNG文件解读(2):PNG格式文件结构与数据结构解读—解码PNG数据

PNG文件识别 之前写过<JPEG/Exif/TIFF格式解读(1):JEPG图片压缩与存储原理分析>,JPEG文件是以,FFD8开头,FFD9结尾,中间存储着以0xFFE0~0xFFEF ...

- 如何配置Apple推送证书 push证书

转载:如何配置Apple推送证书 push证书 想要制作push证书,就需要使用快捷工具appuploader工具制 作证书,然后使用Apple的推送功能配置push证书,就可以得到了.PS:pu ...

- App备案与iOS云管理式证书 ,公钥及证书SHA-1指纹的获取方法

iOS 备案查看信息 iOS平台Bundle ID 公钥 证书SHA-1指纹 IOS平台服务器域名 获取 Bundle ID: 或者 https://developer.apple.com/accou ...