Building Flink from Source (Windows Environment)

Preface

I recently started tinkering with Flink. Before digging into the code, the first step is to compile it.

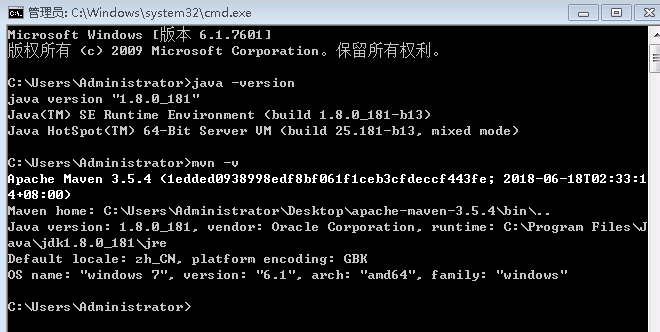

Build Environment

Windows 7, Java, Maven

Build Steps

Start from the official documentation at https://ci.apache.org/projects/flink/flink-docs-release-1.6/start/building.html, reproduced below:

Building Flink from Source

This page covers how to build Flink 1.6.1 from sources.

Build Flink

In order to build Flink you need the source code. Either download the source of a release or clone the git repository.

In addition you need Maven 3 and a JDK (Java Development Kit). Flink requires at least Java 8 to build.

NOTE: Maven 3.3.x can build Flink, but will not properly shade away certain dependencies. Maven 3.2.5 creates the libraries properly. To build unit tests use Java 8u51 or above to prevent failures in unit tests that use the PowerMock runner.
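The Java requirement in the note above can be checked before starting a long build. The following is a minimal Python sketch, not part of the Flink documentation. The function names `parse_java_version` and `meets_test_requirement` are hypothetical, and the sketch assumes a version string in the form reported by `java -version`, such as `1.8.0_131`.

```python
import re
from typing import Tuple


def parse_java_version(version: str) -> Tuple[int, int]:
    """Return (major, update) for strings such as '1.8.0_131' or '11.0.2'."""
    match = re.match(r"(\d+)(?:\.(\d+))?(?:\.(\d+))?(?:_(\d+))?", version.strip())
    if match is None:
        raise ValueError(f"unrecognized Java version: {version!r}")
    first = int(match.group(1))
    if first == 1:
        # Legacy scheme: 1.<major>.0_<update>, e.g. 1.8.0_131
        return int(match.group(2) or 0), int(match.group(4) or 0)
    # Newer scheme: <major>.<minor>.<security>.
    # Assumption: the third component is treated as the update level.
    return first, int(match.group(3) or 0)


def meets_test_requirement(version: str) -> bool:
    """True if the JDK is Java 8u51 or above, the minimum the note gives for unit tests."""
    return parse_java_version(version) >= (8, 51)
```

For example, `meets_test_requirement("1.8.0_45")` evaluates to `False`, while `"1.8.0_131"` passes. The check only covers the minimum stated in the note; it says nothing about whether newer JDKs can build this Flink release.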

To clone from git, enter:

git clone https://github.com/apache/flink

The simplest way of building Flink is by running:

mvn clean install -DskipTests

This instructs Maven (mvn) to first remove all existing builds (clean) and then create a new Flink binary (install).

To speed up the build you can skip tests, QA plugins, and JavaDocs:

mvn clean install -DskipTests -Dfast

The default build adds a Flink-specific JAR for Hadoop 2, to allow using Flink with HDFS and YARN.

Dependency Shading

Flink shades away some of the libraries it uses, in order to avoid version clashes with user programs that use different versions of these libraries. Among the shaded libraries are Google Guava, Asm, Apache Curator, Apache HTTP Components, Netty, and others.

The dependency shading mechanism was recently changed in Maven and requires users to build Flink slightly differently, depending on their Maven version:

Maven 3.0.x, 3.1.x, and 3.2.x: It is sufficient to call mvn clean install -DskipTests in the root directory of the Flink code base.

Maven 3.3.x: The build has to be done in two steps, first in the base directory, then in the distribution project:

mvn clean install -DskipTests

cd flink-dist

mvn clean install

Note: To check your Maven version, run mvn --version.
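The version-dependent rule in this section can be written down as a small lookup. This is a rough Python sketch under stated assumptions: the function name `flink_build_steps` is hypothetical, and Maven versions outside the 3.0.x to 3.3.x range are rejected because the text above does not say how they behave.

```python
from typing import List


def flink_build_steps(maven_version: str) -> List[str]:
    """Return the commands to run, in order, starting in the Flink root directory."""
    parts = maven_version.strip().split(".")
    if len(parts) < 2 or not (parts[0].isdigit() and parts[1].isdigit()):
        raise ValueError(f"unrecognized Maven version: {maven_version!r}")
    major, minor = int(parts[0]), int(parts[1])
    if major != 3:
        raise ValueError("the documentation asks for Maven 3")
    if minor <= 2:
        # Maven 3.0.x, 3.1.x, 3.2.x: a single build in the root directory
        return ["mvn clean install -DskipTests"]
    if minor == 3:
        # Maven 3.3.x: build the root first, then rebuild flink-dist
        return ["mvn clean install -DskipTests", "cd flink-dist", "mvn clean install"]
    raise ValueError(f"Maven {maven_version} is not covered by the documentation quoted here")
```

Passing the version printed by `mvn --version` (for example `3.3.9`) yields the two-step sequence, while `3.2.5` yields the single command.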

Hadoop Versions

Info: Most users do not need to do this manually. The download page contains binary packages for common Hadoop versions.

Flink has dependencies to HDFS and YARN which are both dependencies from Apache Hadoop. There exist many different versions of Hadoop (from both the upstream project and the different Hadoop distributions). If you are using a wrong combination of versions, exceptions can occur.

Hadoop is only supported from version 2.4.0 upwards. You can also specify a specific Hadoop version to build against:

mvn clean install -DskipTests -Dhadoop.version=2.6.1

Vendor-specific Versions (specifying a Hadoop vendor)

To build Flink against a vendor specific Hadoop version, issue the following command:

mvn clean install -DskipTests -Pvendor-repos -Dhadoop.version=2.6.1-cdh5.0.0

The -Pvendor-repos activates a Maven build profile that includes the repositories of popular Hadoop vendors such as Cloudera, Hortonworks, or MapR.

The official documentation shows a CDH (Cloudera) version. Here is an example for an HDP (Hortonworks) version:

mvn clean install -DskipTests -Pvendor-repos -Dhadoop.version=2.7.3.2.6.1.114-2
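The plain and vendor-specific commands differ only in the profile flag and the version string, so they can be assembled by one helper. The following Python sketch is illustrative only: `hadoop_build_command` is a hypothetical name, and it assumes that the first two numeric components of a vendor version (for example `2.7` in `2.7.3.2.6.1.114-2`) identify the base Hadoop release, which is then checked against the 2.4.0 minimum mentioned earlier.

```python
import re


def hadoop_build_command(hadoop_version: str, vendor: bool = False) -> str:
    """Build the mvn command line for a given Hadoop version."""
    match = re.match(r"(\d+)\.(\d+)", hadoop_version)
    if match is None:
        raise ValueError(f"unrecognized Hadoop version: {hadoop_version!r}")
    # Assumption: the leading major.minor pair is the base Hadoop release.
    if (int(match.group(1)), int(match.group(2))) < (2, 4):
        raise ValueError("Hadoop is only supported from version 2.4.0 upwards")
    parts = ["mvn", "clean", "install", "-DskipTests"]
    if vendor:
        # Activates the profile that adds the vendor repositories
        parts.append("-Pvendor-repos")
    parts.append(f"-Dhadoop.version={hadoop_version}")
    return " ".join(parts)
```

Calling `hadoop_build_command("2.7.3.2.6.1.114-2", vendor=True)` reproduces the HDP command shown above, and `hadoop_build_command("2.6.1")` reproduces the plain one.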

Detailed version information is available at http://repo.hortonworks.com/content/repositories/releases/org/apache/hadoop/hadoop-common/

Scala Versions

Info: Users that purely use the Java APIs and libraries can ignore this section.

Flink has APIs, libraries, and runtime modules written in Scala. Users of the Scala API and libraries may have to match the Scala version of Flink with the Scala version of their projects (because Scala is not strictly backwards compatible).

Flink 1.4 currently builds only with Scala version 2.11.

We are working on supporting Scala 2.12, but certain breaking changes in Scala 2.12 make this a more involved effort. Please check out this JIRA issue for updates.

Encrypted File Systems

If your home directory is encrypted you might encounter a java.io.IOException: File name too long exception. Some encrypted file systems, like encfs used by Ubuntu, do not allow long filenames, which is the cause of this error.

The workaround is to add:

<args>
  <arg>-Xmax-classfile-name</arg>
  <arg>128</arg>
</args>

in the compiler configuration of the pom.xml file of the module causing the error. For example, if the error appears in the flink-yarn module, the above code should be added under the <configuration> tag of scala-maven-plugin. See this issue for more information.

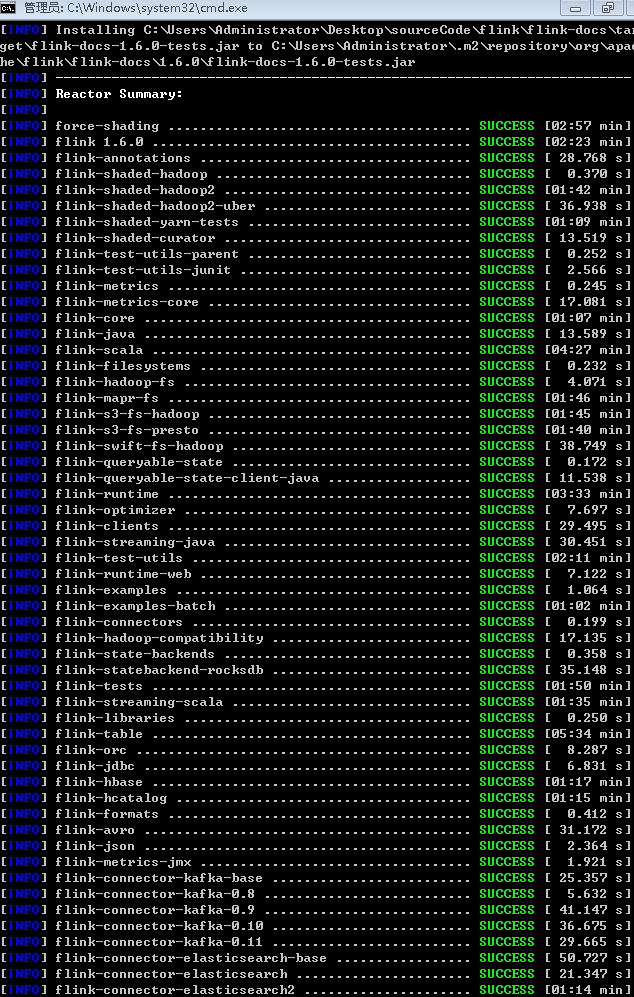

Build Result

The build output is placed under flink\flink-dist\target\flink-1.6.0-bin\flink-1.6.0.
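Because the directory name contains the version number, it can be handy to locate the output by pattern instead of hard-coding it. This is a small Python sketch, assuming the layout described above (`flink-dist/target/flink-<version>-bin/flink-<version>`). The function name `find_build_output` is hypothetical.

```python
from pathlib import Path
from typing import List


def find_build_output(flink_root: str) -> List[Path]:
    """List directories matching flink-dist/target/flink-*-bin/flink-* under flink_root."""
    target = Path(flink_root) / "flink-dist" / "target"
    if not target.is_dir():
        # Nothing has been built yet, or flink_root is not a Flink checkout
        return []
    return sorted(p for p in target.glob("flink-*-bin/flink-*") if p.is_dir())
```

Called with the path of the cloned repository, it returns the matching output directories, or an empty list if the build has not produced any.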
