Object Detection with 10 lines of code - Image AI

To perform object detection using ImageAI, all you need to do is

- Install Python on your computer system

- Install ImageAI and its dependencies

3. Download the Object Detection model file

4. Run the sample codes (which is as few as 10 lines)

Now let’s get started.

1) Download and install Python 3 from official Python Language website

2) Install the following dependencies via pip:

i. Tensorflow

pip install tensorflow

ii. Numpy

pip install numpy

iii. SciPy

pip install scipy

iv. OpenCV

pip install opencv-python

v. Pillow

pip install pillow

vi. Matplotlib

pip install matplotlib

vii. H5py

pip install h5py

viii. Keras

pip install keras

ix. ImageAI

pip installhttps://github.com/OlafenwaMoses/ImageAI/releases/download/2.0.1/imageai-2.0.1-py3-none-any.whl

3) Download the RetinaNet model file that will be used for object detection via this link.

Great. Now that you have installed the dependencies, you are ready to write your first object detection code. Create a Python file and give it a name (For example, FirstDetection.py), and then write the code below into it. Copy the RetinaNet model file and the image you want to detect to the folder that contains the python file.

FirstDetection.py

from imageai.Detection import ObjectDetection

import os execution_path = os.getcwd() detector = ObjectDetection()

detector.setModelTypeAsRetinaNet()

detector.setModelPath( os.path.join(execution_path , "resnet50_coco_best_v2.0.1.h5"))

detector.loadModel()

detections = detector.detectObjectsFromImage(input_image=os.path.join(execution_path , "image.jpg"), output_image_path=os.path.join(execution_path , "imagenew.jpg")) for eachObject in detections:

print(eachObject["name"] + " : " + eachObject["percentage_probability"] )

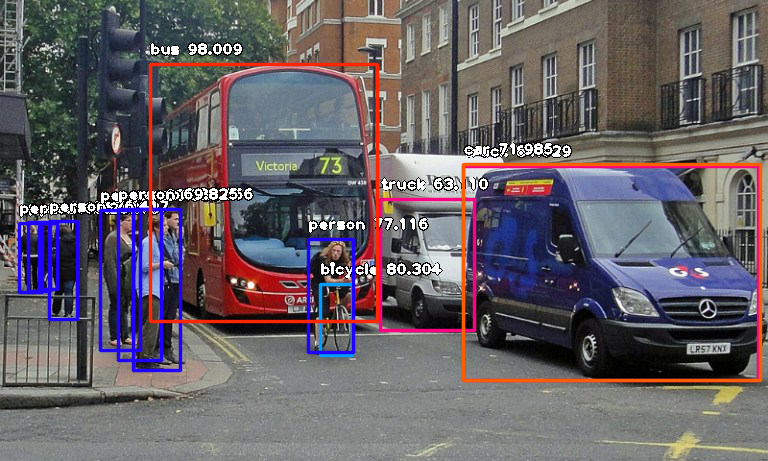

Then run the code and wait while the results prints in the console. Once the result is printed to the console, go to the folder in which your FirstDetection.py is and you will find a new image saved. Take a look at a 2 image samples below and the new images saved after detection.

Before Detection:

Image Credit: alzheimers.co.uk

Image Credit: Wikicommons

After Detection:

Console result for above image:

person : 55.8402955532074

person : 53.21805477142334

person : 69.25139427185059

person : 76.41745209693909

bicycle : 80.30363917350769

person : 83.58567953109741

person : 89.06581997871399

truck : 63.10953497886658

person : 69.82483863830566

person : 77.11606621742249

bus : 98.00949096679688

truck : 84.02870297431946

car : 71.98476791381836

Console result for above image:

person : 71.10445499420166

person : 59.28672552108765

person : 59.61582064628601

person : 75.86382627487183

motorcycle : 60.1050078868866

bus : 99.39600229263306

car : 74.05484318733215

person : 67.31776595115662

person : 63.53200078010559

person : 78.2265305519104

person : 62.880998849868774

person : 72.93365597724915

person : 60.01397967338562

person : 81.05944991111755

motorcycle : 50.591760873794556

motorcycle : 58.719027042388916

person : 71.69321775436401

bicycle : 91.86570048332214

motorcycle : 85.38855314254761

Now let us explain how the 10-line code works.

from imageai.Detection import ObjectDetection

import os execution_path = os.getcwd()

In the above 3 lines, we imported the ImageAI object detection class in the first line, imported the python os class in the second line and defined a variable to hold the path to the folder where our python file, RetinaNet model file and images are in the third line.

detector = ObjectDetection()

detector.setModelTypeAsRetinaNet()

detector.setModelPath( os.path.join(execution_path , "resnet50_coco_best_v2.0.1.h5"))

detector.loadModel()

detections = detector.detectObjectsFromImage(input_image=os.path.join(execution_path , "image.jpg"), output_image_path=os.path.join(execution_path , "imagenew.jpg"))

In the 5 lines of code above, we defined our object detection class in the first line, set the model type to RetinaNet in the second line, set the model path to the path of our RetinaNet model in the third line, load the model into the object detection class in the fourth line, then we called the detection function and parsed in the input image path and the output image path in the fifth line.

for eachObject in detections:

print(eachObject["name"] + " : " + eachObject["percentage_probability"] )

In the above 2 lines of code, we iterate over all the results returned by the detector.detectObjectsFromImage function in the first line, then print out the name and percentage probability of the model on each object detected in the image in the second line.

ImageAI supports many powerful customization of the object detection process. One of it is the ability to extract the image of each object detected in the image. By simply parsing the extra parameter extract_detected_objects=True into the detectObjectsFromImagefunction as seen below, the object detection class will create a folder for the image objects, extract each image, save each to the new folder created and return an extra array that contains the path to each of the images.

detections, extracted_images = detector.detectObjectsFromImage(input_image=os.path.join(execution_path , "image.jpg"), output_image_path=os.path.join(execution_path , "imagenew.jpg"), extract_detected_objects=True)

Object Detection with 10 lines of code - Image AI的更多相关文章

- 论文阅读笔记四十六:Feature Selective Anchor-Free Module for Single-Shot Object Detection(CVPR2019)

论文原址:https://arxiv.org/abs/1903.00621 摘要 本文提出了基于无anchor机制的特征选择模块,是一个简单高效的单阶段组件,其可以结合特征金字塔嵌入到单阶段检测器中. ...

- (转)Awesome Object Detection

Awesome Object Detection 2018-08-10 09:30:40 This blog is copied from: https://github.com/amusi/awes ...

- 论文阅读笔记五十二:CornerNet-Lite: Efficient Keypoint Based Object Detection(CVPR2019)

论文原址:https://arxiv.org/pdf/1904.08900.pdf github:https://github.com/princeton-vl/CornerNet-Lite 摘要 基 ...

- 论文阅读笔记五十一:CenterNet: Keypoint Triplets for Object Detection(CVPR2019)

论文链接:https://arxiv.org/abs/1904.08189 github:https://github.com/Duankaiwen/CenterNet 摘要 目标检测中,基于关键点的 ...

- 论文阅读笔记四十八:Bounding Box Regression with Uncertainty for Accurate Object Detection(CVPR2019)

论文原址:https://arxiv.org/pdf/1809.08545.pdf github:https://github.com/yihui-he/KL-Loss 摘要 大规模的目标检测数据集在 ...

- object detection[NMS]

非极大抑制,是在对象检测中用的较为频繁的方法,当在一个对象区域,框出了很多框,那么如下图: 上图来自这里 目的就是为了在这些框中找到最适合的那个框.有以下几种方式: 1 nms 2 soft-nms ...

- 论文阅读笔记五十六:(ExtremeNet)Bottom-up Object Detection by Grouping Extreme and Center Points(CVPR2019)

论文原址:https://arxiv.org/abs/1901.08043 github: https://github.com/xingyizhou/ExtremeNet 摘要 本文利用一个关键点检 ...

- 课程四(Convolutional Neural Networks),第三 周(Object detection) —— 2.Programming assignments:Car detection with YOLOv2

Autonomous driving - Car detection Welcome to your week 3 programming assignment. You will learn abo ...

- YOLO object detection with OpenCV

Click here to download the source code to this post. In this tutorial, you’ll learn how to use the Y ...

随机推荐

- 一文搞懂TCP与UDP的区别

摘要:计算机网络基础 引言 网络协议是每个前端工程师都必须要掌握的知识,TCP/IP 中有两个具有代表性的传输层协议,分别是 TCP 和 UDP,本文将介绍下这两者以及它们之间的区别. 一.TCP/I ...

- 当placeholder的字体大小跟input大小不一致,placeholder垂直居中

当placeholder的字体大小跟input大小不一致,实现placeholder垂直居中 设计稿的placeholder的样式是这样的 输入值的时候大小是这样的 最后想要实现的效果是这样的 当我这 ...

- js中函数表达式和自执行函数表达式的用法总结

立即调用函数表达式 给函数体加大括号,在有变量声明的情形下,没有任何区别 但是,如果只是[自动执行]的情形下,就会不同 因为,一个匿名函数,不赋值或函数体不加小括号,是不能自动执行的 //以下情形并无 ...

- 【代码笔记】Web-JavaScript-JavaScript void

一,效果图. 二,代码. <!DOCTYPE html> <html> <head> <meta charset="utf-8"> ...

- 关于Skyline沿对象画boundingbox的探讨

先来说说为什么要搞这个?项目中经常遇到的一个操作就是选定对象,以前都是通过Tint设置对象颜色来标识选定对象,但是随着图层中模型增多,模型色彩丰富,会出现选定色与对象颜色对比不明显的情况.因为看到Te ...

- C# MessageBox自动关闭

本文以一个简单的小例子,介绍如何让MessageBox弹出的对话框,在几秒钟内自动关闭.特别是一些第三方插件(如:dll)弹出的对话框,最为适用.本文仅供学习分享使用,如有不足之处,还请指正. 概述 ...

- C++二分查找算法演示源码

如下内容段是关于C++二分查找算法演示的内容. #include <cstdio>{ int l = 0, r = n-1; int mid; while (l <= r){ mid ...

- C#:在匿名方法中捕获外部变量

先来一段代码引入主题.如果你可以直接说出代码的输出结果,说明本文不适合你.(代码引自<深入理解C#>第三版) class Program { private delegate void T ...

- C#窗体打包步骤

1.在项目下选择InstallerProjects的Setup Project建立打包工具. 2.找到项目bin目录Release下的文件全部复制下来. 3.复制完之后全部粘贴到Application ...

- 利用ZYNQ SOC快速打开算法验证通路(3)——PS端DMA缓存数据到PS端DDR

上篇该系列博文中讲述W5500接收到上位机传输的数据,此后需要将数据缓存起来.当数据量较大或者其他数据带宽较高的情况下,片上缓存(OCM)已无法满足需求,这时需要将大量数据保存在外挂的DDR SDRA ...