Native MapReduce Development Example

I. Requirements

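Requirement: in the input file data, merge the records that share a name into one and compute each name's average score. Each line of data holds a name followed by a score:

```
tom
小明
jerry
2哈
tom
tom
小明
```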
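Before running the job, it helps to know what output to expect. The following is a minimal plain-Java sketch of the same data flow with no Hadoop dependency: parse each line into a name and a score (map), group the scores by name with the names kept sorted, similar to the sorted keys a reducer receives (shuffle), then take an integer average per name (reduce). The class name AverageByNameSketch and the sample scores are hypothetical and made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Plain-Java sketch of map -> shuffle/sort -> reduce for "name score" lines.
// Hypothetical class; it does not use any Hadoop API.
class AverageByNameSketch {

    // "Map" + "shuffle": parse each line and group the scores by name.
    // TreeMap keeps the names sorted.
    static Map<String, List<Integer>> groupByName(List<String> lines) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            StringTokenizer tokens = new StringTokenizer(line);
            if (tokens.countTokens() < 2) {
                continue; // skip blank or malformed lines
            }
            String name = tokens.nextToken();
            int score = Integer.parseInt(tokens.nextToken());
            grouped.computeIfAbsent(name, k -> new ArrayList<>()).add(score);
        }
        return grouped;
    }

    // "Reduce": integer average per name, truncated like ScoreReduce's sum / count.
    static Map<String, Integer> averageByName(List<String> lines) {
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> e : groupByName(lines).entrySet()) {
            int sum = 0;
            for (int s : e.getValue()) {
                sum += s;
            }
            result.put(e.getKey(), sum / e.getValue().size());
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical scores, for illustration only.
        List<String> lines = List.of("tom 80", "jerry 70", "tom 91", "小明 60");
        System.out.println(averageByName(lines)); // {jerry=70, tom=85, 小明=60}
    }
}
```

Here tom's two scores give (80 + 91) / 2 = 85 after integer truncation, which is the same rounding the Reduce class below applies.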

II. Coding

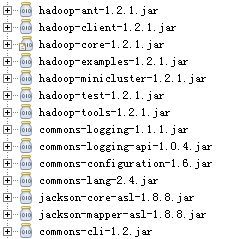

1. Import the jar files

2. Code

2.1 Writing the Map class

```java
package com.wzy.studentscore;

import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

/**
 * @author:吴兆跃
 * @version Created: June 5, 2018, 5:58:55 PM
 * Class description: emits (name, score) for each input line.
 */
public class ScoreMap extends Mapper<LongWritable, Text, Text, IntWritable> {

    @Override
    public void map(LongWritable key, Text value, Context context)
            throws IOException, InterruptedException {
        String line = value.toString(); // one line of data

        // TextInputFormat passes one line per call, so this loop runs at most once.
        StringTokenizer tokenizerArticle = new StringTokenizer(line, "\n");
        System.out.println("key: " + key);
        System.out.println("value-line: " + line);
        System.out.println("count: " + tokenizerArticle.countTokens());

        while (tokenizerArticle.hasMoreTokens()) {
            String token = tokenizerArticle.nextToken();
            System.out.println("token: " + token);

            // Each line is "name score", separated by whitespace.
            StringTokenizer tokenizerLine = new StringTokenizer(token);
            String strName = tokenizerLine.nextToken();  // the name
            String strScore = tokenizerLine.nextToken(); // the score
            Text name = new Text(strName);
            int scoreInt = Integer.parseInt(strScore);

            context.write(name, new IntWritable(scoreInt));
        }
        System.out.println("context: " + context.toString());
    }
}
```
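ScoreMap calls nextToken() twice without checking, so a line with no score column throws NoSuchElementException and a non-numeric score throws NumberFormatException, which fails the map task attempt. A minimal sketch of a guarded per-line parse, assuming malformed lines should simply be skipped; the class MapLineSketch and the method parseLine are hypothetical and not part of the original code.

```java
import java.util.AbstractMap;
import java.util.Map;
import java.util.StringTokenizer;

// Hypothetical helper mirroring the per-line work of ScoreMap.map().
class MapLineSketch {

    // Returns (name, score), or null when the line lacks a name or a numeric score.
    static Map.Entry<String, Integer> parseLine(String line) {
        StringTokenizer tokens = new StringTokenizer(line); // splits on whitespace
        if (tokens.countTokens() < 2) {
            return null;
        }
        String name = tokens.nextToken();
        try {
            int score = Integer.parseInt(tokens.nextToken());
            return new AbstractMap.SimpleEntry<>(name, score);
        } catch (NumberFormatException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        System.out.println(parseLine("tom 80")); // tom=80
        System.out.println(parseLine("tom"));    // null: no score column
    }
}
```

Inside map(), a null result would mean skipping context.write for that line instead of letting the exception fail the task.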

2.2 Writing the Reduce class

```java
package com.wzy.studentscore;

import java.io.IOException;
import java.util.Iterator;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

/**
 * @author:吴兆跃
 * @version Created: June 5, 2018, 6:50:28 PM
 * Class description: outputs the average score for each name.
 */
public class ScoreReduce extends Reducer<Text, IntWritable, Text, IntWritable> {

    @Override
    public void reduce(Text key, Iterable<IntWritable> values, Context context)
            throws IOException, InterruptedException {
        int sum = 0;
        int count = 0;
        Iterator<IntWritable> iterator = values.iterator();
        while (iterator.hasNext()) {
            sum += iterator.next().get(); // add up the scores
            count++;
        }
        int average = sum / count; // integer average; the fraction is truncated
        context.write(key, new IntWritable(average));
    }
}
```
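Because sum and count are both int, sum / count truncates: the scores 90 and 85 average to 87, not 87.5. A small plain-Java sketch of the difference follows; the helper names are hypothetical. Reporting fractional averages in the job would mean changing the reducer's output value type, for example to DoubleWritable, and declaring the map output types separately with job.setMapOutputValueClass.

```java
// Hypothetical helpers comparing ScoreReduce's integer average with a floating-point one.
class AverageSketch {

    // What ScoreReduce computes: int / int, so the fractional part is dropped.
    static int truncatedAverage(int[] scores) {
        int sum = 0;
        for (int s : scores) {
            sum += s;
        }
        return sum / scores.length;
    }

    // Alternative when the fractional part matters.
    static double exactAverage(int[] scores) {
        long sum = 0;
        for (int s : scores) {
            sum += s;
        }
        return (double) sum / scores.length;
    }

    public static void main(String[] args) {
        int[] scores = {90, 85};
        System.out.println(truncatedAverage(scores)); // 87
        System.out.println(exactAverage(scores));     // 87.5
    }
}
```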

2.3 Writing the run class

```java
package com.wzy.studentscore;

import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;

/**
 * @author:吴兆跃
 * @version Created: June 5, 2018, 6:59:29 PM
 * Class description: configures and submits the score-averaging job.
 */
public class ScoreProcess extends Configured implements Tool {

    public static void main(String[] args) throws Exception {
        // Input and output paths are hard-coded as relative paths: they resolve
        // against the working directory locally, or /user/root on HDFS here.
        int ret = ToolRunner.run(new ScoreProcess(), new String[] {"input", "output"});
        System.exit(ret);
    }

    @Override
    public int run(String[] args) throws Exception {
        // Hadoop 0.20 constructor; later releases prefer Job.getInstance(conf).
        Job job = new Job(getConf());
        job.setJarByClass(ScoreProcess.class);
        job.setJobName("score_process");

        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        job.setMapperClass(ScoreMap.class);
        // ScoreReduce averages, so it is not a safe combiner: an average of
        // partial averages differs from the overall average in general.
        // job.setCombinerClass(ScoreReduce.class);
        job.setReducerClass(ScoreReduce.class);

        job.setInputFormatClass(TextInputFormat.class);
        job.setOutputFormatClass(TextOutputFormat.class);

        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        boolean success = job.waitForCompletion(true);
        return success ? 0 : 1;
    }
}
```
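Reusing ScoreReduce as a combiner (job.setCombinerClass(ScoreReduce.class)) is risky: Hadoop may run a combiner zero, one, or several times on parts of a map task's output, and an average of partial averages is generally not the overall average. With a single small input split the result may happen to come out right, but it breaks once one name's scores are spread over several map tasks or spills. The plain-Java sketch below (hypothetical names and scores, no Hadoop classes) contrasts the two approaches. A combiner-safe job would pass (sum, count) pairs between map, combine, and reduce, which in Hadoop would need a custom value type, for example a Writable holding both numbers.

```java
// Hypothetical sketch: why an averaging combiner is wrong, and a (sum, count) fix.
class CombinerSketch {

    // Integer average of a group, as ScoreReduce computes it.
    static int average(int[] scores) {
        int sum = 0;
        for (int s : scores) {
            sum += s;
        }
        return sum / scores.length;
    }

    // Combiner output that composes correctly: keep {sum, count}, divide only at the end.
    static int[] partialSumCount(int[] scores) {
        int sum = 0;
        for (int s : scores) {
            sum += s;
        }
        return new int[] {sum, scores.length};
    }

    public static void main(String[] args) {
        // Hypothetical scores for one name, split across two map tasks.
        int[] mapTask1 = {90, 80, 70};
        int[] mapTask2 = {60};

        // Averaging combiner: average of partial averages, (80 + 60) / 2.
        int wrong = average(new int[] {average(mapTask1), average(mapTask2)});

        // Sum/count combiner: merge partial sums and counts, then divide once, 300 / 4.
        int[] p1 = partialSumCount(mapTask1);
        int[] p2 = partialSumCount(mapTask2);
        int right = (p1[0] + p2[0]) / (p1[1] + p2[1]);

        System.out.println(wrong + " vs " + right); // 70 vs 75
    }
}
```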

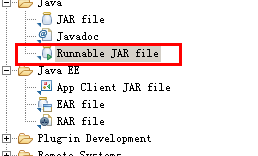

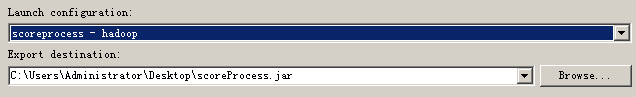

3. Package the jar

III. Debugging

1. Running locally with java

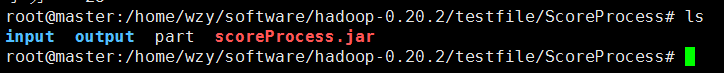

```
root@master:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess# ls
input part scoreProcess.jar
root@master:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess# java -jar scoreProcess.jar
Jun , :: AM org.apache.hadoop.util.NativeCodeLoader <clinit>
WARNING: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Jun , :: AM org.apache.hadoop.mapreduce.lib.input.FileInputFormat listStatus
INFO: Total input paths to process :
Jun , :: AM org.apache.hadoop.io.compress.snappy.LoadSnappy <clinit>
WARNING: Snappy native library not loaded
Jun , :: AM org.apache.hadoop.mapred.JobClient monitorAndPrintJob
INFO: Running job: job_local1903623691_0001
Jun , :: AM org.apache.hadoop.mapred.LocalJobRunner$Job$MapTaskRunnable run
INFO: Starting task: attempt_local1903623691_0001_m_000000_0
Jun , :: AM org.apache.hadoop.mapred.LocalJobRunner$Job run
INFO: Waiting for map tasks
Jun , :: AM org.apache.hadoop.util.ProcessTree isSetsidSupported
INFO: setsid exited with exit code
Jun , :: AM org.apache.hadoop.mapred.Task initialize
INFO: Using ResourceCalculatorPlugin : org.apache.hadoop.util.LinuxResourceCalculatorPlugin@5ddf714a
Jun , :: AM org.apache.hadoop.mapred.MapTask runNewMapper
INFO: Processing split: file:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess/input/data:+
Jun , :: AM org.apache.hadoop.mapred.MapTask$MapOutputBuffer <init>
INFO: io.sort.mb =
Jun , :: AM org.apache.hadoop.mapred.MapTask$MapOutputBuffer <init>
INFO: data buffer = /
Jun , :: AM org.apache.hadoop.mapred.MapTask$MapOutputBuffer <init>
INFO: record buffer = /
key:
value-line: tom
count:
token: tom
context: org.apache.hadoop.mapreduce.Mapper$Context@41b9bff9
key:
value-line: 小明
count:
token: 小明
context: org.apache.hadoop.mapreduce.Mapper$Context@41b9bff9
key:
value-line: jerry
count:
token: jerry
context: org.apache.hadoop.mapreduce.Mapper$Context@41b9bff9
key:
value-line: 哈2
count:
token: 哈2
context: org.apache.hadoop.mapreduce.Mapper$Context@41b9bff9
key:
value-line: tom
count:
token: tom
context: org.apache.hadoop.mapreduce.Mapper$Context@41b9bff9
key:
value-line: tom
count:
token: tom
context: org.apache.hadoop.mapreduce.Mapper$Context@41b9bff9
key:
value-line: 小明
count:
token: 小明
context: org.apache.hadoop.mapreduce.Mapper$Context@41b9bff9
root@master:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess# ls
input output part scoreProcess.jar
root@master:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess# cd output/
root@master:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess/output# ls
part-r- _SUCCESS
root@master:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess/output# cat part-r-
jerry
tom
哈2
小明
```
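To check a result file programmatically rather than by eye, note that TextOutputFormat writes each record as the key, a tab character (its default separator), and the value, one record per line. A minimal sketch of a checker that parses such lines into a name-to-average map; the class OutputCheckSketch is hypothetical and expects the path of a part file as its only command-line argument.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical checker for the job's text output ("key<TAB>value" per line).
class OutputCheckSketch {

    // Parses "name<TAB>average" lines; lines without a tab are ignored.
    static Map<String, Integer> parseOutput(List<String> lines) {
        Map<String, Integer> averages = new LinkedHashMap<>(); // keeps file order
        for (String line : lines) {
            int tab = line.indexOf('\t');
            if (tab < 0) {
                continue;
            }
            String name = line.substring(0, tab);
            int average = Integer.parseInt(line.substring(tab + 1).trim());
            averages.put(name, average);
        }
        return averages;
    }

    public static void main(String[] args) throws IOException {
        // Usage: java OutputCheckSketch <path to a part file in the output directory>
        List<String> lines = Files.readAllLines(Paths.get(args[0]), StandardCharsets.UTF_8);
        parseOutput(lines).forEach((name, avg) -> System.out.println(name + " -> " + avg));
    }
}
```

The parsed map can then be compared with the expected averages computed from the input data.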

2. Running on Hadoop (HDFS)

2.1 Upload the data file to HDFS

```
root@master:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess# ../../bin/hadoop fs -mkdir /user
root@master:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess# ../../bin/hadoop fs -mkdir /user/root
root@master:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess# ../../bin/hadoop fs -mkdir /user/root/input
root@master:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess# ../../bin/hadoop fs -put input/data /user/root/input
root@master:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess# ../../bin/hadoop fs -ls /user/root/input
Found items
-rw-r--r-- root supergroup -- : /user/root/input/data
```

2.2 Run

```
root@master:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess# ../../bin/hadoop jar scoreProcess.jar
// :: INFO input.FileInputFormat: Total input paths to process :
// :: INFO mapred.JobClient: Running job: job_201806060358_0002
// :: INFO mapred.JobClient: map % reduce %
// :: INFO mapred.JobClient: map % reduce %
// :: INFO mapred.JobClient: map % reduce %
// :: INFO mapred.JobClient: Job complete: job_201806060358_0002
// :: INFO mapred.JobClient: Counters:
// :: INFO mapred.JobClient: Map-Reduce Framework
// :: INFO mapred.JobClient: Combine output records=
// :: INFO mapred.JobClient: Spilled Records=
// :: INFO mapred.JobClient: Reduce input records=
// :: INFO mapred.JobClient: Reduce output records=
// :: INFO mapred.JobClient: Map input records=
// :: INFO mapred.JobClient: Map output records=
// :: INFO mapred.JobClient: Map output bytes=
// :: INFO mapred.JobClient: Reduce shuffle bytes=
// :: INFO mapred.JobClient: Combine input records=
// :: INFO mapred.JobClient: Reduce input groups=
// :: INFO mapred.JobClient: FileSystemCounters
// :: INFO mapred.JobClient: HDFS_BYTES_READ=
// :: INFO mapred.JobClient: FILE_BYTES_WRITTEN=
// :: INFO mapred.JobClient: FILE_BYTES_READ=
// :: INFO mapred.JobClient: HDFS_BYTES_WRITTEN=
// :: INFO mapred.JobClient: Job Counters
// :: INFO mapred.JobClient: Launched map tasks=
// :: INFO mapred.JobClient: Launched reduce tasks=
// :: INFO mapred.JobClient: Data-local map tasks=
```

2.3 View the results

```
root@master:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess# ../../bin/hadoop fs -ls /user/root/output/
Found items
drwxr-xr-x - root supergroup -- : /user/root/output/_logs
-rw-r--r-- root supergroup -- : /user/root/output/part-r-00000
root@master:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess# ../../bin/hadoop fs -get /user/root/output/part-r- part
root@master:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess# ls
input output part scoreProcess.jar
root@master:/home/wzy/software/hadoop-0.20./testfile/ScoreProcess# cat part
jerry
tom
2哈
小明
```


iptables防火墙可以用于创建过滤(filter)与NAT规则.所有Linux发行版都能使用iptables,因此理解如何配置 iptables将会帮助你更有效地管理Linux防火墙.如果你是第一 ...