Recurrent Neural Network[Content]

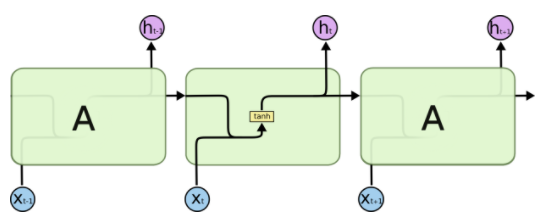

1. RNN

Figure 1.1 Structure of the standard RNN model

2. BiRNN

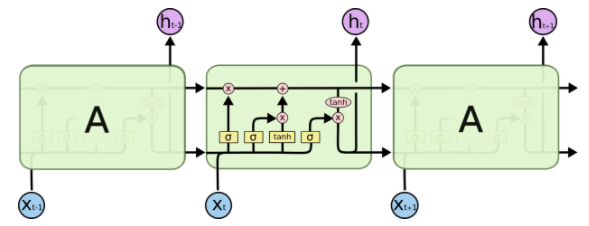

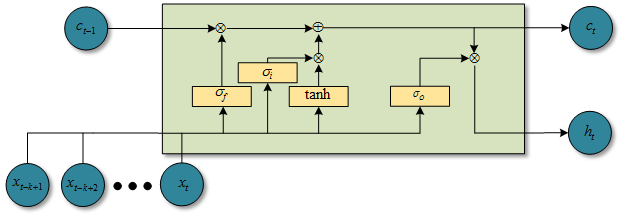

3. LSTM

Figure 3.1 Structure of the LSTM model

4. Clockwork RNN

5. Depth Gated RNN

6. Grid LSTM

7. DRAW

8. RLVM

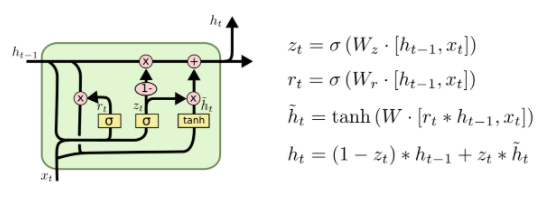

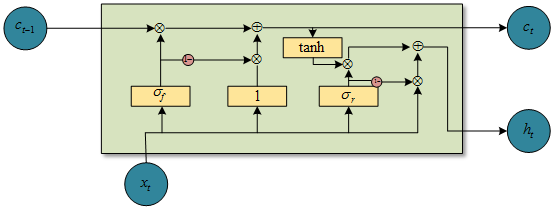

9. GRU

Figure 9.1 Structure of the GRU model

10. NTM

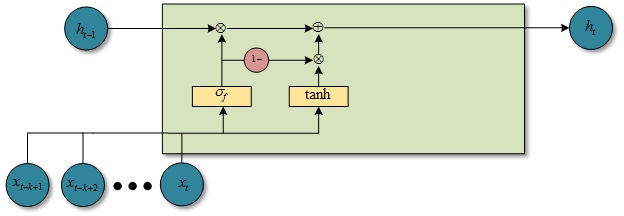

11. QRNN

Figure 11.1 QRNN structure with f-pooling

Figure 11.2 QRNN structure with fo-pooling

Figure 11.3 QRNN structure with ifo-pooling

See here: QRNN

12. Persistent RNN

13. SRU

Figure 13.1 Structure of the SRU model

See here: SRU

References:

- [RNN&Depth] - Pascanu R, Gulcehre C, Cho K, et al. How to construct deep recurrent neural networks[J]. arXiv preprint arXiv:1312.6026, 2013.

- [survey] - Lipton Z C, Berkowitz J, Elkan C. A critical review of recurrent neural networks for sequence learning[J]. arXiv preprint arXiv:1506.00019, 2015.

.. [survey] - Jozefowicz R, Zaremba W, Sutskever I. An empirical exploration of recurrent network architectures[C]//Proceedings of the 32nd International Conference on Machine Learning (ICML-15). 2015: 2342-2350.

.. [survey] - Greff K, Srivastava R K, Koutník J, et al. LSTM: A search space odyssey[J]. IEEE transactions on neural networks and learning systems, 2017.

.. [survey] - Karpathy A, Johnson J, Fei-Fei L. Visualizing and understanding recurrent networks[J]. arXiv preprint arXiv:1506.02078, 2015.

- [RNN] - Elman, Jeffrey L. “Finding structure in time.” Cognitive science 14.2 (1990): 179-211.

- [BiRNN] - Schuster, Mike, and Kuldip K. Paliwal. “Bidirectional recurrent neural networks.” IEEE Transactions on Signal Processing 45.11 (1997): 2673-2681.

- [LSTM] - Hochreiter, Sepp, and Jürgen Schmidhuber. “Long short-term memory.” Neural computation 9.8 (1997): 1735-1780.

.. [LSTM] - Understanding LSTM Networks

.. [LSTM Variants] - Gers F A, Schmidhuber J. Recurrent nets that time and count[C]//Neural Networks, 2000. IJCNN 2000, Proceedings of the IEEE-INNS-ENNS International Joint Conference on. IEEE, 2000, 3: 189-194.

- [Multi-dimensional RNN] - Alex Graves, Santiago Fernandez, and Jurgen Schmidhuber, Multi-Dimensional Recurrent Neural Networks, ICANN 2007

- [GFRNN] - Junyoung Chung, Caglar Gulcehre, Kyunghyun Cho, Yoshua Bengio, Gated Feedback Recurrent Neural Networks, arXiv:1502.02367 / ICML 2015

- [Tree-Structured RNNs] - Kai Sheng Tai, Richard Socher, and Christopher D. Manning, Improved Semantic Representations From Tree-Structured Long Short-Term Memory Networks, arXiv:1503.00075 / ACL 2015

.. [Tree-Structured RNNs] - Samuel R. Bowman, Christopher D. Manning, and Christopher Potts, Tree-structured composition in neural networks without tree-structured architectures, arXiv:1506.04834

- [Clockwork RNN] - Koutník J, Greff K, Gomez F, et al. A Clockwork RNN[J]. arXiv preprint arXiv:1402.3511, 2014.

- [Depth Gated RNN] - Yao K, Cohn T, Vylomova K, et al. Depth-gated recurrent neural networks[J]. arXiv preprint, 2015.

- [Grid LSTM] - Kalchbrenner N, Danihelka I, Graves A. Grid long short-term memory[J]. arXiv preprint arXiv:1507.01526, 2015.

- [Segmental RNN] - Lingpeng Kong, Chris Dyer, Noah Smith, "Segmental Recurrent Neural Networks", ICLR 2016.

- [Seq2seq for Sets] - Oriol Vinyals, Samy Bengio, Manjunath Kudlur, "Order Matters: Sequence to sequence for sets", ICLR 2016.

- [Hierarchical Recurrent Neural Networks] - Junyoung Chung, Sungjin Ahn, Yoshua Bengio, "Hierarchical Multiscale Recurrent Neural Networks", arXiv:1609.01704

- [DRAW] - Gregor K, Danihelka I, Graves A, et al. DRAW: A recurrent neural network for image generation[J]. arXiv preprint arXiv:1502.04623, 2015.

- [RLVM] - Chung J, Kastner K, Dinh L, et al. A recurrent latent variable model for sequential data[C]//Advances in neural information processing systems. 2015: 2980-2988.

- [Generate] - Bayer J, Osendorfer C. Learning stochastic recurrent networks[J]. arXiv preprint arXiv:1411.7610, 2014.

- [GRU] - Cho K, Van Merriënboer B, Gulcehre C, et al. Learning phrase representations using RNN encoder-decoder for statistical machine translation[J]. arXiv preprint arXiv:1406.1078, 2014.

.. [GRU] - Cho K, Van Merriënboer B, Bahdanau D, et al. On the properties of neural machine translation: Encoder-decoder approaches[J]. arXiv preprint arXiv:1409.1259, 2014.

.. [GRU] - Chung, Junyoung, et al. “Empirical evaluation of gated recurrent neural networks on sequence modeling.” arXiv preprint arXiv:1412.3555 (2014).

- [NTM] - Graves, Alex, Greg Wayne, and Ivo Danihelka. “Neural turing machines.” arXiv preprint arXiv:1410.5401 (2014).

- [Neural GPU] - Łukasz Kaiser, Ilya Sutskever, Neural GPUs Learn Algorithms, arXiv:1511.08228 / ICLR 2016

- [QRNN] - Bradbury J, Merity S, Xiong C, et al. Quasi-recurrent neural networks[J]. arXiv preprint arXiv:1611.01576, 2016.

- [Memory Network] - Jason Weston, Sumit Chopra, Antoine Bordes, Memory Networks, arXiv:1410.3916

- [Pointer Network] - Oriol Vinyals, Meire Fortunato, and Navdeep Jaitly, Pointer Networks, arXiv:1506.03134 / NIPS 2015

- [Deep Attention Recurrent Q-Network] - Ivan Sorokin, Alexey Seleznev, Mikhail Pavlov, Aleksandr Fedorov, Anastasiia Ignateva, Deep Attention Recurrent Q-Network, arXiv:1512.01693

- [Dynamic Memory Networks] - Ankit Kumar, Ozan Irsoy, Peter Ondruska, Mohit Iyyer, James Bradbury, Ishaan Gulrajani, Victor Zhong, Romain Paulus, Richard Socher, "Ask Me Anything: Dynamic Memory Networks for Natural Language Processing", arXiv:1506.07285

- [SRU] - Lei T, Zhang Y. Training RNNs as Fast as CNNs[J]. arXiv preprint arXiv:1709.02755, 2017.

- [Zhihu] - How to evaluate the newly proposed RNN variant SRU

- [attention] - Xu K, Ba J, Kiros R, et al. Show, attend and tell: Neural image caption generation with visual attention[C]//International Conference on Machine Learning. 2015: 2048-2057.

- [Persistent RNN] - Diamos G, Sengupta S, Catanzaro B, et al. Persistent rnns: Stashing recurrent weights on-chip[C]//International Conference on Machine Learning. 2016: 2024-2033.

.. [Persistent RNN] - Diamos G, Sengupta S, Catanzaro B, et al. Persistent RNNs: Stashing Weights on Chip[J]. 2016.

- [github] - Awesome Recurrent Neural Networks.
