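Hadoop2: Understanding the Hadoop Big Data Processing Architecture (Single-Node Deployment)

I. Hadoop fundamentals

1. See the theory article: Hadoop1: Understanding the Hadoop Big Data Processing Architecture

II. Preparing a single-node Hadoop deployment on CentOS 7

1. Create a user

[root@web3 ~]# useradd -m hadoop -s /bin/bash #---create the hadoop user

[root@web3 ~]# passwd hadoop #---set its password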

Changing password for user hadoop.

New password:

BAD PASSWORD: The password is a palindrome

Retype new password:

passwd: all authentication tokens updated successfully.

[root@web3 ~]#

2. Grant sudo privileges

[root@web3 ~]# chmod u+w /etc/sudoers #---make the sudoers file writable

[root@web3 ~]# cat /etc/sudoers |grep hadoop #---add this line yourself with vim; cat is used here only to show the result

hadoop ALL=(ALL) ALL

[root@web3 ~]#

[root@web3 ~]# chmod u-w /etc/sudoers #---remove the write permission again

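3. Install OpenSSH, generate a key, and enable passwordless login

1) Install

[root@web3 ~]# su hadoop #---switch to the hadoop user

[hadoop@web3 root]$ sudo yum install openssh-clients openssh-server #---install openssh

2) Steps

cd ~/.ssh/ #---if this directory does not exist, run "ssh localhost" once first

ssh-keygen -t rsa

cat id_rsa.pub >> authorized_keys

chmod 600 ./authorized_keys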
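
The key authorization step can be sketched as a small helper that is safe to run more than once. This is a minimal sketch, not the author's script: the function name `authorize_key` and its two arguments are hypothetical, and the modes 700 (directory) and 600 (authorized_keys) are the permissions sshd commonly expects rather than values stated in the original steps.

```shell
# Hypothetical helper: append a public key to <ssh_dir>/authorized_keys exactly once.
# $1 = ssh directory (for example ~/.ssh)
# $2 = public key file (for example ~/.ssh/id_rsa.pub)
authorize_key() {
  ssh_dir=$1
  pub_key=$2
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
  touch "$ssh_dir/authorized_keys"
  # Append only if this exact key line is not already present.
  grep -qxF -e "$(cat "$pub_key")" "$ssh_dir/authorized_keys" ||
    cat "$pub_key" >> "$ssh_dir/authorized_keys"
  chmod 600 "$ssh_dir/authorized_keys"
}

# Possible use after ssh-keygen -t rsa:
#   authorize_key ~/.ssh ~/.ssh/id_rsa.pub
#   ssh localhost    # should now log in without a password prompt
```

Checking for the key before appending keeps authorized_keys from accumulating duplicate lines when the setup is repeated.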

4. Install Java

sudo yum install java-1.8.0-openjdk java-1.8.0-openjdk-devel

#---use rpm -ql to list the Java-related directories

[hadoop@web3 bin]$ rpm -ql java-1.8.0-openjdk.x86_64 1:1.8.0.222.b10-1.el7_7

/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64/jre/bin/policytool

/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64/jre/lib/amd64/libawt_xawt.so

/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64/jre/lib/amd64/libjawt.so

/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64/jre/lib/amd64/libjsoundalsa.so

/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64/jre/lib/amd64/libsplashscreen.so

/usr/share/applications/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64-policytool.desktop

/usr/share/icons/hicolor/16x16/apps/java-1.8.0-openjdk.png

/usr/share/icons/hicolor/24x24/apps/java-1.8.0-openjdk.png

/usr/share/icons/hicolor/32x32/apps/java-1.8.0-openjdk.png

/usr/share/icons/hicolor/48x48/apps/java-1.8.0-openjdk.png

package 1:1.8.0.222.b10-1.el7_7 is not installed

[hadoop@web3 bin]$

5. Add the environment variable

[hadoop@web3 bin]$ cat ~/.bashrc

# .bashrc

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

# Uncomment the following line if you don't like systemctl's auto-paging feature:

# export SYSTEMD_PAGER=

# User specific aliases and functions

#---add this environment variable

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64

#---print it to check

[hadoop@web3 jvm]$ echo $JAVA_HOME

/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64

#---print the Java version

[hadoop@web3 jvm]$ java -version

openjdk version "1.8.0_222"

OpenJDK Runtime Environment (build 1.8.0_222-b10)

OpenJDK 64-Bit Server VM (build 25.222-b10, mixed mode)

#---print the Java version through the variable

[hadoop@web3 jvm]$ $JAVA_HOME/bin/java -version

openjdk version "1.8.0_222"

OpenJDK Runtime Environment (build 1.8.0_222-b10)

OpenJDK 64-Bit Server VM (build 25.222-b10, mixed mode)

[hadoop@web3 jvm]$

If java -version and $JAVA_HOME/bin/java -version print the same output, the variable was added successfully.
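
Instead of copying the versioned directory name by hand, JAVA_HOME can be derived from the java binary that is already on the PATH. The following is a minimal sketch under assumptions: the helper name `java_home_from_bin` is hypothetical, and it assumes the OpenJDK 8 package layout seen in the rpm -ql listing, where the real binary lives under <home>/jre/bin/java.

```shell
# Hypothetical helper: turn the resolved path of the java binary into a JAVA_HOME candidate.
# $1 = absolute path of the real java executable
java_home_from_bin() {
  p=${1%/bin/java}   # drop the trailing /bin/java
  p=${p%/jre}        # OpenJDK 8 packages keep the runtime under <home>/jre
  printf '%s\n' "$p"
}

# Possible use on the machine itself (readlink -f follows the alternatives symlinks):
#   export JAVA_HOME=$(java_home_from_bin "$(readlink -f "$(command -v java)")")
```

Deriving the path this way keeps ~/.bashrc valid after a package update changes the build number in the directory name.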

6. Install Hadoop 3.1.2

Download from: http://mirror.bit.edu.cn/apache/hadoop/common/

Upload the archive to the server.

[hadoop@web3 root]$ cd

[hadoop@web3 ~]$ ll

total

-rw-r--r-- hadoop hadoop Oct : hadoop-3.1.2.tar.gz

drwxrwxr-x hadoop hadoop Oct : ssh

[hadoop@web3 ~]$ sudo tar -zxf hadoop-3.1.2.tar.gz -C /usr/local

[sudo] password for hadoop:

[hadoop@web3 ~]$ cd /usr/local

[hadoop@web3 local]$ sudo mv hadoop-3.1.2/ ./hadoop

[hadoop@web3 local]$ ll

total

drwxr-xr-x. root root Nov bin

drwxr-xr-x. root root Nov etc

drwxr-xr-x. root root Nov games

drwxr-xr-x hadoop Jan hadoop

drwxr-xr-x. root root Nov include

drwxr-xr-x. root root Nov lib

drwxr-xr-x. root root Nov lib64

drwxr-xr-x. root root Nov libexec

drwxr-xr-x. root root Nov sbin

drwxr-xr-x. root root Aug share

drwxr-xr-x. root root Nov src

[hadoop@web3 local]$ sudo chown -R hadoop:hadoop ./hadoop

[hadoop@web3 local]$ cd hadoop/

[hadoop@web3 hadoop]$ ./bin/hadoop version

Hadoop 3.1.2

Source code repository https://github.com/apache/hadoop.git -r 1019dde65bcf12e05ef48ac71e84550d589e5d9a

Compiled by sunilg on --29T01:39Z

Compiled with protoc 2.5.0

From source with checksum 64b8bdd4ca6e77cce75a93eb09ab2a9

This command was run using /usr/local/hadoop/share/hadoop/common/hadoop-common-3.1.2.jar

[hadoop@web3 hadoop]$ pwd

/usr/local/hadoop

[hadoop@web3 hadoop]$

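III. Hadoop standalone configuration (non-distributed)

Hadoop's default mode is non-distributed and runs without any further configuration. Non-distributed means a single Java process, which is convenient for debugging.

Hadoop ships with a rich set of examples (running ./bin/hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.2.jar lists them all), including wordcount, terasort, join, and grep.

1. Run the grep example as a test

This example takes every file in the input directory as input, finds the words that match the regular expression dfs[a-z.]+, counts their occurrences, and writes the result to the output directory.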

mkdir ./input

cp ./etc/hadoop/*.xml ./input #---use the configuration files as input

./bin/hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.2.jar grep ./input ./output 'dfs[a-z.]+'

2、正确的运行结果

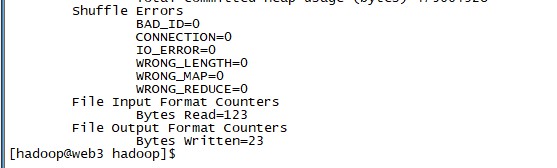

运行hadoop实例,成功的话会输出很多作业的相关信息,最后的输出信息就是下面图示,作业结果会输出在指定的output文件夹中,通过命令cat ./output/* 查看结果,符合正则的单词dfsadmin出现了一次。

[hadoop@web3 hadoop]$ cat ./output/* #如果要重新运行

1 dfsadmin

[hadoop@web3 hadoop]$
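
The count reported by the job can be cross-checked locally with standard text tools. This is a rough sketch rather than what the Hadoop example does internally: the helper name `count_dfs_terms` is hypothetical, and it assumes a grep that supports -o and -E (GNU grep does).

```shell
# Hypothetical helper: count matches of dfs[a-z.]+ in the *.xml files of a directory,
# printing "count<TAB>term" with the most frequent term first.
# $1 = directory holding the input files
count_dfs_terms() {
  cat "$1"/*.xml |
    grep -oE 'dfs[a-z.]+' |      # one match per line
    sort | uniq -c |             # group identical matches and count them
    sort -k1,1nr -k2,2 |         # highest count first, ties ordered by term
    awk '{ print $1 "\t" $2 }'
}

# Possible use from /usr/local/hadoop:
#   count_dfs_terms ./input
```

The pipeline mirrors the two stages of the example job: the first sort and uniq -c play the role of counting matches, and the second sort orders the terms by frequency.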

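IV. Hadoop pseudo-distributed configuration

Hadoop can run on a single node in pseudo-distributed mode, where the Hadoop daemons run as separate Java processes. The node acts as both NameNode and DataNode, and files are read from HDFS.

1. Set the environment variables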

[hadoop@web3 hadoop]$ vim ~/.bashrc

# .bashrc

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

# Uncomment the following line if you don't like systemctl's auto-paging feature:

# export SYSTEMD_PAGER=

# User specific aliases and functions

#Java environment variables

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-1.el7_7.x86_64

#Hadoop environment Variables

export HADOOP_HOME=/usr/local/hadoop

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

Reload the environment variables with source ~/.bashrc.

2. Edit the configuration files

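The configuration files are in /usr/local/hadoop/etc/hadoop/. Pseudo-distributed mode requires changes to two of them, core-site.xml and hdfs-site.xml. Hadoop configuration files are XML, and each setting is declared as a property with a name and a value.

core-site.xml

Edit the property entries inside <configuration> (highlighted in red in the original post).

[hadoop@web3 hadoop]$ vim ./etc/hadoop/core-site.xml

<?xml version="1.0" encoding="UTF-8"?>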

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/usr/local/hadoop/tmp</value>

<description>Abase for other temporary directories.</description>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>


hdfs-site.xml

[hadoop@web3 hadoop]$ vim ./etc/hadoop/hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/hadoop/tmp/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/hadoop/tmp/dfs/data</value>

</property>

</configuration>
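
After editing, it helps to confirm that a property really carries the intended value. The following is a minimal sketch for doing that with awk. The helper name `get_property` is hypothetical, and it is not an XML parser: it assumes that <name> and <value> each sit on a single line and that <name> comes first, as in the two files above.

```shell
# Hypothetical helper: print the <value> of one property from a Hadoop *-site.xml file.
# $1 = config file, $2 = property name
get_property() {
  awk -v want="$2" '
    /<name>/  { name = $0; gsub(/.*<name>|<\/name>.*/, "", name) }
    /<value>/ { value = $0; gsub(/.*<value>|<\/value>.*/, "", value)
                if (name == want) print value }
  ' "$1"
}

# Possible use from /usr/local/hadoop:
#   get_property ./etc/hadoop/core-site.xml fs.defaultFS
#   get_property ./etc/hadoop/hdfs-site.xml dfs.replication
```

On a working installation, ./bin/hdfs getconf -confKey fs.defaultFS reports the effective value as Hadoop itself resolves it, which also covers defaults that are not written in the site files.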

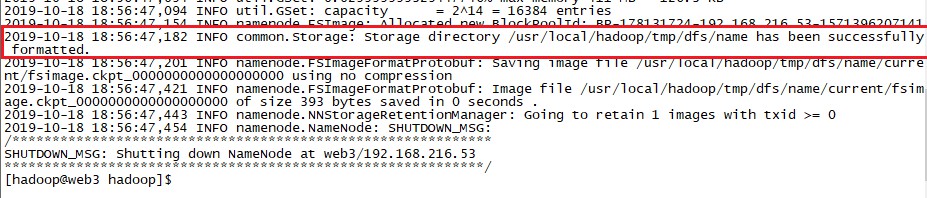

3. Format the NameNode

[hadoop@web3 hadoop]$ ./bin/hdfs namenode -format

WARNING: /usr/local/hadoop/logs does not exist. Creating.

-- ::, INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = web3/192.168.216.53

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 3.1.2

STARTUP_MSG: classpath = /usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/accessors-smart-1.2.jar:/usr/local/hadoop/share/hadoop/common/lib/asm-

... (a large amount of output omitted) ...

2019-10-18 18:56:47,031 INFO namenode.FSDirectory: XAttrs enabled? true

2019-10-18 18:56:47,032 INFO namenode.NameNode: Caching file names occurring more than 10 times

2019-10-18 18:56:47,046 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536

2019-10-18 18:56:47,049 INFO snapshot.SnapshotManager: SkipList is disabled

2019-10-18 18:56:47,057 INFO util.GSet: Computing capacity for map cachedBlocks

2019-10-18 18:56:47,057 INFO util.GSet: VM type = 64-bit

2019-10-18 18:56:47,057 INFO util.GSet: 0.25% max memory 411 MB = 1.0 MB

2019-10-18 18:56:47,058 INFO util.GSet: capacity = 2^17 = 131072 entries

2019-10-18 18:56:47,083 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

2019-10-18 18:56:47,084 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

2019-10-18 18:56:47,084 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

2019-10-18 18:56:47,090 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

2019-10-18 18:56:47,090 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

2019-10-18 18:56:47,094 INFO util.GSet: Computing capacity for map NameNodeRetryCache

2019-10-18 18:56:47,094 INFO util.GSet: VM type = 64-bit

2019-10-18 18:56:47,094 INFO util.GSet: 0.029999999329447746% max memory 411 MB = 126.3 KB

2019-10-18 18:56:47,094 INFO util.GSet: capacity = 2^14 = 16384 entries

2019-10-18 18:56:47,154 INFO namenode.FSImage: Allocated new BlockPoolId: BP-178131724-192.168.216.53-1571396207141

2019-10-18 18:56:47,182 INFO common.Storage: Storage directory /usr/local/hadoop/tmp/dfs/name has been successfully formatted.

2019-10-18 18:56:47,201 INFO namenode.FSImageFormatProtobuf: Saving image file /usr/local/hadoop/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

2019-10-18 18:56:47,421 INFO namenode.FSImageFormatProtobuf: Image file /usr/local/hadoop/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 393 bytes saved in 0 seconds .

2019-10-18 18:56:47,443 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2019-10-18 18:56:47,454 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at web3/192.168.216.53

************************************************************/

[hadoop@web3 hadoop]$

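After it runs, check the INFO messages in the last few lines to see whether it succeeded.

Here the line "Storage directory /usr/local/hadoop/tmp/dfs/name has been successfully formatted." shows that the format succeeded.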
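
Scanning the log by eye can be replaced with a small check. This is a sketch under assumptions: the helper name `format_succeeded` and the log path in the usage comment are hypothetical, and the check relies only on the success line that appears in the output above.

```shell
# Hypothetical helper: succeed only if a saved format log contains the success line.
# $1 = file holding the output of "hdfs namenode -format"
format_succeeded() {
  grep -q 'has been successfully formatted' "$1"
}

# Possible use from /usr/local/hadoop:
#   ./bin/hdfs namenode -format 2>&1 | tee /tmp/namenode-format.log
#   format_succeeded /tmp/namenode-format.log && echo "NameNode formatted"
```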

4. Start the NameNode and DataNode daemons

[hadoop@web3 hadoop]$ ./sbin/start-dfs.sh #--start the NameNode and DataNode daemons

Starting namenodes on [localhost]

localhost: Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.

Starting datanodes

Starting secondary namenodes [web3]

web3: Warning: Permanently added 'web3,fe80::9416:80e8:f210:1e24%ens33' (ECDSA) to the list of known hosts.

-- ::, WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[hadoop@web3 hadoop]$ jps #--check startup: if jps lists NameNode and DataNode, they are running

NameNode

DataNode

SecondaryNameNode

Jps

[hadoop@web3 hadoop]$

If WARN util.NativeCodeLoader appears, it can be ignored; this warning does not affect normal startup.
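
The jps check can be turned into a helper that names whichever daemon failed to start. This is a minimal sketch: the function name `missing_daemons` is hypothetical, and it assumes the usual jps output format of one "PID ClassName" pair per line.

```shell
# Hypothetical helper: read jps output on stdin and print each expected HDFS daemon
# that is NOT listed. No output means all three are running.
missing_daemons() {
  jps_out=$(cat)
  for d in NameNode DataNode SecondaryNameNode; do
    # Compare the second column exactly, so NameNode does not match SecondaryNameNode.
    printf '%s\n' "$jps_out" |
      awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' ||
      printf '%s\n' "$d"
  done
}

# Possible use:
#   jps | missing_daemons
```

If a daemon is reported missing, its log file under /usr/local/hadoop/logs is the first place to look.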

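5. Check the listening ports and open the web UI

1) Check the listening ports

In the author's run, shown below, the port of interest is 43332: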

[hadoop@web3 hadoop]$ netstat -unltop

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name Timer

...

tcp 0 0 127.0.0.1:43332 0.0.0.0:* LISTEN 17553/java off (0.00/0/0)

...

[hadoop@web3 hadoop]$
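
Picking the Java listeners out of a long netstat listing is easier with a filter. The sketch below is an illustration only: the helper name `java_listen_ports` is hypothetical, and it assumes the column layout shown in the header above, with the local address in column 4, the state in column 6, and PID/Program name in column 7.

```shell
# Hypothetical helper: read netstat output on stdin and print the ports
# on which java processes are listening, one per line, in numeric order.
java_listen_ports() {
  awk '$6 == "LISTEN" && $7 ~ /\/java$/ {
         n = split($4, parts, ":")   # the port is the last ":"-separated piece
         print parts[n]
       }' | sort -n | uniq
}

# Possible use:
#   netstat -tnlp 2>/dev/null | java_listen_ports
```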

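2) Open the web UI

After a successful start, open the web UI to view NameNode and DataNode information and to browse the files in HDFS. The author used the URL below. With default settings, Hadoop 3.x serves the NameNode web UI on port 9870 (dfs.namenode.http-address), so try http://localhost:9870 if this one does not respond.

http://localhost:43332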

V. A pseudo-distributed example

1. Create the user directory in HDFS

#--create the user directory in HDFS

[hadoop@web3 hadoop]$ ./bin/hdfs dfs -mkdir -p /user/hadoop

2019-10-18 22:56:44,350 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2. Create an input directory and copy all the XML files from /usr/local/hadoop/etc/hadoop into it

[hadoop@web3 hadoop]$ ./bin/hdfs dfs -mkdir input

2019-10-18 22:58:03,745 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[hadoop@web3 hadoop]$ ./bin/hdfs dfs -put ./etc/hadoop/*.xml input

2019-10-18 22:58:39,703 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

3. List the files in HDFS

[hadoop@web3 hadoop]$ ./bin/hdfs dfs -ls input

-- ::, WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 9 items

-rw-r--r-- hadoop supergroup -- : input/capacity-scheduler.xml

-rw-r--r-- hadoop supergroup -- : input/core-site.xml

-rw-r--r-- hadoop supergroup -- : input/hadoop-policy.xml

-rw-r--r-- hadoop supergroup -- : input/hdfs-site.xml

-rw-r--r-- hadoop supergroup -- : input/httpfs-site.xml

-rw-r--r-- hadoop supergroup -- : input/kms-acls.xml

-rw-r--r-- hadoop supergroup -- : input/kms-site.xml

-rw-r--r-- hadoop supergroup -- : input/mapred-site.xml

-rw-r--r-- hadoop supergroup -- : input/yarn-site.xml

[hadoop@web3 hadoop]$

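4. Run the example

A MapReduce job is run in pseudo-distributed mode the same way as in standalone mode; the difference is that pseudo-distributed mode reads its files from HDFS.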

[hadoop@web3 hadoop]$ ./bin/hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.2.jar grep input output 'dfs[a-z.]+'

2019-10-18 23:06:38,782 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2019-10-18 23:06:40,494 INFO impl.MetricsConfig: loaded properties from hadoop-metrics2.properties

2019-10-18 23:06:40,809 INFO impl.MetricsSystemImpl: Scheduled Metric snapshot period at 10 second(s).

2019-10-18 23:06:40,810 INFO impl.MetricsSystemImpl: JobTracker metrics system started

2019-10-18 23:06:41,480 INFO input.FileInputFormat: Total input files to process : 9

2019-10-18 23:06:41,591 INFO mapreduce.JobSubmitter: number of splits:9

2019-10-18 23:06:42,290 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local1738759870_0001

2019-10-18 23:06:42,293 INFO mapreduce.JobSubmitter: Executing with tokens: []

... (output omitted) ...

Shuffle Errors

BAD_ID=

CONNECTION=

IO_ERROR=

WRONG_LENGTH=

WRONG_MAP=

WRONG_REDUCE=

File Input Format Counters

Bytes Read=

File Output Format Counters

Bytes Written=

#---check the result

[hadoop@web3 hadoop]$ ./bin/hdfs dfs -cat output/*

2019-10-18 23:07:19,640 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

1 dfsadmin

1 dfs.replication

1 dfs.namenode.name.dir

1 dfs.datanode.data.dir

[hadoop@web3 hadoop]$

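5. Example 2: copying the results back to the local machine

Delete the local output directory:

rm -r ./output

Copy the output directory from HDFS to the local machine:

./bin/hdfs dfs -get output ./output

View the results:

cat ./output/*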

[hadoop@web3 hadoop]$ rm -r ./output

[hadoop@web3 hadoop]$ ./bin/hdfs dfs -get output ./output

-- ::, WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[hadoop@web3 hadoop]$ cat ./output/*

1 dfsadmin

1 dfs.replication

1 dfs.namenode.name.dir

1 dfs.datanode.data.dir

[hadoop@web3 hadoop]$

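Delete the output directory in HDFS.

Note that the output directory must not already exist when Hadoop runs a job; otherwise the job fails with an error.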

[hadoop@web3 hadoop]$ ./bin/hdfs dfs -rm -r output

-- ::, WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Deleted output

[hadoop@web3 hadoop]$
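
Because a job refuses to start when its output directory exists, the cleanup can be folded into a guard that runs before each job. This is a sketch under assumptions: the function name `clean_hdfs_output` and the `HDFS_CMD` variable are hypothetical conveniences, while dfs -test -e and dfs -rm -r are the standard HDFS shell subcommands.

```shell
# Hypothetical wrapper: remove an HDFS output directory only if it exists,
# so that a job can be re-run without an "already exists" failure.
# HDFS_CMD is an assumed variable for swapping the hdfs binary; it defaults to ./bin/hdfs.
# $1 = HDFS output path (for example: output)
clean_hdfs_output() {
  hdfs_cmd=${HDFS_CMD:-./bin/hdfs}
  # "dfs -test -e" exits 0 when the path exists.
  if "$hdfs_cmd" dfs -test -e "$1"; then
    "$hdfs_cmd" dfs -rm -r "$1"
  fi
}

# Possible use from /usr/local/hadoop, before submitting the grep example again:
#   clean_hdfs_output output
```

Testing for existence first avoids the error message that dfs -rm -r prints when the directory is already gone.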

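VI. Starting YARN

YARN can also be started in pseudo-distributed mode, and doing so generally does not affect how programs run. The ./sbin/start-dfs.sh command above starts only the HDFS daemons, and MapReduce jobs then run through the local job runner. Starting YARN as well lets it take over resource management and task scheduling.

The examples above also show no JobTracker or TaskTracker. This is because newer Hadoop versions use a new MapReduce framework (MapReduce V2, also known as YARN, Yet Another Resource Negotiator).

YARN was split out of MapReduce and is responsible for resource management and task scheduling. MapReduce now runs on top of YARN, which provides high availability and scalability.

1. Edit mapred-site.xml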

[hadoop@web3 hadoop]$ cat ./etc/hadoop/mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

2. Edit yarn-site.xml

[hadoop@web3 hadoop]$ cat ./etc/hadoop/yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

3. Start YARN

[hadoop@web3 hadoop]$ ./sbin/start-yarn.sh

Starting resourcemanager

Starting nodemanagers

[hadoop@web3 hadoop]$ jps

DataNode

ResourceManager #---new after startup: ResourceManager

Jps

NodeManager #---new after startup: NodeManager

SecondaryNameNode

NameNode

#---start the history server so that job runs can be viewed in the web UI

[hadoop@web3 hadoop]$ ./sbin/mr-jobhistory-daemon.sh start historyserver

4、提示

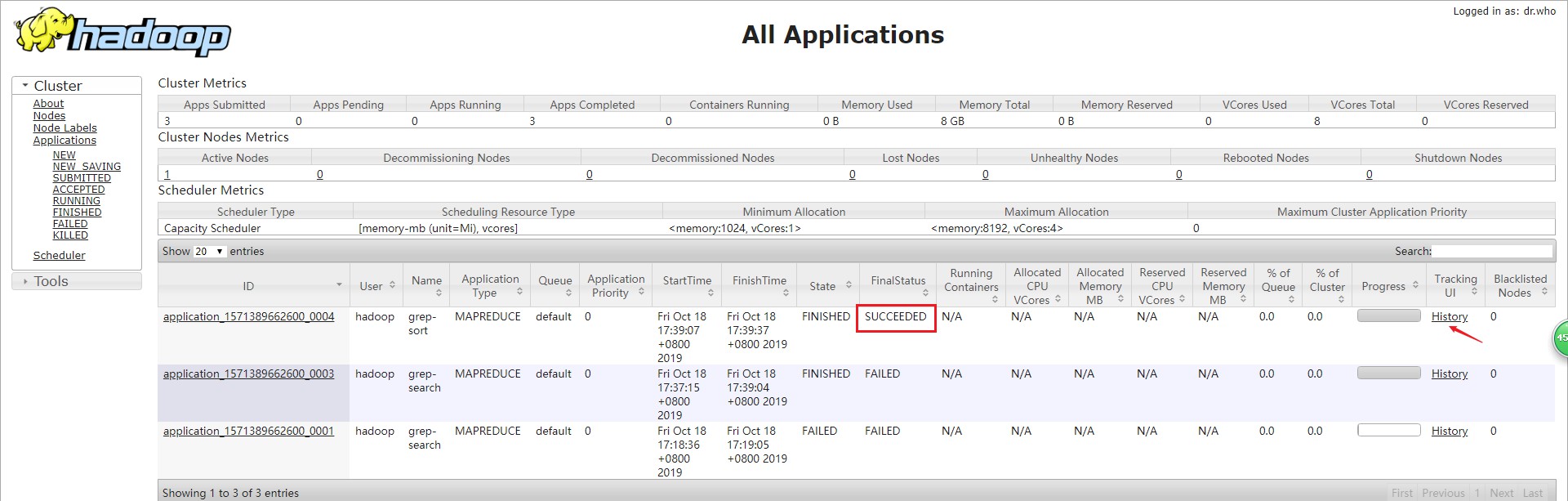

启动YARN之后,运行实例的方法还是一样的,仅仅是资源管理方式、任务调度不同。观察日志可以发现,不启用YARN时,是“mapred.LocalJobRunner”在跑,启用YARN之后,是“mapred.YARNRuner”在跑任务,启用YARN有个好处是可以通过web界面查看任务情况

http://localhost:8088/cluster

通过netstat -untlop可以看到监听到了8088

[hadoop@web3 hadoop]$ netstat -untlop

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name Timer

...

tcp 0 0 0.0.0.0:8088 0.0.0.0:* LISTEN 24982/java off (0.00/0/0)

...

[hadoop@web3 hadoop]$

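5. Open the web UI

6. Run a job

The job fails with this error:

Error: Could not find or load main class org.apache.hadoop.mapreduce.v2.app.MRAppMaster Please check whether your etc/hadoop/mapred-site.xml contains the below configuration:

The full message is shown below. The troubleshooting step that follows applies its hint and edits mapred-site.xml.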

[-- ::52.678]Container exited with a non-zero exit code . Error file: prelaunch.err.

Last bytes of prelaunch.err :

Last bytes of stderr :

Error: Could not find or load main class org.apache.hadoop.mapreduce.v2.app.MRAppMaster Please check whether your etc/hadoop/mapred-site.xml contains the below configuration:

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=${full path of your hadoop distribution directory}</value>

</property>

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=${full path of your hadoop distribution directory}</value>

</property>

<property>

<name>mapreduce.reduce.env</name>

<value>HADOOP_MAPRED_HOME=${full path of your hadoop distribution directory}</value>

</property>

[-- ::52.679]Container exited with a non-zero exit code . Error file: prelaunch.err.

Last bytes of prelaunch.err :

Last bytes of stderr :

Error: Could not find or load main class org.apache.hadoop.mapreduce.v2.app.MRAppMaster Please check whether your etc/hadoop/mapred-site.xml contains the below configuration:

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=${full path of your hadoop distribution directory}</value>

</property>

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=${full path of your hadoop distribution directory}</value>

</property>

<property>

<name>mapreduce.reduce.env</name>

<value>HADOOP_MAPRED_HOME=${full path of your hadoop distribution directory}</value>

</property>

7. Troubleshooting

Edit the configuration file mapred-site.xml:

[root@web3 hadoop]# cat ./etc/hadoop/mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>

</property>

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>

</property>

<property>

<name>mapreduce.reduce.env</name>

<value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>

</property>

</configuration>

[root@web3 hadoop]#
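
The error message asks for the full path of the Hadoop distribution directory, so an alternative to $HADOOP_HOME is to write the absolute path out. The sketch below only generates the three property blocks for pasting into mapred-site.xml. The function name `mapred_env_properties` is hypothetical, and /usr/local/hadoop in the usage comment is the install path used earlier in this article.

```shell
# Hypothetical generator: print the three env properties from the error message,
# filled in with a concrete Hadoop directory.
# $1 = full path of the Hadoop distribution directory
mapred_env_properties() {
  for name in yarn.app.mapreduce.am.env mapreduce.map.env mapreduce.reduce.env; do
    printf '<property>\n<name>%s</name>\n<value>HADOOP_MAPRED_HOME=%s</value>\n</property>\n' \
      "$name" "$1"
  done
}

# Possible use:
#   mapred_env_properties /usr/local/hadoop
```

The printed blocks belong inside <configuration> in mapred-site.xml, and YARN should be restarted afterwards so the new settings are picked up.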

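8. Run it again

The job now succeeds:

[hadoop@web3 hadoop]$ ./bin/hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.2.jar grep input output 'dfs[a-z.]+'

YARN mainly provides better resource management and task scheduling for clusters. On a single machine it tends to make programs run slower instead, so whether to enable YARN on a single node depends on your situation.

9. Stop YARN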

./sbin/stop-yarn.sh

./sbin/mr-jobhistory-daemon.sh stop historyserver
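
To shut the whole pseudo-distributed setup down, the daemons can be stopped in the reverse of their start order. This is a sketch under assumptions: the function name `stop_pseudo_cluster` and the `DRY_RUN` variable are hypothetical, and ./sbin/stop-dfs.sh, the counterpart of start-dfs.sh, is an addition that the steps above do not include.

```shell
# Hypothetical wrapper: stop history server, YARN, then HDFS (reverse start order).
# With DRY_RUN=1 the commands are only printed, which makes the order easy to review.
# Run it from the Hadoop directory (/usr/local/hadoop in this article).
stop_pseudo_cluster() {
  for cmd in \
    "./sbin/mr-jobhistory-daemon.sh stop historyserver" \
    "./sbin/stop-yarn.sh" \
    "./sbin/stop-dfs.sh"
  do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      printf '%s\n' "$cmd"
    else
      $cmd   # intentionally unquoted so the string splits into command and arguments
    fi
  done
}

# Possible use:
#   DRY_RUN=1 stop_pseudo_cluster   # print the commands only
#   stop_pseudo_cluster             # actually stop the daemons
```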

Reference 1: http://dblab.xmu.edu.cn/blog/install-hadoop-in-centos/

Reference 2: http://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-common/SingleCluster.html

When reposting, please credit the source: https://www.cnblogs.com/zhangxingeng/p/11675760.html
