Kubernetes - Building an HBase Cluster on a Kubernetes Cluster

After three days of work I finally got an HBase cluster running on Kubernetes. Here are the steps.

Create the HBase image

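The configuration files are core-site.xml, hbase-site.xml, hdfs-site.xml and yarn-site.xml. This setup builds on the ZooKeeper and Hadoop environments I deployed earlier, so many values in these files are specific to those two environments. The current image configuration does not support a highly available HBase cluster. For HA you would need to build a separate image.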

core-site.xml

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://hadoop-hdfs-master:9000/</value>
    </property>
    <property>
        <name>io.compression.codecs</name>
        <value>
            org.apache.hadoop.io.compress.GzipCodec,
            org.apache.hadoop.io.compress.DefaultCodec,
            com.hadoop.compression.lzo.LzoCodec,
            com.hadoop.compression.lzo.LzopCodec,
            org.apache.hadoop.io.compress.BZip2Codec
        </value>
    </property>
    <property>
        <name>io.compression.codec.lzo.class</name>
        <value>com.hadoop.compression.lzo.LzoCodec</value>
    </property>
    <property>
        <name>dfs.namenode.rpc-bind-host</name>
        <value>0.0.0.0</value>
    </property>
    <property>
        <name>hadoop.security.token.service.use_ip</name>
        <value>false</value>
    </property>
</configuration>

hbase-site.xml

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
    </property>
    <property>
        <name>hbase.rootdir</name>
        <value>hdfs://@HDFS_PATH@/hbase/</value>
    </property>
    <property>
        <name>hbase.zookeeper.quorum</name>
        <value>@ZOOKEEPER_IP_LIST@</value>
    </property>
    <property>
        <name>hbase.zookeeper.property.clientPort</name>
        <value>@ZOOKEEPER_PORT@</value>
    </property>
    <property>
        <name>hbase.regionserver.restart.on.zk.expire</name>
        <value>true</value>
    </property>
    <property>
        <name>hbase.client.pause</name>
        <value>50</value>
    </property>
    <property>
        <name>hbase.client.retries.number</name>
        <value>3</value>
    </property>
    <property>
        <name>hbase.rpc.timeout</name>
        <value>2000</value>
    </property>
    <property>
        <name>hbase.client.operation.timeout</name>
        <value>3000</value>
    </property>
    <property>
        <name>hbase.client.scanner.timeout.period</name>
        <value>10000</value>
    </property>
    <property>
        <name>zookeeper.session.timeout</name>
        <value>300000</value>
    </property>
    <property>
        <name>hbase.hregion.max.filesize</name>
        <value>1073741824</value>
    </property>
    <property>
        <name>fs.hdfs.impl</name>
        <value>org.apache.hadoop.hdfs.DistributedFileSystem</value>
    </property>
    <property>
        <name>hbase.client.keyvalue.maxsize</name>
        <value>1048576000</value>
    </property>
</configuration>
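In hbase-site.xml, the values @HDFS_PATH@, @ZOOKEEPER_IP_LIST@ and @ZOOKEEPER_PORT@ are placeholders. The startup script fills them in from the Pod's environment variables. As the script is written, a missing variable is silently replaced by an empty string, which produces a value such as hdfs:///hbase/.

Below is a minimal sketch of a stricter rendering step. It assumes the same variable names as hbase.yaml. The function name render_hbase_site is hypothetical and is not part of the original image.

```bash
#!/usr/bin/env bash
# Hypothetical helper: render an hbase-site.xml template into a separate file,
# refusing to run when a required environment variable is unset or empty.
render_hbase_site() {
  local template="$1" output="$2"
  if [ -z "${HDFS_PATH:-}" ] || [ -z "${ZOOKEEPER_SERVICE_LIST:-}" ] || [ -z "${ZOOKEEPER_PORT:-}" ]; then
    echo "HDFS_PATH, ZOOKEEPER_SERVICE_LIST and ZOOKEEPER_PORT must all be set" >&2
    return 1
  fi
  # '|' is used as the sed delimiter so values containing '/' cannot break the expression
  sed -e "s|@HDFS_PATH@|${HDFS_PATH}|g" \
      -e "s|@ZOOKEEPER_IP_LIST@|${ZOOKEEPER_SERVICE_LIST}|g" \
      -e "s|@ZOOKEEPER_PORT@|${ZOOKEEPER_PORT}|g" \
      "$template" > "$output"
  # A placeholder left behind means the template and the script disagree
  if grep -q '@[A-Z_]*@' "$output"; then
    echo "unresolved placeholder in $output" >&2
    return 1
  fi
}
```

Because the output goes to a separate file, the template stays intact and can be rendered again after a container restart. The in-place sed calls in the original script do not allow this.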

hdfs-site.xml

<?xml version="1.0"?>
<configuration>
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:///root/hdfs/namenode</value>
        <description>NameNode directory for namespace and transaction logs storage.</description>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:///root/hdfs/datanode</value>
        <description>DataNode directory</description>
    </property>
    <property>
        <name>dfs.namenode.datanode.registration.ip-hostname-check</name>
        <value>false</value>
    </property>
    <property>
        <name>dfs.replication</name>
        <value>2</value>
    </property>
</configuration>

yarn-site.xml

<configuration>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
    </property>
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>hadoop-hdfs-master</value>
    </property>
    <property>
        <name>yarn.resourcemanager.bind-host</name>
        <value>0.0.0.0</value>
    </property>
</configuration>

start-kubernetes-hbase.sh

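#!/bin/bash

export HBASE_CONF_FILE=/opt/hbase/conf/hbase-site.xml
export HADOOP_USER_NAME=root
export HBASE_MANAGES_ZK=false
# hbase.yaml does not set CLUSTER_DOMAIN; fall back to the default Kubernetes cluster domain
CLUSTER_DOMAIN=${CLUSTER_DOMAIN:-cluster.local}

# fill the placeholders in hbase-site.xml from the Pod's environment variables
sed -i "s/@HDFS_PATH@/$HDFS_PATH/g" "$HBASE_CONF_FILE"
sed -i "s/@ZOOKEEPER_IP_LIST@/$ZOOKEEPER_SERVICE_LIST/g" "$HBASE_CONF_FILE"
sed -i "s/@ZOOKEEPER_PORT@/$ZOOKEEPER_PORT/g" "$HBASE_CONF_FILE"
# no-op unless hbase-site.xml contains an @ZNODE_PARENT@ placeholder
sed -i "s/@ZNODE_PARENT@/$ZNODE_PARENT/g" "$HBASE_CONF_FILE"

# set fqdn: add <pod>.<namespace>.svc.<domain> in front of the Pod's entry in /etc/hosts
for i in $(seq 1 10)
do
    if grep --quiet "$CLUSTER_DOMAIN" /etc/hosts; then
        break
    elif grep --quiet "$POD_NAME" /etc/hosts; then
        sed "s/$POD_NAME/${POD_NAME}.${POD_NAMESPACE}.svc.${CLUSTER_DOMAIN} $POD_NAME/g" /etc/hosts > /etc/hosts.bak
        # /etc/hosts is mounted by the kubelet, so overwrite its contents instead of replacing the file
        cat /etc/hosts.bak > /etc/hosts
        break
    else
        echo "waiting for /etc/hosts ready"
        sleep 1
    fi
done

if [ "$HBASE_SERVER_TYPE" = "master" ]; then
    /opt/hbase/bin/hbase master start
elif [ "$HBASE_SERVER_TYPE" = "regionserver" ]; then
    /opt/hbase/bin/hbase regionserver start
fi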
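The "set fqdn" loop makes the Pod's hostname resolve as <pod>.<namespace>.svc.<domain>. This appears to be chosen so that the name matches the DNS name of the headless Service that shares the Pod's name.

Below is a sketch of the same rewrite as a pure function, which can be tested off-cluster. It swaps the sed substitution for awk so that only the hostname column is matched. With sed, hbase-region-1 would also match inside a hypothetical hbase-region-10. The function name rewrite_hosts and the cluster.local default are assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical pure-function version of the /etc/hosts rewrite:
# reads a hosts file on stdin, writes the rewritten version to stdout.
rewrite_hosts() {
  local pod_name="$1" namespace="$2" domain="${3:-cluster.local}"
  local fqdn="${pod_name}.${namespace}.svc.${domain}"
  # Only the line whose hostname column equals the pod name is changed;
  # the FQDN goes first so it becomes the canonical name for that IP.
  awk -v pod="$pod_name" -v fqdn="$fqdn" '
    $2 == pod { $2 = fqdn " " pod }
    { print }
  '
}
```

Running it twice is harmless: after the first pass the second column is the FQDN rather than the pod name, so nothing matches again.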

Dockerfile

FROM java:8

MAINTAINER leo.lee(lis85@163.com)

ENV HBASE_VERSION 1.2.6.1
ENV HBASE_INSTALL_DIR /opt/hbase
ENV JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

RUN mkdir -p ${HBASE_INSTALL_DIR} && \
    curl -L http://mirrors.hust.edu.cn/apache/hbase/stable/hbase-${HBASE_VERSION}-bin.tar.gz | tar -xz --strip=1 -C ${HBASE_INSTALL_DIR}

RUN sed -i "s/httpredir.debian.org/mirrors.163.com/g" /etc/apt/sources.list

# build LZO
# RUN uses /bin/sh, which does not expand {a,b} braces, so only the apt lists are removed explicitly;
# LD_LIBRARY_PATH is escaped so it is expanded at container start, not at build time;
# the final cleanup uses absolute paths because the working directory is /tmp/hadoop-lzo at that point
WORKDIR /tmp
RUN apt-get update && \
    apt-get install -y build-essential maven lzop liblzo2-2 && \
    wget http://www.oberhumer.com/opensource/lzo/download/lzo-2.10.tar.gz && \
    tar zxvf lzo-2.10.tar.gz && \
    cd lzo-2.10 && \
    ./configure --enable-shared --prefix /usr/local/lzo-2.10 && \
    make && make install && \
    cd .. && git clone https://github.com/twitter/hadoop-lzo.git && cd hadoop-lzo && \
    git checkout release-0.4.20 && \
    C_INCLUDE_PATH=/usr/local/lzo-2.10/include LIBRARY_PATH=/usr/local/lzo-2.10/lib mvn clean package && \
    apt-get remove -y build-essential maven && \
    apt-get clean autoclean && \
    apt-get autoremove --yes && \
    rm -rf /var/lib/apt/lists/* && \
    cd target/native/Linux-amd64-64 && \
    tar -cBf - -C lib . | tar -xBvf - -C /tmp && \
    mkdir -p ${HBASE_INSTALL_DIR}/lib/native && \
    cp /tmp/libgplcompression* ${HBASE_INSTALL_DIR}/lib/native/ && \
    cd /tmp/hadoop-lzo && cp target/hadoop-lzo-0.4.20.jar ${HBASE_INSTALL_DIR}/lib/ && \
    echo "export LD_LIBRARY_PATH=\$LD_LIBRARY_PATH:/usr/local/lzo-2.10/lib" >> ${HBASE_INSTALL_DIR}/conf/hbase-env.sh && \
    cd /tmp && rm -rf /tmp/lzo-2.10* /tmp/hadoop-lzo /tmp/libgplcompression*

ADD hbase-site.xml /opt/hbase/conf/hbase-site.xml
ADD core-site.xml /opt/hbase/conf/core-site.xml
ADD hdfs-site.xml /opt/hbase/conf/hdfs-site.xml
ADD start-kubernetes-hbase.sh /opt/hbase/bin/start-kubernetes-hbase.sh
RUN chmod +x /opt/hbase/bin/start-kubernetes-hbase.sh

WORKDIR ${HBASE_INSTALL_DIR}

RUN echo "export HBASE_JMX_BASE=\"-Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.authenticate=false\"" >> conf/hbase-env.sh && \
    echo "export HBASE_MASTER_OPTS=\"\$HBASE_MASTER_OPTS \$HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10101\"" >> conf/hbase-env.sh && \
    echo "export HBASE_REGIONSERVER_OPTS=\"\$HBASE_REGIONSERVER_OPTS \$HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10102\"" >> conf/hbase-env.sh && \
    echo "export HBASE_THRIFT_OPTS=\"\$HBASE_THRIFT_OPTS \$HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10103\"" >> conf/hbase-env.sh && \
    echo "export HBASE_ZOOKEEPER_OPTS=\"\$HBASE_ZOOKEEPER_OPTS \$HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10104\"" >> conf/hbase-env.sh && \
    echo "export HBASE_REST_OPTS=\"\$HBASE_REST_OPTS \$HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10105\"" >> conf/hbase-env.sh

ENV PATH=$PATH:/opt/hbase/bin

CMD /opt/hbase/bin/start-kubernetes-hbase.sh

Put all of these files in the same directory, then build the image:

docker build -t leo/hbase:1.2.6.1 .
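core-site.xml registers com.hadoop.compression.lzo.LzoCodec, so it is worth checking that LZO actually loads in the new image before deploying it. HBase ships a utility for this, org.apache.hadoop.hbase.util.CompressionTest.

Below is a sketch that runs the utility in a throwaway container. It assumes the leo/hbase:1.2.6.1 tag from the command above and uses a hypothetical helper name, check_lzo.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run HBase's CompressionTest inside a throwaway
# container to check that the LZO codec and its native library load.
check_lzo() {
  local image="${1:-leo/hbase:1.2.6.1}"
  # --rm deletes the container afterwards; the test file lives in the container's /tmp.
  # The explicit command overrides the image's CMD, so no HBase daemon is started.
  docker run --rm "$image" \
    /opt/hbase/bin/hbase org.apache.hadoop.hbase.util.CompressionTest \
    file:///tmp/lzo-check lzo
}
```

If this check fails, look first at the LZO build steps in the Dockerfile. Kubernetes is not involved at this stage.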

Once the build succeeds, the image appears in the output of 【docker images】.
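The build command tags the image leo/hbase:1.2.6.1, but hbase.yaml pulls registry.docker.uih/library/leo-hbase:1.2.6.1. Because of imagePullPolicy: IfNotPresent, each node either needs that exact name locally or must be able to pull it, so the image has to be retagged and pushed.

Below is a sketch. It assumes your own registry takes the place of registry.docker.uih and uses a hypothetical helper name, publish_image.

```bash
#!/usr/bin/env bash
# Hypothetical helper: retag the locally built image with the name used in
# hbase.yaml and push it to that registry.
publish_image() {
  local src="${1:-leo/hbase:1.2.6.1}"
  local dst="${2:-registry.docker.uih/library/leo-hbase:1.2.6.1}"
  docker tag "$src" "$dst" && docker push "$dst"
}
```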

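【Note】 There is a pitfall with the line endings of 【start-kubernetes-hbase.sh】. If the file was created on a Windows machine, it defaults to DOS format (CRLF). Unless you convert it to Unix format, running it fails with 【/bin/bash^M: bad interpreter: No such file or directory】 and the script cannot run on Linux. The fix is simple: open the file in vim, press 【ESC】, type 【:set ff=unix】, press Enter, and save with 【:wq】.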
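The same conversion can be done without opening an editor, which is handy in scripts. The sketch below assumes GNU sed, whose s/\r$// understands \r. The helper name fix_line_endings is hypothetical. The dos2unix tool does the same job where it is installed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: strip Windows CR characters from line endings in place,
# the non-interactive equivalent of ':set ff=unix' in vim (requires GNU sed).
fix_line_endings() {
  local f cr
  cr=$(printf '\r')
  for f in "$@"; do
    if grep -q "$cr" "$f"; then
      sed -i 's/\r$//' "$f"
      echo "converted: $f"
    fi
  done
}
```

For example, running fix_line_endings start-kubernetes-hbase.sh before docker build avoids the bad interpreter error.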

Write the YAML file

hbase.yaml

apiVersion: v1
kind: Service
metadata:
  name: hbase-master
spec:
  clusterIP: None
  selector:
    app: hbase-master
  ports:
  - name: rpc
    port: 16000
  - name: http
    port: 16010
---
apiVersion: v1
kind: Pod
metadata:
  name: hbase-master
  labels:
    app: hbase-master
spec:
  containers:
  - env:
    - name: POD_NAMESPACE
      valueFrom:
        fieldRef:
          fieldPath: metadata.namespace
    - name: POD_NAME
      valueFrom:
        fieldRef:
          fieldPath: metadata.name
    - name: HBASE_SERVER_TYPE
      value: master
    - name: HDFS_PATH
      value: hadoop-hdfs-master:9000
    - name: ZOOKEEPER_SERVICE_LIST
      value: zk-cs
    - name: ZOOKEEPER_PORT
      value: "2181"
    image: registry.docker.uih/library/leo-hbase:1.2.6.1
    imagePullPolicy: IfNotPresent
    name: hbase-master
    ports:
    - containerPort: 16000
      protocol: TCP
    - containerPort: 16010
      protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: hbase-region-1
spec:
  clusterIP: None
  selector:
    app: hbase-region-1
  ports:
  - name: rpc
    port: 16020
  - name: http
    port: 16030
---
apiVersion: v1
kind: Service
metadata:
  name: hbase-region-2
spec:
  clusterIP: None
  selector:
    app: hbase-region-2
  ports:
  - name: rpc
    port: 16020
  - name: http
    port: 16030
---
apiVersion: v1
kind: Service
metadata:
  name: hbase-region-3
spec:
  clusterIP: None
  selector:
    app: hbase-region-3
  ports:
  - name: rpc
    port: 16020
  - name: http
    port: 16030
---
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: hbase-region-1
  name: hbase-region-1
spec:
  containers:
  - env:
    - name: POD_NAMESPACE
      valueFrom:
        fieldRef:
          fieldPath: metadata.namespace
    - name: POD_NAME
      valueFrom:
        fieldRef:
          fieldPath: metadata.name
    - name: HBASE_SERVER_TYPE
      value: regionserver
    - name: HDFS_PATH
      value: hadoop-hdfs-master:9000
    - name: ZOOKEEPER_SERVICE_LIST
      value: zk-cs
    - name: ZOOKEEPER_PORT
      value: "2181"
    image: registry.docker.uih/library/leo-hbase:1.2.6.1
    imagePullPolicy: IfNotPresent
    name: hbase-region-1
    ports:
    - containerPort: 16020
      protocol: TCP
    - containerPort: 16030
      protocol: TCP
---
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: hbase-region-2
  name: hbase-region-2
spec:
  containers:
  - env:
    - name: POD_NAMESPACE
      valueFrom:
        fieldRef:
          fieldPath: metadata.namespace
    - name: POD_NAME
      valueFrom:
        fieldRef:
          fieldPath: metadata.name
    - name: HBASE_SERVER_TYPE
      value: regionserver
    - name: HDFS_PATH
      value: hadoop-hdfs-master:9000
    - name: ZOOKEEPER_SERVICE_LIST
      value: zk-cs
    - name: ZOOKEEPER_PORT
      value: "2181"
    image: registry.docker.uih/library/leo-hbase:1.2.6.1
    imagePullPolicy: IfNotPresent
    name: hbase-region-2
    ports:
    - containerPort: 16020
      protocol: TCP
    - containerPort: 16030
      protocol: TCP
---
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: hbase-region-3
  name: hbase-region-3
spec:
  containers:
  - env:
    - name: POD_NAMESPACE
      valueFrom:
        fieldRef:
          fieldPath: metadata.namespace
    - name: POD_NAME
      valueFrom:
        fieldRef:
          fieldPath: metadata.name
    - name: HBASE_SERVER_TYPE
      value: regionserver
    - name: HDFS_PATH
      value: hadoop-hdfs-master:9000
    - name: ZOOKEEPER_SERVICE_LIST
      value: zk-cs
    - name: ZOOKEEPER_PORT
      value: "2181"
    image: registry.docker.uih/library/leo-hbase:1.2.6.1
    imagePullPolicy: IfNotPresent
    name: hbase-region-3
    ports:
    - containerPort: 16020
      protocol: TCP
    - containerPort: 16030
      protocol: TCP

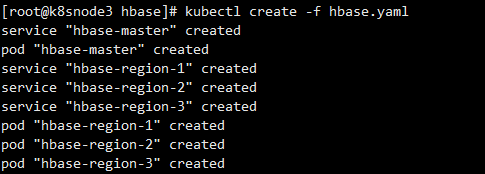
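The three regionserver Service/Pod pairs differ only in their index. Below is a sketch of a generator that prints the same definitions for any number of regionservers. It assumes the image name and environment values used in hbase.yaml. The name gen_regionservers is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical generator: print one headless Service plus one Pod per
# regionserver, mirroring the hbase-region-N definitions in hbase.yaml.
gen_regionservers() {
  local count="${1:-3}"
  local image="${2:-registry.docker.uih/library/leo-hbase:1.2.6.1}"
  local i name
  for i in $(seq 1 "$count"); do
    name="hbase-region-$i"
    cat <<EOF
---
apiVersion: v1
kind: Service
metadata:
  name: $name
spec:
  clusterIP: None
  selector:
    app: $name
  ports:
  - name: rpc
    port: 16020
  - name: http
    port: 16030
---
apiVersion: v1
kind: Pod
metadata:
  name: $name
  labels:
    app: $name
spec:
  containers:
  - name: $name
    image: $image
    imagePullPolicy: IfNotPresent
    env:
    - name: POD_NAMESPACE
      valueFrom:
        fieldRef:
          fieldPath: metadata.namespace
    - name: POD_NAME
      valueFrom:
        fieldRef:
          fieldPath: metadata.name
    - name: HBASE_SERVER_TYPE
      value: regionserver
    - name: HDFS_PATH
      value: hadoop-hdfs-master:9000
    - name: ZOOKEEPER_SERVICE_LIST
      value: zk-cs
    - name: ZOOKEEPER_PORT
      value: "2181"
    ports:
    - containerPort: 16020
      protocol: TCP
    - containerPort: 16030
      protocol: TCP
EOF
  done
}
```

For example, gen_regionservers 3 | kubectl create -f - would create the same three regionservers, provided hbase.yaml is trimmed so it does not define them a second time.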

Create the Services and Pods

kubectl create -f hbase.yaml

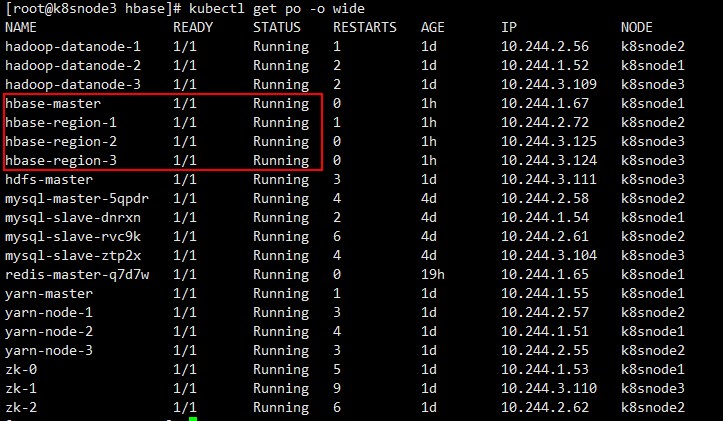

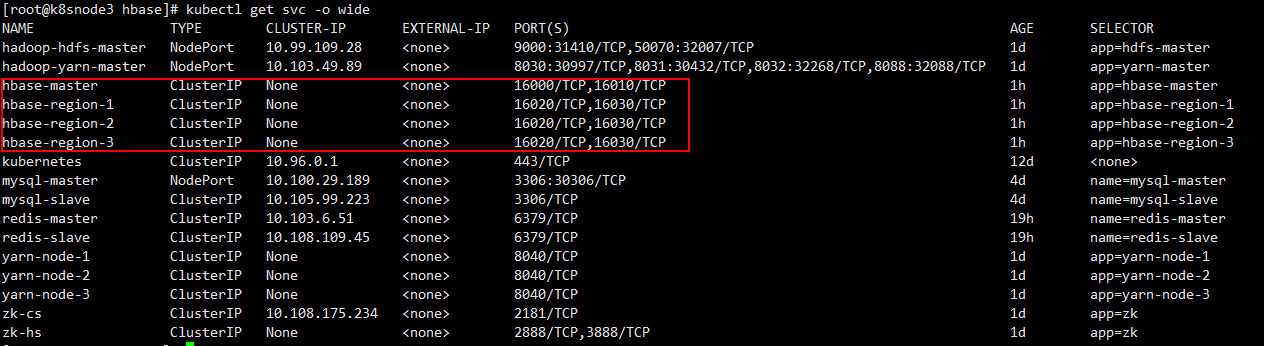

Check the Pods and the Services:

kubectl get po -o wide

kubectl get svc -o wide
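These Pods have no readiness probe. A Running or Ready status therefore only means the containers started, not that the RegionServers registered with the master.

Below is a sketch of a fuller check. It assumes the Pod names from hbase.yaml, the current namespace, and a hypothetical helper name, verify_hbase. It relies on kubectl wait, which was added in kubectl 1.11.

```bash
#!/usr/bin/env bash
# Hypothetical check: wait until every HBase Pod is Ready, then ask the
# master for a cluster summary via the HBase shell.
verify_hbase() {
  local pod
  for pod in hbase-master hbase-region-1 hbase-region-2 hbase-region-3; do
    kubectl wait --for=condition=Ready "pod/$pod" --timeout=180s || return 1
  done
  # 'status' is an HBase shell command; -i forwards the piped input to the shell
  printf 'status\n' | kubectl exec -i hbase-master -- /opt/hbase/bin/hbase shell
}
```

If all three RegionServers joined, the status summary should report them as live servers.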

The cluster is up!
