(Original) Stanford Machine Learning (by Andrew NG) --- (week 1) Linear Regression

Andrew NG's Machine Learning course is available at: https://www.coursera.org/course/ml

The Linear Regression part introduces several new terms that will come up frequently in later lessons (listed here with their Chinese names):

| Cost Function | Linear Regression | Gradient Descent | Normal Equation | Feature Scaling | Mean normalization |
| --- | --- | --- | --- | --- | --- |
| 损失函数 | 线性回归 | 梯度下降 | 正规方程 | 特征归一化 | 均值标准化 |

Model Representation

- m: number of training examples

- $x^{(i)}$: input (features) of the i-th training example

- $x_j^{(i)}$: value of feature j in the i-th training example

- $y^{(i)}$: “output” variable / “target” variable of the i-th training example

- n: number of features

- θ: parameters

- Hypothesis: $h_\theta(x) = \theta_0 + \theta_1 x_1 + \theta_2 x_2 + \dots + \theta_n x_n$ (with $x_0 = 1$, this is $\theta^T x$)
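As a minimal sketch (written in plain Python purely for illustration; the course homework itself uses Octave/MATLAB, and the function name `hypothesis` is hypothetical), the hypothesis is just the intercept $\theta_0$ plus a weighted sum of the n features:

```python
# Illustrative sketch in Python; `hypothesis` is a hypothetical helper name.
def hypothesis(theta, x):
    """h_theta(x) = theta_0 + theta_1*x_1 + ... + theta_n*x_n.

    theta has n+1 entries; x holds the n feature values (x_0 = 1 is implied).
    """
    if len(theta) != len(x) + 1:
        raise ValueError("theta must have exactly one more entry than x")
    return theta[0] + sum(t * xj for t, xj in zip(theta[1:], x))


# made-up parameters and one example with n = 2 features
print(hypothesis([1.0, 2.0, 3.0], [4.0, 5.0]))  # 1 + 2*4 + 3*5 = 24.0
```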

Cost Function

IDEA: Choose θ so that $h_\theta(x)$ is close to $y$ for our training examples $(x, y)$.

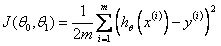

A. Cost Function for Linear Regression with One Variable

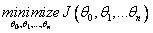

Cost Function:

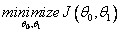

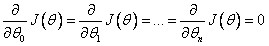

Goal: $\min_{\theta_0, \theta_1} J(\theta_0, \theta_1)$

Contour Plot:

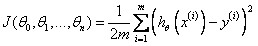
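The one-variable cost can be computed directly from its definition, $J(\theta_0, \theta_1) = \frac{1}{2m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2$ with $h_\theta(x) = \theta_0 + \theta_1 x$. A minimal Python sketch, assuming a hypothetical function name `cost_one_variable` and toy data lying exactly on y = x:

```python
# Illustrative sketch in Python; `cost_one_variable` is a hypothetical name.
def cost_one_variable(theta0, theta1, xs, ys):
    """J(theta0, theta1) = 1/(2m) * sum((theta0 + theta1*x_i - y_i)^2)."""
    m = len(xs)
    squared_errors = ((theta0 + theta1 * x - y) ** 2 for x, y in zip(xs, ys))
    return sum(squared_errors) / (2 * m)


xs = [1.0, 2.0, 3.0]
ys = [1.0, 2.0, 3.0]                         # toy data lying exactly on y = x
print(cost_one_variable(0.0, 1.0, xs, ys))   # 0.0: the line fits perfectly
print(cost_one_variable(0.0, 0.5, xs, ys))   # (0.25 + 1 + 2.25) / 6, about 0.583
```

Evaluating such a function over a grid of $(\theta_0, \theta_1)$ values is one way to produce a contour plot like the one above.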

B. Cost Function for Linear Regression with Multiple Variables

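Cost Function:

$$J(\theta) = J(\theta_0, \theta_1, \dots, \theta_n) = \frac{1}{2m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2$$

Goal: $\min_{\theta} J(\theta)$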
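With $x_0 = 1$ prepended to every example, the m training examples form an m × (n+1) design matrix X, and the same cost can be written in vectorized form as $J(\theta) = \frac{1}{2m}(X\theta - y)^T(X\theta - y)$. A dependency-free Python sketch of that form (all helper names are hypothetical, and the data are made up):

```python
# Illustrative sketch in Python; helper names are hypothetical.
def add_intercept(rows):
    """Prepend x_0 = 1 to every example, giving the m x (n+1) design matrix X."""
    return [[1.0] + list(r) for r in rows]


def mat_vec(X, theta):
    """Compute X * theta as a plain list (one prediction per training example)."""
    return [sum(a * b for a, b in zip(row, theta)) for row in X]


def cost_multi(X, y, theta):
    """J(theta) = 1/(2m) * (X theta - y)^T (X theta - y)."""
    m = len(y)
    residual = [p - t for p, t in zip(mat_vec(X, theta), y)]
    return sum(r * r for r in residual) / (2 * m)


X = add_intercept([[1.0, 2.0], [2.0, 0.0], [3.0, 1.0]])  # m = 3 examples, n = 2 features
y = [6.0, 5.0, 8.0]
print(cost_multi(X, y, [1.0, 2.0, 1.5]))  # only the last prediction is off, by 0.5: 0.25 / 6
```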

Gradient Descent

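Outline

Start with some θ (for example, all zeros); keep changing θ to reduce $J(\theta)$ until we hopefully end up at a minimum.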

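Gradient Descent Algorithm

repeat until convergence {

$$\theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta) \qquad (j = 0, 1, \dots, n)$$

}

For linear regression the partial derivative works out to

$$\theta_j := \theta_j - \alpha \frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)}$$

where all $\theta_j$ are updated simultaneously and $x_0^{(i)} = 1$.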

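The convergence of the iterations might look like this:

(This is a contour plot: the minimum lies at the center, and the blue curve shows a possible convergence path.)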
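The update rule can be sketched in plain Python as batch gradient descent. Assumptions: each row of X already starts with $x_0 = 1$, the toy data lie exactly on y = 1 + 2x, and `gradient_descent` is a hypothetical name (it mirrors the idea of the homework's gradientDescent.m but is not that file). The key detail is that every partial derivative is computed from the old θ before any $\theta_j$ changes:

```python
# Illustrative sketch in Python; `gradient_descent` is a hypothetical name.
def gradient_descent(X, y, theta, alpha, num_iters):
    """Batch gradient descent for linear regression.

    X: list of m rows, each already starting with x_0 = 1.
    y: list of m targets. Every step uses all m training examples.
    """
    m = len(y)
    theta = list(theta)
    for _ in range(num_iters):
        # errors h_theta(x^(i)) - y^(i), computed with the current theta
        errors = [sum(t * xj for t, xj in zip(theta, row)) - yi
                  for row, yi in zip(X, y)]
        # all partial derivatives come from the same old theta (simultaneous update)
        grad = [sum(e * row[j] for e, row in zip(errors, X)) / m
                for j in range(len(theta))]
        theta = [t - alpha * g for t, g in zip(theta, grad)]
    return theta


X = [[1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]   # x_0 = 1 plus one feature x_1
y = [3.0, 5.0, 7.0]                        # toy data lying exactly on y = 1 + 2 * x_1
theta = gradient_descent(X, y, [0.0, 0.0], alpha=0.1, num_iters=2000)
print(theta)  # close to [1.0, 2.0]
```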

Learning Rate α:

1) If α is too small, gradient descent can be slow to converge.

2) If α is too large, J(θ) may not decrease on every iteration, and gradient descent may not converge at all.

3) For sufficiently small α, J(θ) should decrease on every iteration.

Choosing the learning rate α: debug by plotting J(θ) against the number of iterations, and try values such as 0.001, 0.003, 0.006, 0.01, 0.03, 0.06, 0.1, 0.3, 0.6, 1.0.
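One way to follow this advice is to record J(θ) after every iteration for several candidate values of α and check whether it decreases on every step. A rough Python sketch on a toy data set (function names are hypothetical; the α values are a subset of the list above):

```python
# Illustrative sketch in Python; `cost` and `cost_history` are hypothetical names.
def cost(X, y, theta):
    """J(theta) = 1/(2m) * sum of squared errors; rows of X start with x_0 = 1."""
    m = len(y)
    return sum((sum(t * xj for t, xj in zip(theta, row)) - yi) ** 2
               for row, yi in zip(X, y)) / (2 * m)


def cost_history(X, y, alpha, num_iters):
    """Run batch gradient descent from theta = 0 and return J after every step."""
    m = len(y)
    theta = [0.0] * len(X[0])
    history = [cost(X, y, theta)]
    for _ in range(num_iters):
        errors = [sum(t * xj for t, xj in zip(theta, row)) - yi
                  for row, yi in zip(X, y)]
        grad = [sum(e * row[j] for e, row in zip(errors, X)) / m
                for j in range(len(theta))]
        theta = [t - alpha * g for t, g in zip(theta, grad)]
        history.append(cost(X, y, theta))
    return history


X = [[1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]   # x_0 = 1 plus one feature
y = [3.0, 5.0, 7.0]
for alpha in [0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1.0]:
    h = cost_history(X, y, alpha, 50)
    decreasing = all(b <= a for a, b in zip(h, h[1:]))
    print(f"alpha={alpha}: J after 50 iterations = {h[-1]:.4g}, "
          f"decreases every iteration: {decreasing}")
```

For this particular toy data set the smaller rates should decrease J on every iteration while α = 1.0 should make J grow; where that threshold lies depends on the data, so the usable range on real features will differ.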

“Batch” Gradient Descent: Each step of gradient descent uses all the training examples;

“Stochastic” gradient descent: Each step of gradient descent uses only one training example.
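A minimal sketch of the stochastic variant, where each update looks at a single example (Python; the function name, the fixed seed, and the per-epoch shuffling are illustrative assumptions, not taken from the course notes):

```python
import random


# Illustrative sketch in Python; `stochastic_gradient_descent` is a hypothetical name.
def stochastic_gradient_descent(X, y, theta, alpha, num_epochs, seed=0):
    """Stochastic gradient descent: each update uses one training example.

    X: rows starting with x_0 = 1; y: targets. One epoch = one pass over the data.
    """
    rng = random.Random(seed)      # fixed seed so runs are reproducible
    theta = list(theta)
    order = list(range(len(y)))
    for _ in range(num_epochs):
        rng.shuffle(order)         # visit the examples in a new random order each pass
        for i in order:
            error = sum(t * xj for t, xj in zip(theta, X[i])) - y[i]
            # update every theta_j using only example i
            theta = [t - alpha * error * xj for t, xj in zip(theta, X[i])]
    return theta


X = [[1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
y = [3.0, 5.0, 7.0]
theta = stochastic_gradient_descent(X, y, [0.0, 0.0], alpha=0.05, num_epochs=3000)
print(theta)  # close to [1.0, 2.0]
```

Because this toy data fits a line exactly, θ settles on the exact solution; with noisy data and a fixed α, stochastic gradient descent typically keeps wandering in a region near the minimum instead.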

Normal Equation

IDEA: Method to solve for θ analytically.

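Set $\frac{\partial}{\partial \theta_j} J(\theta) = 0$ for every j, then solve for $\theta_0, \theta_1, \dots, \theta_n$. With the design matrix X (m × (n+1), one training example per row, $x_0 = 1$) and the target vector y, the solution is

$$\theta = (X^T X)^{-1} X^T y$$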

Restriction: the normal equation does not work when $X^T X$ is non-invertible.

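PS: A square matrix is invertible when it has full rank. For $X^T X$ this means the columns of X (the features) are linearly independent, and the number of linearly independent rows (training examples) is at least the number of columns (n + 1, including $x_0$), i.e. greater than the number of features n.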
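A sketch of the normal equation in plain Python. Rather than forming $(X^T X)^{-1}$ explicitly, it solves the linear system $(X^T X)\theta = X^T y$ by Gaussian elimination with partial pivoting, which yields the same θ whenever $X^T X$ is invertible; the function name and the 1e-12 singularity threshold are assumptions. The second call duplicates a feature column, so the columns of X are linearly dependent and $X^T X$ is singular:

```python
# Illustrative sketch in Python; `normal_equation` is a hypothetical name.
def normal_equation(X, y):
    """Solve (X^T X) theta = X^T y, i.e. theta = (X^T X)^{-1} X^T y.

    Uses Gaussian elimination with partial pivoting instead of an explicit inverse.
    Raises ValueError when X^T X is (numerically) singular.
    """
    k = len(X[0])                                            # n + 1 columns
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    b = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    M = [A[i] + [b[i]] for i in range(k)]                    # augmented matrix [A | b]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("X^T X is singular: redundant features or m <= n")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, k):                          # eliminate below the pivot
            f = M[r][col] / M[col][col]
            M[r] = [a - f * c for a, c in zip(M[r], M[col])]
    theta = [0.0] * k
    for i in reversed(range(k)):                             # back substitution
        theta[i] = (M[i][k] - sum(M[i][j] * theta[j] for j in range(i + 1, k))) / M[i][i]
    return theta


print(normal_equation([[1.0, 1.0], [1.0, 2.0], [1.0, 3.0]], [3.0, 5.0, 7.0]))  # [1.0, 2.0]

try:  # the second and third columns are identical, so X^T X cannot be inverted
    normal_equation([[1.0, 2.0, 2.0], [1.0, 3.0, 3.0], [1.0, 5.0, 5.0]], [1.0, 2.0, 3.0])
except ValueError as err:
    print(err)
```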

Gradient Descent Algorithm VS. Normal Equation

Gradient Descent:

- Need to choose α;

- Needs many iterations;

- Works well even when n is large (roughly n > 1000);

Normal Equation:

- No need to choose α;

- Don’t need to iterate;

- Needs to compute $(X^T X)^{-1}$;

- Slow if n is very large (n < 1000 is OK).

Feature Scaling

IDEA: Make sure features are on a similar scale.

Benefit: fewer iterations are needed, so gradient descent converges faster.

Example: if we aim to get every feature into approximately the range $-1 \le x_i \le 1$, features whose values fall within [-3, 3] or [-1/3, 1/3] are still acceptable.

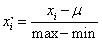

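Mean normalization: replace each feature $x_i$ (but not $x_0 = 1$) with

$$x_i := \frac{x_i - \mu_i}{s_i}$$

where $\mu_i$ is the average value of feature i over the training set and $s_i$ is its range (max - min) or its standard deviation, so that the features have approximately zero mean.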
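A minimal Python sketch of mean normalization for one feature column, using the range max - min as $s_i$ (the standard deviation would work as well; the house sizes are made-up example values and the function name is hypothetical). It also returns $\mu$ and $s$, because new inputs must be scaled with the same values before making a prediction:

```python
# Illustrative sketch in Python; `mean_normalize` is a hypothetical name.
def mean_normalize(column):
    """Return [(x - mu) / s for x in column], plus mu and s, with s = max - min."""
    mu = sum(column) / len(column)
    s = max(column) - min(column)
    if s == 0:
        raise ValueError("constant feature: its range is zero")
    return [(x - mu) / s for x in column], mu, s


sizes = [1000.0, 1500.0, 2000.0, 3000.0]   # made-up house sizes in square feet
scaled, mu, s = mean_normalize(sizes)
print(mu, s)    # 1875.0 2000.0
print(scaled)   # [-0.4375, -0.1875, 0.0625, 0.5625]: mean 0, all within [-1, 1]
```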

HOMEWORK

Now that we have watched the lectures, let's do the homework. Below is the core code for the Linear Regression exercises:

1. computeCost.m / computeCostMulti.m

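```matlab
m = length(y);                              % number of training examples
J = 1/(2*m) * sum((theta'*X' - y').^2);     % J(theta) = 1/(2m) * sum of squared errors
```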

2. gradientDescent.m / gradientDescentMulti.m

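```matlab
for iter = 1:num_iters
    h = X*theta - y;        % m x 1 vector of errors h_theta(x^(i)) - y^(i)
    v = X'*h;               % (n+1) x 1: sum over i of error_i * x^(i)
    v = v*alpha/m;          % scale by learning rate alpha and 1/m
    theta = theta - v;      % update all theta_j simultaneously
    J_history(iter) = computeCost(X, y, theta);   % computeCostMulti in gradientDescentMulti.m
end
```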
