Fixing "FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask" when running statements at the hive> prompt in the Hive shell (illustrated walkthrough)

Straight to the point.

How you fix this depends on whether your Hive and HBase are both CDH builds, or whether one is an Apache build and the other a CDH build.

Problem details

[kfk@bigdata-pro01 apache-hive-1.0.-bin]$ bin/hive

Logging initialized using configuration in file:/opt/modules/apache-hive-1.0.-bin/conf/hive-log4j.properties

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/modules/hadoop-2.6./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/modules/apache-hive-1.0.-bin/lib/hive-jdbc-1.0.-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

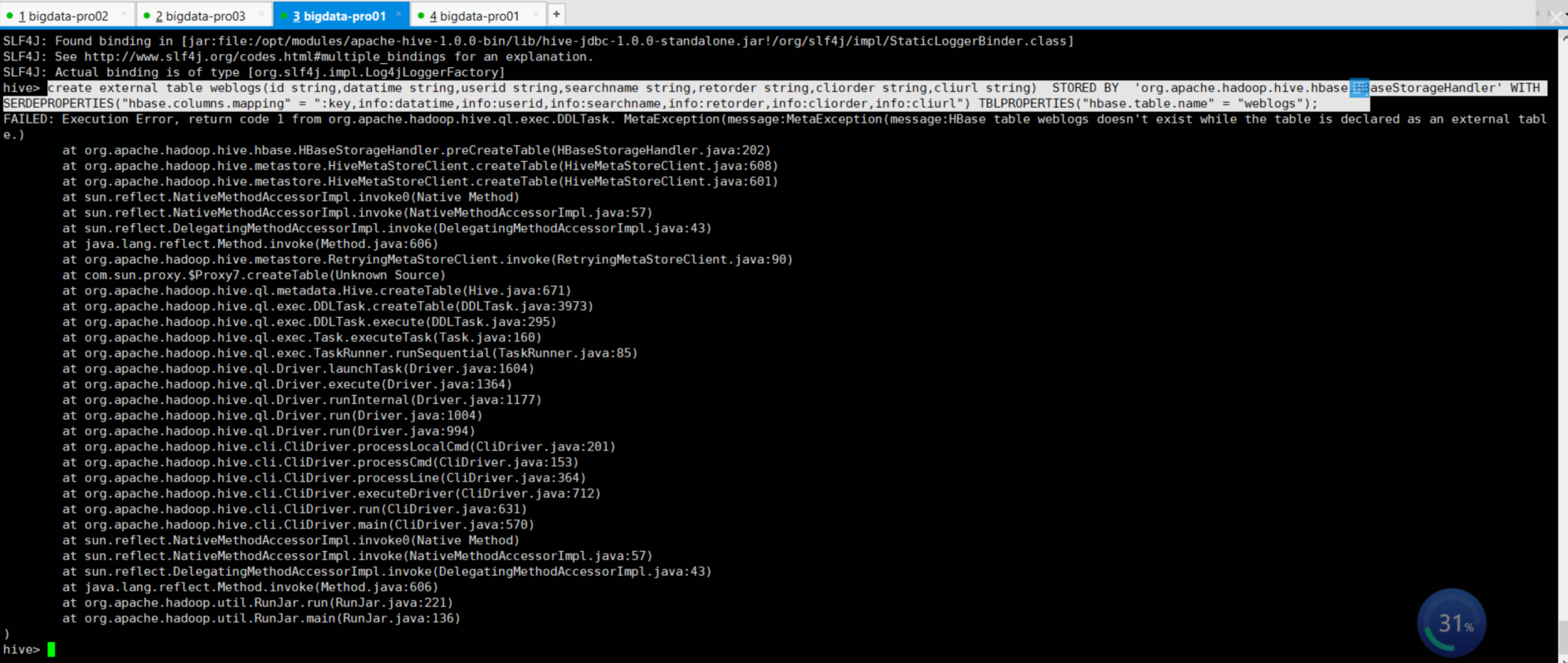

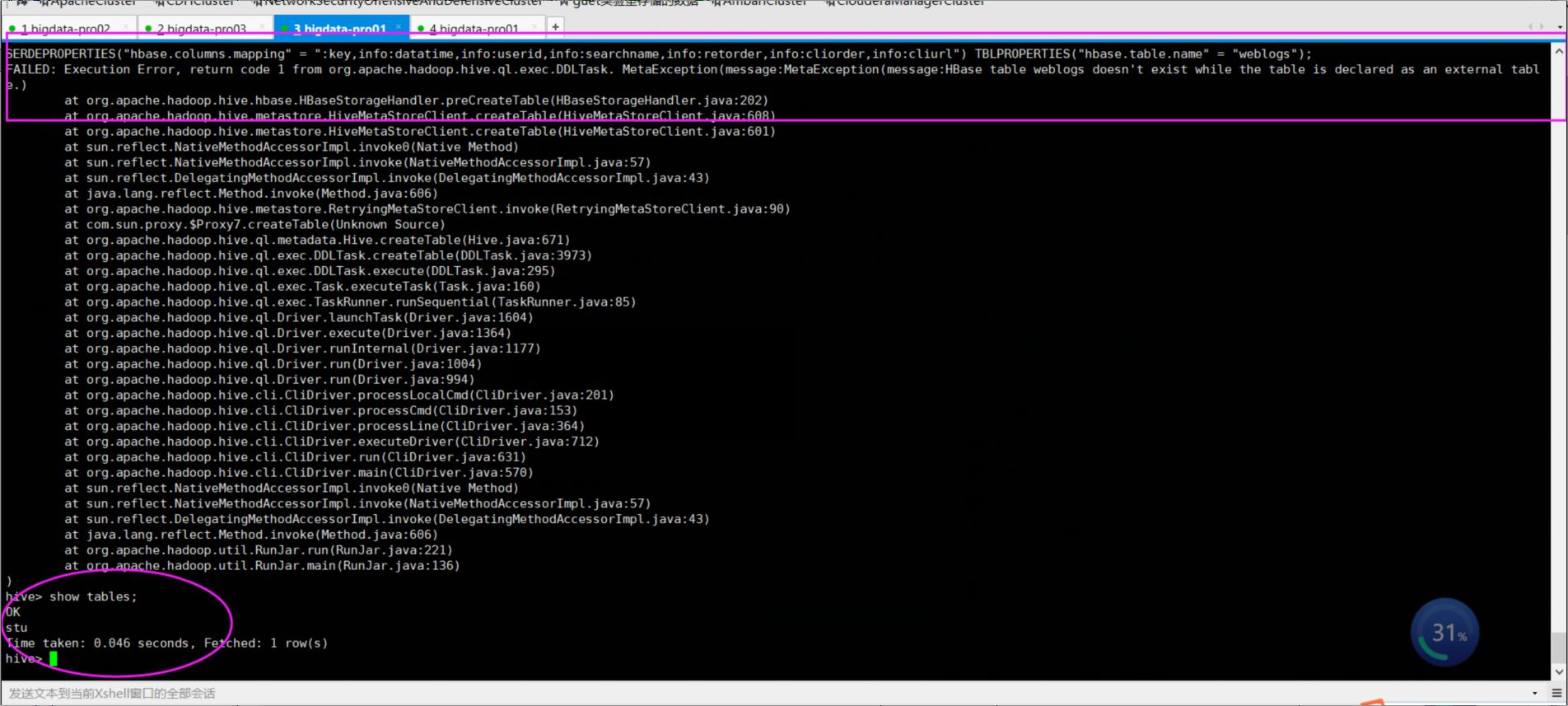

hive> create external table weblogs(id string,datatime string,userid string,searchname string,retorder string,cliorder string,cliurl string) STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler' WITH SERDEPROPERTIES("hbase.columns.mapping" = ":key,info:datatime,info:userid,info:searchname,info:retorder,info:cliorder,info:cliurl") TBLPROPERTIES("hbase.table.name" = "weblogs");

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaException(message:MetaException(message:HBase table weblogs doesn't exist while the table is declared as an external table.)

at org.apache.hadoop.hive.hbase.HBaseStorageHandler.preCreateTable(HBaseStorageHandler.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:)

at com.sun.proxy.$Proxy7.createTable(Unknown Source)

at org.apache.hadoop.hive.ql.metadata.Hive.createTable(Hive.java:)

at org.apache.hadoop.hive.ql.exec.DDLTask.createTable(DDLTask.java:)

at org.apache.hadoop.hive.ql.exec.DDLTask.execute(DDLTask.java:)

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:)

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:)

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:)

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:)

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:)

at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:)

at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:)

at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.hadoop.util.RunJar.run(RunJar.java:)

at org.apache.hadoop.util.RunJar.main(RunJar.java:)

)

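The statement failed: Hive reports that the HBase table weblogs does not exist, so the external table was not created.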

Solution when Hive and HBase are both CDH builds

First, restart Hive with the following command so that debug output goes to the console:

./hive -hiveconf hive.root.logger=DEBUG,console

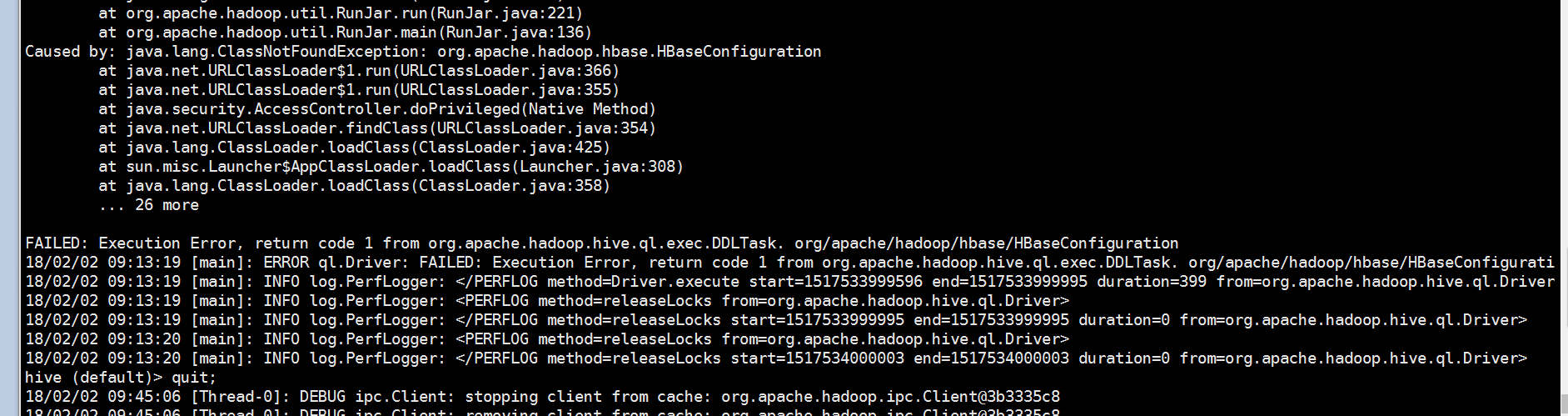

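The debug output shows the cause:

Hive fails to load the HBase jars correctly, so the HBase configuration file cannot be loaded.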

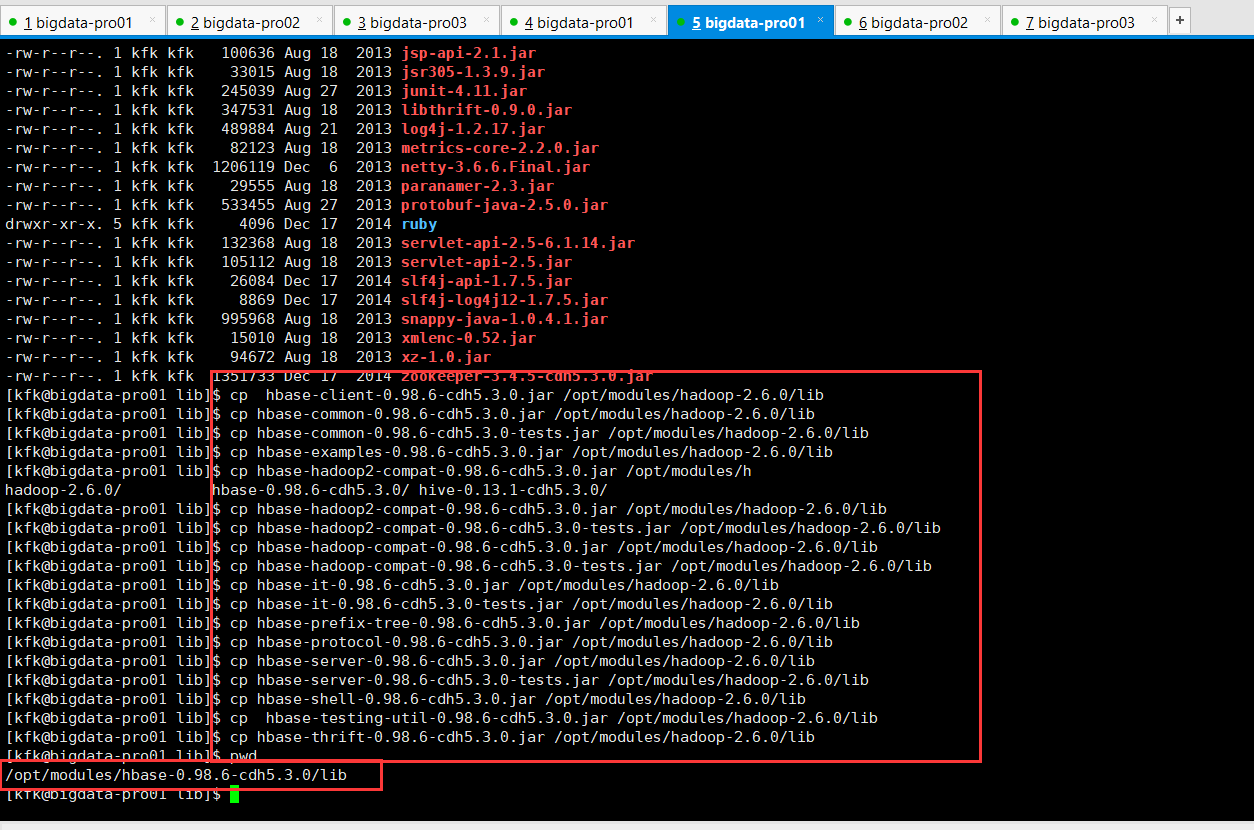

Copy the jars from HBase's lib directory into hadoop/lib. (To be safe, I did this on all three nodes.)

[kfk@bigdata-pro01 lib]$ cp hbase-client-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ cp hbase-common-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ cp hbase-common-0.98.-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ cp hbase-examples-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ cp hbase-hadoop2-compat-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ cp hbase-hadoop2-compat-0.98.-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ cp hbase-hadoop-compat-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ cp hbase-hadoop-compat-0.98.-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ cp hbase-it-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ cp hbase-it-0.98.-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ cp hbase-prefix-tree-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ cp hbase-protocol-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ cp hbase-server-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ cp hbase-server-0.98.-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ cp hbase-shell-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ cp hbase-testing-util-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ cp hbase-thrift-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro01 lib]$ pwd

/opt/modules/hbase-0.98.-cdh5.3.0/lib

[kfk@bigdata-pro01 lib]$

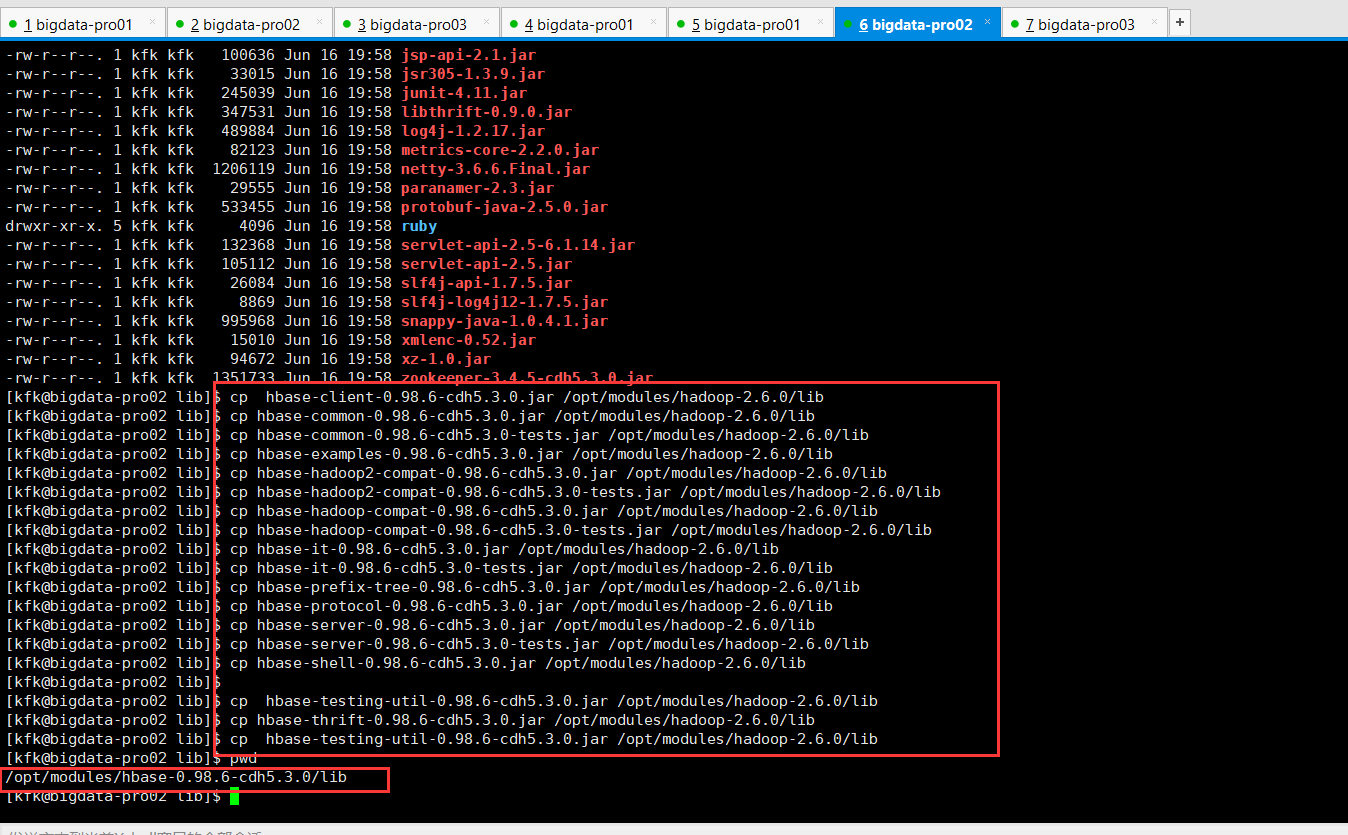

[kfk@bigdata-pro02 lib]$ cp hbase-client-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ cp hbase-common-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ cp hbase-common-0.98.-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ cp hbase-examples-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ cp hbase-hadoop2-compat-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ cp hbase-hadoop2-compat-0.98.-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ cp hbase-hadoop-compat-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ cp hbase-hadoop-compat-0.98.-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ cp hbase-it-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ cp hbase-it-0.98.-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ cp hbase-prefix-tree-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ cp hbase-protocol-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ cp hbase-server-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ cp hbase-server-0.98.-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ cp hbase-shell-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$

[kfk@bigdata-pro02 lib]$ cp hbase-testing-util-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ cp hbase-thrift-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ cp hbase-testing-util-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro02 lib]$ pwd

/opt/modules/hbase-0.98.-cdh5.3.0/lib

[kfk@bigdata-pro02 lib]$

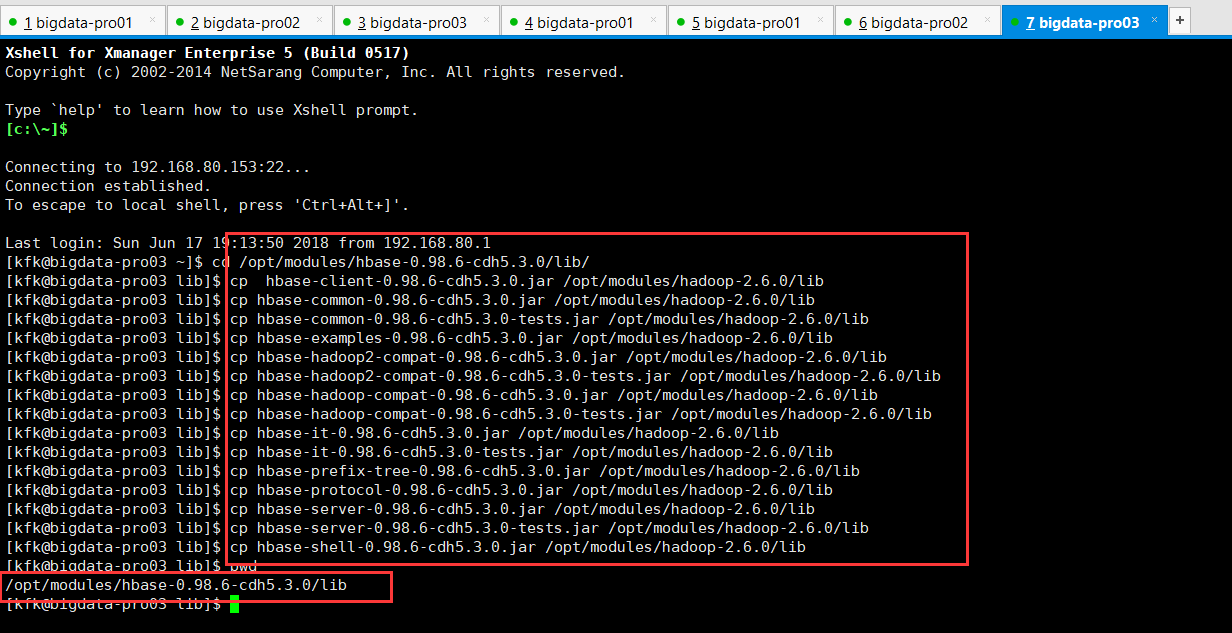

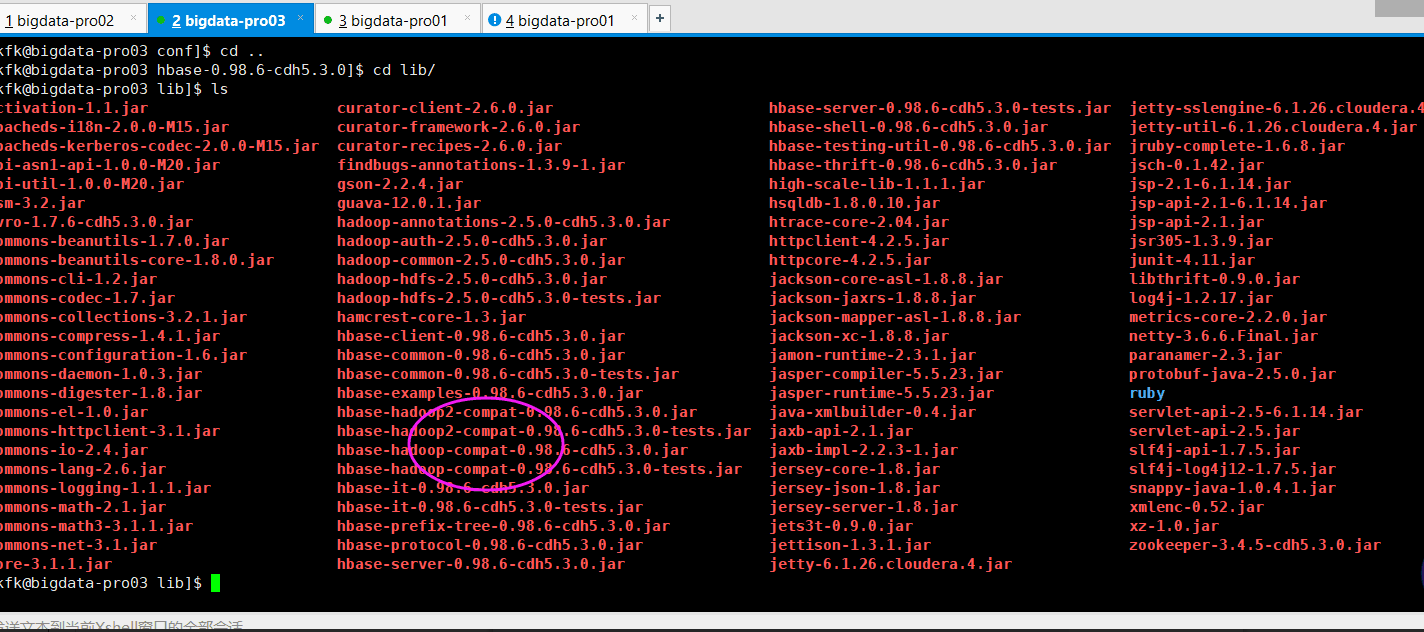

[kfk@bigdata-pro03 ~]$ cd /opt/modules/hbase-0.98.-cdh5.3.0/lib/

[kfk@bigdata-pro03 lib]$ cp hbase-client-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro03 lib]$ cp hbase-common-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro03 lib]$ cp hbase-common-0.98.-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro03 lib]$ cp hbase-examples-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro03 lib]$ cp hbase-hadoop2-compat-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro03 lib]$ cp hbase-hadoop2-compat-0.98.-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro03 lib]$ cp hbase-hadoop-compat-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro03 lib]$ cp hbase-hadoop-compat-0.98.-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro03 lib]$ cp hbase-it-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro03 lib]$ cp hbase-it-0.98.-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro03 lib]$ cp hbase-prefix-tree-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro03 lib]$ cp hbase-protocol-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro03 lib]$ cp hbase-server-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro03 lib]$ cp hbase-server-0.98.-cdh5.3.0-tests.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro03 lib]$ cp hbase-shell-0.98.-cdh5.3.0.jar /opt/modules/hadoop-2.6./lib

[kfk@bigdata-pro03 lib]$ pwd

/opt/modules/hbase-0.98.-cdh5.3.0/lib

[kfk@bigdata-pro03 lib]$

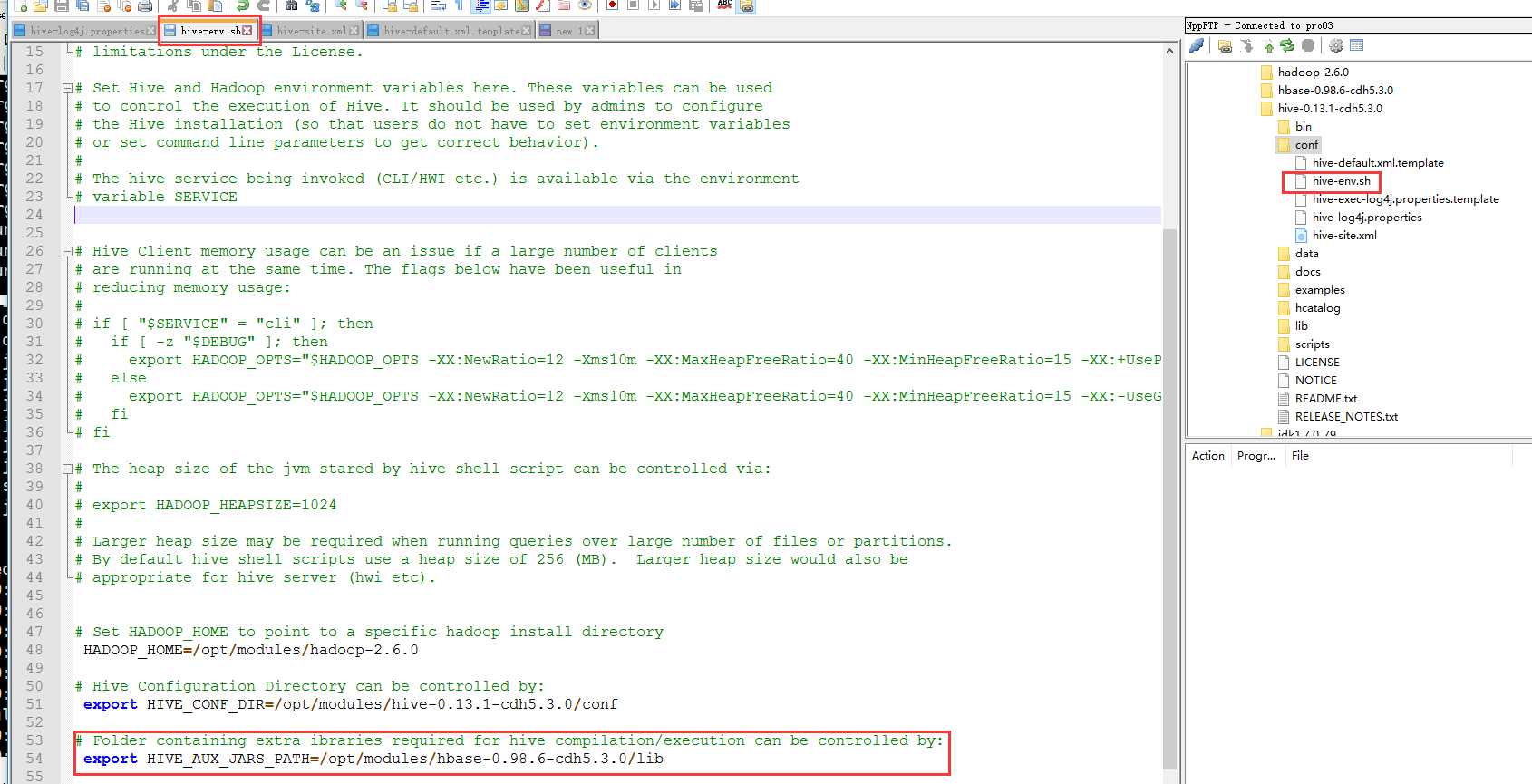
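The per-jar cp commands above could be collapsed into a loop. The sketch below is a hypothetical helper (copy_hbase_jars is not part of Hadoop or HBase). It assumes every file matching hbase-*.jar in the source directory should be copied, which is slightly broader than what the transcript copies on some nodes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy every hbase-*.jar from one lib directory to another.
# Usage: copy_hbase_jars <hbase-lib-dir> <target-lib-dir>
copy_hbase_jars() {
    local src="$1" dest="$2" jar count=0
    # Refuse to run if the source directory does not exist.
    [ -d "$src" ] || { echo "source directory not found: $src" >&2; return 1; }
    mkdir -p "$dest" || return 1
    for jar in "$src"/hbase-*.jar; do
        # If the glob matched nothing, $jar is the literal pattern; skip it.
        [ -e "$jar" ] || continue
        cp "$jar" "$dest"/ || return 1
        count=$((count + 1))
    done
    echo "copied $count jar(s) to $dest"
}
```

On each node it would be called with the two directories from the transcript, for example `copy_hbase_jars /opt/modules/hbase-0.98.-cdh5.3.0/lib /opt/modules/hadoop-2.6./lib`.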

Then edit Hive's configuration file so that the jars in HBase's lib directory take effect.

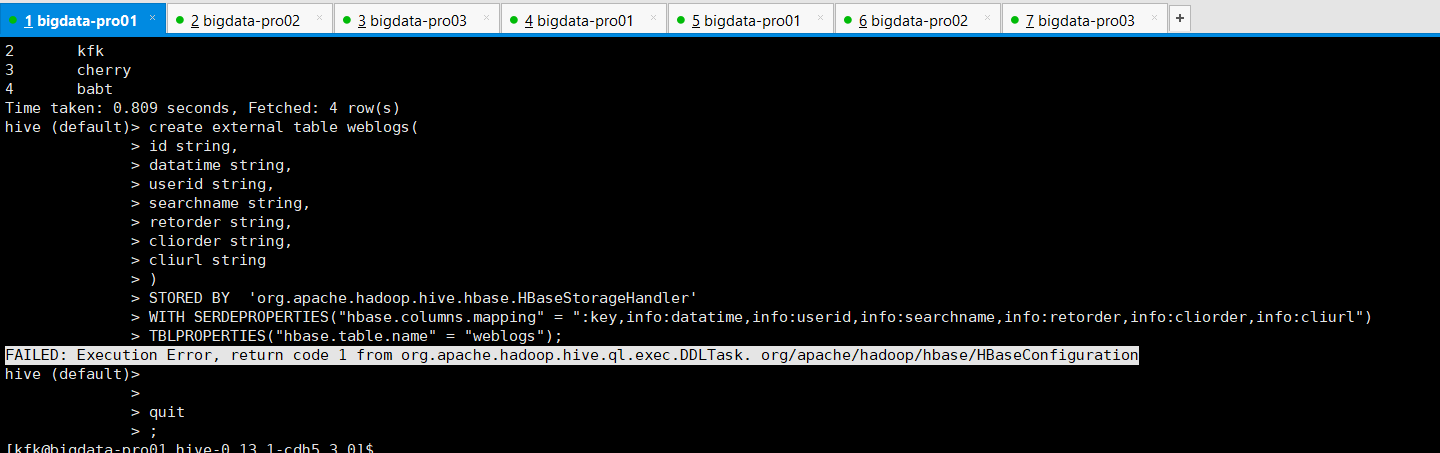

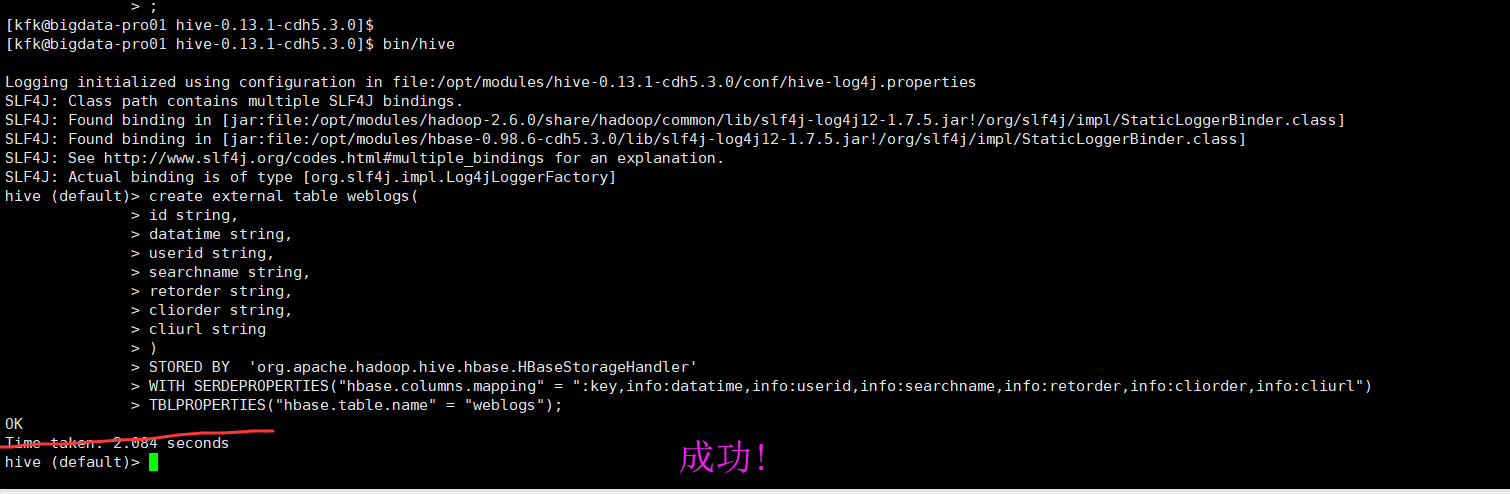
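The screenshots are not reproduced as text, so the exact setting used is not known here. One common way to put HBase's jars on Hive's classpath is the HIVE_AUX_JARS_PATH variable in conf/hive-env.sh. The fragment below is a sketch under that assumption and may differ from what the screenshots show. The HBase path is the one from the transcript.

```shell
# conf/hive-env.sh (sketch; an assumption, not a transcription of the screenshots)
# HBase install directory as it appears in the transcript; adjust to your installation.
export HBASE_HOME=/opt/modules/hbase-0.98.-cdh5.3.0
# Directory of extra jars that the hive launcher script adds to Hive's classpath.
export HIVE_AUX_JARS_PATH=$HBASE_HOME/lib
```

Pointing the variable at the HBase lib directory avoids listing each jar by name, at the cost of pulling in jars Hive does not strictly need.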

Restart Hive and create the table again:

[kfk@bigdata-pro01 hive-0.13.-cdh5.3.0]$ bin/hive

Logging initialized using configuration in file:/opt/modules/hive-0.13.-cdh5.3.0/conf/hive-log4j.properties

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/modules/hadoop-2.6./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/modules/hbase-0.98.-cdh5.3.0/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

hive (default)> create external table weblogs(

> id string,

> datatime string,

> userid string,

> searchname string,

> retorder string,

> cliorder string,

> cliurl string

> )

> STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

> WITH SERDEPROPERTIES("hbase.columns.mapping" = ":key,info:datatime,info:userid,info:searchname,info:retorder,info:cliorder,info:cliurl")

> TBLPROPERTIES("hbase.table.name" = "weblogs");

OK

Time taken: 2.084 seconds

hive (default)>

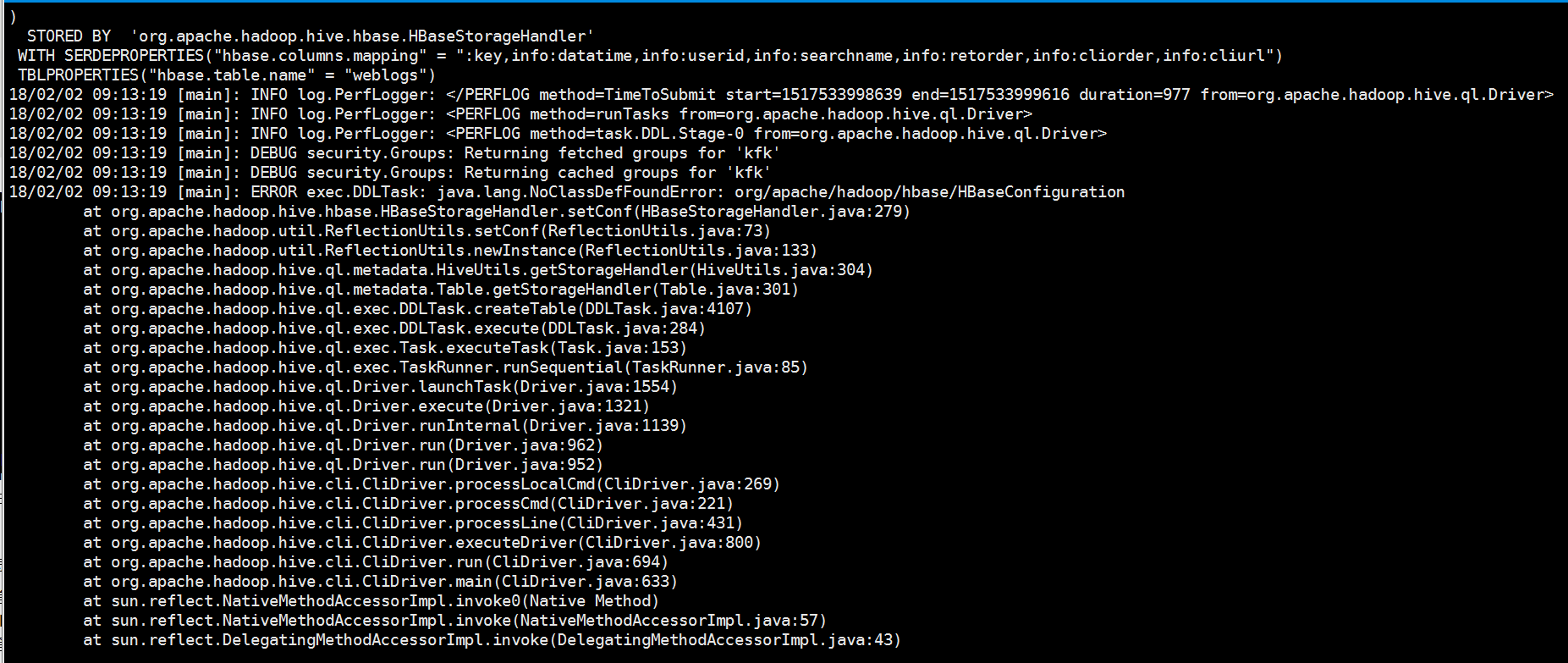

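Solution when Hive and HBase are not both CDH builds

(2) Try restarting the HBase service.

(3) Don't check only Hive; look at your HBase processes as well.

Check time synchronization, the firewall, whether MySQL is running, whether hive-site.xml is configured correctly, and whether permissions have been granted correctly.

The java.lang.RuntimeException: HRegionServer Aborted problem

The problem of an HRegionServer disappearing on some node after HBase starts on a distributed cluster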

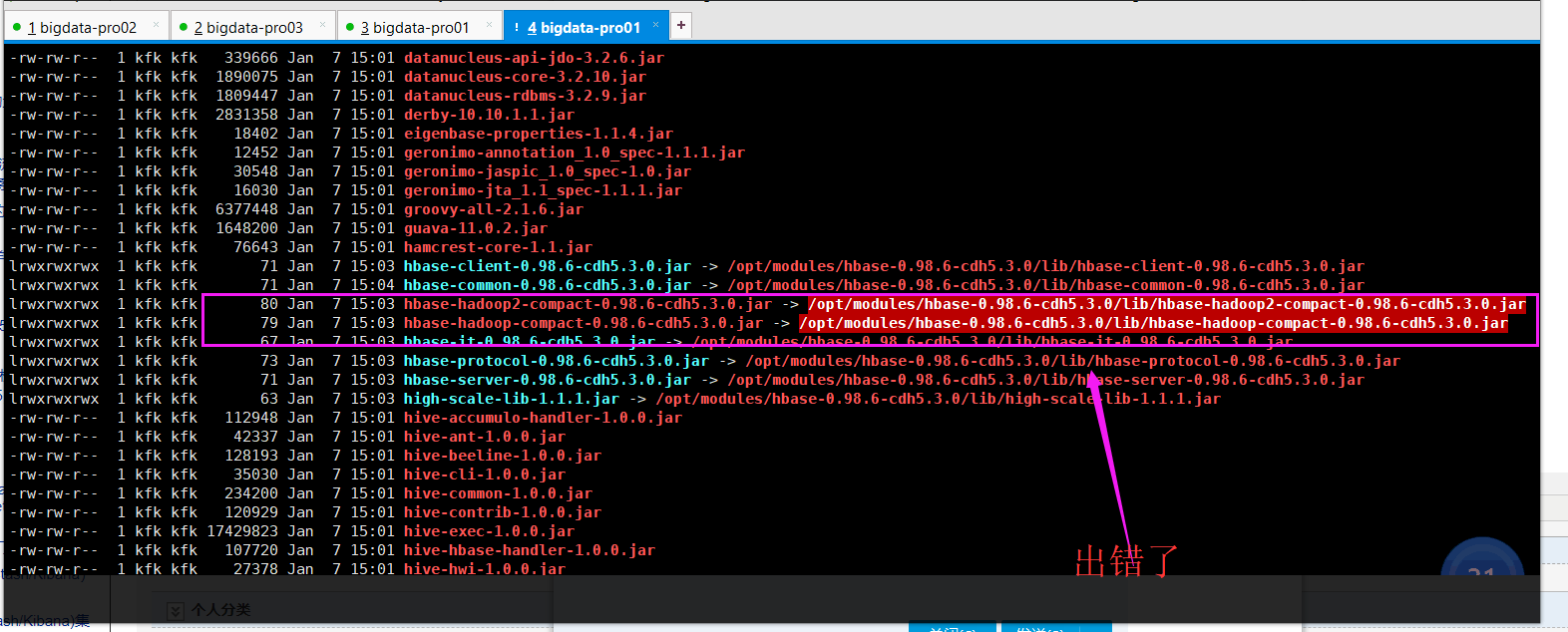

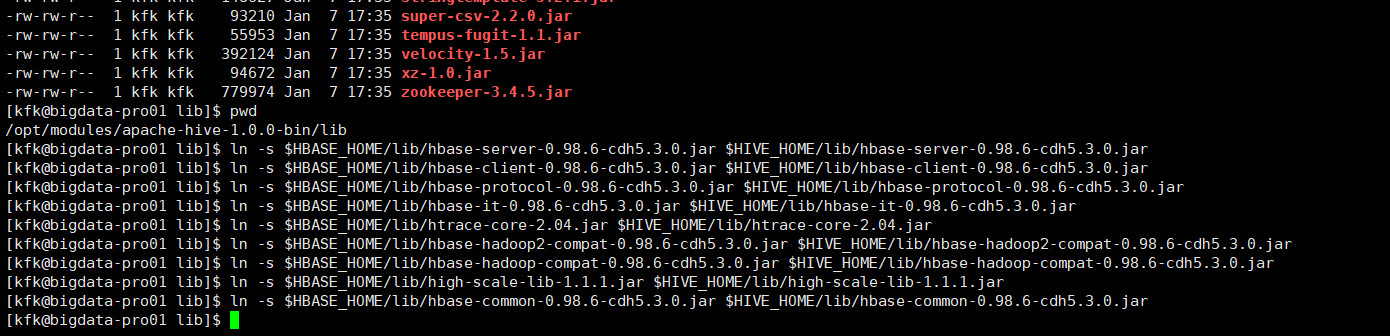

(4) Jars are missing: something went wrong while integrating Hive with HBase.

Official documentation: https://cwiki.apache.org/confluence/display/Hive/HBaseIntegration

export HBASE_HOME=/opt/modules/hbase-0.98.-cdh5.3.0

export HIVE_HOME=/opt/modules/apache-hive-1.0.-bin

ln -s $HBASE_HOME/lib/hbase-server-0.98.-cdh5.3.0.jar $HIVE_HOME/lib/hbase-server-0.98.-cdh5.3.0.jar

ln -s $HBASE_HOME/lib/hbase-client-0.98.-cdh5.3.0.jar $HIVE_HOME/lib/hbase-client-0.98.-cdh5.3.0.jar

ln -s $HBASE_HOME/lib/hbase-protocol-0.98.-cdh5.3.0.jar $HIVE_HOME/lib/hbase-protocol-0.98.-cdh5.3.0.jar

ln -s $HBASE_HOME/lib/hbase-it-0.98.-cdh5.3.0.jar $HIVE_HOME/lib/hbase-it-0.98.-cdh5.3.0.jar

ln -s $HBASE_HOME/lib/htrace-core-2.04.jar $HIVE_HOME/lib/htrace-core-2.04.jar

ln -s $HBASE_HOME/lib/hbase-hadoop2-compat-0.98.-cdh5.3.0.jar $HIVE_HOME/lib/hbase-hadoop2-compat-0.98.-cdh5.3.0.jar

ln -s $HBASE_HOME/lib/hbase-hadoop-compat-0.98.-cdh5.3.0.jar $HIVE_HOME/lib/hbase-hadoop-compat-0.98.-cdh5.3.0.jar

ln -s $HBASE_HOME/lib/high-scale-lib-1.1..jar $HIVE_HOME/lib/high-scale-lib-1.1..jar

ln -s $HBASE_HOME/lib/hbase-common-0.98.-cdh5.3.0.jar $HIVE_HOME/lib/hbase-common-0.98.-cdh5.3.0.jar

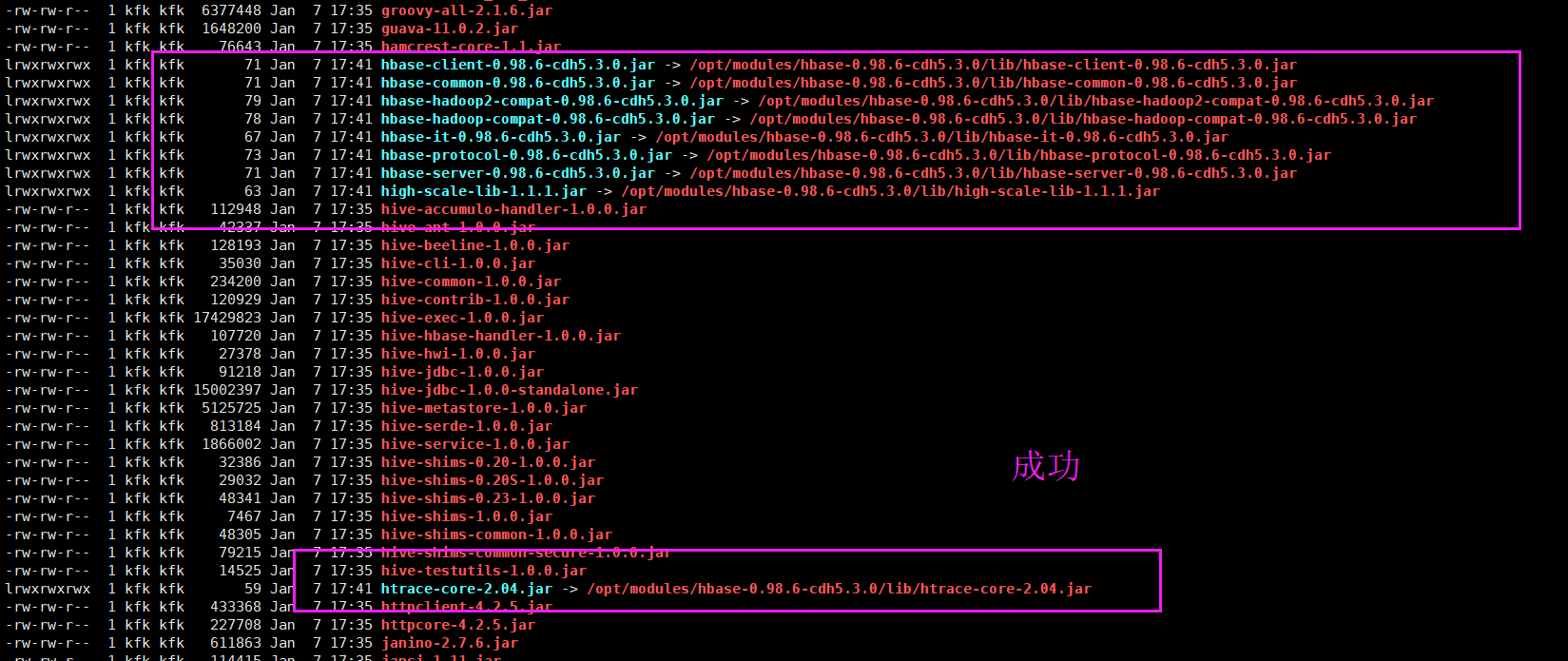

[kfk@bigdata-pro01 lib]$ ll

total

-rw-rw-r-- kfk kfk Jan : accumulo-core-1.6..jar

-rw-rw-r-- kfk kfk Jan : accumulo-fate-1.6..jar

-rw-rw-r-- kfk kfk Jan : accumulo-start-1.6..jar

-rw-rw-r-- kfk kfk Jan : accumulo-trace-1.6..jar

-rw-rw-r-- kfk kfk Jan : activation-1.1.jar

-rw-rw-r-- kfk kfk Jan : ant-1.9..jar

-rw-rw-r-- kfk kfk Jan : ant-launcher-1.9..jar

-rw-rw-r-- kfk kfk Jan : antlr-2.7..jar

-rw-rw-r-- kfk kfk Jan : antlr-runtime-3.4.jar

-rw-rw-r-- kfk kfk Jan : asm-commons-3.1.jar

-rw-rw-r-- kfk kfk Jan : asm-tree-3.1.jar

-rw-rw-r-- kfk kfk Jan : avro-1.7..jar

-rw-rw-r-- kfk kfk Jan : bonecp-0.8..RELEASE.jar

-rw-rw-r-- kfk kfk Jan : calcite-avatica-0.9.-incubating.jar

-rw-rw-r-- kfk kfk Jan : calcite-core-0.9.-incubating.jar

-rw-rw-r-- kfk kfk Jan : commons-beanutils-1.7..jar

-rw-rw-r-- kfk kfk Jan : commons-beanutils-core-1.8..jar

-rw-rw-r-- kfk kfk Jan : commons-cli-1.2.jar

-rw-rw-r-- kfk kfk Jan : commons-codec-1.4.jar

-rw-rw-r-- kfk kfk Jan : commons-collections-3.2..jar

-rw-rw-r-- kfk kfk Jan : commons-compiler-2.7..jar

-rw-rw-r-- kfk kfk Jan : commons-compress-1.4..jar

-rw-rw-r-- kfk kfk Jan : commons-configuration-1.6.jar

-rw-rw-r-- kfk kfk Jan : commons-dbcp-1.4.jar

-rw-rw-r-- kfk kfk Jan : commons-digester-1.8.jar

-rw-rw-r-- kfk kfk Jan : commons-httpclient-3.0..jar

-rw-rw-r-- kfk kfk Jan : commons-io-2.4.jar

-rw-rw-r-- kfk kfk Jan : commons-lang-2.6.jar

-rw-rw-r-- kfk kfk Jan : commons-logging-1.1..jar

-rw-rw-r-- kfk kfk Jan : commons-math-2.1.jar

-rw-rw-r-- kfk kfk Jan : commons-pool-1.5..jar

-rw-rw-r-- kfk kfk Jan : commons-vfs2-2.0.jar

-rw-rw-r-- kfk kfk Jan : curator-client-2.6..jar

-rw-rw-r-- kfk kfk Jan : curator-framework-2.6..jar

-rw-rw-r-- kfk kfk Jan : datanucleus-api-jdo-3.2..jar

-rw-rw-r-- kfk kfk Jan : datanucleus-core-3.2..jar

-rw-rw-r-- kfk kfk Jan : datanucleus-rdbms-3.2..jar

-rw-rw-r-- kfk kfk Jan : derby-10.10.1.1.jar

-rw-rw-r-- kfk kfk Jan : eigenbase-properties-1.1..jar

-rw-rw-r-- kfk kfk Jan : geronimo-annotation_1.0_spec-1.1..jar

-rw-rw-r-- kfk kfk Jan : geronimo-jaspic_1.0_spec-1.0.jar

-rw-rw-r-- kfk kfk Jan : geronimo-jta_1.1_spec-1.1..jar

-rw-rw-r-- kfk kfk Jan : groovy-all-2.1..jar

-rw-rw-r-- kfk kfk Jan : guava-11.0..jar

-rw-rw-r-- kfk kfk Jan : hamcrest-core-1.1.jar

lrwxrwxrwx kfk kfk Jan : hbase-client-0.98.-cdh5.3.0.jar -> /opt/modules/hbase-0.98.-cdh5.3.0/lib/hbase-client-0.98.-cdh5.3.0.jar

lrwxrwxrwx kfk kfk Jan : hbase-common-0.98.-cdh5.3.0.jar -> /opt/modules/hbase-0.98.-cdh5.3.0/lib/hbase-common-0.98.-cdh5.3.0.jar

lrwxrwxrwx kfk kfk Jan : hbase-hadoop2-compat-0.98.-cdh5.3.0.jar -> /opt/modules/hbase-0.98.-cdh5.3.0/lib/hbase-hadoop2-compat-0.98.-cdh5.3.0.jar

lrwxrwxrwx kfk kfk Jan : hbase-hadoop-compat-0.98.-cdh5.3.0.jar -> /opt/modules/hbase-0.98.-cdh5.3.0/lib/hbase-hadoop-compat-0.98.-cdh5.3.0.jar

lrwxrwxrwx kfk kfk Jan : hbase-it-0.98.-cdh5.3.0.jar -> /opt/modules/hbase-0.98.-cdh5.3.0/lib/hbase-it-0.98.-cdh5.3.0.jar

lrwxrwxrwx kfk kfk Jan : hbase-protocol-0.98.-cdh5.3.0.jar -> /opt/modules/hbase-0.98.-cdh5.3.0/lib/hbase-protocol-0.98.-cdh5.3.0.jar

lrwxrwxrwx kfk kfk Jan : hbase-server-0.98.-cdh5.3.0.jar -> /opt/modules/hbase-0.98.-cdh5.3.0/lib/hbase-server-0.98.-cdh5.3.0.jar

lrwxrwxrwx kfk kfk Jan : high-scale-lib-1.1..jar -> /opt/modules/hbase-0.98.-cdh5.3.0/lib/high-scale-lib-1.1..jar

-rw-rw-r-- kfk kfk Jan : hive-accumulo-handler-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-ant-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-beeline-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-cli-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-common-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-contrib-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-exec-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-hbase-handler-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-hwi-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-jdbc-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-jdbc-1.0.-standalone.jar

-rw-rw-r-- kfk kfk Jan : hive-metastore-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-serde-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-service-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-shims-0.20-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-shims-.20S-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-shims-0.23-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-shims-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-shims-common-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-shims-common-secure-1.0..jar

-rw-rw-r-- kfk kfk Jan : hive-testutils-1.0..jar

lrwxrwxrwx kfk kfk Jan : htrace-core-2.04.jar -> /opt/modules/hbase-0.98.-cdh5.3.0/lib/htrace-core-2.04.jar

-rw-rw-r-- kfk kfk Jan : httpclient-4.2..jar

-rw-rw-r-- kfk kfk Jan : httpcore-4.2..jar

-rw-rw-r-- kfk kfk Jan : janino-2.7..jar

-rw-rw-r-- kfk kfk Jan : jansi-1.11.jar

-rw-rw-r-- kfk kfk Jan : jcommander-1.32.jar

-rw-rw-r-- kfk kfk Jan : jdo-api-3.0..jar

-rw-rw-r-- kfk kfk Jan : jetty-all-7.6..v20120127.jar

-rw-rw-r-- kfk kfk Jan : jetty-all-server-7.6..v20120127.jar

-rw-rw-r-- kfk kfk Jan : jline-0.9..jar

-rw-rw-r-- kfk kfk Jan : jpam-1.1.jar

-rw-rw-r-- kfk kfk Jan : jsr305-1.3..jar

-rw-rw-r-- kfk kfk Jan : jta-1.1.jar

-rw-rw-r-- kfk kfk Jan : junit-4.11.jar

-rw-rw-r-- kfk kfk Jan : libfb303-0.9..jar

-rw-rw-r-- kfk kfk Jan : libthrift-0.9..jar

-rw-rw-r-- kfk kfk Jan : linq4j-0.4.jar

-rw-rw-r-- kfk kfk Jan : log4j-1.2..jar

-rw-rw-r-- kfk kfk Jan : mail-1.4..jar

-rw-rw-r-- kfk kfk Jan : maven-scm-api-1.4.jar

-rw-rw-r-- kfk kfk Jan : maven-scm-provider-svn-commons-1.4.jar

-rw-rw-r-- kfk kfk Jan : maven-scm-provider-svnexe-1.4.jar

-rw-rw-r-- kfk kfk Jan : mysql-connector-java-5.1..jar

-rw-rw-r-- kfk kfk Jan : opencsv-2.3.jar

-rw-rw-r-- kfk kfk Jan : oro-2.0..jar

-rw-rw-r-- kfk kfk Jan : paranamer-2.3.jar

-rw-rw-r-- kfk kfk Jan : pentaho-aggdesigner-algorithm-5.1.-jhyde.jar

drwxrwxr-x kfk kfk Jan : php

-rw-rw-r-- kfk kfk Jan : plexus-utils-1.5..jar

drwxrwxr-x kfk kfk Jan : py

-rw-rw-r-- kfk kfk Jan : quidem-0.1..jar

-rw-rw-r-- kfk kfk Jan : regexp-1.3.jar

-rw-rw-r-- kfk kfk Jan : servlet-api-2.5.jar

-rw-rw-r-- kfk kfk Jan : snappy-java-1.0..jar

-rw-rw-r-- kfk kfk Jan : ST4-4.0..jar

-rw-rw-r-- kfk kfk Jan : stax-api-1.0..jar

-rw-rw-r-- kfk kfk Jan : stringtemplate-3.2..jar

-rw-rw-r-- kfk kfk Jan : super-csv-2.2..jar

-rw-rw-r-- kfk kfk Jan : tempus-fugit-1.1.jar

-rw-rw-r-- kfk kfk Jan : velocity-1.5.jar

-rw-rw-r-- kfk kfk Jan : xz-1.0.jar

-rw-rw-r-- kfk kfk Jan : zookeeper-3.4..jar

[kfk@bigdata-pro01 lib]$

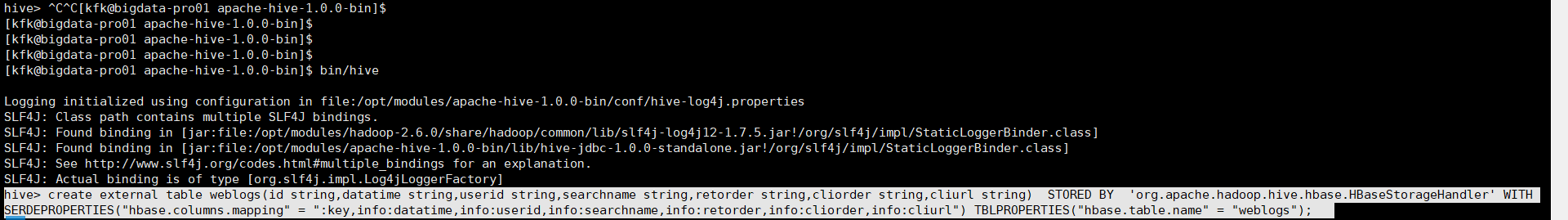
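The nine ln -s commands above can also be sketched as a loop over jar name prefixes. link_hbase_jars is a hypothetical helper, not something shipped with Hive or HBase. It assumes both directories are given as absolute paths (so the links resolve from anywhere) and that each jar name is the prefix followed by a version number. Jars ending in -tests.jar are skipped, since the original commands do not link them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: symlink the HBase jars used for Hive-HBase integration
# from the HBase lib directory into the Hive lib directory.
# Usage: link_hbase_jars <absolute-hbase-lib-dir> <absolute-hive-lib-dir>
link_hbase_jars() {
    local hbase_lib="$1" hive_lib="$2" prefix jar
    # Same nine jars as the ln -s commands in the text, identified by name prefix.
    for prefix in hbase-server hbase-client hbase-protocol hbase-it \
                  hbase-hadoop2-compat hbase-hadoop-compat hbase-common \
                  htrace-core high-scale-lib; do
        for jar in "$hbase_lib/$prefix"-[0-9]*.jar; do
            # If the glob matched nothing, report the missing jar and move on.
            [ -e "$jar" ] || { echo "missing: $prefix" >&2; continue; }
            # Skip test jars such as hbase-common-<version>-tests.jar.
            case "$jar" in *-tests.jar) continue ;; esac
            ln -sf "$jar" "$hive_lib/$(basename "$jar")"
        done
    done
    return 0
}
```

With the variables exported earlier it would be called as `link_hbase_jars "$HBASE_HOME/lib" "$HIVE_HOME/lib"`. The -f flag replaces an existing link of the same name, so the helper can be rerun safely.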

Then run the statement again:

[kfk@bigdata-pro01 apache-hive-1.0.-bin]$ bin/hive

Logging initialized using configuration in file:/opt/modules/apache-hive-1.0.-bin/conf/hive-log4j.properties

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/modules/hadoop-2.6./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/modules/apache-hive-1.0.-bin/lib/hive-jdbc-1.0.-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

hive> create external table weblogs(

> id string,

> datatime string,

> userid string,

> searchname string,

> retorder string,

> cliorder string,

> cliurl string

> )

> STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

> WITH SERDEPROPERTIES("hbase.columns.mapping" = ":key,info:datatime,info:userid,info:searchname,info:retorder,info:cliorder,info:cliurl")

> TBLPROPERTIES("hbase.table.name" = "weblogs");

Or, as a single line:

[kfk@bigdata-pro01 apache-hive-1.0.-bin]$ bin/hive

Logging initialized using configuration in file:/opt/modules/apache-hive-1.0.-bin/conf/hive-log4j.properties

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/modules/hadoop-2.6./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/modules/apache-hive-1.0.-bin/lib/hive-jdbc-1.0.-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

hive> create external table weblogs(id string,datatime string,userid string,searchname string,retorder string,cliorder string,cliurl string) STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler' WITH SERDEPROPERTIES("hbase.columns.mapping" = ":key,info:datatime,info:userid,info:searchname,info:retorder,info:cliorder,info:cliurl") TBLPROPERTIES("hbase.table.name" = "weblogs");

The statements above create a Hive external table integrated with HBase.

You can also follow my personal blogs:

http://www.cnblogs.com/zlslch/, http://www.cnblogs.com/lchzls/ and http://www.cnblogs.com/sunnyDream/

For details, see: http://www.cnblogs.com/zlslch/p/7473861.html
