Spark Random Forest in Practice

package big.data.analyse.ml.randomforest

import org.apache.spark.ml.Pipeline

import org.apache.spark.ml.classification.{RandomForestClassificationModel, RandomForestClassifier}

import org.apache.spark.ml.evaluation.MulticlassClassificationEvaluator

import org.apache.spark.ml.feature.{IndexToString, VectorIndexer, StringIndexer}

import org.apache.spark.sql.SparkSession

/**

* Random forest

* Created by zhen on 2018/9/20.

*/

object RandomForest {

def main(args: Array[String]): Unit = {

// Create the SparkSession

val spark = SparkSession.builder()

.appName("RandomForest")

.master("local[2]")

.getOrCreate()

// Load the data (libsvm format)

val data = spark.read.format("libsvm")

.load("src/big/data/analyse/ml/randomforest/randomforest.txt")

// Index the label column, fitting on the whole dataset so every label is seen

val labelIndexer = new StringIndexer()

.setInputCol("label")

.setOutputCol("indexedLabel")

.fit(data)

// Index the feature vector: features with at most 4 distinct values are treated as categorical

val featureIndexer = new VectorIndexer()

.setInputCol("features")

.setOutputCol("indexedFeatures")

.setMaxCategories(4)

.fit(data)

// Split the data into training and test sets (7:3)

val Array(trainingData, testData) = data.randomSplit(Array(0.7, 0.3))

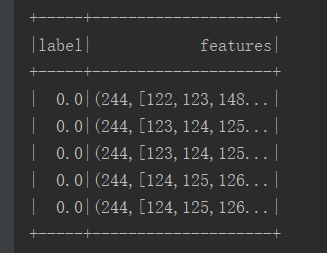

testData.show(5)

// Create the model

val randomForest = new RandomForestClassifier()

.setLabelCol("indexedLabel")

.setFeaturesCol("indexedFeatures")

.setNumTrees(10)

// Convert predicted indices back to the original labels

val labelConverter = new IndexToString()

.setInputCol("prediction")

.setOutputCol("predictedLabel")

.setLabels(labelIndexer.labels)

// Chain the indexers, the random forest, and the label converter in a Pipeline

val pipeline = new Pipeline()

.setStages(Array(labelIndexer, featureIndexer, randomForest, labelConverter))

// Train the model

val model = pipeline.fit(trainingData)

// Predict on the test set

val predictions = model.transform(testData)

// Show the predictions

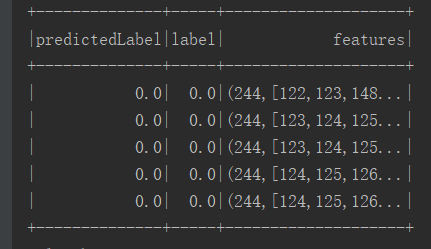

predictions.select("predictedLabel", "label", "features").show(5)

// Create the evaluator and compute the test error

val evaluator = new MulticlassClassificationEvaluator()

.setLabelCol("indexedLabel")

.setPredictionCol("prediction")

.setMetricName("accuracy")

val accuracy = evaluator.evaluate(predictions)

println("test error = " + (1.0 - accuracy))

// The random forest is the third pipeline stage (index 2)

val rfModel = model.stages(2).asInstanceOf[RandomForestClassificationModel]

println("learned classification forest model:\n" + rfModel.toDebugString)

spark.stop()

}

}
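The evaluator step at the end of the program reports `1.0 - accuracy` as the test error. The following is a minimal plain-Scala sketch of that arithmetic, not Spark's implementation. The object name `TestErrorSketch` and the 32-row test set are hypothetical, chosen only because 11 errors out of 32 rows reproduce the 0.34375 shown in the results; the actual size of the test split is not stated in the post.

```scala
object TestErrorSketch {
  // Accuracy = fraction of rows whose predicted label equals the true label.
  def accuracy(labels: Seq[Double], predictions: Seq[Double]): Double = {
    require(labels.nonEmpty && labels.length == predictions.length,
      "inputs must be non-empty and of equal length")
    val correct = labels.zip(predictions).count { case (l, p) => l == p }
    correct.toDouble / labels.length
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical test set: 32 rows, the first 11 of them misclassified.
    val labels = Seq.fill(32)(1.0)
    val predictions = Seq.fill(11)(0.0) ++ Seq.fill(21)(1.0)
    val acc = accuracy(labels, predictions)
    println("test error = " + (1.0 - acc)) // prints "test error = 0.34375"
  }
}
```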

Data used (libsvm format: a label followed by index:value pairs):

0 128:51 129:159 130:253 131:159 132:50 155:48 156:238 157:252 158:252

1 159:124 160:253 161:255 162:63 186:96 187:244 188:251 189:253 190:62

1 125:145 126:255 127:211 128:31 152:32 153:237 154:253 155:252 156:71

1 153:5 154:63 155:197 181:20 182:254 183:230 184:24 209:20 210:254

1 152:1 153:168 154:242 155:28 180:10 181:228 182:254 183:100 209:190

0 130:64 131:253 132:255 133:63 157:96 158:205 159:251 160:253 161:205

1 159:121 160:254 161:136 186:13 187:230 188:253 189:248 190:99 213:4

1 100:166 101:222 102:55 128:197 129:254 130:218 131:5 155:29 156:249

0 155:53 156:255 157:253 158:253 159:253 160:124 183:180 184:253 185:25

0 128:73 129:253 130:227 131:73 132:21 156:73 157:251 158:251 159:251

1 155:178 156:255 157:105 182:6 183:188 184:253 185:216 186:14 210:14

0 154:46 155:105 156:254 157:254 158:254 159:254 160:255 161:239 162:41

0 152:56 153:105 154:220 155:254 156:63 178:18 179:166 180:233 181:253

1 130:7 131:176 132:254 133:224 158:51 159:253 160:253 161:223 185:4

0 155:21 156:176 157:253 158:253 159:124 182:105 183:176 184:251 185:25

1 151:68 152:45 153:131 154:131 155:131 156:101 157:68 158:92 159:44

0 125:29 126:170 127:255 128:255 129:141 151:29 152:198 153:255 154:255

0 153:203 154:254 155:252 156:252 157:252 158:214 159:51 160:20 180:62

1 98:64 99:191 100:70 125:68 126:243 127:253 128:249 129:63 152:30

1 125:26 126:240 127:72 153:25 154:238 155:208 182:209 183:226 184:14

0 155:62 156:91 157:213 158:255 159:228 160:91 161:12 182:70 183:230

1 157:42 158:228 159:253 160:253 185:144 186:251 187:251 188:251 212:89

1 128:62 129:254 130:213 156:102 157:253 158:252 159:102 160:20 184:102

0 154:28 155:195 156:254 157:254 158:254 159:254 160:254 161:255 162:61

0 123:8 124:76 125:202 126:254 127:255 128:163 129:37 130:2 150:13

0 127:68 128:254 129:255 130:254 131:107 153:11 154:176 155:230 156:253

1 157:85 158:255 159:103 160:1 185:205 186:253 187:253 188:30 213:205

1 126:94 127:132 154:250 155:250 156:4 182:250 183:254 184:95 210:250

1 124:32 125:253 126:31 152:32 153:251 154:149 180:32 181:251 182:188

1 129:39 130:254 131:255 132:254 133:140 157:136 158:253 159:253 160:22

0 123:59 124:55 149:71 150:192 151:254 152:250 153:147 154:17 176:123

1 128:58 129:139 156:247 157:247 158:25 183:121 184:253 185:156 186:3

1 129:28 130:247 131:255 132:165 156:47 157:221 158:252 159:252 160:164

0 156:13 157:6 181:10 182:77 183:145 184:253 185:190 186:67 207:11

0 127:28 128:164 129:254 130:233 131:148 132:11 154:3 155:164 156:254

0 129:105 130:255 131:219 132:67 133:67 134:52 156:20 157:181 158:253

0 125:22 126:183 127:252 128:254 129:252 130:252 131:252 132:76 151:85

1 155:114 156:206 157:25 183:238 184:252 185:55 211:222 212:252 213:55

1 127:73 128:253 129:253 130:63 155:115 156:252 157:252 158:144 183:217

1 120:85 121:253 122:132 123:9 147:82 148:241 149:251 150:251 151:128

1 126:15 127:200 128:255 129:90 154:42 155:254 156:254 157:173 182:42

0 182:32 183:57 184:57 185:57 186:57 187:57 188:57 189:57 208:67 209:18

0 127:42 128:235 129:255 130:84 153:15 154:132 155:208 156:253 157:253

1 156:202 157:253 158:69 184:253 185:252 186:121 212:253 213:252 214:69

1 156:73 157:253 158:253 159:253 160:124 184:73 185:251 186:251 187:251

1 124:111 125:255 126:48 152:162 153:253 154:237 155:63 180:206 181:253

0 99:70 100:255 101:165 102:114 127:122 128:253 129:253 130:253 131:120

1 124:29 125:197 126:255 127:84 152:85 153:251 154:253 155:83 180:86

1 159:31 160:210 161:253 162:163 187:198 188:252 189:252 190:162 213:10

1 131:159 132:255 133:122 158:167 159:228 160:253 161:121 185:64 186:23

0 153:92 154:191 155:178 156:253 157:242 158:141 159:104 160:29 180:26

1 128:53 129:250 130:255 131:25 156:167 157:253 158:253 159:25 182:3

0 122:63 123:176 124:253 125:253 126:159 127:113 128:63 150:140 151:253

0 153:12 154:136 155:254 156:255 157:195 158:115 159:3 180:6 181:175

1 128:255 129:253 130:57 156:253 157:251 158:225 159:56 183:169 184:254

0 151:23 152:167 153:208 154:254 155:255 156:129 157:19 179:151 180:253

1 130:24 131:150 132:233 133:38 156:14 157:89 158:253 159:254 160:254

0 125:120 126:253 127:253 128:63 151:38 152:131 153:246 154:252 155:252

1 127:155 128:253 129:126 155:253 156:251 157:141 158:4 183:253 184:251

0 101:88 102:127 103:5 126:19 127:58 128:20 129:14 130:217 131:19 152:7

0 127:37 128:141 129:156 130:156 131:194 132:194 133:47 153:11 154:132

0 154:32 155:134 156:218 157:254 158:254 159:254 160:217 161:84 176:44

1 124:102 125:252 126:252 127:41 152:102 153:250 154:250 155:202 180:10

0 124:20 125:121 126:197 127:253 128:64 151:23 152:200 153:252 154:252

1 127:20 128:254 129:255 130:37 155:19 156:253 157:253 158:134 183:19

0 235:40 236:37 238:7 239:77 240:137 241:136 242:136 243:136 244:136

1 128:166 129:255 130:187 131:6 156:165 157:253 158:253 159:13 183:15

1 128:117 129:128 155:2 156:199 157:127 183:81 184:254 185:87 211:116

1 129:159 130:142 156:11 157:220 158:141 184:78 185:254 186:141 212:111

0 124:66 125:254 126:254 127:58 128:60 129:59 130:59 131:50 151:73

1 129:101 130:222 131:84 157:225 158:252 159:84 184:89 185:246 186:208

0 124:41 125:254 126:254 127:157 128:34 129:34 130:218 131:255 132:206

0 96:56 97:247 98:121 124:24 125:242 126:245 127:122 153:231 154:253

0 125:19 126:164 127:253 128:255 129:253 130:118 131:59 132:36 153:78

1 129:232 130:255 131:107 156:58 157:244 158:253 159:106 184:95 185:253

1 127:63 128:128 129:2 155:63 156:254 157:123 183:63 184:254 185:179

1 130:131 131:255 132:184 133:15 157:99 158:247 159:253 160:182 161:15

0 125:57 126:255 127:253 128:198 129:85 153:168 154:253 155:251 156:253

0 127:12 128:105 129:224 130:255 131:247 132:22 155:131 156:254 157:254

1 130:226 131:247 132:55 157:99 158:248 159:254 160:230 161:30 185:125

1 130:166 131:253 132:124 133:53 158:140 159:251 160:251 161:180 185:12

1 129:17 130:206 131:229 132:44 157:2 158:125 159:254 160:123 185:95

1 130:218 131:253 132:124 157:84 158:236 159:251 160:251 184:63 185:236

1 124:102 125:180 126:1 152:140 153:254 154:130 180:140 181:254 182:204

0 128:87 129:208 130:249 155:27 156:212 157:254 158:195 182:118 183:225

1 126:134 127:230 154:133 155:231 156:10 182:133 183:253 184:96 210:133

1 125:29 126:85 127:255 128:139 153:197 154:251 155:253 156:251 181:254

1 125:149 126:255 127:254 128:58 153:215 154:253 155:183 156:2 180:41

1 130:79 131:203 132:141 157:51 158:240 159:240 160:140 185:88 186:252

1 126:94 127:254 128:75 154:166 155:253 156:231 182:208 183:253 184:147

0 127:46 128:105 129:254 130:254 131:224 132:59 133:59 134:9 155:196

1 125:42 126:232 127:254 128:58 153:86 154:253 155:253 156:58 181:86

1 156:60 157:229 158:38 184:187 185:254 186:78 211:121 212:252 213:254

1 101:11 102:150 103:72 129:37 130:251 131:71 157:63 158:251 159:71

0 127:45 128:254 129:254 130:254 131:148 132:24 133:9 154:43 155:254

0 125:218 126:253 127:253 128:255 129:149 130:62 151:42 152:144 153:236

0 127:60 128:96 129:96 130:48 153:16 154:171 155:228 156:253 157:251

0 126:32 127:202 128:255 129:253 130:253 131:175 132:21 152:84 153:144

1 130:218 131:170 132:108 157:32 158:227 159:252 160:232 185:129 186:25

1 130:116 131:255 132:123 157:29 158:213 159:253 160:122 185:189 186:25
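Each row above is a label followed by sparse `index:value` pairs. The sketch below shows how one such line can be parsed in plain Scala. It is an illustration, not Spark's reader, and `LibsvmLineSketch` and `parse` are hypothetical names. Indices in the file are 1-based, and Spark's libsvm loader converts them to 0-based, which is why the feature numbers in the tree output are one less than the indices in the file.

```scala
object LibsvmLineSketch {
  // Parse "label idx:value idx:value ..." into (label, List((zeroBasedIndex, value))).
  def parse(line: String): (Double, List[(Int, Double)]) = {
    val tokens = line.trim.split("\\s+")
    val label = tokens.head.toDouble
    val features = tokens.tail.toList.map { token =>
      val Array(index, value) = token.split(":")
      (index.toInt - 1, value.toDouble) // file indices are 1-based
    }
    (label, features)
  }

  def main(args: Array[String]): Unit = {
    val (label, features) = parse("0 128:51 129:159 130:253")
    println(label)    // prints 0.0
    println(features) // prints List((127,51.0), (128,159.0), (129,253.0))
  }
}
```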

Results (test set and predictions):

Internal decision tree structure:

test error = 0.34375

learned classification forest model:

RandomForestClassificationModel (uid=rfc_0487ba2e1907) with 10 trees

Tree 0 (weight 1.0):

If (feature 185 <= 0.0)

If (feature 157 <= 253.0)

If (feature 149 <= 0.0)

If (feature 210 in {3.0})

Predict: 0.0

Else (feature 210 not in {3.0})

If (feature 208 in {2.0})

Predict: 0.0

Else (feature 208 not in {2.0})

Predict: 0.0

Else (feature 149 > 0.0)

Predict: 1.0

Else (feature 157 > 253.0)

Predict: 1.0

Else (feature 185 > 0.0)

If (feature 160 <= 0.0)

If (feature 180 <= 0.0)

Predict: 0.0

Else (feature 180 > 0.0)

Predict: 1.0

Else (feature 160 > 0.0)

Predict: 0.0

Tree 1 (weight 1.0):

If (feature 156 <= 253.0)

If (feature 187 <= 0.0)

If (feature 133 in {2.0})

Predict: 1.0

Else (feature 133 not in {2.0})

If (feature 100 <= 11.0)

If (feature 128 <= 139.0)

Predict: 0.0

Else (feature 128 > 139.0)

Predict: 1.0

Else (feature 100 > 11.0)

Predict: 1.0

Else (feature 187 > 0.0)

Predict: 0.0

Else (feature 156 > 253.0)

Predict: 1.0

Tree 2 (weight 1.0):

If (feature 158 <= 51.0)

If (feature 182 <= 0.0)

If (feature 127 <= 58.0)

If (feature 129 <= 142.0)

If (feature 154 <= 253.0)

Predict: 0.0

Else (feature 154 > 253.0)

Predict: 1.0

Else (feature 129 > 142.0)

Predict: 1.0

Else (feature 127 > 58.0)

Predict: 1.0

Else (feature 182 > 0.0)

Predict: 0.0

Else (feature 158 > 51.0)

If (feature 127 <= 62.0)

Predict: 0.0

Else (feature 127 > 62.0)

Predict: 1.0

Tree 3 (weight 1.0):

If (feature 100 <= 11.0)

If (feature 127 <= 0.0)

If (feature 151 <= 162.0)

If (feature 159 <= 0.0)

If (feature 125 <= 48.0)

Predict: 0.0

Else (feature 125 > 48.0)

Predict: 0.0

Else (feature 159 > 0.0)

Predict: 0.0

Else (feature 151 > 162.0)

Predict: 1.0

Else (feature 127 > 0.0)

If (feature 131 <= 0.0)

If (feature 153 <= 42.0)

Predict: 0.0

Else (feature 153 > 42.0)

If (feature 154 <= 228.0)

Predict: 1.0

Else (feature 154 > 228.0)

Predict: 0.0

Else (feature 131 > 0.0)

Predict: 1.0

Else (feature 100 > 11.0)

Predict: 1.0

Tree 4 (weight 1.0):

If (feature 152 <= 0.0)

If (feature 158 <= 0.0)

If (feature 151 <= 0.0)

Predict: 1.0

Else (feature 151 > 0.0)

Predict: 0.0

Else (feature 158 > 0.0)

If (feature 182 <= 15.0)

If (feature 153 <= 0.0)

Predict: 0.0

Else (feature 153 > 0.0)

Predict: 1.0

Else (feature 182 > 15.0)

Predict: 1.0

Else (feature 152 > 0.0)

If (feature 124 <= 0.0)

Predict: 1.0

Else (feature 124 > 0.0)

If (feature 123 <= 24.0)

If (feature 125 <= 232.0)

Predict: 0.0

Else (feature 125 > 232.0)

Predict: 1.0

Else (feature 123 > 24.0)

Predict: 0.0

Tree 5 (weight 1.0):

If (feature 157 <= 0.0)

If (feature 101 <= 0.0)

If (feature 129 <= 0.0)

If (feature 183 <= 0.0)

If (feature 152 <= 231.0)

Predict: 0.0

Else (feature 152 > 231.0)

Predict: 0.0

Else (feature 183 > 0.0)

Predict: 0.0

Else (feature 129 > 0.0)

Predict: 1.0

Else (feature 101 > 0.0)

Predict: 1.0

Else (feature 157 > 0.0)

If (feature 155 <= 165.0)

Predict: 0.0

Else (feature 155 > 165.0)

Predict: 1.0

Tree 6 (weight 1.0):

If (feature 153 <= 253.0)

If (feature 125 <= 240.0)

If (feature 158 <= 3.0)

If (feature 182 <= 0.0)

If (feature 179 <= 6.0)

Predict: 1.0

Else (feature 179 > 6.0)

Predict: 0.0

Else (feature 182 > 0.0)

If (feature 128 <= 139.0)

Predict: 0.0

Else (feature 128 > 139.0)

Predict: 0.0

Else (feature 158 > 3.0)

If (feature 155 <= 58.0)

Predict: 0.0

Else (feature 155 > 58.0)

If (feature 175 in {1.0})

Predict: 1.0

Else (feature 175 not in {1.0})

Predict: 0.0

Else (feature 125 > 240.0)

If (feature 129 <= 0.0)

If (feature 154 <= 0.0)

Predict: 1.0

Else (feature 154 > 0.0)

Predict: 0.0

Else (feature 129 > 0.0)

Predict: 1.0

Else (feature 153 > 253.0)

Predict: 1.0

Tree 7 (weight 1.0):

If (feature 131 <= 67.0)

If (feature 155 <= 102.0)

If (feature 129 <= 226.0)

If (feature 129 <= 62.0)

If (feature 127 <= 58.0)

Predict: 0.0

Else (feature 127 > 58.0)

Predict: 1.0

Else (feature 129 > 62.0)

Predict: 0.0

Else (feature 129 > 226.0)

Predict: 1.0

Else (feature 155 > 102.0)

If (feature 128 <= 224.0)

If (feature 184 <= 25.0)

If (feature 157 <= 0.0)

Predict: 1.0

Else (feature 157 > 0.0)

Predict: 1.0

Else (feature 184 > 25.0)

Predict: 0.0

Else (feature 128 > 224.0)

If (feature 131 <= 0.0)

Predict: 0.0

Else (feature 131 > 0.0)

Predict: 1.0

Else (feature 131 > 67.0)

Predict: 0.0

Tree 8 (weight 1.0):

If (feature 182 <= 180.0)

If (feature 179 <= 62.0)

If (feature 128 <= 101.0)

If (feature 156 <= 225.0)

If (feature 149 <= 0.0)

Predict: 0.0

Else (feature 149 > 0.0)

Predict: 1.0

Else (feature 156 > 225.0)

If (feature 155 <= 202.0)

Predict: 0.0

Else (feature 155 > 202.0)

Predict: 1.0

Else (feature 128 > 101.0)

If (feature 183 <= 0.0)

If (feature 128 <= 254.0)

Predict: 1.0

Else (feature 128 > 254.0)

Predict: 0.0

Else (feature 183 > 0.0)

Predict: 0.0

Else (feature 179 > 62.0)

Predict: 0.0

Else (feature 182 > 180.0)

If (feature 156 <= 105.0)

Predict: 0.0

Else (feature 156 > 105.0)

Predict: 1.0

Tree 9 (weight 1.0):

If (feature 96 in {1.0})

Predict: 1.0

Else (feature 96 not in {1.0})

If (feature 185 <= 67.0)

If (feature 160 <= 12.0)

If (feature 178 in {1.0})

Predict: 1.0

Else (feature 178 not in {1.0})

If (feature 126 <= 0.0)

Predict: 0.0

Else (feature 126 > 0.0)

Predict: 1.0

Else (feature 160 > 12.0)

If (feature 155 <= 0.0)

Predict: 0.0

Else (feature 155 > 0.0)

Predict: 1.0

Else (feature 185 > 67.0)

Predict: 0.0
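Splits such as `feature 210 in {3.0}` in the trees above are categorical splits, while `<=` splits are continuous. The categorical ones come from the `VectorIndexer` stage: with `setMaxCategories(4)`, a feature with at most 4 distinct values is treated as categorical. The sketch below illustrates only that decision rule in plain Scala. `MaxCategoriesSketch` and the two sample columns are made up for illustration, and Spark's actual index assignment is not reproduced here.

```scala
object MaxCategoriesSketch {
  // A feature column is treated as categorical when it has at most
  // maxCategories distinct values; otherwise it stays continuous.
  def isCategorical(columnValues: Seq[Double], maxCategories: Int): Boolean =
    columnValues.distinct.size <= maxCategories

  def main(args: Array[String]): Unit = {
    // Made-up columns: one with 3 distinct values, one with 6.
    val fewValues = Seq(0.0, 0.0, 18.0, 0.0, 254.0, 0.0)
    val manyValues = Seq(0.0, 51.0, 159.0, 253.0, 96.0, 244.0)
    println(isCategorical(fewValues, 4))  // prints true
    println(isCategorical(manyValues, 4)) // prints false
  }
}
```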

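Summary: the random forest consists of 10 trees (setNumTrees(10)), and the final prediction is decided by combining the votes of those 10 trees. The code never sets a maximum depth, so each tree is limited by Spark's default maxDepth of 5, which matches the at most five levels of nested splits in the output above. Capping the depth avoids the computation cost and overfitting that deeper trees bring. The value 4 in the code is setMaxCategories(4) on the VectorIndexer, which controls which features are treated as categorical, not the tree depth.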
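The voting described in the summary can be sketched as a plain majority vote over the per-tree predictions. This is a simplification under stated assumptions: `MajorityVoteSketch` is a hypothetical name, the ten votes are invented, ties go to the smaller label, and Spark's own aggregation may combine per-tree class probabilities rather than hard votes.

```scala
object MajorityVoteSketch {
  // Return the label predicted by the most trees; ties go to the smaller label.
  def majorityVote(treePredictions: Seq[Double]): Double = {
    require(treePredictions.nonEmpty, "need at least one tree prediction")
    val counts = treePredictions.groupBy(identity).map { case (label, votes) => label -> votes.size }
    val best = counts.values.max
    counts.collect { case (label, c) if c == best => label }
      .reduce((a, b) => math.min(a, b))
  }

  def main(args: Array[String]): Unit = {
    // Invented predictions from 10 trees for one row: six vote 1.0, four vote 0.0.
    val votes = Seq(1.0, 1.0, 0.0, 1.0, 0.0, 1.0, 1.0, 0.0, 1.0, 0.0)
    println(majorityVote(votes)) // prints 1.0
  }
}
```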
