[转帖]CPU Utilization is Wrong

CPU Utilization is Wrong

09 May 2017

The metric we all use for CPU utilization is deeply misleading, and getting worse every year. What is CPU utilization? How busy your processors are? No, that's not what it measures. Yes, I'm talking about the "%CPU" metric used everywhere, by everyone. In every performance monitoring product. In top(1).

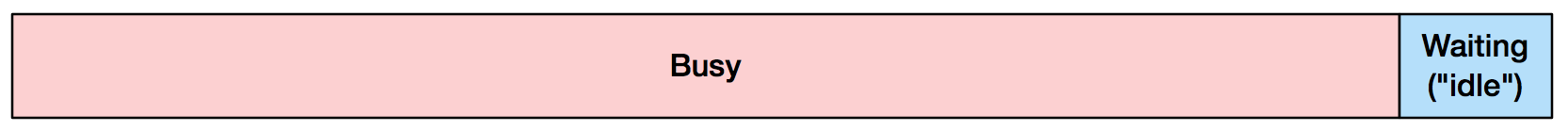

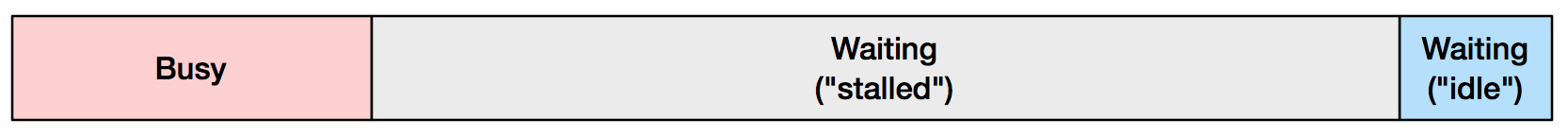

What you may think 90% CPU utilization means:

What it might really mean:

Stalled means the processor was not making forward progress with instructions, and usually happens because it is waiting on memory I/O. The ratio I drew above (between busy and stalled) is what I typically see in production. Chances are, you're mostly stalled, but don't know it.

What does this mean for you? Understanding how much your CPUs are stalled can direct performance tuning efforts between reducing code or reducing memory I/O. Anyone looking at CPU performance, especially on clouds that auto scale based on CPU, would benefit from knowing the stalled component of their %CPU.

What really is CPU Utilization?

The metric we call CPU utilization is really "non-idle time": the time the CPU was not running the idle thread. Your operating system kernel (whatever it is) usually tracks this during context switch. If a non-idle thread begins running, then stops 100 milliseconds later, the kernel considers that CPU utilized that entire time.

This metric is as old as time sharing systems. The Apollo Lunar Module guidance computer (a pioneering time sharing system) called its idle thread the "DUMMY JOB", and engineers tracked cycles running it vs real tasks as a important computer utilization metric. (I wrote about this before.)

So what's wrong with this?

Nowadays, CPUs have become much faster than main memory, and waiting on memory dominates what is still called "CPU utilization". When you see high %CPU in top(1), you might think of the processor as being the bottleneck – the CPU package under the heat sink and fan – when it's really those banks of DRAM.

This has been getting worse. For a long time processor manufacturers were scaling their clockspeed quicker than DRAM was scaling its access latency (the "CPU DRAM gap"). That levelled out around 2005 with 3 GHz processors, and since then processors have scaled using more cores and hyperthreads, plus multi-socket configurations, all putting more demand on the memory subsystem. Processor manufacturers have tried to reduce this memory bottleneck with larger and smarter CPU caches, and faster memory busses and interconnects. But we're still usually stalled.

How to tell what the CPUs are really doing

By using Performance Monitoring Counters (PMCs): hardware counters that can be read using Linux perf, and other tools. For example, measuring the entire system for 10 seconds:

# perf stat -a -- sleep 10

Performance counter stats for 'system wide':

641398.723351 task-clock (msec) # 64.116 CPUs utilized (100.00%)

379,651 context-switches # 0.592 K/sec (100.00%)

51,546 cpu-migrations # 0.080 K/sec (100.00%)

13,423,039 page-faults # 0.021 M/sec

1,433,972,173,374 cycles # 2.236 GHz (75.02%)

<not supported> stalled-cycles-frontend

<not supported> stalled-cycles-backend

1,118,336,816,068 instructions # 0.78 insns per cycle (75.01%)

249,644,142,804 branches # 389.218 M/sec (75.01%)

7,791,449,769 branch-misses # 3.12% of all branches (75.01%)

10.003794539 seconds time elapsed

The key metric here is instructions per cycle (insns per cycle: IPC), which shows on average how many instructions we were completed for each CPU clock cycle. The higher, the better (a simplification). The above example of 0.78 sounds not bad (78% busy?) until you realize that this processor's top speed is an IPC of 4.0. This is also known as 4-wide, referring to the instruction fetch/decode path. Which means, the CPU can retire (complete) four instructions with every clock cycle. So an IPC of 0.78 on a 4-wide system, means the CPUs are running at 19.5% their top speed. Newer Intel processors may move to 5-wide.

There are hundreds more PMCs you can use to dig further: measuring stalled cycles directly by different types.

In the cloud

If you are in a virtual environment, you might not have access to PMCs, depending on whether the hypervisor supports them for guests. I recently posted about The PMCs of EC2: Measuring IPC, showing how PMCs are now available for dedicated host types on the AWS EC2 Xen-based cloud.

Interpretation and actionable items

If your IPC is < 1.0, you are likely memory stalled, and software tuning strategies include reducing memory I/O, and improving CPU caching and memory locality, especially on NUMA systems. Hardware tuning includes using processors with larger CPU caches, and faster memory, busses, and interconnects.

If your IPC is > 1.0, you are likely instruction bound. Look for ways to reduce code execution: eliminate unnecessary work, cache operations, etc. CPU flame graphs are a great tool for this investigation. For hardware tuning, try a faster clock rate, and more cores/hyperthreads.

For my above rules, I split on an IPC of 1.0. Where did I get that from? I made it up, based on my prior work with PMCs. Here's how you can get a value that's custom for your system and runtime: write two dummy workloads, one that is CPU bound, and one memory bound. Measure their IPC, then calculate their mid point.

What performance monitoring products should tell you

Every performance tool should show IPC along with %CPU. Or break down %CPU into instruction-retired cycles vs stalled cycles, eg, %INS and %STL.

As for top(1), there is tiptop(1) for Linux, which shows IPC by process:

tiptop - [root]

Tasks: 96 total, 3 displayed screen 0: default PID [ %CPU] %SYS P Mcycle Minstr IPC %MISS %BMIS %BUS COMMAND

3897 35.3 28.5 4 274.06 178.23 0.65 0.06 0.00 0.0 java

1319+ 5.5 2.6 6 87.32 125.55 1.44 0.34 0.26 0.0 nm-applet

900 0.9 0.0 6 25.91 55.55 2.14 0.12 0.21 0.0 dbus-daemo

Other reasons CPU utilization is misleading

It's not just memory stall cycles that makes CPU utilization misleading. Other factors include:

- Temperature trips stalling the processor.

- Turboboost varying the clockrate.

- The kernel varying the clock rate with speed step.

- The problem with averages: 80% utilized over 1 minute, hiding bursts of 100%.

- Spin locks: the CPU is utilized, and has high IPC, but the app is not making logical forward progress.

Update: is CPU utilization actually wrong?

There have been hundreds of comments on this post, here (below) and elsewhere (1, 2). Thanks to everyone for taking the time and the interest in this topic. To summarize my responses: I'm not talking about iowait at all (that's disk I/O), and there are actionable items if you know you are memory bound (see above).

But is CPU utilization actually wrong, or just deeply misleading? I think many people interpret high %CPU to mean that the processing unit is the bottleneck, which is wrong (as I said earlier). At that point you don't yet know, and it is often something external. Is the metric technically correct? If the CPU stall cycles can't be used by anything else, aren't they are therefore "utilized waiting" (which sounds like an oxymoron)? In some cases, yes, you could say that %CPU as an OS-level metric is technically correct, but deeply misleading. With hyperthreads, however, those stalled cycles can now be used by another thread, so %CPU may count cycles as utilized that are in fact available. That's wrong. In this post I wanted to focus on the interpretation problem and suggested solutions, but yes, there are technical problems with this metric as well.

You might just say that utilization as a metric was already broken, as Adrian Cockcroft discussed previously.

Conclusion

CPU utilization has become a deeply misleading metric: it includes cycles waiting on main memory, which can dominate modern workloads. Perhaps %CPU should be renamed to %CYC, short for cycles. You can figure out what %CPU really means by using additional metrics, including instructions per cycle (IPC). An IPC < 1.0 likely means memory bound, and an IPC > 1.0 likely means instruction bound. I covered IPC in my previous post, including an introduction to the Performance Monitoring Counters (PMCs) needed to measure it.

Performance monitoring products that show %CPU – which is all of them – should also show PMC metrics to explain what that means, and not mislead the end user. For example, they can show %CPU with IPC, and/or instruction-retired cycles vs stalled cycles. Armed with these metrics, developers and operators can choose how to better tune their applications and systems.

[转帖]CPU Utilization is Wrong的更多相关文章

- How do I Find Out Linux CPU Utilization?

From:http://www.cyberciti.biz/tips/how-do-i-find-out-linux-cpu-utilization.html Whenever a Linux sys ...

- 压力测试衡量CPU的三个指标:CPU Utilization、Load Average和Context Switch Rate

分类: 4.软件设计/架构/测试 2010-01-12 19:58 34241人阅读 评论(4) 收藏 举报 测试loadrunnerlinux服务器firebugthread 上篇讲如何用LoadR ...

- Zabbix CPU utilization监控参数

工作中查看Zabbix linux 监控项的时候对linux 监控的cpu使用的各个参数没怎么明白,特意查看了下资料 Zabbix linux模板下的CPU utilization是自带的监控Linu ...

- 【每日一摩斯】-Troubleshooting: High CPU Utilization (164768.1) - 系列6

如果问题是一个正运行的缓慢的查询SQL,那么就应该对该查询进行调优,避免它耗费过高的CPU资源.如果它做了许多的hash连接和全表扫描,那么就应该添加索引以提高效率. 下面的文章可以帮助判断查询的问题 ...

- 【每日一摩斯】-Troubleshooting: High CPU Utilization (164768.1) - 系列5

Oracle(用户)进程 以下这些操作都是需要消耗大量CPU资源的:解析大型查询,存储过程编译或执行,空间管理和排序. 下面这几篇文章可以帮助采集关于使用高CPU资源的进程的更多信息: Note:35 ...

- 【每日一摩斯】-Troubleshooting: High CPU Utilization (164768.1) - 系列4

Jobs (CJQ0, Jn, SNPn) Job进程运行用户定义的以及系统定义的类似于batch的任务.检查Job进程占用大量CPU资源的方法,就像检查用户进程一样. 可以根据以下视图检查Job进程 ...

- [转帖]CPU Cache 机制以及 Cache miss

CPU Cache 机制以及 Cache miss https://www.cnblogs.com/jokerjason/p/10711022.html CPU体系结构之cache小结 1.What ...

- [转帖]CPU 的缓存

缓存这个词想必大家都听过,其实缓存的意义很广泛:电脑整机最大的缓存可以体现为内存条.显卡上的显存就是显卡芯片所需要用到的缓存.硬盘上也有相对应的缓存.CPU有着最快的缓存(L1.L2.L3缓存等),缓 ...

- [转帖]CPU时间片

CPU时间片 https://www.cnblogs.com/xingzc/p/6077214.html CPU的时间片 CPU的利用率好CPU的 load average 是不一样的 Conntex ...

- [转帖]震惊,用了这么多年的 CPU 利用率,其实是错的

震惊,用了这么多年的 CPU 利用率,其实是错的 2018年12月22日 08:43:09 Linuxer_ 阅读数:50 https://blog.csdn.net/juS3Ve/article/d ...

随机推荐

- Sermant:无代理服务网格架构解析及无门槛玩转插件开发

本文分享自华为云社区<Sermant:无代理服务网格架构解析及无门槛玩转插件开发>,作者: 华为云社区精选 . 本期直播的主题是<从架构设计到开发实践,深入浅出了解Sermant&g ...

- 这场世界级的攻坚考验,华为云GaussDB稳过

摘要:实践证明,华为云GaussDB完全经受住了这场世界级的攻坚考验,也完全具备支撑大型一体机系统迁移上云的能力,并积累了丰富的经验. 本文分享自华为云社区<这场世界级的攻坚考验,华为云Gaus ...

- Python图像处理丨图像的灰度线性变换

摘要:本文主要讲解灰度线性变换. 本文分享自华为云社区<[Python图像处理] 十五.图像的灰度线性变换>,作者:eastmount. 一.图像灰度线性变换原理 图像的灰度线性变换是通过 ...

- 火山引擎DataTester:一个爆款游戏产品,是如何用A/B测试打磨出来的?

随着国内游戏用户数量趋于饱和,中国游戏产业也从高速成长期逐渐转型,市场成熟度提升,竞争趋于精细化. 随着游戏出海以及私域流量运营的挑战,游戏企业对数据分析的使用需求和依赖度进一步提高.而在游戏研发立项 ...

- PPT 光效果

点状.线状.面状.光影 "光" = PPT高大上的秘密

- AI 黑科技,老照片修复,模糊变高清

大家好 最近闲逛,发现腾讯开源的老照片修复算法新出了V1.3的预训练模型,手痒试了一下. 我拿"自己"的旧照片试了一下,先看效果 GFPGAN FPGAN算法由腾讯PCG ARC实 ...

- 阿里云视频云vPaaS低代码音视频工厂:极速智造,万象空间

当下音视频技术越来越广泛地应用于更多行各业中,但因开发成本高.难度系数大等问题,掣肘了很多企业业务的第二增长需求.阿里云视频云基于云原生.音视频.人工智能等先进技术,提供易接入.强拓展.高效部署和覆盖 ...

- mysql备份恢复总结

mysqldump备份注:例子中的语句都是在mysql5.6下执行------------------基础------------------------一.修改my.cnf文件 vi /etc/my ...

- B3637-DP【橙】

这题我用sort的时候大意了,从1开始使用的下标但是用sort时没加1导致排序错误,排了半天错才发现. 另外,这道题我似乎用了一种与网络上搜到了做法截然不同的自己的瞎想出来的做法,我的这个做法需要n^ ...

- [Troubleshooting] kubectl cp exit code 255 - exec: \"tar\": executable file not found in $PATH"

0. 背景 kubectl cp container 文件到本地 host 报错: $ kubectl cp test/po-test-pod-0:/tmp ./ -c ctr-test-contai ...