Spark(五十):使用JvisualVM监控Spark Executor JVM

引导

Windows环境下JvisulaVM一般存在于安装了JDK的目录${JAVA_HOME}/bin/JvisualVM.exe,它支持(本地和远程)jstatd和JMX两种方式连接远程JVM。

jstatd (Java Virtual Machine jstat Daemon)——监听远程服务器的CPU,内存,线程等信息

JMX(Java Management Extensions,即Java管理扩展)是一个为应用程序、设备、系统等植入管理功能的框架。JMX可以跨越一系列异构操作系统平台、系统体系结构和网络传输协议,灵活的开发无缝集成的系统、网络和服务管理应用。

备注:针对jstatd我尝试未成功,因此也不在这里误导别人。

JMX监控

正常配置:

-Dcom.sun.management.jmxremote

-Dcom.sun.management.jmxremote.ssl=false

-Dcom.sun.management.jmxremote.authenticate=false

-Djava.rmi.server.hostname=<ip>

-Dcom.sun.management.jmxremote.port=<port>

添加JMX配置:

在Spark中监控executor时,需要先配置jmx然后再启动spark应用程序,配置方式有三种:

1)在spark-defaults.conf中配置那三个参数

2)在spark-env.sh中配置:配置master,worker的JavaOptions

3)在spark-submit提交时配置

这里采用以下spark-submit提交时配置:

spark-submit \

--class myTest.KafkaWordCount \

--master yarn \

--deploy-mode cluster \

--conf "spark.executor.extraJavaOptions=-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.port=0 -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false" \

--verbose \

--executor-memory 1G \

--total-executor-cores \

/hadoop/spark/app/spark//testSpark.jar *.*.*.*:* test3 wordcount kafkawordcount3 checkpoint4

注意:

1)不能指定具体的 ip 和 port------因为spark中运行时,很可能一个节点上分配多个container进程,此时占用同一个端口,会导致spark应用程序通过spark-submit提交失败。

2)因为不指定具体的ip和port,所以在任务提交阶段会自动分配端口。

3)上边三种配置方式可能会导致监控级别不同(比如spark-submit只针对一个应用程序,spark-env.sh可能是全局一个节点所有executor监控【未验证】,请读者注意。)

查找JMX分配端口

通过yarn applicationattempt -list appicationId查找到applicationattemptid

[root@cdh- bin]# yarn applicationattempt -list application_1559203334026_0015

// :: INFO client.RMProxy: Connecting to ResourceManager at CDH-/10.dx.dx.143:

Total number of application attempts :

ApplicationAttempt-Id State AM-Container-Id Tracking-URL

appattempt_1559203334026_0015_000001 RUNNING container_1559203334026_0015_01_000001 http://CDH-143:8088/proxy/application_1559203334026_0015/

通过yarn container -list aaplicationattemptId查找container id list

[root@cdh- bin]# yarn container -list appattempt_1559203334026_0015_000001

// :: INFO client.RMProxy: Connecting to ResourceManager at CDH-/10.dx.dx.143:

Total number of containers :

Container-Id Start Time Finish Time State Host LOG-URL

container_1559203334026_0015_01_000012 Sat Jun :: + N/A RUNNING CDH-: http://CDH-146:8042/node/containerlogs/container_1559203334026_0015_01_000012/dx

container_1559203334026_0015_01_000013 Sat Jun :: + N/A RUNNING CDH-: http://CDH-146:8042/node/containerlogs/container_1559203334026_0015_01_000013/dx

container_1559203334026_0015_01_000010 Sat Jun :: + N/A RUNNING CDH-: http://CDH-146:8042/node/containerlogs/container_1559203334026_0015_01_000010/dx

container_1559203334026_0015_01_000011 Sat Jun :: + N/A RUNNING CDH-: http://CDH-146:8042/node/containerlogs/container_1559203334026_0015_01_000011/dx

container_1559203334026_0015_01_000016 Sat Jun :: + N/A RUNNING CDH-: http://CDH-146:8042/node/containerlogs/container_1559203334026_0015_01_000016/dx

container_1559203334026_0015_01_000014 Sat Jun :: + N/A RUNNING CDH-: http://CDH-146:8042/node/containerlogs/container_1559203334026_0015_01_000014/dx

container_1559203334026_0015_01_000015 Sat Jun :: + N/A RUNNING CDH-: http://CDH-146:8042/node/containerlogs/container_1559203334026_0015_01_000015/dx

container_1559203334026_0015_01_000004 Sat Jun :: + N/A RUNNING CDH-: http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000004/dx

container_1559203334026_0015_01_000005 Sat Jun :: + N/A RUNNING CDH-: http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000005/dx

container_1559203334026_0015_01_000002 Sat Jun :: + N/A RUNNING CDH-: http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000002/dx

container_1559203334026_0015_01_000003 Sat Jun :: + N/A RUNNING CDH-: http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000003/dx

container_1559203334026_0015_01_000008 Sat Jun :: + N/A RUNNING CDH-: http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000008/dx

container_1559203334026_0015_01_000009 Sat Jun :: + N/A RUNNING CDH-: http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000009/dx

container_1559203334026_0015_01_000006 Sat Jun :: + N/A RUNNING CDH-: http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000006/dx

container_1559203334026_0015_01_000007 Sat Jun :: + N/A RUNNING CDH-: http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000007/dx

container_1559203334026_0015_01_000001 Sat Jun :: + N/A RUNNING CDH-: http://CDH-142:8042/node/containerlogs/container_1559203334026_0015_01_000001/dx

到具体executor所在节点服务器上,使用如下命令找到运行的线程,和 pid

[root@cdh- ~]# ps -axu | grep container_1559203334026_0015_01_000013

yarn 0.0 0.0 ? S : : bash /data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/default_container_executor.sh

yarn 0.0 0.0 ? Ss : : /bin/bash -c /usr/java/jdk1..0_171-amd64/bin/java -server -Xmx6144m '-Dcom.sun.management.jmxremote' '-Dcom.sun.management.jmxremote.port=0' '-Dcom.sun.management.jmxremote.authenticate=false' '-Dcom.sun.management.jmxremote.ssl=false' -Djava.io.tmpdir=/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/tmp '-Dspark.network.timeout=10000000' '-Dspark.driver.port=47564' '-Dspark.port.maxRetries=32' -Dspark.yarn.app.container.log.dir=/data6/yarn/container-logs/application_1559203334026_0015/container_1559203334026_0015_01_000013 -XX:OnOutOfMemoryError='kill %p' org.apache.spark.executor.CoarseGrainedExecutorBackend --driver-url spark://CoarseGrainedScheduler@CDH-143:47564 --executor-id 12 --hostname CDH-146 --cores 2 --app-id application_1559203334026_0015 --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/__app__.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/streaming-dx-perf-3.0.0.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/dx-common-3.0.0.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/spark-sql-kafka-0-10_2.11-2.4.0.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/spark-avro_2.11-3.2.0.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/shc-core-1.1.2-2.2-s_2.11-SNAPSHOT.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/rocksdbjni-5.17.2.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/kafka-clients-0.10.0.1.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/elasticsearch-spark-20_2.11-6.4.1.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/dx_Spark_State_Store_Plugin-1.0-SNAPSHOT.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/bijection-core_2.11-0.9.5.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/bijection-avro_2.11-0.9.5.jar 1>/data6/yarn/container-logs/application_1559203334026_0015/container_1559203334026_0015_01_000013/stdout 2>/data6/yarn/container-logs/application_1559203334026_0015/container_1559203334026_0015_01_000013/stderr

yarn 3.3 ? Sl : : /usr/java/jdk1..0_171-amd64/bin/java -server -Xmx6144m -Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.port= -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Djava.io.tmpdir=/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/tmp -Dspark.network.timeout= -Dspark.driver.port= -Dspark.port.maxRetries= -Dspark.yarn.app.container.log.dir=/data6/yarn/container-logs/application_1559203334026_0015/container_1559203334026_0015_01_000013 -XX:OnOutOfMemoryError=kill %p org.apache.spark.executor.CoarseGrainedExecutorBackend --driver-url spark://CoarseGrainedScheduler@CDH-143:47564 --executor-id 12 --hostname CDH-146 --cores 2 --app-id application_1559203334026_0015 --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/__app__.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/dx-domain-perf-3.0.0.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/dx-common-3.0.0.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/spark-sql-kafka-0-10_2.11-2.4.0.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/spark-avro_2.11-3.2.0.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/shc-core-1.1.2-2.2-s_2.11-SNAPSHOT.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/rocksdbjni-5.17.2.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/kafka-clients-0.10.0.1.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/elasticsearch-spark-20_2.11-6.4.1.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/dx_Spark_State_Store_Plugin-1.0-SNAPSHOT.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/bijection-core_2.11-0.9.5.jar --user-class-path file:/data6/yarn/nm/usercache/dx/appcache/application_1559203334026_0015/container_1559203334026_0015_01_000013/bijection-avro_2.11-0.9.5.jar

root 0.0 0.0 pts/ S+ : : grep --color=auto container_1559203334026_0015_01_000013

然后通过 pid 找到对应JMX的端口

[root@cdh- ~]# sudo netstat -antp | grep

tcp 10.dx.dx.146: 0.0.0.0:* LISTEN /python2.

tcp6 ::: :::* LISTEN /java

tcp6 ::: :::* LISTEN /java

tcp6 10.dx.dx.146: :::* LISTEN /java

tcp6 10.dx.dx.146: 10.dx.dx.142: ESTABLISHED /java

tcp6 10.dx.dx.146: 10.206.186.35: ESTABLISHED /java

tcp6 10.dx.dx.146: 10.dx.dx.143: ESTABLISHED /java

结果中看,疑似为48169或37692,稍微尝试一下即可连上对应的 spark executor

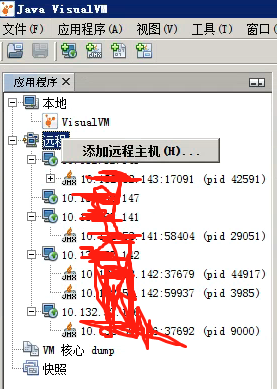

使用JvisulaVM.exe工具添加监控

在本地windows服务器上找到JDK的目录,找到文件${JAVA_HOME}/bin/JvisualVM.exe,并运行它。启动后选择“远程”右键,添加JMX监控

填写监控executor所在节点ip

然后就可以启动监控:

Spark(五十):使用JvisualVM监控Spark Executor JVM的更多相关文章

- Spark(五十二):Spark Scheduler模块之DAGScheduler流程

导入 从一个Job运行过程中来看DAGScheduler是运行在Driver端的,其工作流程如下图: 图中涉及到的词汇概念: 1. RDD——Resillient Distributed Datase ...

- 使用 JvisualVM 监控 spark executor

使用 JvisualVM,需要先配置 java 的启动参数 jmx 正常情况下,如下配置 -Dcom.sun.management.jmxremote -Dcom.sun.management.jmx ...

- Spark2.x(五十五):在spark structured streaming下sink file(parquet,csv等),正常运行一段时间后:清理掉checkpoint,重新启动app,无法sink记录(file)到hdfs。

场景: 在spark structured streaming读取kafka上的topic,然后将统计结果写入到hdfs,hdfs保存目录按照month,day,hour进行分区: 1)程序放到spa ...

- Spark2.x(五十九):yarn-cluster模式提交Spark任务,如何关闭client进程?

问题: 最近现场反馈采用yarn-cluster方式提交spark application后,在提交节点机上依然会存在一个yarn的client进程不关闭,又由于spark application都是 ...

- Spark(四十九):Spark On YARN启动流程源码分析(一)

引导: 该篇章主要讲解执行spark-submit.sh提交到将任务提交给Yarn阶段代码分析. spark-submit的入口函数 一般提交一个spark作业的方式采用spark-submit来提交 ...

- 监控Spark应用方法简介

监控Spark应用有很多种方法. Web接口每一个SparkContext启动一个web UI用来展示应用相关的一些非常有用的信息,默认在4040端口.这些信息包括: 任务和调度状态的列表RDD大小和 ...

- Spark2.3(四十二):Spark Streaming和Spark Structured Streaming更新broadcast总结(二)

本次此时是在SPARK2,3 structured streaming下测试,不过这种方案,在spark2.2 structured streaming下应该也可行(请自行测试).以下是我测试结果: ...

- Spark(十四)SparkStreaming的官方文档

一.SparkCore.SparkSQL和SparkStreaming的类似之处 二.SparkStreaming的运行流程 2.1 图解说明 2.2 文字解说 1.我们在集群中的其中一台机器上提交我 ...

- Spark应用监控解决方案--使用Prometheus和Grafana监控Spark应用

Spark任务启动后,我们通常都是通过跳板机去Spark UI界面查看对应任务的信息,一旦任务多了之后,这将会是让人头疼的问题.如果能将所有任务信息集中起来监控,那将会是很完美的事情. 通过Spark ...

随机推荐

- MySQL Innodb--共享临时表空间和临时文件

在MySQL 5.7版本中引入Online DDL特性和共享临时表空间特性,临时数据主要存放形式为: 1.DML命令执行过程中文件排序(file sore)操作生成的临时文件,存储目录由参数tmpdi ...

- Find 命令记录

当需要查找一个时间的文件时 使用find [文件目录] -mtime [时间] 例如:查看mysql.bak目录下的1天前的文件 find mysql.bak -mtime 找到此文件之后需要将它移动 ...

- spring事务什么时候会自动回滚

在java中异常的基类为Throwable,他有两个子类xception与Errors.同时RuntimeException就是Exception的子类,只有RuntimeException才会进行回 ...

- idea中添加多级父子模块

在 IntelliJ IDEA 中,没有类似于 Eclipse 工作空间(Workspace)的概念,而是提出了Project和Module这两个概念. 在 IntelliJ IDEA 中Projec ...

- amazeui datepicker日历控件 设置默认当日

amazeui datepicker日历控件 设置默认当日 背景: 最近在做一个系统的时候,前台需要选择日期,传给后台进行处理,每次都需要通过手动点击组件,选择日期,这样子很不好,所以我想通过程序自动 ...

- python3_pygame游戏窗口创建

python3利用第三方模块pygame创建游戏窗口 步骤1.导入pygame模块 步骤2.初始化pygame模块 步骤3.设置游戏窗口大小 步骤4.定义游戏窗口背景颜色 步骤5.开始循环检测游戏窗口 ...

- Windows 远程访问 ubuntu 16 lts

remote access ubuntu 使用安装使用vncserver (除非必要,不要使用图形界面,底层码农真的应该关心效率) $ sudo apt-get install vncsever wi ...

- 怎么给win10进行分区?

新安装的win10系统的朋友,对于win10系统分区不满意应该如何是好呢?今天给大家介绍两种win10系统分区的方法,一个是windows自带分区管理软件来操作;另一个就是简单方便的分区助手来帮助您进 ...

- 基于h5+的微信支付,hbuilder打包

1.打开app项目的manifest.json的文件,选择模块权限配置,将Payment(支付)模块添加至已选模块中 2.选择SDK配置,在plus.payment·支付中,勾选□ 微信支付,配置好a ...

- Linux 系统 Composer 安装

Composer 是个包管理工具 在项目中使用它会很方便 本文中用 PHP 安装 1.下载安装 执行命令 curl -sS https://getcomposer.org/installer | ph ...