Compiling hadoop-2.6.0-cdh5.4.4 on Ubuntu 14.04


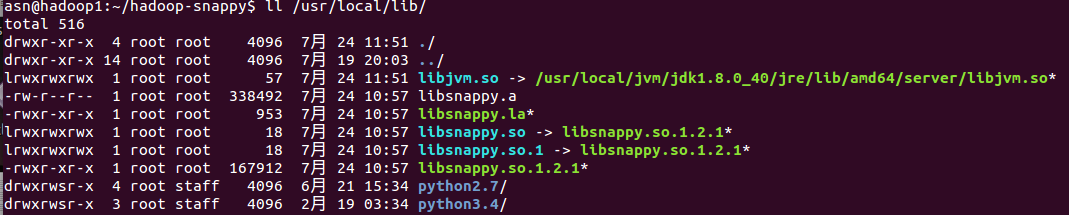

1 Protocol Buffers

    sudo apt-get install libprotobuf-dev

    asn@hadoop1:~/Desktop$ protoc --version
    libprotoc 2.5.0

On Ubuntu the protoc binary itself is provided by the protobuf-compiler package, so install that as well if protoc is not found.

2 Install CMake

    asn@hadoop1:~/Desktop$ cmake --version
    cmake version 2.8.12.2

3 Install the other dependencies

    sudo apt-get install zlib1g-dev libssl-dev

4 Snappy compression

snappy

    cd snappy-1.1.2/
    sudo ./configure
    sudo make
    sudo make install

    asn@hadoop1:~/snappy-1.1.2$ ls /usr/local/lib | grep snappy
    libsnappy.a
    libsnappy.la
    libsnappy.so
    libsnappy.so.1
    libsnappy.so.1.2.1

hadoop-snappy

Before building hadoop-snappy, the /usr/bin/gcc symlink is switched from gcc-4.8 to gcc-4.4:

    asn@hadoop1:~/hadoop-snappy$ ls -l /usr/bin/gcc
    lrwxrwxrwx 1 root root 7 4月 27 22:36 /usr/bin/gcc -> gcc-4.8
    asn@hadoop1:~/hadoop-snappy$ sudo rm /usr/bin/gcc
    asn@hadoop1:~/hadoop-snappy$ ls -l /usr/bin/gcc
    ls: cannot access /usr/bin/gcc: No such file or directory
    $ sudo apt-get install gcc-4.4
    asn@hadoop1:~/hadoop-snappy$ sudo ln -s /usr/bin/gcc-4.4 /usr/bin/gcc
    asn@hadoop1:~/hadoop-snappy$ ls -l /usr/bin/gcc
    lrwxrwxrwx 1 root root 16 7月 24 11:30 /usr/bin/gcc -> /usr/bin/gcc-4.4

The build may then fail at the link step:

    [exec] make: *** [libhadoopsnappy.la] Error 1
    [exec] libtool: link: gcc -shared -fPIC -DPIC src/org/apache/hadoop/io/compress/snappy/.libs/SnappyCompressor.o src/org/apache/hadoop/io/compress/snappy/.libs/SnappyDecompressor.o -L/usr/local/lib -ljvm -ldl -O2 -m64 -O2 -Wl,-soname -Wl,libhadoopsnappy.so.0 -o .libs/libhadoopsnappy.so.0.0.1

This happens because the JVM's libjvm.so has not been symlinked into /usr/local/lib, the directory the linker searches for -ljvm. On a 64-bit system, look in /root/bin/jdk1.6.0_37/jre/lib/amd64/server/ to find libjvm.so and see where it points. Creating the symbolic link in /usr/local/lib resolves the problem (the exact command is not preserved here).
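The linking command referred to above is missing from the text. As a minimal sketch of what such a step could look like, the helper below finds libjvm.so under a JDK directory and symlinks it into a library directory. `link_libjvm` is a hypothetical name, not something from the original post, and the choice of /usr/local/lib as the target is inferred from the `-L/usr/local/lib` flag in the failing link command.

```shell
# Hypothetical helper, not from the original post: find libjvm.so under a JDK
# directory and symlink it into a library directory searched by the linker.
link_libjvm() {
  jdk_home=$1
  lib_dir=$2
  # Take the first libjvm.so found below the JDK directory.
  libjvm=$(find "$jdk_home" -name libjvm.so 2>/dev/null | head -n 1)
  if [ -z "$libjvm" ]; then
    echo "libjvm.so not found under $jdk_home" >&2
    return 1
  fi
  # -f replaces a stale link left over from an earlier attempt.
  ln -sf "$libjvm" "$lib_dir/libjvm.so"
}
```

With the paths mentioned in the post this would be called as `link_libjvm /root/bin/jdk1.6.0_37 /usr/local/lib` from a shell with enough privileges to write to /usr/local/lib.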

    mvn package

    [INFO] ------------------------------------------------------------------------
    [INFO] BUILD SUCCESS
    [INFO] ------------------------------------------------------------------------
    [INFO] Total time: 43.710 s
    [INFO] Finished at: 2015-07-24T11:54:09+08:00
    [INFO] Final Memory: 23M/359M
    [INFO] ------------------------------------------------------------------------

5 FindBugs

Download: http://osdn.jp/projects/sfnet_findbugs/downloads/findbugs/3.0.1/findbugs-3.0.1.zip/

    asn@hadoop1:~$ unzip -h
    UnZip 6.00 of 20 April 2009, by Debian. Original by Info-ZIP.

    Usage: unzip [-Z] [-opts[modifiers]] file[.zip] [list] [-x xlist] [-d exdir]
      Default action is to extract files in list, except those in xlist, to exdir;
      file[.zip] may be a wildcard.  -Z => ZipInfo mode ("unzip -Z" for usage).

      -p  extract files to pipe, no messages     -l  list files (short format)
      -f  freshen existing files, create none    -t  test compressed archive data
      -u  update files, create if necessary      -z  display archive comment only
      -v  list verbosely/show version info       -T  timestamp archive to latest
      -x  exclude files that follow (in xlist)   -d  extract files into exdir
    modifiers:
      -n  never overwrite existing files         -q  quiet mode (-qq => quieter)
      -o  overwrite files WITHOUT prompting      -a  auto-convert any text files
      -j  junk paths (do not make directories)   -aa treat ALL files as text
      -U  use escapes for all non-ASCII Unicode  -UU ignore any Unicode fields
      -C  match filenames case-insensitively     -L  make (some) names lowercase
      -X  restore UID/GID info                   -V  retain VMS version numbers
      -K  keep setuid/setgid/tacky permissions   -M  pipe through "more" pager
      -O CHARSET  specify a character encoding for DOS, Windows and OS/2 archives
      -I CHARSET  specify a character encoding for UNIX and other archives

    See "unzip -hh" or unzip.txt for more help.  Examples:
      unzip data1 -x joe   => extract all files except joe from zipfile data1.zip
      unzip -p foo | more  => send contents of foo.zip via pipe into program more
      unzip -fo foo ReadMe => quietly replace existing ReadMe if archive file newer

Extract the archive into /usr/local:

    asn@hadoop1:~$ sudo unzip findbugs-3.0.1.zip -d /usr/local

Configure the environment variables:

    # findbugs
    export FINDBUGS_HOME=/usr/local/findbugs-3.0.1
    export PATH=$PATH:$FINDBUGS_HOME/bin

5 Build

    mvn package -Pdist,native,docs -DskipTests -Dtar

    [INFO] --- maven-javadoc-plugin:2.8.1:jar (module-javadocs) @ hadoop-dist ---
    [INFO] Building jar: /home/asn/hadoop-2.6.0-cdh5.4.4-src/hadoop-dist/target/hadoop-dist-2.6.0-cdh5.4.4-javadoc.jar
    [INFO] ------------------------------------------------------------------------
    [INFO] Reactor Summary:
    [INFO]
    [INFO] Apache Hadoop Main ................................. SUCCESS [ 1.612 s]
    [INFO] Apache Hadoop Project POM .......................... SUCCESS [ 0.879 s]
    [INFO] Apache Hadoop Annotations .......................... SUCCESS [ 2.210 s]
    [INFO] Apache Hadoop Assemblies ........................... SUCCESS [ 0.467 s]
    [INFO] Apache Hadoop Project Dist POM ..................... SUCCESS [ 2.778 s]
    [INFO] Apache Hadoop Maven Plugins ........................ SUCCESS [ 2.803 s]
    [INFO] Apache Hadoop MiniKDC .............................. SUCCESS [ 3.063 s]
    [INFO] Apache Hadoop Auth ................................. SUCCESS [ 21.714 s]
    [INFO] Apache Hadoop Auth Examples ........................ SUCCESS [ 4.256 s]
    [INFO] Apache Hadoop Common ............................... SUCCESS [03:50 min]
    [INFO] Apache Hadoop NFS .................................. SUCCESS [ 5.584 s]
    [INFO] Apache Hadoop KMS .................................. SUCCESS [03:47 min]
    [INFO] Apache Hadoop Common Project ....................... SUCCESS [ 0.037 s]
    [INFO] Apache Hadoop HDFS ................................. SUCCESS [09:31 min]
    [INFO] Apache Hadoop HttpFS ............................... SUCCESS [03:25 min]
    [INFO] Apache Hadoop HDFS BookKeeper Journal .............. SUCCESS [ 6.757 s]
    [INFO] Apache Hadoop HDFS-NFS ............................. SUCCESS [ 3.637 s]
    [INFO] Apache Hadoop HDFS Project ......................... SUCCESS [ 0.207 s]
    [INFO] hadoop-yarn ........................................ SUCCESS [ 0.231 s]
    [INFO] hadoop-yarn-api .................................... SUCCESS [01:21 min]
    [INFO] hadoop-yarn-common ................................. SUCCESS [ 21.180 s]
    [INFO] hadoop-yarn-server ................................. SUCCESS [ 0.089 s]
    [INFO] hadoop-yarn-server-common .......................... SUCCESS [ 8.697 s]
    [INFO] hadoop-yarn-server-nodemanager ..................... SUCCESS [ 18.131 s]
    [INFO] hadoop-yarn-server-web-proxy ....................... SUCCESS [ 2.802 s]
    [INFO] hadoop-yarn-server-applicationhistoryservice ....... SUCCESS [ 6.952 s]
    [INFO] hadoop-yarn-server-resourcemanager ................. SUCCESS [ 18.398 s]
    [INFO] hadoop-yarn-server-tests ........................... SUCCESS [ 1.072 s]
    [INFO] hadoop-yarn-client ................................. SUCCESS [ 4.769 s]
    [INFO] hadoop-yarn-applications ........................... SUCCESS [ 0.062 s]
    [INFO] hadoop-yarn-applications-distributedshell .......... SUCCESS [ 2.625 s]
    [INFO] hadoop-yarn-applications-unmanaged-am-launcher ..... SUCCESS [ 1.906 s]
    [INFO] hadoop-yarn-site ................................... SUCCESS [ 0.088 s]
    [INFO] hadoop-yarn-registry ............................... SUCCESS [ 4.753 s]
    [INFO] hadoop-yarn-project ................................ SUCCESS [ 5.611 s]
    [INFO] hadoop-mapreduce-client ............................ SUCCESS [ 0.204 s]
    [INFO] hadoop-mapreduce-client-core ....................... SUCCESS [ 16.280 s]
    [INFO] hadoop-mapreduce-client-common ..................... SUCCESS [ 15.060 s]
    [INFO] hadoop-mapreduce-client-shuffle .................... SUCCESS [ 5.416 s]
    [INFO] hadoop-mapreduce-client-app ........................ SUCCESS [ 7.769 s]
    [INFO] hadoop-mapreduce-client-hs ......................... SUCCESS [ 6.008 s]
    [INFO] hadoop-mapreduce-client-jobclient .................. SUCCESS [ 4.967 s]
    [INFO] hadoop-mapreduce-client-hs-plugins ................. SUCCESS [ 1.901 s]
    [INFO] hadoop-mapreduce-client-nativetask ................. SUCCESS [01:32 min]
    [INFO] Apache Hadoop MapReduce Examples ................... SUCCESS [ 5.513 s]
    [INFO] hadoop-mapreduce ................................... SUCCESS [ 5.140 s]
    [INFO] Apache Hadoop MapReduce Streaming .................. SUCCESS [ 3.647 s]
    [INFO] Apache Hadoop Distributed Copy ..................... SUCCESS [ 6.701 s]
    [INFO] Apache Hadoop Archives ............................. SUCCESS [ 2.504 s]
    [INFO] Apache Hadoop Rumen ................................ SUCCESS [ 5.215 s]
    [INFO] Apache Hadoop Gridmix .............................. SUCCESS [ 3.454 s]
    [INFO] Apache Hadoop Data Join ............................ SUCCESS [ 2.347 s]
    [INFO] Apache Hadoop Ant Tasks ............................ SUCCESS [ 2.068 s]
    [INFO] Apache Hadoop Extras ............................... SUCCESS [ 2.509 s]
    [INFO] Apache Hadoop Pipes ................................ SUCCESS [ 12.185 s]
    [INFO] Apache Hadoop OpenStack support .................... SUCCESS [ 5.989 s]
    [INFO] Apache Hadoop Amazon Web Services support .......... SUCCESS [01:17 min]
    [INFO] Apache Hadoop Azure support ........................ SUCCESS [ 26.187 s]
    [INFO] Apache Hadoop Client ............................... SUCCESS [ 13.386 s]
    [INFO] Apache Hadoop Mini-Cluster ......................... SUCCESS [ 2.447 s]
    [INFO] Apache Hadoop Scheduler Load Simulator ............. SUCCESS [ 6.560 s]
    [INFO] Apache Hadoop Tools Dist ........................... SUCCESS [ 11.723 s]
    [INFO] Apache Hadoop Tools ................................ SUCCESS [ 0.126 s]
    [INFO] Apache Hadoop Distribution ......................... SUCCESS [03:02 min]
    [INFO] ------------------------------------------------------------------------
    [INFO] BUILD SUCCESS
    [INFO] ------------------------------------------------------------------------
    [INFO] Total time: 33:22 min
    [INFO] Finished at: 2015-07-24T15:56:25+08:00
    [INFO] Final Memory: 230M/791M
    [INFO] ------------------------------------------------------------------------

6 Import the Hadoop project into Eclipse

1) In the hadoop-maven-plugins directory, install the plugin module:

    $ cd hadoop-maven-plugins
    $ mvn install

2) In the hadoop-2.6.0-cdh5.4.4-src directory, run:

    mvn eclipse:eclipse -DskipTests

The run recorded here used the fully qualified plugin goal and also downloaded the Javadoc:

    asn@hadoop1:~/hadoop-2.6.0-cdh5.4.4-src$ mvn org.apache.maven.plugins:maven-eclipse-plugin:2.6:eclipse -DdownloadJavadocs=true

    Javadoc for some artifacts is not available.
    Please run the same goal with the -DdownloadJavadocs=true parameter in order to check remote repositories for javadoc.
    main:
    [INFO] Executed tasks
    [INFO]
    [INFO] --- maven-remote-resources-plugin:1.0:process (default) @ hadoop-dist ---
    [INFO] inceptionYear not specified, defaulting to 2015
    [INFO]
    [INFO] <<< maven-eclipse-plugin:2.6:eclipse (default-cli) < generate-resources @ hadoop-dist <<<
    [INFO]
    [INFO] --- maven-eclipse-plugin:2.6:eclipse (default-cli) @ hadoop-dist ---
    [INFO] Using Eclipse Workspace: null
    [INFO] Adding default classpath container: org.eclipse.jdt.launching.JRE_CONTAINER
    [INFO] Wrote settings to /home/asn/hadoop-2.6.0-cdh5.4.4-src/hadoop-dist/.settings/org.eclipse.jdt.core.prefs
    [INFO] Wrote Eclipse project for "hadoop-dist" to /home/asn/hadoop-2.6.0-cdh5.4.4-src/hadoop-dist.
    [INFO]
    [INFO] ------------------------------------------------------------------------
    [INFO] Reactor Summary:
    [INFO]
    [INFO] Apache Hadoop Main ................................. SUCCESS [ 0.959 s]
    [INFO] Apache Hadoop Project POM .......................... SUCCESS [ 0.468 s]
    [INFO] Apache Hadoop Annotations .......................... SUCCESS [ 0.239 s]
    [INFO] Apache Hadoop Project Dist POM ..................... SUCCESS [ 0.129 s]
    [INFO] Apache Hadoop Assemblies ........................... SUCCESS [ 0.109 s]
    [INFO] Apache Hadoop Maven Plugins ........................ SUCCESS [ 0.957 s]
    [INFO] Apache Hadoop MiniKDC .............................. SUCCESS [ 3.097 s]
    [INFO] Apache Hadoop Auth ................................. SUCCESS [ 1.904 s]
    [INFO] Apache Hadoop Auth Examples ........................ SUCCESS [ 0.442 s]
    [INFO] Apache Hadoop Common ............................... SUCCESS [ 1.475 s]
    [INFO] Apache Hadoop NFS .................................. SUCCESS [ 0.690 s]
    [INFO] Apache Hadoop KMS .................................. SUCCESS [ 1.199 s]
    [INFO] Apache Hadoop Common Project ....................... SUCCESS [ 0.035 s]
    [INFO] Apache Hadoop HDFS ................................. SUCCESS [ 1.738 s]
    [INFO] Apache Hadoop HttpFS ............................... SUCCESS [ 1.294 s]
    [INFO] Apache Hadoop HDFS BookKeeper Journal .............. SUCCESS [ 0.448 s]
    [INFO] Apache Hadoop HDFS-NFS ............................. SUCCESS [ 0.369 s]
    [INFO] Apache Hadoop HDFS Project ......................... SUCCESS [ 0.040 s]
    [INFO] hadoop-yarn ........................................ SUCCESS [ 0.038 s]
    [INFO] hadoop-yarn-api .................................... SUCCESS [ 0.714 s]
    [INFO] hadoop-yarn-common ................................. SUCCESS [ 0.370 s]
    [INFO] hadoop-yarn-server ................................. SUCCESS [ 0.030 s]
    [INFO] hadoop-yarn-server-common .......................... SUCCESS [ 0.423 s]
    [INFO] hadoop-yarn-server-nodemanager ..................... SUCCESS [ 0.576 s]
    [INFO] hadoop-yarn-server-web-proxy ....................... SUCCESS [ 0.416 s]
    [INFO] hadoop-yarn-server-applicationhistoryservice ....... SUCCESS [ 0.499 s]
    [INFO] hadoop-yarn-server-resourcemanager ................. SUCCESS [ 0.707 s]
    [INFO] hadoop-yarn-server-tests ........................... SUCCESS [ 0.870 s]
    [INFO] hadoop-yarn-client ................................. SUCCESS [ 0.444 s]
    [INFO] hadoop-yarn-applications ........................... SUCCESS [ 0.029 s]
    [INFO] hadoop-yarn-applications-distributedshell .......... SUCCESS [ 0.347 s]
    [INFO] hadoop-yarn-applications-unmanaged-am-launcher ..... SUCCESS [ 0.357 s]
    [INFO] hadoop-yarn-site ................................... SUCCESS [ 0.037 s]
    [INFO] hadoop-yarn-registry ............................... SUCCESS [ 0.620 s]
    [INFO] hadoop-yarn-project ................................ SUCCESS [ 0.445 s]
    [INFO] hadoop-mapreduce-client ............................ SUCCESS [ 0.125 s]
    [INFO] hadoop-mapreduce-client-core ....................... SUCCESS [ 1.548 s]
    [INFO] hadoop-mapreduce-client-common ..................... SUCCESS [ 0.960 s]
    [INFO] hadoop-mapreduce-client-shuffle .................... SUCCESS [ 0.905 s]
    [INFO] hadoop-mapreduce-client-app ........................ SUCCESS [ 0.814 s]
    [INFO] hadoop-mapreduce-client-hs ......................... SUCCESS [ 0.975 s]
    [INFO] hadoop-mapreduce-client-jobclient .................. SUCCESS [ 0.699 s]
    [INFO] hadoop-mapreduce-client-hs-plugins ................. SUCCESS [ 0.446 s]
    [INFO] hadoop-mapreduce-client-nativetask ................. SUCCESS [ 0.470 s]
    [INFO] Apache Hadoop MapReduce Examples ................... SUCCESS [ 0.786 s]
    [INFO] hadoop-mapreduce ................................... SUCCESS [ 0.197 s]
    [INFO] Apache Hadoop MapReduce Streaming .................. SUCCESS [ 0.127 s]
    [INFO] Apache Hadoop Distributed Copy ..................... SUCCESS [04:15 min]
    [INFO] Apache Hadoop Archives ............................. SUCCESS [ 0.157 s]
    [INFO] Apache Hadoop Rumen ................................ SUCCESS [ 0.174 s]
    [INFO] Apache Hadoop Gridmix .............................. SUCCESS [ 6.043 s]
    [INFO] Apache Hadoop Data Join ............................ SUCCESS [ 0.159 s]
    [INFO] Apache Hadoop Ant Tasks ............................ SUCCESS [ 0.104 s]
    [INFO] Apache Hadoop Extras ............................... SUCCESS [ 0.126 s]
    [INFO] Apache Hadoop Pipes ................................ SUCCESS [ 0.044 s]
    [INFO] Apache Hadoop OpenStack support .................... SUCCESS [ 0.500 s]
    [INFO] Apache Hadoop Amazon Web Services support .......... SUCCESS [03:11 min]
    [INFO] Apache Hadoop Azure support ........................ SUCCESS [ 19.169 s]
    [INFO] Apache Hadoop Client ............................... SUCCESS [04:05 min]
    [INFO] Apache Hadoop Mini-Cluster ......................... SUCCESS [ 1.293 s]
    [INFO] Apache Hadoop Scheduler Load Simulator ............. SUCCESS [ 4.901 s]
    [INFO] Apache Hadoop Tools Dist ........................... SUCCESS [ 11.164 s]
    [INFO] Apache Hadoop Tools ................................ SUCCESS [ 0.027 s]
    [INFO] Apache Hadoop Distribution ......................... SUCCESS [ 0.109 s]
    [INFO] ------------------------------------------------------------------------
    [INFO] BUILD SUCCESS
    [INFO] ------------------------------------------------------------------------
    [INFO] Total time: 12:50 min
    [INFO] Finished at: 2015-07-24T19:45:50+08:00
    [INFO] Final Memory: 118M/521M
    [INFO] ------------------------------------------------------------------------

7 Single-node pseudo-distributed mode

1) Configuration under etc

core-site.xml

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/home/asn/tmp</value>
      </property>
    </configuration>

hdfs-site.xml

    <configuration>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/home/asn/dfs/name</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/home/asn/dfs/data</value>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
      <property>
        <name>dfs.webhdfs.enabled</name>
        <value>true</value>
      </property>
    </configuration>

2) Format the NameNode

    $ bin/hdfs namenode -format

3) Start the NameNode and DataNode daemons

    $ sbin/start-dfs.sh

    asn@hadoop1:~/hadoop-2.6.0-cdh5.4.4$ sbin/start-dfs.sh
    Starting namenodes on [localhost]
    localhost: starting namenode, logging to /home/asn/hadoop-2.6.0-cdh5.4.4/logs/hadoop-asn-namenode-hadoop1.out
    localhost: datanode running as process 44763. Stop it first.
    Starting secondary namenodes [0.0.0.0]
    0.0.0.0: secondarynamenode running as process 44939. Stop it first.
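In the start-dfs.sh output above, the lines ending in "Stop it first." mean that the DataNode and SecondaryNameNode were already running, so the script left them untouched and only started the NameNode. A small sketch for spotting this from a script, assuming the message format shown in that output (`already_running` is a hypothetical helper name, not part of Hadoop):

```shell
# Hypothetical helper: read start-dfs.sh output on stdin and print
# "<daemon> <pid>" for every daemon reported as already running.
already_running() {
  sed -n 's/^[^:]*: \([a-z]*\) running as process \([0-9]*\)\. Stop it first\.$/\1 \2/p'
}
```

It would be used as `sbin/start-dfs.sh 2>&1 | already_running`. If it prints anything, running `sbin/stop-dfs.sh` before starting again gives all three daemons a clean start.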
4) Run the grep example

    $ bin/hdfs dfs -mkdir /user
    $ bin/hdfs dfs -mkdir /user/asn
    $ bin/hdfs dfs -put etc/hadoop input
    $ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.0-cdh5.4.4.jar grep input output 'dfs[a-z.]+'

5) View the results

    asn@hadoop1:~/hadoop-2.6.0-cdh5.4.4$ bin/hdfs dfs -get output output    ## copy the output files from HDFS to the local file system
    asn@hadoop1:~/hadoop-2.6.0-cdh5.4.4$ ls
    bin  etc  include  lib  libexec  LICENSE.txt  logs  NOTICE.txt  output  README.txt  sbin  share
    asn@hadoop1:~/hadoop-2.6.0-cdh5.4.4$ ls output
    part-r-00000  _SUCCESS
    asn@hadoop1:~/hadoop-2.6.0-cdh5.4.4$ cat output/*    ## view the results in the local copy
    6 dfs.audit.logger
    4 dfs.class
    3 dfs.server.namenode.
    2 dfs.audit.log.maxfilesize
    2 dfs.audit.log.maxbackupindex
    2 dfs.period
    1 dfsmetrics.log
    1 dfsadmin
    1 dfs.webhdfs.enabled
    1 dfs.servers
    1 dfs.replication
    1 dfs.file
    1 dfs.datanode.data.dir
    1 dfs.namenode.name.dir
    asn@hadoop1:~/hadoop-2.6.0-cdh5.4.4$ bin/hdfs dfs -cat output/*    ## view the results directly on HDFS
    6 dfs.audit.logger
    4 dfs.class
    3 dfs.server.namenode.
    2 dfs.audit.log.maxfilesize
    2 dfs.audit.log.maxbackupindex
    2 dfs.period
    1 dfsmetrics.log
    1 dfsadmin
    1 dfs.webhdfs.enabled
    1 dfs.servers
    1 dfs.replication
    1 dfs.file
    1 dfs.datanode.data.dir
    1 dfs.namenode.name.dir

8 Port summary

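The HDFS file system service is exposed on port 9000, as set by the fs.defaultFS value hdfs://localhost:9000 in core-site.xml.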
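Because the port comes from the configuration, it can be read back rather than hard-coded. The following is a rough sketch, assuming the `<name>` and `<value>` elements are laid out as in the core-site.xml from section 7. `defaultfs_port` is a hypothetical helper that uses plain text matching, not a real XML parser.

```shell
# Hypothetical helper: print the port number of the fs.defaultFS URI
# found in the core-site.xml file given as the first argument.
defaultfs_port() {
  # Join the file into one line so <name> and <value> can be matched together.
  { tr -d '\n' < "$1"; echo; } |
    sed -n 's|.*<name>fs\.defaultFS</name>[[:space:]]*<value>[^<]*:\([0-9][0-9]*\)</value>.*|\1|p'
}
```

Called as `defaultfs_port etc/hadoop/core-site.xml` from the Hadoop directory, it prints the number after the last colon of the configured URI and prints nothing if the property is absent or has no explicit port.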

Handling the error that appears after regenerating the SSH key:

    asn@hadoop1:~/hadoop-2.6.0-cdh5.4.4$ ssh localhost
    Agent admitted failure to sign using the key.

Fix: run the following command as the current user:

    ssh-add
