008 Ceph Cluster Data Synchronization

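Introduction: a Ceph cluster named ceph and a single-node Ceph cluster named backup have already been created. The goal is to keep data synchronized between the two clusters so that it can be backed up and restored.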

I. Configure mutual access between the clusters

1.1 Install rbd-mirror

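rbd-mirror is a daemon that replicates images from one cluster to another.

For one-way replication, it only needs to be installed on the backup cluster.

For two-way replication, it must be installed on both clusters.

rbd-mirror connects to both the local and the remote cluster.

Each cluster needs only one rbd-mirror daemon, and the package must be installed manually.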

[root@ceph5 ceph]# yum install rbd-mirror
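The install rule above can be sketched as a tiny script that maps the replication direction to the hosts that need the package. It assumes this lab's layout (ceph2 in the ceph cluster, ceph5 in backup); mirror_hosts, install_rbd_mirror and the DRY_RUN switch are hypothetical helpers, and by default the script only prints the commands it would run.

```shell
#!/bin/sh
# Hypothetical helper: which hosts need rbd-mirror for a given direction.
# Host names follow this article's lab (ceph2 = primary, ceph5 = backup).
mirror_hosts() {
  case "$1" in
    one-way) echo "ceph5" ;;          # only the backup cluster pulls changes
    two-way) echo "ceph2 ceph5" ;;    # each cluster pulls from the other
    *) echo "usage: mirror_hosts one-way|two-way" >&2; return 1 ;;
  esac
}

# Print commands by default; set DRY_RUN=0 to actually execute them.
run() { if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else echo "+ $*"; fi; }

install_rbd_mirror() {   # $1 = one-way | two-way
  hosts=$(mirror_hosts "$1") || return 1
  for h in $hosts; do
    run ssh "$h" yum -y install rbd-mirror
  done
}

# install_rbd_mirror two-way
```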

1.2 Exchange configuration files

ceph cluster -----> backup cluster

[root@ceph2 ceph]# scp /etc/ceph/ceph.conf /etc/ceph/ceph.client.admin.keyring ceph5:/etc/ceph/

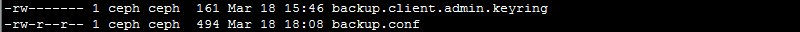

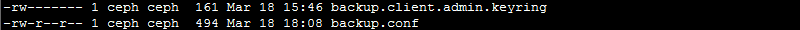

[root@ceph5 ceph]# ll -h

[root@ceph5 ceph]# chown ceph.ceph -R ./

[root@ceph5 ceph]# ll

[root@ceph5 ceph]# ceph -s --cluster ceph

cluster:

id: 35a91e48--4e96-a7ee-980ab989d20d

health: HEALTH_OK

services:

mon: daemons, quorum ceph2,ceph3,ceph4

mgr: ceph4(active), standbys: ceph2, ceph3

osd: osds: up, in

data:

pools: pools, pgs

objects: objects, MB

usage: MB used, GB / GB avail

pgs: active+clean
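The --cluster option works because Ceph derives its default file locations from the cluster name: the configuration file defaults to /etc/ceph/$cluster.conf, and the admin keyring is looked up as /etc/ceph/$cluster.client.admin.keyring. That is why the copied files keep their cluster-name prefix. The sketch below prints the paths a cluster name resolves to and reports any that are missing on the current host; cluster_conf, cluster_keyring and check_cluster_files are hypothetical names, and only the client.admin user is assumed.

```shell
#!/bin/sh
# Default locations Ceph derives from the cluster name (client.admin assumed).
cluster_conf()    { echo "/etc/ceph/$1.conf"; }
cluster_keyring() { echo "/etc/ceph/$1.client.admin.keyring"; }

# Report which of the two files are missing; non-zero exit if any are.
check_cluster_files() {   # $1 = cluster name
  missing=0
  for f in "$(cluster_conf "$1")" "$(cluster_keyring "$1")"; do
    if [ ! -r "$f" ]; then
      echo "missing: $f"
      missing=1
    fi
  done
  return "$missing"
}

# On ceph5 after the copy, both should succeed:
# check_cluster_files ceph && check_cluster_files backup
```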

1.3 Copy the configuration files from the secondary to the primary

[root@ceph5 ceph]# scp /etc/ceph/backup.conf /etc/ceph/backup.client.admin.keyring ceph2:/etc/ceph/

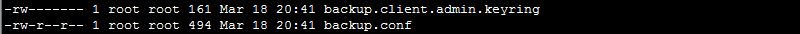

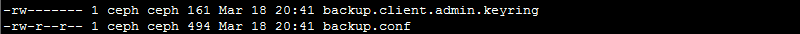

[root@ceph2 ceph]# ll

[root@ceph2 ceph]# chown -R ceph.ceph /etc/ceph/

[root@ceph2 ceph]# ll

[root@ceph2 ceph]# ceph -s --cluster backup

cluster:

id: 51dda18c--4edb-8ba9-27330ead81a7

health: HEALTH_OK

services:

mon: daemons, quorum ceph5

mgr: ceph5(active)

osd: osds: up, in

data:

pools: pools, pgs

objects: objects, bytes

usage: MB used, MB / MB avail

pgs: active+clean
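Steps 1.2 and 1.3 are the same operation in opposite directions, so they can be sketched as one hypothetical helper, push_cluster_files, assuming root SSH access between ceph2 and ceph5. It prints the commands unless DRY_RUN=0 is set; the usage comments show which host each call is meant to run on.

```shell
#!/bin/sh
# Print commands by default; set DRY_RUN=0 to actually execute them.
run() { if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else echo "+ $*"; fi; }

# Copy one cluster's conf + admin keyring to another host, give them to the
# ceph user, then check that the remote host can reach that cluster.
push_cluster_files() {   # $1 = cluster name, $2 = destination host
  conf="/etc/ceph/$1.conf"
  key="/etc/ceph/$1.client.admin.keyring"
  run scp "$conf" "$key" "$2:/etc/ceph/"
  run ssh "$2" chown ceph:ceph "$conf" "$key"
  run ssh "$2" ceph -s --cluster "$1"
}

# On ceph2 (step 1.2): push_cluster_files ceph ceph5
# On ceph5 (step 1.3): push_cluster_files backup ceph2
```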

II. Configure mirroring in pool mode

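For one-way replication, only the primary cluster's configuration file needs the following change; for two-way replication, change it on both clusters. (With one-way backup only, the backup cluster does not need it.) The setting turns on journaling, which mirroring requires, by default for newly created images: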

rbd_default_features = 125
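The value 125 is a bitmask of RBD feature flags. With the standard bit values (layering 1, striping 2, exclusive-lock 4, object-map 8, fast-diff 16, deep-flatten 32, journaling 64), 125 = 1 + 4 + 8 + 16 + 32 + 64, that is, the Luminous default of 61 plus journaling. The setting is assumed to go in a client-side section of ceph.conf such as [client] or [global]. A small decoder sketch; decode_rbd_features is a hypothetical name and bits above journaling are deliberately ignored.

```shell
#!/bin/sh
# Decode an rbd_default_features bitmask into feature names.
# Only the bits up to journaling (64) are handled here.
decode_rbd_features() {
  mask=$1
  names=""
  for pair in 1:layering 2:striping 4:exclusive-lock 8:object-map \
              16:fast-diff 32:deep-flatten 64:journaling; do
    bit=${pair%%:*}
    name=${pair#*:}
    if [ $(( $mask & $bit )) -ne 0 ]; then
      names="$names $name"
    fi
  done
  echo "${names# }"   # drop the leading space
}

# decode_rbd_features 125
# prints: layering exclusive-lock object-map fast-diff deep-flatten journaling
```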

Alternatively, leave the configuration file unchanged and specify the required features when creating each image.
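A sketch of the per-image alternative, using repeated --image-feature flags the same way this article later does for ceph5-rbd. journaling needs exclusive-lock, so both are requested. create_mirrorable_image and the image name in the usage line are hypothetical; the script prints the command unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print commands by default; set DRY_RUN=0 to actually execute them.
run() { if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else echo "+ $*"; fi; }

# Create an image with the features journal-based mirroring needs,
# without relying on rbd_default_features.
create_mirrorable_image() {   # $1 = pool/image, $2 = size, $3 = cluster
  run rbd create --size "$2" "$1" \
    --image-feature exclusive-lock \
    --image-feature journaling \
    --cluster "${3:-ceph}"
}

# create_mirrorable_image rbdmirror/test2 1G ceph
```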

2.1 Create the mirrored pool

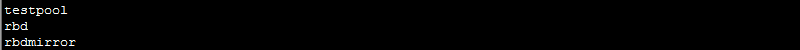

[root@ceph2 ceph]# ceph osd pool create rbdmirror 32 32

[root@ceph5 ceph]# ceph osd pool create rbdmirror 32 32

[root@ceph2 ceph]# yum -y install rbd-mirror

[root@ceph2 ceph]# rbd mirror pool enable rbdmirror pool --cluster ceph

[root@ceph2 ceph]# rbd mirror pool enable rbdmirror pool --cluster backup
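The pool has to exist and be mirror-enabled on both clusters. A sketch that does both from one node holding both clusters' files, passing --cluster explicitly on every command so nothing depends on which cluster a host treats as its default. It also runs rbd pool init, because Luminous raises a health warning for pools without an application tag. setup_pool_mirroring is a hypothetical helper that prints the commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print commands by default; set DRY_RUN=0 to actually execute them.
run() { if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else echo "+ $*"; fi; }

setup_pool_mirroring() {   # $1 = pool name, $2 = pg count
  for c in ceph backup; do
    run ceph osd pool create "$1" "$2" "$2" --cluster "$c"
    # Tag the pool for RBD use (avoids the "application not enabled" warning).
    run rbd pool init "$1" --cluster "$c"
    # Pool mode: every journaling-enabled image in the pool is mirrored.
    run rbd mirror pool enable "$1" pool --cluster "$c"
  done
}

# setup_pool_mirroring rbdmirror 32
```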

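2.2 Add peer clusters

The ceph and backup clusters must be added as peers of each other so that the rbd-mirror daemon can find the corresponding pool on its peer cluster.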

[root@ceph2 ceph]# rbd mirror pool peer add rbdmirror client.admin@ceph --cluster backup

[root@ceph2 ceph]# rbd mirror pool peer add rbdmirror client.admin@backup --cluster ceph

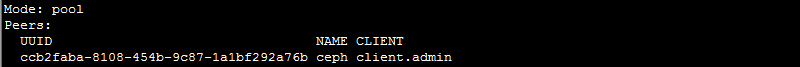

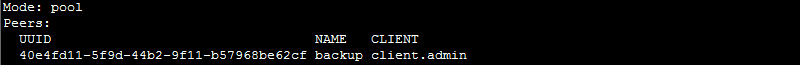

[root@ceph2 ceph]# rbd mirror pool info --pool=rbdmirror --cluster backup

[root@ceph2 ceph]# rbd mirror pool info --pool=rbdmirror --cluster ceph

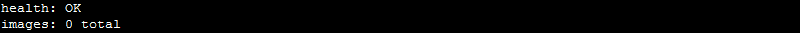

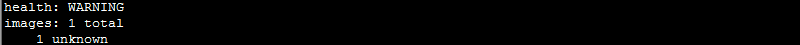

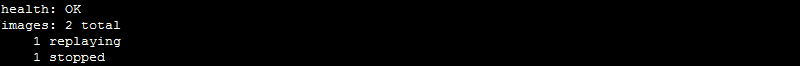

[root@ceph2 ceph]# rbd mirror pool status rbdmirror

[root@ceph2 ceph]# rbd mirror pool status rbdmirror --cluster backup
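Peer registration is symmetric: each cluster names the other as its peer. The sketch below captures that for this two-cluster lab and can be reused for the rbd pool in section III. peer_of and add_peers are hypothetical helpers, client.admin is assumed as the peer user as in the commands above, and the commands are only printed unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print commands by default; set DRY_RUN=0 to actually execute them.
run() { if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else echo "+ $*"; fi; }

# The peer of each cluster in this two-cluster lab.
peer_of() {
  case "$1" in
    ceph)   echo backup ;;
    backup) echo ceph ;;
    *)      return 1 ;;
  esac
}

# Register each cluster as the other's peer for one pool.
add_peers() {   # $1 = pool
  for c in ceph backup; do
    run rbd mirror pool peer add "$1" "client.admin@$(peer_of "$c")" --cluster "$c"
  done
}

# add_peers rbdmirror
```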

2.3 Create an RBD image and enable journaling

Create an image named test in the rbdmirror pool:

[root@ceph2 ceph]# rbd create --size 1G rbdmirror/test

[root@ceph2 ceph]# rbd ls rbdmirror

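Listing the pool on the secondary returns nothing: the image has not been replicated, because journaling is not enabled on it and no rbd-mirror daemon is running on the secondary yet.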

[root@ceph2 ceph]# rbd ls rbdmirror --cluster backup

[root@ceph2 ceph]# rbd info rbdmirror/test

rbd image 'test':

size MB in objects

order ( kB objects)

block_name_prefix: rbd_data.fbcd643c9869

format:

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

flags:

create_timestamp: Mon Mar ::

[root@ceph2 ceph]# rbd feature enable rbdmirror/test journaling

[root@ceph2 ceph]# rbd info rbdmirror/test

rbd image 'test':

size MB in objects

order ( kB objects)

block_name_prefix: rbd_data.fbcd643c9869

format:

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten, journaling

flags:

create_timestamp: Mon Mar ::

journal: fbcd643c9869

mirroring state: enabled

mirroring global id: 0f6993f2-8c34-4c49-a546-61fcb452ff40

mirroring primary: true # true on the primary
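Since replication only starts for images whose features include journaling, a quick check on rbd info output is useful before waiting for a sync that will never happen. A sketch that parses the features: line from text on stdin; has_feature is a hypothetical name and the parsing assumes the plain-text rbd info layout shown above.

```shell
#!/bin/sh
# Succeed if the `rbd info` text on stdin lists the given feature.
has_feature() {   # $1 = feature name
  grep '^[[:space:]]*features:' |
    sed 's/^[[:space:]]*features:[[:space:]]*//' |
    tr ',' '\n' |
    sed 's/^[[:space:]]*//; s/[[:space:]]*$//' |
    grep -qxF "$1"
}

# rbd info rbdmirror/test | has_feature journaling ||
#   rbd feature enable rbdmirror/test journaling
```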

2.4 Start the rbd-mirror daemon on the secondary

[root@ceph2 ceph]# rbd mirror pool status rbdmirror

2.5 Start it manually in the foreground for debugging

[root@ceph5 ceph]# rbd-mirror -d --setuser ceph --setgroup ceph --cluster backup -i admin

-- ::39.521126 7f3a7f7c5340 set uid:gid to : (ceph:ceph)

-- ::39.521150 7f3a7f7c5340 ceph version 12.2.-.el7cp (c6d85fd953226c9e8168c9abe81f499d66cc2716) luminous (stable), process (unknown), pid

-- ::39.544922 7f3a7f7c5340 mgrc service_daemon_register rbd-mirror.admin metadata {arch=x86_64,ceph_version=ceph version 12.2.-.el7cp (c6d85fd953226c9e8168c9abe81f499d66cc2716) luminous (stable),cpu=QEMU Virtual CPU version 1.5.,distro=rhel,distro_description=Red Hat Enterprise Linux Server 7.4 (Maipo),distro_version=7.4,hostname=ceph5,instance_id=,kernel_description=# SMP Thu Dec :: EST ,kernel_version=3.10.-693.11..el7.x86_64,mem_swap_kb=,mem_total_kb=,os=Linux}

-- ::42.743067 7f3a6bfff700 - rbd::mirror::ImageReplayer: 0x7f3a50016c70 [/0f6993f2-8c34-4c49-a546-61fcb452ff40] handle_init_remote_journaler: image_id=1037140e0f76, m_client_meta.image_id=1037140e0f76, client.state=connected

[root@ceph5 ceph]# systemctl start ceph-rbd-mirror@admin

[root@ceph5 ceph]# ps aux|grep rbd

ceph 1.6 1.5 ? Ssl : : /usr/bin/rbd-mirror -f --cluster backup --id admin --setuser ceph --setgroup ceph
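The systemd unit is an instance unit whose instance name is the client ID (admin here). In the stock packaging, as we understand it, ceph-rbd-mirror@.service runs rbd-mirror with --cluster ${CLUSTER} --id %i, where CLUSTER defaults to ceph and can be overridden in /etc/sysconfig/ceph; the --cluster backup in the ps output on ceph5 is what such an override produces. Treat these unit details as an assumption and check them with systemctl cat ceph-rbd-mirror@admin on your version. The hypothetical helpers below rebuild the expected command line and compare it with the running process.

```shell
#!/bin/sh
# Command line the packaged unit is assumed to run for a cluster/client id.
expected_rbd_mirror_cmd() {   # $1 = cluster, $2 = client id
  echo "/usr/bin/rbd-mirror -f --cluster $1 --id $2 --setuser ceph --setgroup ceph"
}

# Succeed if a running rbd-mirror process has exactly that command line.
# pgrep -a prints "PID full-command-line"; cut drops the PID.
rbd_mirror_running_as() {     # $1 = cluster, $2 = client id
  want=$(expected_rbd_mirror_cmd "$1" "$2")
  pgrep -a rbd-mirror | cut -d' ' -f2- | grep -qxF "$want"
}

# On ceph5: rbd_mirror_running_as backup admin && echo "rbd-mirror OK"
```

To have the daemon start at boot as well, enable the unit with systemctl enable ceph-rbd-mirror@admin.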

2.6 Verify on the secondary

The image has been replicated:

[root@ceph5 ceph]# rbd ls rbdmirror

[root@ceph5 ceph]# rbd info rbdmirror/test

rbd image 'test':

size MB in objects

order ( kB objects)

block_name_prefix: rbd_data.1037140e0f76

format:

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten, journaling

flags:

create_timestamp: Mon Mar ::

journal: 1037140e0f76

mirroring state: enabled

mirroring global id: 0f6993f2-8c34-4c49-a546-61fcb452ff40

mirroring primary: false # false on the secondary

At this point, the setup amounts to one-way replication.
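Which copy is primary can be read from the mirroring primary: line of rbd info, so both ends can be checked in one pass from a node holding both clusters' files. mirror_primary is a hypothetical helper that prints true or false, or nothing when the image is not mirrored.

```shell
#!/bin/sh
# Print the value of the "mirroring primary:" line from `rbd info` text on
# stdin; prints nothing if the line is absent (mirroring disabled).
mirror_primary() {
  sed -n 's/^[[:space:]]*mirroring primary:[[:space:]]*\([a-z]*\).*/\1/p'
}

# for c in ceph backup; do
#   printf '%s: ' "$c"; rbd info rbdmirror/test --cluster "$c" | mirror_primary
# done
```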

2.7 Configure two-way replication

Start rbd-mirror manually on the primary:

[root@ceph2 ceph]# /usr/bin/rbd-mirror -f --cluster ceph --id admin --setuser ceph --setgroup ceph -d

[root@ceph2 ceph]# systemctl restart ceph-rbd-mirror@admin

[root@ceph2 ceph]# ps aux|grep rbd

ceph 1.0 0.4 ? Ssl : : /usr/bin/rbd-mirror -f --cluster ceph --id admin --setuser ceph --setgroup ceph

Next, use the secondary as the primary side for a new image by creating it on ceph5:

[root@ceph5 ceph]# rbd create --size 1G rbdmirror/ceph5-rbd --image-feature journaling --image-feature exclusive-lock

[root@ceph5 ceph]# rbd info rbdmirror/ceph5-rbd

rbd image 'ceph5-rbd':

size MB in objects

order ( kB objects)

block_name_prefix: rbd_data.103e74b0dc51

format:

features: exclusive-lock, journaling

flags:

create_timestamp: Mon Mar ::

journal: 103e74b0dc51

mirroring state: enabled

mirroring global id: c41dc1d8-43e5-44eb-ba3f-87dbb17f0d15

mirroring primary: true

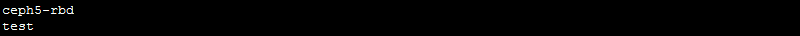

[root@ceph5 ceph]# rbd ls rbdmirror

On ceph2, the former primary now holds the non-primary copy of this image:

[root@ceph2 ceph]# rbd info rbdmirror/ceph5-rbd

rbd image 'ceph5-rbd':

size MB in objects

order ( kB objects)

block_name_prefix: rbd_data.fbe541a7c4c9

format:

features: exclusive-lock, journaling

flags:

create_timestamp: Mon Mar ::

journal: fbe541a7c4c9

mirroring state: enabled

mirroring global id: c41dc1d8-43e5-44eb-ba3f-87dbb17f0d15

mirroring primary: false

View pool-level replication status:

[root@ceph2 ceph]# rbd mirror pool status --pool=rbdmirror

View the status of an image:

[root@ceph2 ceph]# rbd mirror image status rbdmirror/test

test:

global_id: 0f6993f2-8c34-4c49-a546-61fcb452ff40

state: up+stopped

description: local image is primary

last_update: -- ::

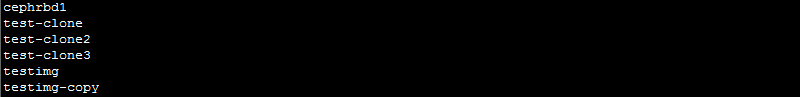
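In rbd mirror image status, the state field combines daemon health (up or down) with the replay state. On the primary side up+stopped is expected, as above with "local image is primary"; on the non-primary side a healthy journal-based image normally shows up+replaying, or up+syncing during the initial copy. A sketch of a checker over that text; mirror_image_state and mirror_state_ok are hypothetical names.

```shell
#!/bin/sh
# Extract the "state:" value from `rbd mirror image status` text on stdin.
mirror_image_state() {
  sed -n 's/^[[:space:]]*state:[[:space:]]*//p' | head -n 1
}

# Judge a state for the given role ("primary" or "secondary").
mirror_state_ok() {   # $1 = role, $2 = state
  case "$1:$2" in
    primary:up+stopped) return 0 ;;
    secondary:up+replaying|secondary:up+syncing) return 0 ;;
    *) return 1 ;;
  esac
}

# state=$(rbd mirror image status rbdmirror/test | mirror_image_state)
# mirror_state_ok primary "$state" || echo "unexpected state: $state"
```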

III. Two-way replication at the image level

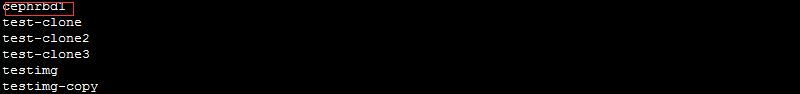

Mirror the existing image cephrbd1 in the rbd pool:

[root@ceph2 ceph]# rbd ls

3.1 First enable image-mode mirroring on the rbd pool

[root@ceph2 ceph]# rbd mirror pool enable rbd image --cluster ceph

[root@ceph2 ceph]# rbd mirror pool enable rbd image --cluster backup

3.2 Add peers

[root@ceph2 ceph]# rbd mirror pool peer add rbd client.admin@ceph --cluster backup

[root@ceph2 ceph]# rbd mirror pool peer add rbd client.admin@backup --cluster ceph

[root@ceph2 ceph]# ceph osd pool ls

[root@ceph2 ceph]# rbd info cephrbd1

rbd image 'cephrbd1':

size MB in objects

order ( kB objects)

block_name_prefix: rbd_data.fb2074b0dc51

format:

features: layering

flags:

create_timestamp: Sun Mar ::

3.3 Enable journaling

The journaling feature depends on the exclusive-lock feature, so enable exclusive-lock first:

[root@ceph2 ceph]# rbd feature enable cephrbd1 exclusive-lock

[root@ceph2 ceph]# rbd feature enable cephrbd1 journaling

[root@ceph2 ceph]# rbd info cephrbd1

rbd image 'cephrbd1':

size MB in objects

order ( kB objects)

block_name_prefix: rbd_data.fb2074b0dc51

format:

features: layering, exclusive-lock, journaling

flags:

create_timestamp: Sun Mar ::

journal: fb2074b0dc51

mirroring state: disabled
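Because of that dependency, the two feature enable commands must run in order, and a feature the image already has should be skipped (rbd reports an error when asked to enable an already-enabled feature). A sketch that takes the image's current feature list, as printed on the features: line of rbd info, and emits only the missing steps in dependency order. enable_mirroring_features is a hypothetical helper; commands are printed unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print commands by default; set DRY_RUN=0 to actually execute them.
run() { if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else echo "+ $*"; fi; }

# Enable exclusive-lock, then journaling, skipping any already present.
enable_mirroring_features() {   # $1 = pool/image, $2 = current features (comma list)
  for f in exclusive-lock journaling; do          # dependency order
    case ",$(echo "$2" | tr -d ' ')," in
      *",$f,"*) ;;                                # already enabled
      *) run rbd feature enable "$1" "$f" || return 1 ;;
    esac
  done
}

# cur=$(rbd info rbd/cephrbd1 | sed -n 's/^[[:space:]]*features:[[:space:]]*//p')
# enable_mirroring_features rbd/cephrbd1 "$cur"
```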

3.4 Enable mirroring on the image

[root@ceph2 ceph]# rbd mirror image enable rbd/cephrbd1

[root@ceph2 ceph]# rbd info rbd/cephrbd1

rbd image 'cephrbd1':

size MB in objects

order ( kB objects)

block_name_prefix: rbd_data.fb2074b0dc51

format:

features: layering, exclusive-lock, journaling

flags:

create_timestamp: Sun Mar ::

journal: fb2074b0dc51

mirroring state: enabled

mirroring global id: cfa19d96-1c41-47e3-bae0-0b9acfdcb72d

mirroring primary: true

[root@ceph2 ceph]# rbd ls

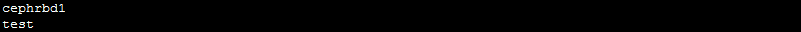
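Unlike pool mode, image mode replicates nothing just because the pool is mirror-enabled: each image must be enabled individually with rbd mirror image enable, as done above for cephrbd1. For several images that becomes a loop. mirror_images and the second image name in the test are hypothetical; the commands are printed unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print commands by default; set DRY_RUN=0 to actually execute them.
run() { if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else echo "+ $*"; fi; }

# Enable mirroring for each named image in an image-mode pool.
# Each image must already have exclusive-lock and journaling enabled.
mirror_images() {   # $1 = pool, remaining args = image names
  pool=$1; shift
  for img in "$@"; do
    run rbd mirror image enable "$pool/$img" --cluster ceph
  done
}

# mirror_images rbd cephrbd1
```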

3.5 Verify on the secondary

[root@ceph5 ceph]# rbd ls

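Replication succeeded.

If the primary fails, the secondary can be promoted to primary. In this lab the primary is still healthy, so it is demoted first and then the secondary is promoted.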

3.6 Demote and promote

[root@ceph2 ceph]# rbd mirror image demote rbd/cephrbd1

Image demoted to non-primary

[root@ceph2 ceph]# rbd info cephrbd1

rbd image 'cephrbd1':

size MB in objects

order ( kB objects)

block_name_prefix: rbd_data.fb2074b0dc51

format:

features: layering, exclusive-lock, journaling

flags:

create_timestamp: Sun Mar ::

journal: fb2074b0dc51

mirroring state: enabled

mirroring global id: cfa19d96-1c41-47e3-bae0-0b9acfdcb72d

mirroring primary: false

[root@ceph5 ceph]# rbd mirror image promote cephrbd1

Image promoted to primary

[root@ceph5 ceph]# rbd info cephrbd1

rbd image 'cephrbd1':

size MB in objects

order ( kB objects)

block_name_prefix: rbd_data.1043836c40e

format:

features: layering, exclusive-lock, journaling

flags:

create_timestamp: Mon Mar ::

journal: 1043836c40e

mirroring state: enabled

mirroring global id: cfa19d96-1c41-47e3-bae0-0b9acfdcb72d

mirroring primary: true # now primary; clients can map the image here
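The demote-then-promote sequence above is a planned switchover. If the old primary is unreachable it cannot be demoted, so rbd mirror image promote needs --force on the surviving cluster; once the old site returns, its copy must be demoted and resynchronized with rbd mirror image resync before it can follow the new primary. A sketch of both cases; switch_primary is a hypothetical helper and prints the commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print commands by default; set DRY_RUN=0 to actually execute them.
run() { if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else echo "+ $*"; fi; }

# Move the primary role of one image from cluster $2 to cluster $3.
# planned: demote the old primary first, then promote the new one.
# force:   the old primary is down, so only a forced promote is possible.
switch_primary() {   # $1 = pool/image, $2 = old, $3 = new, $4 = planned|force
  if [ "${4:-planned}" = force ]; then
    run rbd mirror image promote --force "$1" --cluster "$3"
  else
    run rbd mirror image demote "$1" --cluster "$2" || return 1
    run rbd mirror image promote "$1" --cluster "$3"
  fi
}

# Planned switchover as in 3.6: switch_primary rbd/cephrbd1 ceph backup
# Primary site lost:            switch_primary rbd/cephrbd1 ceph backup force
# When the old site returns, re-join its copy as non-primary:
#   rbd mirror image demote rbd/cephrbd1 --cluster ceph
#   rbd mirror image resync rbd/cephrbd1 --cluster ceph
```

The pool-level commands in 3.7 (rbd mirror pool demote and rbd mirror pool promote) follow the same order.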

3.7 Pool-level promotion and demotion

[root@ceph2 ceph]# rbd mirror pool demote rbdmirror

Demoted mirrored images

[root@ceph2 ceph]# rbd info rbdmirror/ceph5-rbd

rbd image 'ceph5-rbd':

size MB in objects

order ( kB objects)

block_name_prefix: rbd_data.fbe541a7c4c9

format:

features: exclusive-lock, journaling

flags:

create_timestamp: Mon Mar ::

journal: fbe541a7c4c9

mirroring state: enabled

mirroring global id: c41dc1d8-43e5-44eb-ba3f-87dbb17f0d15

mirroring primary: false

[root@ceph5 ceph]# rbd mirror pool promote rbdmirror

Promoted mirrored images

[root@ceph5 ceph]# rbd ls rbdmirror

ceph5-rbd

test

[root@ceph5 ceph]# rbd info rbdmirror/ceph5-rbd

rbd image 'ceph5-rbd':

size MB in objects

order ( kB objects)

block_name_prefix: rbd_data.103e74b0dc51

format:

features: exclusive-lock, journaling

flags:

create_timestamp: Mon Mar ::

journal: 103e74b0dc51

mirroring state: enabled

mirroring global id: c41dc1d8-43e5-44eb-ba3f-87dbb17f0d15

mirroring primary: true

[root@ceph5 ceph]# rbd info rbdmirror/test

rbd image 'test':

size MB in objects

order ( kB objects)

block_name_prefix: rbd_data.1037140e0f76

format:

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten, journaling

flags:

create_timestamp: Mon Mar ::

journal: 1037140e0f76

mirroring state: enabled

mirroring global id: 0f6993f2-8c34-4c49-a546-61fcb452ff40

mirroring primary: true

3.8 Remove mirroring

[root@ceph2 ceph]# rbd mirror pool info --pool=rbdmirror

Mode: pool

Peers:

UUID NAME CLIENT

40e4fd11-5f9d-44b2-9f11-b57968be62cf backup client.admin

[root@ceph2 ceph]# rbd mirror pool info --pool=rbdmirror --cluster backup

Mode: pool

Peers:

UUID NAME CLIENT

ccb2faba--454b-9c87-1a1bf292a76b ceph client.admin
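rbd mirror pool peer remove takes a peer UUID, and each cluster has its own: the UUID must be the one listed by rbd mirror pool info on the same cluster the remove command targets. A sketch that pulls it out of the Peers table by peer name; peer_uuid is a hypothetical helper, and the parsing assumes the plain-text UUID NAME CLIENT layout shown above.

```shell
#!/bin/sh
# Print the UUID of the peer named $1 from `rbd mirror pool info` text on stdin.
# Skips the header row; expects rows of the form "UUID NAME CLIENT".
peer_uuid() {   # $1 = peer cluster name
  awk -v name="$1" 'NF == 3 && $2 == name && $1 != "UUID" { print $1 }'
}

# uuid=$(rbd mirror pool info --pool=rbdmirror --cluster backup | peer_uuid ceph)
# rbd mirror pool peer remove --pool=rbdmirror "$uuid" --cluster backup
```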

[root@ceph5 ceph]# rbd mirror pool peer remove --pool=rbdmirror 761872b4-03d8-4f0a-972e-dc61575a785f --cluster backup

[root@ceph5 ceph]# rbd mirror image disable rbd/cephrbd1 --force

[root@ceph5 ceph]# rbd info cephrbd1

rbd image 'cephrbd1':

size MB in objects

order ( kB objects)

block_name_prefix: rbd_data.1043836c40e

format:

features: layering, exclusive-lock, journaling

flags:

create_timestamp: Mon Mar ::

journal: 1043836c40e

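mirroring state: disabled # mirroring is now disabled on the image

Cross-cluster access and backup replication between the Ceph clusters are now configured!

Author's note: the content of this article comes mainly from teacher Yan Wei (晏威) of Yutian Education (誉天教育), and the operations were verified by the author in a lab. To repost, please obtain official permission from Yutian Education (http://www.yutianedu.com/) or permission from Mr. Yan himself (https://www.cnblogs.com/breezey/). Thank you!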
