keepalived (3): Building a highly available web load-balancing cluster with keepalived + nginx

1. Building a highly available web load-balancing cluster with keepalived + nginx

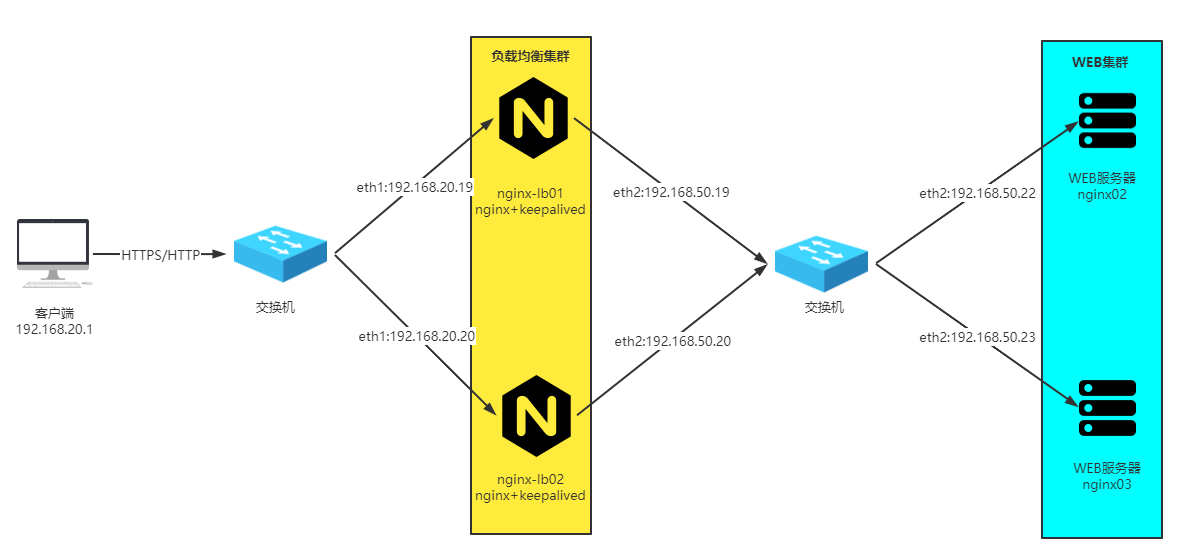

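As a load balancer, nginx distributes traffic across the backend web cluster, but nginx itself is not highly available and is therefore a single point of failure. Pairing nginx with keepalived removes that single point of failure and makes the whole architecture highly available.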

1.1 Requirements and environment

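Design requirements for highly available nginx:

In the nginx + keepalived model, the state of nginx must be monitored. When the nginx service fails, keepalived has to lower its priority so that the VIP fails over to the other node. keepalived's track_script feature provides this, while health checking of the backend real servers (RS) is left to nginx.

nginx must also be running whenever keepalived changes state, so the notify.sh script starts the nginx service once more each time keepalived transitions to BACKUP or MASTER.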

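The environment is as follows:

- Client: address 192.168.20.1

- Load-balancing cluster

  - Node 1: hostname nginx-lb01; addresses eth1: 192.168.20.19 (external), eth2: 192.168.50.19 (internal)

  - Node 2: hostname nginx-lb02; addresses eth1: 192.168.20.20 (external), eth2: 192.168.50.20 (internal)

  - The load-balancing cluster exposes two virtual addresses (VIPs), 192.168.20.28 and 192.168.20.29. nginx-lb01 is the MASTER for 192.168.20.28 and nginx-lb02 is the MASTER for 192.168.20.29. DNS round-robin in front of the cluster can spread user requests across both load-balancer nodes, so the two nodes share the load and back each other up.

- Web cluster

  - Node 1: hostname nginx02, address 192.168.50.22

  - Node 2: hostname nginx03, address 192.168.50.23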

1.2 Web cluster deployment

Deploying the nginx02 node

#1. The nginx configuration file:

[root@nginx02 ~]# cat /etc/nginx/conf.d/xuzhichao.conf

server {

listen 80 default_server;

server_name www.xuzhichao.com;

access_log /var/log/nginx/access_xuzhichao.log access_json;

charset utf-8,gbk;

keepalive_timeout 65;

# Hotlink protection

valid_referers none blocked server_names *.b.com b.* ~\.baidu\. ~\.google\.;

if ( $invalid_referer ) {

return 403;

}

# Browser favicon

location = /favicon.ico {

root /data/nginx/xuzhichao;

}

location / {

root /data/nginx/xuzhichao;

index index.html index.php;

}

location ~ \.php$ {

root /data/nginx/xuzhichao;

# FastCGI reverse proxy

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

fastcgi_param HTTPS on;

fastcgi_hide_header X-Powered-By;

include fastcgi_params;

}

location = /nginx_status {

access_log off;

allow 192.168.20.0/24;

deny all;

stub_status;

}

}

#2. nginx document root:

[root@nginx02 ~]# ll /data/nginx/xuzhichao/index.html

-rw-r--r-- 1 nginx nginx 25 Jun 28 00:04 /data/nginx/xuzhichao/index.html

[root@nginx02 ~]# cat /data/nginx/xuzhichao/index.html

node1.xuzhichao.com page

#3. Start the nginx service:

[root@nginx02 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@nginx02 ~]# systemctl start nginx.service

#4. Test access from a load-balancer node:

[root@nginx-lb02 ~]# curl -Hhost:www.xuzhichao.com 192.168.50.22

node1.xuzhichao.com page

Deploying the nginx03 node

#1. The nginx configuration file:

[root@nginx03 ~]# cat /etc/nginx/conf.d/xuzhichao.conf

server {

listen 80 default_server;

server_name www.xuzhichao.com;

access_log /var/log/nginx/access_xuzhichao.log access_json;

charset utf-8,gbk;

keepalive_timeout 65;

# Hotlink protection

valid_referers none blocked server_names *.b.com b.* ~\.baidu\. ~\.google\.;

if ( $invalid_referer ) {

return 403;

}

# Browser favicon

location = /favicon.ico {

root /data/nginx/xuzhichao;

}

location / {

root /data/nginx/xuzhichao;

index index.html index.php;

}

location ~ \.php$ {

root /data/nginx/xuzhichao;

# FastCGI reverse proxy

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

fastcgi_param HTTPS on;

fastcgi_hide_header X-Powered-By;

include fastcgi_params;

}

location = /nginx_status {

access_log off;

allow 192.168.20.0/24;

deny all;

stub_status;

}

}

#2. nginx document root:

[root@nginx03 ~]# ll /data/nginx/xuzhichao/index.html

-rw-r--r-- 1 nginx nginx 25 Jun 28 00:04 /data/nginx/xuzhichao/index.html

[root@nginx03 ~]# cat /data/nginx/xuzhichao/index.html

node2.xuzhichao.com page

#3. Start the nginx service:

[root@nginx03 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@nginx03 ~]# systemctl start nginx.service

#4. Test access from a load-balancer node:

[root@nginx-lb02 ~]# curl -Hhost:www.xuzhichao.com 192.168.50.23

node2.xuzhichao.com page

1.3 Load-balancing cluster deployment

The nginx configuration files on nginx-lb01 and nginx-lb02 are identical:

#1. The nginx configuration file:

[root@nginx-lb01 certs]# cat /etc/nginx/conf.d/xuzhichao.conf

upstream webserver {

server 192.168.50.22 fail_timeout=5s max_fails=3;

server 192.168.50.23 fail_timeout=5s max_fails=3;

keepalive 32;

}

server {

listen 443 ssl;

listen 80;

server_name www.xuzhichao.com;

access_log /var/log/nginx/access_xuzhichao.log access_json;

ssl_certificate /etc/nginx/certs/xuzhichao.crt;

ssl_certificate_key /etc/nginx/certs/xuzhichao.key;

ssl_session_cache shared:ssl_cache:30m;

ssl_session_timeout 10m;

valid_referers none blocked server_names *.b.com b.* ~\.baidu\. ~\.google\.;

if ( $invalid_referer ) {

return 403;

}

location / {

if ( $scheme = http ) {

rewrite /(.*) https://www.xuzhichao.com/$1 permanent;

}

proxy_pass http://webserver;

include proxy_params;

}

}

[root@nginx-lb01 certs]# cat /etc/nginx/proxy_params

proxy_set_header host $http_host;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_connect_timeout 30;

proxy_send_timeout 60;

proxy_read_timeout 60;

proxy_buffering on;

proxy_buffer_size 64k;

proxy_buffers 4 64k;

#2. Start nginx:

[root@nginx-lb01 certs]# systemctl start nginx.service

#3. Test each of the two load balancers from the client:

# Test load balancing through nginx-lb01

[root@xuzhichao ~]# for i in {1..10} ;do curl -k -Hhost:www.xuzhichao.com https://192.168.20.19 ;done

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

# Test load balancing through nginx-lb02

[root@xuzhichao ~]# for i in {1..10} ;do curl -k -Hhost:www.xuzhichao.com https://192.168.20.20 ;done

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page
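
The alternating node1/node2 responses above can also be counted rather than read by eye. The following is a minimal sketch, not part of the original setup: `tally_backends` is a hypothetical helper name, and it assumes each response body is a single line, as with the test pages used here.

```shell
# tally_backends: read response bodies on stdin (one per line) and print
# "<count> <body>" for each distinct body, sorted by body text.
tally_backends() {
    sort | uniq -c | awk '{
        count = $1                 # uniq -c puts the count in the first field
        $1 = ""                    # drop the count from the record
        sub(/^ +/, "")             # and the separator left in front of the body
        print count, $0
    }'
}

# Example (needs the lab network from this article):
#   for i in $(seq 1 10); do
#       curl -sk -H 'Host: www.xuzhichao.com' https://192.168.20.19
#   done | tally_backends
```

With the round-robin upstream shown above, the two backends would be expected to receive roughly equal counts.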

1.4 keepalived deployment

keepalived deployment on the nginx-lb01 node:

#1. keepalived configuration file:

[root@nginx-lb01 keepalived]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id keepalived01

script_user root

enable_script_security

vrrp_mcast_group4 224.0.0.19

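}

vrrp_script chk_down {

script "/etc/keepalived/chk_down.sh" # Check command: either an inline command or a script, which must be executable. Here, if /etc/keepalived/down exists, the VRRP priority is lowered by 50

weight -50 # A script exit status of 1 lowers the priority by 50

interval 1 # Check interval: run the script every 1 s

fall 3 # Three consecutive failures mark the check as failed and lower the priority by 50

rise 3 # Three consecutive successes mark the check as passed and restore the priority

}

# Note: lowering the weight from inside the check script itself has no effect. If no weight is set in the vrrp_script block, a script exit status of 1 puts keepalived straight into the FAULT state.

vrrp_script chk_nginx {

script "/etc/keepalived/chk_nginx.sh" # Calls the check script, which must be executable

interval 5 # Note: the script runs every 5 s and must finish within 5 s; otherwise it is interrupted and run again

}

vrrp_instance VI_1 {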

state MASTER

interface eth1

virtual_router_id 51

priority 120

advert_int 3

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.20.28/24 dev eth1

}

track_interface {

eth2

eth0

}

track_script {

chk_down

chk_nginx

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

vrrp_instance VI_2 {

state BACKUP

interface eth1

virtual_router_id 52

priority 100

advert_int 3

authentication {

auth_type PASS

auth_pass 2222

}

virtual_ipaddress {

192.168.20.29/24 dev eth1

}

track_interface {

eth2

eth0

}

track_script {

chk_down

chk_nginx

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

#2. The chk_nginx script:

[root@nginx-lb01 keepalived]# cat /etc/keepalived/chk_nginx.sh

#!/bin/bash

nginxpid=$(pidof nginx | wc -l)

#1. Check whether nginx is alive; if it is not, try to start it

if [ $nginxpid -eq 0 ];then

systemctl start nginx

sleep 2

#2. After waiting 2 seconds, read the nginx state again

nginxpid=$(pidof nginx | wc -l)

#3. Check again; if nginx is still not alive, stop keepalived so that the virtual address fails over, then leave the script

if [ $nginxpid -eq 0 ];then

systemctl stop keepalived

fi

fi

#3. The notify.sh file:

[root@nginx-lb01 keepalived]# cat /etc/keepalived/notify.sh

#!/bin/bash

contact='root@localhost'

notify() {

local mailsubject="$(hostname) to be $1, vip floating"

local mailbody="$(date +'%F %T'): vrrp transition, $(hostname) changed to be $1"

echo "$mailbody" | mail -s "$mailsubject" $contact

}

case $1 in

master)

notify master

systemctl start nginx # Runs on a keepalived state change: starts nginx so that it is up and able to serve requests

;;

backup)

notify backup

systemctl start nginx

;;

fault)

notify fault

;;

*)

echo "Usage: $(basename $0) {master|backup|fault}"

exit 1

;;

esac

#4. The chk_down script:

[root@nginx-lb01 keepalived]# cat /etc/keepalived/chk_down.sh

#!/bin/bash

[[ ! -f /etc/keepalived/down ]]

#5. Add execute permission:

[root@nginx-lb01 keepalived]# chmod u+x chk_nginx.sh

[root@nginx-lb01 keepalived]# chmod u+x notify.sh

[root@nginx-lb01 keepalived]# chmod u+x chk_down.sh

#6. Restart the keepalived service:

[root@nginx-lb01 keepalived]# systemctl restart keepalived.service

#7. Check the VIP:

[root@nginx-lb01 keepalived]# ip add show eth1

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:33:71:d0 brd ff:ff:ff:ff:ff:ff

inet 192.168.20.19/24 brd 192.168.20.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet 192.168.20.28/24 scope global secondary eth1

valid_lft forever preferred_lft forever

inet6 fe80::f0da:450f:5a80:de8b/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::7f20:c9d7:cb3e:bb8e/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::1a77:baea:91d8:79c7/64 scope link noprefixroute

valid_lft forever preferred_lft forever
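
The chk_nginx.sh script above stops keepalived outright when nginx cannot be restarted, which typically means keepalived has to be started again by hand once nginx is repaired. A minimal sketch of an alternative follows; it is not the author's script. It assumes that the check reports failure only through its exit status and leaves the state change to keepalived (as noted in the configuration above, a failing tracked script without a weight puts the instance into the FAULT state). The function names and the `CHK_WAIT` variable are hypothetical.

```shell
# Succeeds when at least one nginx process exists.
nginx_running() {
    pidof nginx >/dev/null 2>&1
}

# Tries to start nginx once; the result is judged by the re-check below.
try_start_nginx() {
    systemctl start nginx >/dev/null 2>&1
}

# check_nginx: returns 0 when nginx is healthy (possibly after one restart
# attempt) and 1 when nginx is down and could not be started.
check_nginx() {
    if nginx_running; then
        return 0
    fi
    try_start_nginx || true        # ignore the start result, re-check instead
    sleep "${CHK_WAIT:-2}"         # give nginx a moment, as in the original script
    if nginx_running; then
        return 0
    fi
    return 1
}
```

A script file built from this sketch would end with a call to `check_nginx`, so that the function's status becomes the script's exit status. Like the original, it has to finish within the 5-second `interval`.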

keepalived deployment on the nginx-lb02 node:

#1. keepalived configuration file:

[root@nginx-lb02 keepalived]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id keepalived02

script_user root

enable_script_security

vrrp_mcast_group4 224.0.0.19

}

vrrp_script chk_down {

script "[[ ! -f /etc/keepalived/down ]]" # Note: in testing on CentOS 7.8 this inline form did not take effect; a script file is needed instead

weight -50

interval 1

fall 3

rise 3

}

vrrp_script chk_nginx {

script "/etc/keepalived/chk_nginx.sh"

interval 5

}

vrrp_instance VI_1 {

state BACKUP

interface eth1

virtual_router_id 51

priority 100

advert_int 3

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.20.28/24 dev eth1

}

track_interface {

eth2

eth0

}

track_script {

chk_down

chk_nginx

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

vrrp_instance VI_2 {

state MASTER

interface eth1

virtual_router_id 52

priority 120

advert_int 3

authentication {

auth_type PASS

auth_pass 2222

}

virtual_ipaddress {

192.168.20.29/24 dev eth1

}

track_interface {

eth2

eth0

}

track_script {

chk_down

chk_nginx

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

#2. The chk_nginx script:

[root@nginx-lb02 keepalived]# cat /etc/keepalived/chk_nginx.sh

#!/bin/bash

nginxpid=$(pidof nginx | wc -l)

#1. Check whether nginx is alive; if it is not, try to start it

if [ $nginxpid -eq 0 ];then

systemctl start nginx

sleep 2

#2. After waiting 2 seconds, read the nginx state again

nginxpid=$(pidof nginx | wc -l)

#3. Check again; if nginx is still not alive, stop keepalived so that the address fails over, then leave the script

if [ $nginxpid -eq 0 ];then

systemctl stop keepalived

fi

fi

#3. The notify.sh file:

[root@nginx-lb02 keepalived]# cat /etc/keepalived/notify.sh

#!/bin/bash

contact='root@localhost'

notify() {

local mailsubject="$(hostname) to be $1, vip floating"

local mailbody="$(date +'%F %T'): vrrp transition, $(hostname) changed to be $1"

echo "$mailbody" | mail -s "$mailsubject" $contact

}

case $1 in

master)

notify master

systemctl start nginx # Runs on a keepalived state change: starts nginx so that it is up and able to serve requests

;;

backup)

notify backup

systemctl start nginx

;;

fault)

notify fault

;;

*)

echo "Usage: $(basename $0) {master|backup|fault}"

exit 1

;;

esac

#4. Add execute permission:

[root@nginx-lb02 keepalived]# chmod u+x chk_nginx.sh

[root@nginx-lb02 keepalived]# chmod u+x notify.sh

#5. Restart the keepalived service:

[root@nginx-lb02 keepalived]# systemctl restart keepalived.service

#6. Check the VIP:

[root@nginx-lb02 keepalived]# ip add show eth1

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:21:9d:5c brd ff:ff:ff:ff:ff:ff

inet 192.168.20.20/24 brd 192.168.20.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet 192.168.20.29/24 scope global secondary eth1

valid_lft forever preferred_lft forever

inet6 fe80::52b0:737b:a3cb:c6a5/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Test access to the backend web cluster from the client through both VIPs

# Test VIP 192.168.20.28; requests currently go through the nginx-lb01 load-balancer node

[root@xuzhichao ~]# for i in {1..10} ;do curl -k -Hhost:www.xuzhichao.com https://192.168.20.28 ;done

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

# Test VIP 192.168.20.29; requests currently go through the nginx-lb02 load-balancer node

[root@xuzhichao ~]# for i in {1..10} ;do curl -k -Hhost:www.xuzhichao.com https://192.168.20.29 ;done

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

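1.5 keepalived behavior when a tracked interface goes down

Bring down eth2 on nginx-lb01 to simulate a failure of the interface facing the internal network. If the VIP did not fail over at this point, requests to 192.168.20.28 would fail.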

[root@nginx-lb01 keepalived]# ip link set eth2 down

[root@nginx-lb01 keepalived]# ip link show eth2

4: eth2: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast state DOWN mode DEFAULT group default qlen 1000

link/ether 00:0c:29:33:71:da brd ff:ff:ff:ff:ff:ff

The keepalived log on nginx-lb01 now shows that keepalived switched to the FAULT state, removed the virtual address 192.168.20.28, and sent a notification.

[root@nginx-lb01 ~]# tail -f /var/log/keepalived.log

Jul 9 23:40:52 nginx-lb01 Keepalived_vrrp[1838]: Kernel is reporting: interface eth2 DOWN

Jul 9 23:40:52 nginx-lb01 Keepalived_vrrp[1838]: VRRP_Instance(VI_1) Entering FAULT STATE

Jul 9 23:40:52 nginx-lb01 Keepalived_vrrp[1838]: VRRP_Instance(VI_1) removing protocol VIPs.

Jul 9 23:40:52 nginx-lb01 Keepalived_vrrp[1838]: Opening script file /etc/keepalived/notify.sh

Jul 9 23:40:52 nginx-lb01 Keepalived_vrrp[1838]: VRRP_Instance(VI_1) Now in FAULT state

Jul 9 23:40:54 nginx-lb01 Keepalived_vrrp[1838]: Kernel is reporting: interface eth2 DOWN

Jul 9 23:40:54 nginx-lb01 Keepalived_vrrp[1838]: VRRP_Instance(VI_2) Now in FAULT state

Jul 9 23:40:54 nginx-lb01 Keepalived_vrrp[1838]: Opening script file /etc/keepalived/notify.sh

[root@nginx-lb01 keepalived]# ip add show eth1

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:33:71:d0 brd ff:ff:ff:ff:ff:ff

inet 192.168.20.19/24 brd 192.168.20.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet6 fe80::f0da:450f:5a80:de8b/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::7f20:c9d7:cb3e:bb8e/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::1a77:baea:91d8:79c7/64 scope link noprefixroute

valid_lft forever preferred_lft forever

You have new mail in /var/spool/mail/root

The keepalived log on nginx-lb02 shows that it took over the VIP 192.168.20.28 and sent a notification.

[root@nginx-lb02 ~]# tail -f /var/log/keepalived.log

Jul 9 23:40:52 nginx-lb02 Keepalived_vrrp[1967]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jul 9 23:40:55 nginx-lb02 Keepalived_vrrp[1967]: VRRP_Instance(VI_1) Entering MASTER STATE

Jul 9 23:40:55 nginx-lb02 Keepalived_vrrp[1967]: VRRP_Instance(VI_1) setting protocol VIPs.

Jul 9 23:40:55 nginx-lb02 Keepalived_vrrp[1967]: Sending gratuitous ARP on eth1 for 192.168.20.28

Jul 9 23:40:55 nginx-lb02 Keepalived_vrrp[1967]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth1 for 192.168.20.28

[root@nginx-lb02 keepalived]# ip add show eth1

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:21:9d:5c brd ff:ff:ff:ff:ff:ff

inet 192.168.20.20/24 brd 192.168.20.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet 192.168.20.29/24 scope global secondary eth1

valid_lft forever preferred_lft forever

inet 192.168.20.28/24 scope global secondary eth1

valid_lft forever preferred_lft forever

inet6 fe80::52b0:737b:a3cb:c6a5/64 scope link noprefixroute

valid_lft forever preferred_lft forever

You have new mail in /var/spool/mail/root

At this point, requests to the virtual address 192.168.20.28 are still scheduled normally

[root@xuzhichao ~]# for i in {1..10} ;do curl -k -Hhost:www.xuzhichao.com https://192.168.20.28 ;done

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

Bring eth2 on nginx-lb01 back up; the virtual address 192.168.20.28 returns to the nginx-lb01 node

[root@nginx-lb01 keepalived]# ip link set eth2 up

[root@nginx-lb01 keepalived]# ip add show eth1

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:33:71:d0 brd ff:ff:ff:ff:ff:ff

inet 192.168.20.19/24 brd 192.168.20.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet 192.168.20.28/24 scope global secondary eth1

valid_lft forever preferred_lft forever

inet6 fe80::f0da:450f:5a80:de8b/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::7f20:c9d7:cb3e:bb8e/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::1a77:baea:91d8:79c7/64 scope link noprefixroute

valid_lft forever preferred_lft forever
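
The `ip add show eth1` listings in this section are inspected by eye to see which node holds 192.168.20.28. A minimal sketch of the same check as a shell function follows; `has_vip` is a hypothetical helper, and it assumes the `inet <address>/<prefix>` line layout shown in the listings above.

```shell
# has_vip ADDRESS: read `ip addr show` output on stdin and succeed when
# ADDRESS is configured as an IPv4 address on the interface.
has_vip() {
    awk -v vip="$1" '
        $1 == "inet" {
            split($2, parts, "/")          # drop the prefix length, e.g. /24
            if (parts[1] == vip) found = 1
        }
        END { exit (found ? 0 : 1) }
    '
}

# Example (run on a load-balancer node):
#   ip addr show eth1 | has_vip 192.168.20.28 && echo "VIP is on this node"
```

Comparing the whole address rather than grepping for a substring avoids false matches such as 192.168.20.2 against 192.168.20.28.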

1.6 keepalived behavior when the nginx service fails

Stop the nginx service on nginx-lb01. nginx is started again almost immediately, which shows that the nginx check script is working.

[root@nginx-lb01 keepalived]# systemctl stop nginx.service

[root@nginx-lb01 keepalived]# systemctl status nginx.service

● nginx.service - nginx - high performance web server

Loaded: loaded (/usr/lib/systemd/system/nginx.service; disabled; vendor preset: disabled)

Active: active (running) since Fri 2021-07-09 23:58:41 CST; 5s ago

Docs: http://nginx.org/en/docs/

Process: 2527 ExecStart=/usr/sbin/nginx -c /etc/nginx/nginx.conf (code=exited, status=0/SUCCESS)

Process: 2526 ExecStartPre=/usr/sbin/nginx -t (code=exited, status=0/SUCCESS)

Main PID: 2529 (nginx)

CGroup: /system.slice/nginx.service

├─2529 nginx: master process /usr/sbin/nginx -c /etc/nginx/nginx.conf

└─2531 nginx: worker process

Jul 09 23:58:41 nginx-lb01 systemd[1]: Starting nginx - high performance web server...

Jul 09 23:58:41 nginx-lb01 nginx[2526]: nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

Jul 09 23:58:41 nginx-lb01 nginx[2526]: nginx: configuration file /etc/nginx/nginx.conf test is successful

Jul 09 23:58:41 nginx-lb01 systemd[1]: Failed to parse PID from file /var/run/nginx.pid: Invalid argument

Jul 09 23:58:41 nginx-lb01 systemd[1]: Started nginx - high performance web server.

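Next, break the nginx configuration file on nginx-lb01 and then stop the nginx service, so that nginx cannot start. The keepalived log on nginx-lb01 now shows that keepalived switched to the FAULT state, removed the virtual address 192.168.20.28, and sent a notification.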

[root@nginx-lb01 ~]# tail -f /var/log/keepalived.log

Jul 10 00:01:48 nginx-lb01 Keepalived_vrrp[2287]: /etc/keepalived/chk_nginx.sh exited with status 127 <== script check failed

Jul 10 00:01:48 nginx-lb01 Keepalived_vrrp[2287]: VRRP_Script(chk_nginx) failed <== script check failed

Jul 10 00:01:49 nginx-lb01 Keepalived_vrrp[2287]: VRRP_Instance(VI_1) Entering FAULT STATE <== enters the FAULT state

Jul 10 00:01:49 nginx-lb01 Keepalived_vrrp[2287]: VRRP_Instance(VI_1) removing protocol VIPs.

Jul 10 00:01:49 nginx-lb01 Keepalived_vrrp[2287]: Opening script file /etc/keepalived/notify.sh

Jul 10 00:01:49 nginx-lb01 Keepalived_vrrp[2287]: VRRP_Instance(VI_1) Now in FAULT state

Jul 10 00:01:51 nginx-lb01 Keepalived_vrrp[2287]: VRRP_Instance(VI_2) Now in FAULT state

Jul 10 00:01:51 nginx-lb01 Keepalived_vrrp[2287]: Opening script file /etc/keepalived/notify.sh

Jul 10 00:01:53 nginx-lb01 Keepalived_vrrp[2287]: /etc/keepalived/chk_nginx.sh exited with status 127

Jul 10 00:01:58 nginx-lb01 Keepalived_vrrp[2287]: /etc/keepalived/chk_nginx.sh exited with status 127 <== the chk_nginx script runs every 5 s

Jul 10 00:02:03 nginx-lb01 Keepalived_vrrp[2287]: /etc/keepalived/chk_nginx.sh exited with status 127

Jul 10 00:02:08 nginx-lb01 Keepalived_vrrp[2287]: /etc/keepalived/chk_nginx.sh exited with status 127

Jul 10 00:02:13 nginx-lb01 Keepalived_vrrp[2287]: /etc/keepalived/chk_nginx.sh exited with status 127

Jul 10 00:02:18 nginx-lb01 Keepalived_vrrp[2287]: /etc/keepalived/chk_nginx.sh exited with status 127

Jul 10 00:02:23 nginx-lb01 Keepalived_vrrp[2287]: /etc/keepalived/chk_nginx.sh exited with status 127

[root@nginx-lb01 keepalived]# ip add show eth1

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:33:71:d0 brd ff:ff:ff:ff:ff:ff

inet 192.168.20.19/24 brd 192.168.20.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet6 fe80::f0da:450f:5a80:de8b/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::7f20:c9d7:cb3e:bb8e/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::1a77:baea:91d8:79c7/64 scope link noprefixroute

valid_lft forever preferred_lft forever

The keepalived log on nginx-lb02 shows that it took over the VIP 192.168.20.28 and sent a notification.

[root@nginx-lb02 ~]# tail -f /var/log/keepalived.log

Jul 10 00:01:49 nginx-lb02 Keepalived_vrrp[2208]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jul 10 00:01:52 nginx-lb02 Keepalived_vrrp[2208]: VRRP_Instance(VI_1) Entering MASTER STATE

Jul 10 00:01:52 nginx-lb02 Keepalived_vrrp[2208]: VRRP_Instance(VI_1) setting protocol VIPs.

Jul 10 00:01:52 nginx-lb02 Keepalived_vrrp[2208]: Sending gratuitous ARP on eth1 for 192.168.20.28

Jul 10 00:01:52 nginx-lb02 Keepalived_vrrp[2208]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth1 for 192.168.20.28

Jul 10 00:01:52 nginx-lb02 Keepalived_vrrp[2208]: Sending gratuitous ARP on eth1 for 192.168.20.28

[root@nginx-lb02 keepalived]# ip add show eth1

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:21:9d:5c brd ff:ff:ff:ff:ff:ff

inet 192.168.20.20/24 brd 192.168.20.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet 192.168.20.29/24 scope global secondary eth1

valid_lft forever preferred_lft forever

inet 192.168.20.28/24 scope global secondary eth1

valid_lft forever preferred_lft forever

inet6 fe80::52b0:737b:a3cb:c6a5/64 scope link noprefixroute

valid_lft forever preferred_lft forever

You have new mail in /var/spool/mail/root

At this point, requests to the virtual address 192.168.20.28 are still scheduled normally

[root@xuzhichao ~]# for i in {1..10} ;do curl -k -Hhost:www.xuzhichao.com https://192.168.20.28 ;done

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

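Now restore the nginx configuration file on nginx-lb01. The log on nginx-lb01 shows that the chk_nginx script check succeeds, the nginx service is started automatically, and the virtual address 192.168.20.28 returns to the nginx-lb01 node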

[root@nginx-lb01 ~]# tail -f /var/log/keepalived.log

Jul 10 00:07:43 nginx-lb01 Keepalived_vrrp[2287]: VRRP_Script(chk_nginx) succeeded <== script check succeeded

Jul 10 00:07:44 nginx-lb01 Keepalived_vrrp[2287]: VRRP_Instance(VI_1) Entering BACKUP STATE

Jul 10 00:07:44 nginx-lb01 Keepalived_vrrp[2287]: Opening script file /etc/keepalived/notify.sh

Jul 10 00:07:45 nginx-lb01 Keepalived_vrrp[2287]: VRRP_Instance(VI_2) Entering BACKUP STATE

Jul 10 00:07:45 nginx-lb01 Keepalived_vrrp[2287]: Opening script file /etc/keepalived/notify.sh

Jul 10 00:07:47 nginx-lb01 Keepalived_vrrp[2287]: VRRP_Instance(VI_1) forcing a new MASTER election

Jul 10 00:07:50 nginx-lb01 Keepalived_vrrp[2287]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jul 10 00:07:53 nginx-lb01 Keepalived_vrrp[2287]: VRRP_Instance(VI_1) Entering MASTER STATE

Jul 10 00:07:53 nginx-lb01 Keepalived_vrrp[2287]: VRRP_Instance(VI_1) setting protocol VIPs.

Jul 10 00:07:53 nginx-lb01 Keepalived_vrrp[2287]: Sending gratuitous ARP on eth1 for 192.168.20.28

Jul 10 00:07:53 nginx-lb01 Keepalived_vrrp[2287]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth1 for 192.168.20.28

Jul 10 00:07:53 nginx-lb01 Keepalived_vrrp[2287]: Sending gratuitous ARP on eth1 for 192.168.20.28

Jul 10 00:07:53 nginx-lb01 Keepalived_vrrp[2287]: Sending gratuitous ARP on eth1 for 192.168.20.28

[root@nginx-lb01 keepalived]# ip add show eth1

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:33:71:d0 brd ff:ff:ff:ff:ff:ff

inet 192.168.20.19/24 brd 192.168.20.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet 192.168.20.28/24 scope global secondary eth1

valid_lft forever preferred_lft forever

inet6 fe80::f0da:450f:5a80:de8b/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::7f20:c9d7:cb3e:bb8e/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::1a77:baea:91d8:79c7/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@nginx-lb01 keepalived]# systemctl status nginx.service

● nginx.service - nginx - high performance web server

Loaded: loaded (/usr/lib/systemd/system/nginx.service; disabled; vendor preset: disabled)

Active: active (running) since Sat 2021-07-10 00:07:41 CST; 2min 48s ago

Docs: http://nginx.org/en/docs/

Process: 3758 ExecStart=/usr/sbin/nginx -c /etc/nginx/nginx.conf (code=exited, status=0/SUCCESS)

Process: 3757 ExecStartPre=/usr/sbin/nginx -t (code=exited, status=0/SUCCESS)

Main PID: 3760 (nginx)

CGroup: /system.slice/nginx.service

├─3760 nginx: master process /usr/sbin/nginx -c /etc/nginx/nginx.conf

└─3762 nginx: worker process

The nginx-lb02 node removes the virtual address 192.168.20.28 and enters the BACKUP state

[root@nginx-lb02 ~]# tail -f /var/log/keepalived.log

Jul 10 00:07:47 nginx-lb02 Keepalived_vrrp[2208]: VRRP_Instance(VI_1) Received advert with higher priority 120, ours 100

Jul 10 00:07:47 nginx-lb02 Keepalived_vrrp[2208]: VRRP_Instance(VI_1) Entering BACKUP STATE

Jul 10 00:07:47 nginx-lb02 Keepalived_vrrp[2208]: VRRP_Instance(VI_1) removing protocol VIPs.

Jul 10 00:07:47 nginx-lb02 Keepalived_vrrp[2208]: Opening script file /etc/keepalived/notify.sh
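
The failover and failback sequence in this section is easier to follow when only the state changes are kept from the logs. The following is a minimal sketch, assuming the syslog-style line layout shown above (month, day, time, host, process tag, message); `vrrp_transitions` is a hypothetical helper name.

```shell
# vrrp_transitions: read keepalived log lines on stdin and print
# "<month> <day> <time> <instance> <state>" for every
# "VRRP_Instance(<name>) Entering <STATE> STATE" message.
vrrp_transitions() {
    awk '$7 == "Entering" && $9 == "STATE" {
        inst = $6
        sub(/^VRRP_Instance\(/, "", inst)   # keep only the instance name
        sub(/\)$/, "", inst)
        print $1, $2, $3, inst, $8
    }'
}

# Example:
#   vrrp_transitions < /var/log/keepalived.log
```

Lines such as "Now in FAULT state" use a different wording and are deliberately not matched by this sketch.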

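1.7 keepalived behavior when the chk_down script triggers

Create the file /etc/keepalived/down on the nginx-lb01 node

#1. Create the down file:

[root@nginx-lb01 keepalived]# touch down

#2. Check the keepalived log on nginx-lb01: the script check fails, the node's VRRP priority is lowered, and the node becomes BACKUP: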

[root@nginx-lb01 ~]# tail -f /var/log/keepalived.log

Jul 10 16:20:28 nginx-lb01 Keepalived_vrrp[18592]: /etc/keepalived/chk_down.sh exited with status 1 <== the script exits with status 1 three times in a row

Jul 10 16:20:29 nginx-lb01 Keepalived_vrrp[18592]: /etc/keepalived/chk_down.sh exited with status 1

Jul 10 16:20:30 nginx-lb01 Keepalived_vrrp[18592]: /etc/keepalived/chk_down.sh exited with status 1

Jul 10 16:20:30 nginx-lb01 Keepalived_vrrp[18592]: VRRP_Script(chk_down) failed <== the script check is judged as failed

Jul 10 16:20:31 nginx-lb01 Keepalived_vrrp[18592]: VRRP_Instance(VI_1) Changing effective priority from 120 to 70 <== VRRP priority lowered by 50

Jul 10 16:20:31 nginx-lb01 Keepalived_vrrp[18592]: VRRP_Instance(VI_2) Changing effective priority from 100 to 50 <== VRRP priority lowered by 50

Jul 10 16:20:31 nginx-lb01 Keepalived_vrrp[18592]: /etc/keepalived/chk_down.sh exited with status 1

Jul 10 16:20:32 nginx-lb01 Keepalived_vrrp[18592]: /etc/keepalived/chk_down.sh exited with status 1

Jul 10 16:20:33 nginx-lb01 Keepalived_vrrp[18592]: /etc/keepalived/chk_down.sh exited with status 1

Jul 10 16:20:34 nginx-lb01 Keepalived_vrrp[18592]: VRRP_Instance(VI_1) Received advert with higher priority 100, ours 70

Jul 10 16:20:34 nginx-lb01 Keepalived_vrrp[18592]: VRRP_Instance(VI_1) Entering BACKUP STATE <== enters the BACKUP state

Jul 10 16:20:34 nginx-lb01 Keepalived_vrrp[18592]: VRRP_Instance(VI_1) removing protocol VIPs.

Jul 10 16:20:34 nginx-lb01 Keepalived_vrrp[18592]: Opening script file /etc/keepalived/notify.sh

Jul 10 16:20:34 nginx-lb01 Keepalived_vrrp[18592]: /etc/keepalived/chk_down.sh exited with status 1

Jul 10 16:20:35 nginx-lb01 Keepalived_vrrp[18592]: /etc/keepalived/chk_down.sh exited with status 1

Jul 10 16:20:36 nginx-lb01 Keepalived_vrrp[18592]: /etc/keepalived/chk_down.sh exited with status 1

Jul 10 16:20:37 nginx-lb01 Keepalived_vrrp[18592]: /etc/keepalived/chk_down.sh exited with status 1

Jul 10 16:20:38 nginx-lb01 Keepalived_vrrp[18592]: /etc/keepalived/chk_down.sh exited with status 1

#3. The virtual IP has been removed from this node:

[root@nginx-lb01 keepalived]# ip add show eth1

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:33:71:d0 brd ff:ff:ff:ff:ff:ff

inet 192.168.20.19/24 brd 192.168.20.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet6 fe80::f0da:450f:5a80:de8b/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::7f20:c9d7:cb3e:bb8e/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::1a77:baea:91d8:79c7/64 scope link noprefixroute

valid_lft forever preferred_lft forever
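
The priority changes in the log above follow simple arithmetic: with `weight -50`, a failed chk_down check subtracts 50 from the configured priority, and the node advertising the higher value holds the VIP. A minimal sketch of that calculation with the values from this article follows; `effective_priority` is a hypothetical helper, and it ignores any limits keepalived may apply to the result.

```shell
# effective_priority BASE WEIGHT STATE
# Prints the priority advertised for one tracked script with a negative
# weight: BASE while the check passes (STATE = ok), BASE + WEIGHT otherwise.
effective_priority() {
    if [ "$3" = "ok" ]; then
        echo "$1"
    else
        echo $(( $1 + $2 ))
    fi
}

# Values from this article, on nginx-lb01 after the down file is created:
#   effective_priority 120 -50 fail   # VI_1: prints 70, below nginx-lb02's 100
#   effective_priority 100 -50 fail   # VI_2: prints 50
```

Because 70 is lower than the 100 configured for VI_1 on nginx-lb02, nginx-lb02 wins the election for 192.168.20.28, which matches the "Received advert with higher priority 100, ours 70" log line.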

Check the nginx-lb02 node

#1. The log shows that this node became MASTER and took over the virtual IP 192.168.20.28

[root@nginx-lb02 ~]# tail -f /var/log/keepalived.log

Jul 10 16:20:34 nginx-lb02 Keepalived_vrrp[3831]: VRRP_Instance(VI_1) forcing a new MASTER election

Jul 10 16:20:37 nginx-lb02 Keepalived_vrrp[3831]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jul 10 16:20:40 nginx-lb02 Keepalived_vrrp[3831]: VRRP_Instance(VI_1) Entering MASTER STATE

Jul 10 16:20:40 nginx-lb02 Keepalived_vrrp[3831]: VRRP_Instance(VI_1) setting protocol VIPs.

Jul 10 16:20:40 nginx-lb02 Keepalived_vrrp[3831]: Sending gratuitous ARP on eth1 for 192.168.20.28

Jul 10 16:20:40 nginx-lb02 Keepalived_vrrp[3831]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth1 for 192.168.20.28

Jul 10 16:20:40 nginx-lb02 Keepalived_vrrp[3831]: Sending gratuitous ARP on eth1 for 192.168.20.28

Jul 10 16:20:40 nginx-lb02 Keepalived_vrrp[3831]: Sending gratuitous ARP on eth1 for 192.168.20.28

Jul 10 16:20:40 nginx-lb02 Keepalived_vrrp[3831]: Sending gratuitous ARP on eth1 for 192.168.20.28

Jul 10 16:20:40 nginx-lb02 Keepalived_vrrp[3831]: Sending gratuitous ARP on eth1 for 192.168.20.28

Jul 10 16:20:40 nginx-lb02 Keepalived_vrrp[3831]: Opening script file /etc/keepalived/notify.sh

Jul 10 16:20:45 nginx-lb02 Keepalived_vrrp[3831]: Sending gratuitous ARP on eth1 for 192.168.20.28

Jul 10 16:20:45 nginx-lb02 Keepalived_vrrp[3831]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth1 for 192.168.20.28

Jul 10 16:20:45 nginx-lb02 Keepalived_vrrp[3831]: Sending gratuitous ARP on eth1 for 192.168.20.28

Jul 10 16:20:45 nginx-lb02 Keepalived_vrrp[3831]: Sending gratuitous ARP on eth1 for 192.168.20.28

Jul 10 16:20:45 nginx-lb02 Keepalived_vrrp[3831]: Sending gratuitous ARP on eth1 for 192.168.20.28

#2. Virtual IPs on this node:

[root@nginx-lb02 ~]# ip add show eth1

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:21:9d:5c brd ff:ff:ff:ff:ff:ff

inet 192.168.20.20/24 brd 192.168.20.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet 192.168.20.29/24 scope global secondary eth1

valid_lft forever preferred_lft forever

inet 192.168.20.28/24 scope global secondary eth1

valid_lft forever preferred_lft forever

inet6 fe80::52b0:737b:a3cb:c6a5/64 scope link noprefixroute

valid_lft forever preferred_lft forever

At this point, requests to the virtual address 192.168.20.28 are still scheduled to the backends normally:

[root@xuzhichao ~]# for i in {1..10} ;do curl -k -Hhost:www.xuzhichao.com https://192.168.20.28 ;done

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page

node1.xuzhichao.com page

node2.xuzhichao.com page
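The alternating node1/node2 responses above suggest that requests are being spread evenly across the two backends. As a rough sketch, the responses can be tallied instead of read by eye. The function name `count_backends` is a hypothetical helper, not part of the original setup, and the sample input below stands in for real curl output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads response bodies on stdin (one per line)
# and prints "<backend>=<count>" for each distinct response.
count_backends() {
    sort | uniq -c | awk '{print $2 "=" $1}'
}

# Demo with sample data, so the sketch runs without network access.
printf 'node1.xuzhichao.com page\nnode2.xuzhichao.com page\nnode1.xuzhichao.com page\n' | count_backends

# Against the live VIP, the loop from the test above could be piped in (assumes the same environment):
# for i in {1..10}; do curl -sk -Hhost:www.xuzhichao.com https://192.168.20.28; done | count_backends
```

Roughly equal counts for both nodes during and after a failover indicate that the surviving load balancer is still distributing traffic.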

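Delete the down file on the nginx-lb01 node:

#1. Delete the down file:

[root@nginx-lb01 keepalived]# rm -f /etc/keepalived/down

#2. Check the log on nginx-lb01: the script check succeeds, the priority is restored, and the node preempts the virtual address 192.168.20.28 back: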

[root@nginx-lb01 ~]# tail -f /var/log/keepalived.log

Jul 10 16:33:56 nginx-lb01 Keepalived_vrrp[18592]: VRRP_Script(chk_down) succeeded

Jul 10 16:33:57 nginx-lb01 Keepalived_vrrp[18592]: VRRP_Instance(VI_2) Changing effective priority from 50 to 100

Jul 10 16:33:57 nginx-lb01 Keepalived_vrrp[18592]: VRRP_Instance(VI_1) Changing effective priority from 70 to 120

Jul 10 16:33:59 nginx-lb01 Keepalived_vrrp[18592]: VRRP_Instance(VI_1) forcing a new MASTER election

Jul 10 16:34:02 nginx-lb01 Keepalived_vrrp[18592]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jul 10 16:34:05 nginx-lb01 Keepalived_vrrp[18592]: VRRP_Instance(VI_1) Entering MASTER STATE

Jul 10 16:34:05 nginx-lb01 Keepalived_vrrp[18592]: VRRP_Instance(VI_1) setting protocol VIPs.

Jul 10 16:34:05 nginx-lb01 Keepalived_vrrp[18592]: Sending gratuitous ARP on eth1 for 192.168.20.28

Jul 10 16:34:05 nginx-lb01 Keepalived_vrrp[18592]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth1 for 192.168.20.28

Jul 10 16:34:05 nginx-lb01 Keepalived_vrrp[18592]: Sending gratuitous ARP on eth1 for 192.168.20.28

Jul 10 16:34:05 nginx-lb01 Keepalived_vrrp[18592]: Sending gratuitous ARP on eth1 for 192.168.20.28

#3. Virtual IP status on this node (192.168.20.28 is back on nginx-lb01):

[root@nginx-lb01 keepalived]# ip add show eth1

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:33:71:d0 brd ff:ff:ff:ff:ff:ff

inet 192.168.20.19/24 brd 192.168.20.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet 192.168.20.28/24 scope global secondary eth1

valid_lft forever preferred_lft forever

inet6 fe80::f0da:450f:5a80:de8b/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::7f20:c9d7:cb3e:bb8e/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::1a77:baea:91d8:79c7/64 scope link noprefixroute

valid_lft forever preferred_lft forever
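The priority changes in the nginx-lb01 log (70 to 120 for VI_1, 50 to 100 for VI_2) are consistent with a tracked script that carries a negative weight: keepalived adds the weight to the configured priority while the script fails and removes the adjustment once it succeeds. The sketch below reproduces that arithmetic. The base priorities 120 and 100 and the weight -50 are inferred from the log lines, and `effective_priority` is a hypothetical helper name rather than anything keepalived provides.

```shell
#!/usr/bin/env bash
# Hypothetical helper: effective VRRP priority for a tracked script
# with a NEGATIVE weight.
#   $1 = configured priority
#   $2 = track_script weight (negative)
#   $3 = exit code of the check script (0 = success)
effective_priority() {
    local base=$1 weight=$2 script_rc=$3
    if [ "$script_rc" -eq 0 ]; then
        echo "$base"                 # check passed: configured priority applies
    else
        echo $(( base + weight ))    # check failed: priority is lowered
    fi
}

effective_priority 120 -50 1   # VI_1 while the down file exists   -> 70
effective_priority 120 -50 0   # VI_1 after the down file is removed -> 120
effective_priority 100 -50 1   # VI_2 while the down file exists   -> 50
```

With these values, nginx-lb01 falls below its peer while the check fails and rises above it again once the check passes, which matches the failover and the subsequent preemption seen in the logs.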
