用KNN算法分类CIFAR-10图片数据

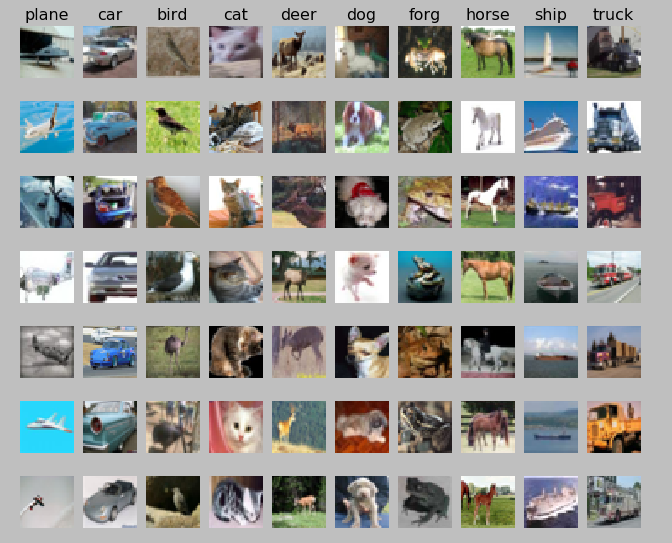

KNN分类CIFAR-10,并且做Cross Validation,CIDAR-10数据库数据如下:

knn.py : 主要的试验流程

from cs231n.data_utils import load_CIFAR10

from cs231n.classifiers import KNearestNeighbor

import random

import numpy as np

import matplotlib.pyplot as plt

# set plt params

plt.rcParams['figure.figsize'] = (10.0, 8.0) # set default size of plots

plt.rcParams['image.interpolation'] = 'nearest'

plt.rcParams['image.cmap'] = 'gray' cifar10_dir = 'cs231n/datasets/cifar-10-batches-py'

x_train,y_train,x_test,y_test = load_CIFAR10(cifar10_dir)

print'x_train : ',x_train.shape

print'y_train : ',y_train.shape

print'x_test : ',x_test.shape,'y_test : ',y_test.shape #visual training example

classes = ['plane','car','bird','cat','deer','dog','forg','horse','ship','truck']

num_classes = len(classes)

samples_per_class = 7

for y,cls in enumerate(classes):

#flaznonzero return indices_array of the none-zero elements

# ten classes, y_train and y_test all in [1...10]

idxs = np.flatnonzero(y_train == y)

idxs = np.random.choice(idxs , samples_per_class, replace = False)

for i,idx in enumerate(idxs):

plt_idx = i*num_classes + y + 1

# subplot(m,n,p)

# m : length of subplot

# n : width of subplot

# p : location of subplot

plt.subplot(samples_per_class,num_classes,plt_idx)

plt.imshow(x_train[idx].astype('uint8'))

# hidden the axis info

plt.axis('off')

if i == 0:

plt.title(cls)

plt.show() # subsample data for more dfficient code execution

num_training = 5000

#range(5)=[0,1,2,3,4]

mask = range(num_training)

x_train = x_train[mask]

y_train = y_train[mask]

num_test = 500

mask = range(num_test)

x_test = x_test[mask]

y_test = y_test[mask]

#the image data has three chanels

#the next two step shape the image size 32*32*3 to 3072*1

x_train = np.reshape(x_train,(x_train.shape[0],-1))

x_test = np.reshape(x_test,(x_test.shape[0],-1))

print 'after subsample and re shape:'

print 'x_train : ',x_train.shape," x_test : ",x_test.shape

#KNN classifier

classifier = KNearestNeighbor()

classifier.train(x_train,y_train)

# compute the distance between test_data and train_data

dists = classifier.compute_distances_no_loops(x_test)

#each row is a single test example and its distances to training example

print 'dist shape : ',dists.shape

plt.imshow(dists , interpolation='none')

plt.show()

y_test_pred = classifier.predict_labels(dists,k = 5)

num_correct = np.sum(y_test_pred == y_test)

acc = float(num_correct)/num_test

print'k=5 ,The Accurancy is : ', acc #Cross-Validation #5-fold cross validation split the training data to 5 pieces

num_folds = 5

#k is params of knn

k_choice = [1,5,8,11,15,18,20,50,100]

x_train_folds = []

y_train_folds = []

x_train_folds = np.array_split(x_train,num_folds)

y_train_folds = np.array_split(y_train,num_folds) k_to_acc={} for k in k_choice:

k_to_acc[k] =[]

for k in k_choice:

print 'cross validation : k = ', k

for j in range(num_folds):

#vstack :stack the array to matrix

#vertical

x_train_cv = np.vstack(x_train_folds[0:j]+x_train_folds[j+1:])

x_test_cv = x_train_folds[j] #>>> a = np.array((1,2,3))

#>>> b = np.array((2,3,4))

#>>> np.hstack((a,b))

# horizontally

y_train_cv = np.hstack(y_train_folds[0:j]+y_train_folds[j+1:])

y_test_cv = y_train_folds[j] classifier.train(x_train_cv,y_train_cv)

dists_cv = classifier.compute_distances_no_loops(x_test_cv)

y_test_pred = classifier.predict_labels(dists_cv,k)

num_correct = np.sum(y_test_pred == y_test_cv)

acc = float(num_correct)/ num_test

k_to_acc[k].append(acc)

print k_to_acc

k_nearest_neighbor.py : knn算法的实现:

import numpy as np

from collections import Counter

class KNearestNeighbor(object):

""" a kNN classifier with L2 distance """ def __init__(self):

pass def train(self, X, y):

"""

Train the classifier. For k-nearest neighbors this is just

memorizing the training data. Inputs:

- X: A numpy array of shape (num_train, D) containing the training data

consisting of num_train samples each of dimension D.

- each row is a training example

- y: A numpy array of shape (N,) containing the training labels, where

y[i] is the label for X[i].

"""

self.X_train = X

self.y_train = y def predict(self, X, k=1, num_loops=0):

"""

Predict labels for test data using this classifier. Inputs:

- X: A numpy array of shape (num_test, D) containing test data consisting

of num_test samples each of dimension D.

- k: The number of nearest neighbors that vote for the predicted labels.

- num_loops: Determines which implementation to use to compute distances

between training points and testing points. Returns:

- y: A numpy array of shape (num_test,) containing predicted labels for the

test data, where y[i] is the predicted label for the test point X[i].

"""

if num_loops == 0:

dists = self.compute_distances_no_loops(X)

elif num_loops == 1:

dists = self.compute_distances_one_loop(X)

elif num_loops == 2:

dists = self.compute_distances_two_loops(X)

else:

raise ValueError('Invalid value %d for num_loops' % num_loops) return self.predict_labels(dists, k=k)

def compute_distances_two_loops(self, X):

"""

Compute the distance between each test point in X and each training point

in self.X_train using a nested loop over both the training data and the

test data. Inputs:

- X: A numpy array of shape (num_test, D) containing test data. Returns:

- dists: A numpy array of shape (num_test, num_train) where dists[i, j]

is the Euclidean distance between the ith test point and the jth training

point.

"""

num_test = X.shape[0]

num_train = self.X_train.shape[0]

dists = np.zeros((num_test, num_train))

for i in xrange(num_test):

for j in xrange(num_train):

#####################################################################

# TODO: #

# Compute the l2 distance between the ith test point and the jth #

# training point, and store the result in dists[i, j]. You should #

# not use a loop over dimension. #

#####################################################################

#Euclidean distance

#dists[i,j] = np.sqrt(np.sum(X[i,:]-self.X_train[j,:])**2)

# use linalg make it more easy

dists[i,j] = np.linalg.norm(self.X_train[j,:]-X[i,:])

#####################################################################

# END OF YOUR CODE #

#####################################################################

return dists def compute_distances_one_loop(self, X):

"""

Compute the distance between each test point in X and each training point

in self.X_train using a single loop over the test data. Input / Output: Same as compute_distances_two_loops

"""

num_test = X.shape[0]

num_train = self.X_train.shape[0]

dists = np.zeros((num_test, num_train))

for i in xrange(num_test):

#######################################################################

# TODO: #

# Compute the l2 distance between the ith test point and all training #

# points, and store the result in dists[i, :]. #

#######################################################################

#evevy row minus X[i,:] then norm it

# axis = 1 imply operations by row

dist[i,:] = np.linalg.norm(self.X_train - X[i,:],axis = 1)

#######################################################################

# END OF YOUR CODE #

#######################################################################

return dists def compute_distances_no_loops(self, X):

"""

Compute the distance between each test point in X and each training point

in self.X_train using no explicit loops. Input / Output: Same as compute_distances_two_loops

"""

num_test = X.shape[0]

num_train = self.X_train.shape[0]

dists = np.zeros((num_test, num_train))

#########################################################################

# TODO: #

# Compute the l2 distance between all test points and all training #

# points without using any explicit loops, and store the result in #

# dists. #

# #

# You should implement this function using only basic array operations; #

# in particular you should not use functions from scipy. #

# #

# HINT: Try to formulate the l2 distance using matrix multiplication #

# and two broadcast sums. #

#########################################################################

M = np.dot(X , self.X_train.T)

te = np.square(X).sum(axis = 1)

tr = np.square(self.X_train).sum(axis = 1)

dists = np.sqrt(-2*M +tr+np.matrix(te).T)

#########################################################################

# END OF YOUR CODE #

#########################################################################

return dists def predict_labels(self, dists, k=1):

"""

Given a matrix of distances between test points and training points,

predict a label for each test point. Inputs:

- dists: A numpy array of shape (num_test, num_train) where dists[i, j]

gives the distance betwen the ith test point and the jth training point. Returns:

- y: A numpy array of shape (num_test,) containing predicted labels for the

test data, where y[i] is the predicted label for the test point X[i].

"""

num_test = dists.shape[0]

y_pred = np.zeros(num_test)

for i in xrange(num_test):

# A list of length k storing the labels of the k nearest neighbors to

# the ith test point.

closest_y = []

#########################################################################

# TODO: #

# Use the distance matrix to find the k nearest neighbors of the ith #

# testing point, and use self.y_train to find the labels of these #

# neighbors. Store these labels in closest_y. #

# Hint: Look up the function numpy.argsort. #

#########################################################################

labels = self.y_train[np.argsort(dists[i,:])].flatten()

closest_y = labels[0:k]

#########################################################################

# TODO: #

# Now that you have found the labels of the k nearest neighbors, you #

# need to find the most common label in the list closest_y of labels. #

# Store this label in y_pred[i]. Break ties by choosing the smaller #

# label. #

#########################################################################

c = Counter(closest_y)

y_pred[i] = c.most_common(1)[0][0]

#########################################################################

# END OF YOUR CODE #

######################################################################### return y_pred

data_utils.py : CIFAR-10数据的读取

import cPickle as pickle

import numpy as np

import os

from scipy.misc import imread def load_CIFAR_batch(filename):

""" load single batch of cifar """

with open(filename, 'rb') as f:

datadict = pickle.load(f)

X = datadict['data']

Y = datadict['labels']

X = X.reshape(10000, 3, 32, 32).transpose(0,2,3,1).astype("float")

Y = np.array(Y)

return X, Y def load_CIFAR10(ROOT):

""" load all of cifar """

xs = []

ys = []

for b in range(1,6):

f = os.path.join(ROOT, 'data_batch_%d' % (b, ))

X, Y = load_CIFAR_batch(f)

xs.append(X)

ys.append(Y)

Xtr = np.concatenate(xs)

Ytr = np.concatenate(ys)

del X, Y

Xte, Yte = load_CIFAR_batch(os.path.join(ROOT, 'test_batch'))

return Xtr, Ytr, Xte, Yte def load_tiny_imagenet(path, dtype=np.float32):

"""

Load TinyImageNet. Each of TinyImageNet-100-A, TinyImageNet-100-B, and

TinyImageNet-200 have the same directory structure, so this can be used

to load any of them. Inputs:

- path: String giving path to the directory to load.

- dtype: numpy datatype used to load the data. Returns: A tuple of

- class_names: A list where class_names[i] is a list of strings giving the

WordNet names for class i in the loaded dataset.

- X_train: (N_tr, 3, 64, 64) array of training images

- y_train: (N_tr,) array of training labels

- X_val: (N_val, 3, 64, 64) array of validation images

- y_val: (N_val,) array of validation labels

- X_test: (N_test, 3, 64, 64) array of testing images.

- y_test: (N_test,) array of test labels; if test labels are not available

(such as in student code) then y_test will be None.

"""

# First load wnids

with open(os.path.join(path, 'wnids.txt'), 'r') as f:

wnids = [x.strip() for x in f] # Map wnids to integer labels

wnid_to_label = {wnid: i for i, wnid in enumerate(wnids)} # Use words.txt to get names for each class

with open(os.path.join(path, 'words.txt'), 'r') as f:

wnid_to_words = dict(line.split('\t') for line in f)

for wnid, words in wnid_to_words.iteritems():

wnid_to_words[wnid] = [w.strip() for w in words.split(',')]

class_names = [wnid_to_words[wnid] for wnid in wnids] # Next load training data.

X_train = []

y_train = []

for i, wnid in enumerate(wnids):

if (i + 1) % 20 == 0:

print 'loading training data for synset %d / %d' % (i + 1, len(wnids))

# To figure out the filenames we need to open the boxes file

boxes_file = os.path.join(path, 'train', wnid, '%s_boxes.txt' % wnid)

with open(boxes_file, 'r') as f:

filenames = [x.split('\t')[0] for x in f]

num_images = len(filenames) X_train_block = np.zeros((num_images, 3, 64, 64), dtype=dtype)

y_train_block = wnid_to_label[wnid] * np.ones(num_images, dtype=np.int64)

for j, img_file in enumerate(filenames):

img_file = os.path.join(path, 'train', wnid, 'images', img_file)

img = imread(img_file)

if img.ndim == 2:

## grayscale file

img.shape = (64, 64, 1)

X_train_block[j] = img.transpose(2, 0, 1)

X_train.append(X_train_block)

y_train.append(y_train_block) # We need to concatenate all training data

X_train = np.concatenate(X_train, axis=0)

y_train = np.concatenate(y_train, axis=0) # Next load validation data

with open(os.path.join(path, 'val', 'val_annotations.txt'), 'r') as f:

img_files = []

val_wnids = []

for line in f:

img_file, wnid = line.split('\t')[:2]

img_files.append(img_file)

val_wnids.append(wnid)

num_val = len(img_files)

y_val = np.array([wnid_to_label[wnid] for wnid in val_wnids])

X_val = np.zeros((num_val, 3, 64, 64), dtype=dtype)

for i, img_file in enumerate(img_files):

img_file = os.path.join(path, 'val', 'images', img_file)

img = imread(img_file)

if img.ndim == 2:

img.shape = (64, 64, 1)

X_val[i] = img.transpose(2, 0, 1) # Next load test images

# Students won't have test labels, so we need to iterate over files in the

# images directory.

img_files = os.listdir(os.path.join(path, 'test', 'images'))

X_test = np.zeros((len(img_files), 3, 64, 64), dtype=dtype)

for i, img_file in enumerate(img_files):

img_file = os.path.join(path, 'test', 'images', img_file)

img = imread(img_file)

if img.ndim == 2:

img.shape = (64, 64, 1)

X_test[i] = img.transpose(2, 0, 1) y_test = None

y_test_file = os.path.join(path, 'test', 'test_annotations.txt')

if os.path.isfile(y_test_file):

with open(y_test_file, 'r') as f:

img_file_to_wnid = {}

for line in f:

line = line.split('\t')

img_file_to_wnid[line[0]] = line[1]

y_test = [wnid_to_label[img_file_to_wnid[img_file]] for img_file in img_files]

y_test = np.array(y_test) return class_names, X_train, y_train, X_val, y_val, X_test, y_test def load_models(models_dir):

"""

Load saved models from disk. This will attempt to unpickle all files in a

directory; any files that give errors on unpickling (such as README.txt) will

be skipped. Inputs:

- models_dir: String giving the path to a directory containing model files.

Each model file is a pickled dictionary with a 'model' field. Returns:

A dictionary mapping model file names to models.

"""

models = {}

for model_file in os.listdir(models_dir):

with open(os.path.join(models_dir, model_file), 'rb') as f:

try:

models[model_file] = pickle.load(f)['model']

except pickle.UnpicklingError:

continue

return models

通过 cv,最优的 k 值为7,accurancy=0.282,太低了,明天用cnn重复这个实验...

用KNN算法分类CIFAR-10图片数据的更多相关文章

- Opencv学习之路—Opencv下基于HOG特征的KNN算法分类训练

在计算机视觉研究当中,HOG算法和LBP算法算是基础算法,但是却十分重要.后期很多图像特征提取的算法都是基于HOG和LBP,所以了解和掌握HOG,是学习计算机视觉的前提和基础. HOG算法的原理很多资 ...

- KNN算法——分类部分

1.核心思想 如果一个样本在特征空间中的k个最相邻的样本中的大多数属于某一个类别,则该样本也属于这个类别,并具有这个类别上样本的特性.也就是说找出一个样本的k个最近邻居,将这些邻居的属性的平均值赋给该 ...

- KNN算法[分类算法]

kNN(k-近邻)分类算法的实现 (1) 简介: (2)算法描述: (3) <?php /* *KNN K-近邻方法(分类算法的实现) */ /* *把.txt中的内容读到数组中保存,$file ...

- 机器学习实战(笔记)------------KNN算法

1.KNN算法 KNN算法即K-临近算法,采用测量不同特征值之间的距离的方法进行分类. 以二维情况举例: 假设一条样本含有两个特征.将这两种特征进行数值化,我们就可以假设这两种特种分别 ...

- 吴裕雄--天生自然python机器学习实战:K-NN算法约会网站好友喜好预测以及手写数字预测分类实验

实验设备与软件环境 硬件环境:内存ddr3 4G及以上的x86架构主机一部 系统环境:windows 软件环境:Anaconda2(64位),python3.5,jupyter 内核版本:window ...

- 机器学习【三】k-近邻(kNN)算法

一.kNN算法概述 kNN算法是用来分类的,其依据测量不同特征值之间的距离,其核心思想在于用距离目标最近的k个样本数据的分类来代表目标的分类(这k个样本数据和目标数据最为相似).其精度高,对异常值不敏 ...

- 运用kNN算法识别潜在续费商家

背景与目标 Youzan 是一家SAAS公司,服务于数百万商家,帮助互联网时代的生意人私有化顾客资产.拓展互联网客群.提高经营效率.现在,该公司希望能够从商家的交易数据中,挖掘出有强烈续费倾向的商家, ...

- KNN算法的R语言实现

近邻分类 简言之,就是将未标记的案例归类为与它们最近相似的.带有标记的案例所在的类. 应用领域: 1.计算机视觉:包含字符和面部识别等 2.推荐系统:推荐受众喜欢电影.美食和娱乐等 3.基因工程:识别 ...

- 【Machine Learning】KNN算法虹膜图片识别

K-近邻算法虹膜图片识别实战 作者:白宁超 2017年1月3日18:26:33 摘要:随着机器学习和深度学习的热潮,各种图书层出不穷.然而多数是基础理论知识介绍,缺乏实现的深入理解.本系列文章是作者结 ...

随机推荐

- javascript 图片延迟加载

<!DOCTYPE html> <html xmlns="http://www.w3.org/1999/xhtml"> <head> <m ...

- Python之socketserver源码分析

一.socketserver简介 socketserver是一个创建服务器的框架,封装了许多功能用来处理来自客户端的请求,简化了自己写服务端代码.比如说对于基本的套接字服务器(socket-based ...

- hdu 1515 Anagrams by Stack

题解: 第一:两个字符不相等(即栈顶字符与目标字符不相等):这种情况很容易处理,将匹配word的下一个字符入栈,指针向后挪已为继续递归. 第二:两个字符相等(即栈顶字符与目标字符相等):这种情况有两种 ...

- git入门-分支

1. git分支简介 使用分支可以让你从开发主线上分离开来,然后在新的分支上解决特定问题,同时不会影响主线.像其它的一些版本控制系统,创建分支需要创建整个源代码目录的副本.而Git 的分支是很轻量级的 ...

- SPRING IN ACTION 第4版笔记-第十一章Persisting data with object-relational mapping-002设置JPA的EntityManagerFactory(<persistence-unit>、<jee:jndi-lookup>)

一.EntityManagerFactory的种类 1.The JPA specification defines two kinds of entity managers: Applicatio ...

- (KEIL)MDK5安装与JLINK问题解决方法(支持代码自动补全)

MDK V5在10月8日发布,昨天终于没忍住装上使用了一下,尝了尝鲜. 安装和破解的方法相信各位高手都不在话下,实在不会的可以参考keil4的安装步骤,keil5 和 keil4的安装没有的区别. ...

- Eclipse配置Flex开发环境(转)

Eclipse配置Flex开发环境 开发环境:Eclipse3.2.Flex Builder31.下载安装Flex Builder3,下载地址:http://subject.csdn.net/adob ...

- Android 实现全屏、无标题栏

实现全屏无标题栏: 1.在xml文件中进行配置 AndroidManifest.xml中,找到需要全屏或设置成无标题栏的Activity,在该Activity进行如下配置即可. 实现全屏效果: and ...

- Mybatis Interceptor 拦截器原理 源码分析

Mybatis采用责任链模式,通过动态代理组织多个拦截器(插件),通过这些拦截器可以改变Mybatis的默认行为(诸如SQL重写之类的),由于插件会深入到Mybatis的核心,因此在编写自己的插件前最 ...

- jsp页面变量作用域问题

想实现一个注销的功能,在页面上有个注销按钮,我想一点它就注销,用了js给按钮加了onclick代码,如下 <% session = request.getSession(true); ...