[Repost] Core Kubernetes: Jazz Improv over Orchestration

(This was written so well that I had to repost it.)

This is the first in a series of blog posts that details some of the inner workings of Kubernetes. If you are simply an operator or user of Kubernetes, you don’t necessarily need to understand these details. But if you prefer depth-first learning and really want to understand how things work, this is for you.

This article assumes a working knowledge of Kubernetes. I’m not going to define what Kubernetes is or the core components (e.g. Pod, Node, Kubelet).

In this article we talk about the core moving parts and how they work with each other to make things happen. The general class of systems like Kubernetes is commonly called container orchestration. But orchestration implies a central conductor with an up-front plan, and that isn’t really a great description of Kubernetes. Instead, Kubernetes is more like jazz improv: a set of actors playing off of each other to coordinate and react.

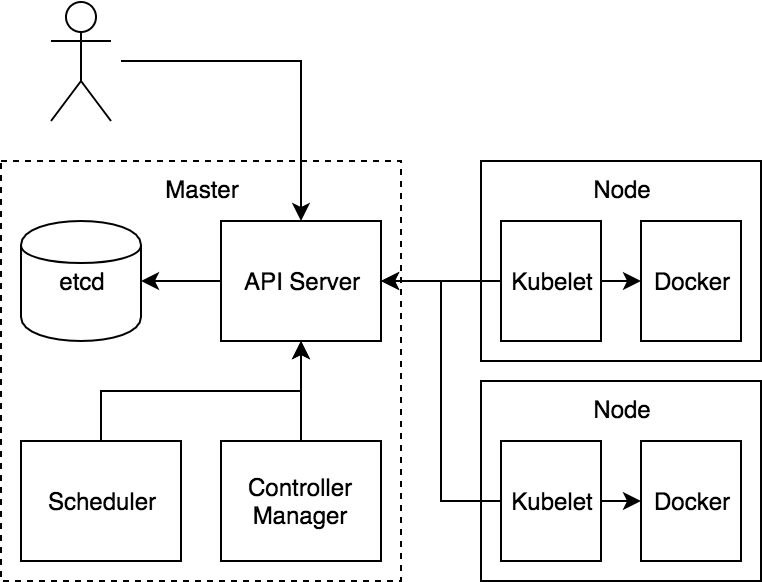

We’ll start by going over the core components and what they do. Then we’ll look at a typical flow that schedules and runs a Pod.

Datastore: etcd

etcd is the core state store for Kubernetes. While there are important in-memory caches throughout the system, etcd is considered the system of record.

Quick summary of etcd: etcd is a clustered database that prizes consistency above availability. Systems of this class (ZooKeeper, parts of Consul) are patterned after a system developed at Google called Chubby. These systems are often called “lock servers” because they can be used to coordinate locking in a distributed system. Personally, I find that name a bit confusing. The data model for etcd (and Chubby) is a simple hierarchy of keys that store simple unstructured values. It actually looks a lot like a file system. Interestingly, at Google, Chubby is most frequently accessed using an abstracted File interface that works across local files, object stores, etc. The highly consistent nature, however, provides for strict ordering of writes and allows clients to do atomic updates of a set of values.

Managing state reliably is one of the more difficult things to do in any system. In a distributed system it is even more difficult, as it brings in many subtle algorithms like Raft or Paxos. By using etcd, Kubernetes itself can concentrate on other parts of the system.
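To make the "ordered writes plus atomic updates" idea concrete, here is a minimal single-process sketch. It is not the etcd API: `VersionedStore`, `put_if_unchanged` and the `/locks/leader` key are hypothetical names chosen for illustration. Each write bumps a global revision, and a conditional write succeeds only if the key has not changed since the caller last looked, which is the primitive a "lock server" builds on.

```python
from typing import Dict, List, Optional, Tuple


class VersionedStore:
    """Toy hierarchical key space (hypothetical, not etcd's real interface)."""

    def __init__(self) -> None:
        self.revision = 0  # global counter: gives every write a strict order
        self.data: Dict[str, Tuple[str, int]] = {}  # key -> (value, mod_revision)

    def get(self, key: str) -> Optional[Tuple[str, int]]:
        return self.data.get(key)

    def put_if_unchanged(self, key: str, value: str, expected_revision: int) -> bool:
        """Write only if the key was last modified at expected_revision (0 = absent)."""
        current = self.data.get(key)
        current_revision = current[1] if current else 0
        if current_revision != expected_revision:
            return False  # someone else wrote first; re-read and retry
        self.revision += 1
        self.data[key] = (value, self.revision)
        return True

    def list_prefix(self, prefix: str) -> List[str]:
        """Keys behave like paths, so a prefix scan looks like listing a directory."""
        return sorted(k for k in self.data if k.startswith(prefix))


store = VersionedStore()
# Two clients race to claim the same key; both believe it does not exist yet.
first = store.put_if_unchanged("/locks/leader", "scheduler-1", expected_revision=0)
second = store.put_if_unchanged("/locks/leader", "scheduler-2", expected_revision=0)
print(first, second, store.get("/locks/leader"))
```

Only the first writer wins. The second has to re-read the key and decide what to do, which is how a conditional write can serve as a lock. In a real cluster the hard part is getting every replica to agree on that ordering; this sketch sidesteps that entirely by running in one process.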

The idea of watch in etcd (and similar systems) is critical for how Kubernetes works. These systems allow clients to perform a lightweight subscription for changes to parts of the key namespace. Clients get notified immediately when something they are watching changes. This can be used as a coordination mechanism between components of the distributed system. One component can write to etcd and other components can immediately react to that change.

One way to think of this is as an inversion of the common pubsub mechanisms. In many queue systems, the topics store no real user data but the messages that are published to those topics contain rich data. For systems like etcd the keys (analogous to topics) store the real data while the messages (notifications of changes) contain no unique rich information. In other words, for queues the topics are simple and the messages rich while systems like etcd are the opposite.

The common pattern is for clients to mirror a subset of the database in memory and then react to changes of that database. Watches are used as an efficient mechanism to keep that cache up to date. If the watch fails for some reason, the client can fall back to polling at the cost of increased load, network traffic and latency.
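The mirror-and-watch pattern can be sketched in a few lines. This is a toy, in-process model under stated assumptions, not etcd client code: `ToyStore`, `Mirror`, the callback signature and the key names are all made up for illustration, and notifications are delivered synchronously rather than over the network.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Optional

# Hypothetical callback signature: (key, new value or None if deleted, revision)
Watcher = Callable[[str, Optional[str], int], None]


class ToyStore:
    """In-memory stand-in for a watchable key-value store."""

    def __init__(self) -> None:
        self.data: Dict[str, str] = {}
        self.revision = 0  # incremented on every write
        self.watchers: Dict[str, List[Watcher]] = defaultdict(list)

    def watch(self, prefix: str, callback: Watcher) -> None:
        self.watchers[prefix].append(callback)

    def put(self, key: str, value: str) -> None:
        self.revision += 1
        self.data[key] = value
        self._notify(key, value)

    def delete(self, key: str) -> None:
        self.revision += 1
        self.data.pop(key, None)
        self._notify(key, None)

    def _notify(self, key: str, value: Optional[str]) -> None:
        # Only subscribers whose prefix covers the changed key hear about it.
        for prefix, callbacks in self.watchers.items():
            if key.startswith(prefix):
                for callback in callbacks:
                    callback(key, value, self.revision)


class Mirror:
    """Client-side cache of one key prefix, kept current by a watch."""

    def __init__(self, store: ToyStore, prefix: str) -> None:
        # Start from a snapshot of the prefix, then apply changes as they arrive.
        self.cache = {k: v for k, v in store.data.items() if k.startswith(prefix)}
        store.watch(prefix, self._on_change)

    def _on_change(self, key: str, value: Optional[str], revision: int) -> None:
        if value is None:
            self.cache.pop(key, None)
        else:
            self.cache[key] = value


store = ToyStore()
store.put("/pods/default/web", "phase=Pending")
mirror = Mirror(store, "/pods/")
store.put("/pods/default/db", "phase=Pending")
store.put("/nodes/node-a", "ready")  # outside the watched prefix, so ignored
store.delete("/pods/default/web")
print(mirror.cache)
```

The mirror never polls: it takes one snapshot and then applies notifications. A real client also has to handle a dropped watch, typically by re-listing and resuming, which is the fallback cost the paragraph above describes.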

Policy Layer: API Server

The heart of Kubernetes is a component that is, creatively, called the API Server. This is the only component in the system that talks to etcd. In fact, etcd is really an implementation detail of the API Server and it is theoretically possible to back Kubernetes with some other storage system.

The API Server is a policy component that provides filtered access to etcd. Its responsibilities are relatively generic in nature and it is currently being broken out so that it can be used as a control plane nexus for other types of systems.

The main currency of the API Server is a resource. These are exposed via a simple REST API. There is a standard structure to most of these resources that enables some expanded features. The nature and reasoning for that API structure is left as a topic for a future post. Regardless, the API Server allows various components to create, read, write, update and watch for changes of resources.

Let’s detail the responsibilities of the API Server:

- Authentication and authorization. Kubernetes has a pluggable auth system. There are some built-in mechanisms for both authenticating users and authorizing those users to access resources. In addition, there are methods to call out to external services (potentially self-hosted on Kubernetes) to provide these services. This type of extensibility is core to how Kubernetes is built.

- Next, the API Server runs a set of admission controllers that can reject or modify requests. These allow policy to be applied and default values to be set. This is a critical place for making sure that the data entering the system is valid while the API Server client is still waiting for request confirmation. While these admission controllers are currently compiled into the API Server, there is ongoing work to make this another extensibility mechanism.

- The API server helps with API versioning. A critical problem when versioning APIs is to allow for the representation of the resources to evolve. Fields will be added, deprecated, re-organized and in other ways transformed. The API Server stores a “true” representation of a resource in etcd and converts/renders that resource depending on the version of the API being satisfied. Planning for versioning and the evolution of APIs has been a key effort for Kubernetes since early in the project. This is part of what allows Kubernetes to offer a decent deprecation policy relatively early in its lifecycle.
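Two of the responsibilities above, admission and versioning, are easy to sketch as plain functions. The following is an illustration only and assumes a heavily simplified resource: the flat `image` field, the `v1`/`v2` version names and every function name here are hypothetical, not the real Kubernetes schema or admission API.

```python
import copy
from typing import Callable, Dict, List

Resource = Dict[str, str]
AdmissionController = Callable[[Resource], Resource]  # may modify or raise


class Rejected(Exception):
    """Raised by an admission controller to refuse a request."""


def default_restart_policy(pod: Resource) -> Resource:
    # Mutating step: fill in a default the client left out.
    pod.setdefault("restartPolicy", "Always")
    return pod


def require_image(pod: Resource) -> Resource:
    # Validating step: refuse data that should never reach the store.
    if not pod.get("image"):
        raise Rejected("image is required")
    return pod


def admit(resource: Resource, chain: List[AdmissionController]) -> Resource:
    """Run each controller in order; any of them may modify or reject."""
    candidate = copy.deepcopy(resource)  # never mutate the caller's object
    for controller in chain:
        candidate = controller(candidate)
    return candidate


def render(stored: Resource, api_version: str) -> Resource:
    """Convert the single stored form into the shape a given API version expects."""
    if api_version == "v2":
        return dict(stored)
    if api_version == "v1":
        # Assumption for the example: v1 called the field "container"
        # before it was renamed to "image" in v2.
        rendered = {k: v for k, v in stored.items() if k != "image"}
        rendered["container"] = stored["image"]
        return rendered
    raise ValueError(f"unknown API version: {api_version}")


chain = [default_restart_policy, require_image]
stored = admit({"name": "web", "image": "nginx"}, chain)
print(render(stored, "v1"))

try:
    admit({"name": "broken"}, chain)
except Rejected as err:
    print("rejected:", err)
```

The point of the shape is that there is exactly one stored representation. Every API version is a view over it, so adding a version means adding a conversion, not migrating the data.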

A critical feature of the API Server is that it also supports the idea of watch. This means that clients of the API Server can employ the same coordination patterns as with etcd. Most coordination in Kubernetes consists of a component writing to an API Server resource that another component is watching. The second component will then react to changes almost immediately.

Business Logic: Controller Manager and Scheduler

The last piece of the puzzle is the code that actually makes the thing work! These are the components that coordinate through the API Server. These are bundled into separate servers called the Controller Manager and the Scheduler. The choice to break these out was so they couldn’t “cheat”. If the core parts of the system had to talk to the API Server like every other component it would help ensure that we were building an extensible system from the start. The fact that there are just two of these is an accident of history. They could conceivably be combined into one big binary or broken out into a dozen+ separate servers.

The components here do all sorts of things to make the system work. The scheduler, specifically, (a) looks for Pods that aren’t assigned to a node (unbound Pods), (b) examines the state of the cluster (cached in memory), (c) picks a node that has free space and meets other constraints, and (d) binds that Pod to a node.
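Steps (a) through (d) map onto a short function. This is a sketch under simplifying assumptions, not the real scheduler: CPU is the only resource, a label selector is the only constraint, and "pick the node with the most free CPU" is an arbitrary stand-in for the real scoring logic. All class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    free_cpu: int  # millicores not yet allocated
    labels: Dict[str, str] = field(default_factory=dict)


@dataclass
class Pod:
    name: str
    cpu: int  # millicores requested
    node_selector: Dict[str, str] = field(default_factory=dict)
    node_name: Optional[str] = None  # None means unbound


def fits(pod: Pod, node: Node) -> bool:
    has_room = node.free_cpu >= pod.cpu
    selector_ok = all(node.labels.get(k) == v for k, v in pod.node_selector.items())
    return has_room and selector_ok


def schedule_once(pods: List[Pod], nodes: List[Node]) -> Dict[str, str]:
    """One pass over the cached cluster state; returns the new bindings."""
    bindings: Dict[str, str] = {}
    for pod in pods:
        if pod.node_name is not None:
            continue  # (a) only unbound Pods are considered
        candidates = [n for n in nodes if fits(pod, n)]  # (b) and (c)
        if not candidates:
            continue  # nothing fits; the Pod stays pending
        chosen = max(candidates, key=lambda n: n.free_cpu)
        pod.node_name = chosen.name  # (d) bind the Pod
        chosen.free_cpu -= pod.cpu  # keep the in-memory view current
        bindings[pod.name] = chosen.name
    return bindings


nodes = [Node("node-a", 500, {"disk": "ssd"}), Node("node-b", 2000)]
pods = [
    Pod("web", 300, {"disk": "ssd"}),
    Pod("batch", 1500),
    Pod("huge", 4000),
    Pod("old", 100, node_name="node-b"),  # already bound, so skipped
]
bindings = schedule_once(pods, nodes)
print(bindings)
```

In the real system the binding in step (d) is a write back to the API Server rather than a local assignment. The local bookkeeping here plays the role of the scheduler's in-memory cache.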

Similarly, there is code (a “controller”) in the Controller Manager to implement the behavior of a ReplicaSet. (As a reminder, the ReplicaSet ensures that there are a set number of replicas of a Pod Template running at any one time.) This controller will watch both the ReplicaSet resource and a set of Pods based on the selector in that resource. It then takes action to create/destroy Pods in order to maintain a stable set of Pods as described in the ReplicaSet. Most controllers follow this type of pattern.
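The controller pattern boils down to comparing desired state with observed state and acting on the difference. Here is a minimal sketch of that reconcile step, assuming resources are plain dictionaries. The field names (`replicas`, `selector`, `template_labels`) and the naming scheme for new Pods are simplifications invented for the example, not the real ReplicaSet controller.

```python
import itertools
from typing import Dict, List

_suffix = itertools.count(1)  # hypothetical source of unique Pod name suffixes


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    return all(labels.get(k) == v for k, v in selector.items())


def reconcile(replica_set: dict, pods: List[dict]) -> List[dict]:
    """Return the Pod list after one reconcile pass for a single ReplicaSet."""
    owned = [p for p in pods if matches(replica_set["selector"], p["labels"])]
    diff = replica_set["replicas"] - len(owned)
    result = list(pods)
    if diff > 0:
        # Too few: create Pods from the template until the count matches.
        for _ in range(diff):
            result.append({
                "name": f'{replica_set["name"]}-{next(_suffix)}',
                "labels": dict(replica_set["template_labels"]),
            })
    elif diff < 0:
        # Too many: delete the surplus, leaving unrelated Pods untouched.
        doomed = {p["name"] for p in owned[:-diff]}
        result = [p for p in result if p["name"] not in doomed]
    return result


def count_matching(replica_set: dict, pods: List[dict]) -> int:
    return sum(1 for p in pods if matches(replica_set["selector"], p["labels"]))


rs = {
    "name": "web",
    "replicas": 3,
    "selector": {"app": "web"},
    "template_labels": {"app": "web"},
}
pods = [
    {"name": "web-a", "labels": {"app": "web"}},
    {"name": "db-a", "labels": {"app": "db"}},
]

pods = reconcile(rs, pods)      # scale up from 1 to 3
scaled_up = count_matching(rs, pods)

rs["replicas"] = 1
pods = reconcile(rs, pods)      # scale down from 3 to 1
scaled_down = count_matching(rs, pods)
print(scaled_up, scaled_down, [p["name"] for p in pods])
```

Running `reconcile` again with no change in desired state is a no-op. That idempotence is what lets a controller simply re-run whenever a watch fires, without tracking what it did last time.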

Node Agent: Kubelet

Finally, there is the agent that sits on the node. This also authenticates to the API Server like any other component. It is responsible for watching the set of Pods that are bound to its node and making sure those Pods are running. It then reports back status as things change with respect to those Pods.

A Typical Flow

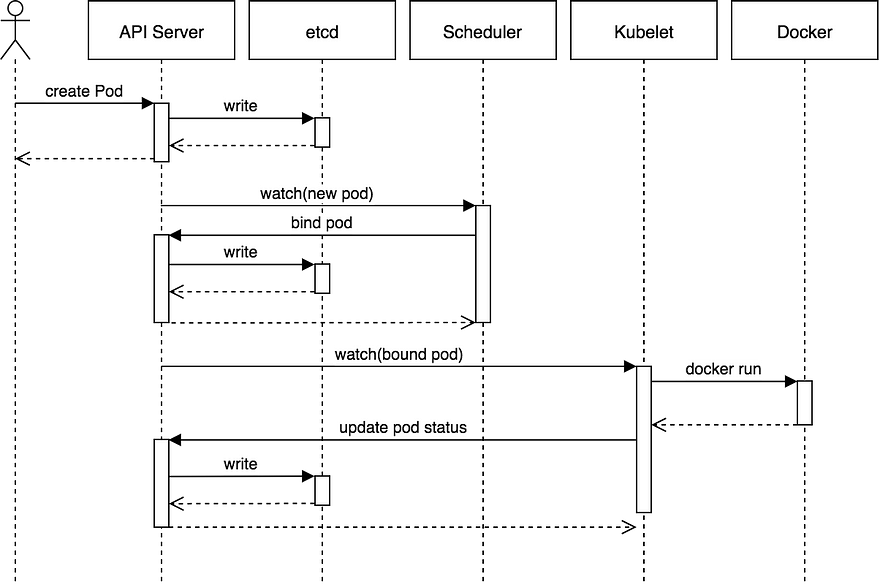

To help understand how this works, let’s work through an example of how things get done in Kubernetes.

This sequence diagram shows how a typical flow works for scheduling a Pod. This shows the (somewhat rare) case where a user is creating a Pod directly. More typically, the user will create something like a ReplicaSet and it will be the ReplicaSet that creates the Pod.

The basic flow:

- The user creates a Pod via the API Server and the API server writes it to etcd.

- The scheduler notices an “unbound” Pod and decides which node to run that Pod on. It writes that binding back to the API Server.

- The Kubelet notices a change in the set of Pods that are bound to its node. It, in turn, runs the container via the container runtime (e.g. Docker).

- The Kubelet monitors the status of the Pod via the container runtime. As things change, the Kubelet will reflect the current status back to the API Server.
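The four steps above can be played out end to end with toy stand-ins for each component. Everything in this sketch is hypothetical and in-process: `ToyAPIServer`, `ToyScheduler` and `ToyKubelet` are illustrations, callbacks replace the HTTP watch, a dictionary replaces etcd, and "running a container" is just adding a name to a set.

```python
from typing import Callable, Dict, List

Pod = Dict[str, str]


class ToyAPIServer:
    """Stores Pods and notifies every watcher on each write."""

    def __init__(self) -> None:
        self.pods: Dict[str, Pod] = {}  # stands in for etcd
        self.watchers: List[Callable[[Pod], None]] = []
        self.log: List[str] = []

    def watch_pods(self, callback: Callable[[Pod], None]) -> None:
        self.watchers.append(callback)

    def write_pod(self, actor: str, pod: Pod) -> None:
        self.pods[pod["name"]] = dict(pod)
        self.log.append(
            f'{actor}: {pod["name"]} node={pod.get("node", "")} phase={pod["phase"]}'
        )
        for callback in list(self.watchers):
            callback(dict(pod))  # each watcher gets its own copy


class ToyScheduler:
    def __init__(self, api: ToyAPIServer, nodes: List[str]) -> None:
        self.api, self.nodes = api, nodes
        api.watch_pods(self.on_pod)

    def on_pod(self, pod: Pod) -> None:
        if not pod.get("node"):  # react only to unbound Pods
            pod["node"] = self.nodes[0]  # placement policy elided: first node wins
            self.api.write_pod("scheduler", pod)


class ToyKubelet:
    def __init__(self, api: ToyAPIServer, node: str) -> None:
        self.api, self.node = api, node
        self.running = set()
        api.watch_pods(self.on_pod)

    def on_pod(self, pod: Pod) -> None:
        if pod.get("node") == self.node and pod["name"] not in self.running:
            self.running.add(pod["name"])  # stands in for the container runtime call
            pod["phase"] = "Running"
            self.api.write_pod(f"kubelet/{self.node}", pod)  # report status back


api = ToyAPIServer()
scheduler = ToyScheduler(api, ["node-a"])
kubelet = ToyKubelet(api, "node-a")

# The only thing the "user" does is create an unbound Pod.
api.write_pod("user", {"name": "web", "phase": "Pending"})
for entry in api.log:
    print(entry)
```

Notice that nothing ever tells the scheduler or the Kubelet what to do. Each one watches, decides for itself, and writes its result back, and the write is what triggers the next actor. The real components do this asynchronously over the network, so ordering and retries are far messier than in this synchronous toy.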

Summing Up

By using the API Server as a central coordination point, Kubernetes is able to have a set of components interact with each other in a loosely coupled manner. Hopefully this gives you an idea of how Kubernetes is more jazz improv than orchestration.

Please give us feedback on this article and suggestions for future “under the covers” type pieces. Hit me up on twitter at @jbeda or @heptio.

(Original article: https://blog.heptio.com/core-kubernetes-jazz-improv-over-orchestration-a7903ea92ca)
