云端炼丹,算力白嫖,基于云端GPU(Colab)使用So-vits库制作AI特朗普演唱《国际歌》

人工智能AI技术早已深入到人们生活的每一个角落,君不见AI孙燕姿的歌声此起彼伏,不绝于耳,但并不是每个人都拥有一块N卡,没有GPU的日子总是不好过的,但是没关系,山人有妙计,本次我们基于Google的Colab免费云端服务器来搭建深度学习环境,制作AI特朗普,让他高唱《国际歌》。

Colab(全名Colaboratory ),它是Google公司的一款基于云端的基础免费服务器产品,可以在B端,也就是浏览器里面编写和执行Python代码,非常方便,贴心的是,Colab可以给用户分配免费的GPU进行使用,对于没有N卡的朋友来说,这已经远远超出了业界良心的范畴,简直就是在做慈善事业。

配置Colab

Colab是基于Google云盘的产品,我们可以将深度学习的Python脚本、训练好的模型、以及训练集等数据直接存放在云盘中,然后通过Colab执行即可。

首先访问Google云盘:drive.google.com

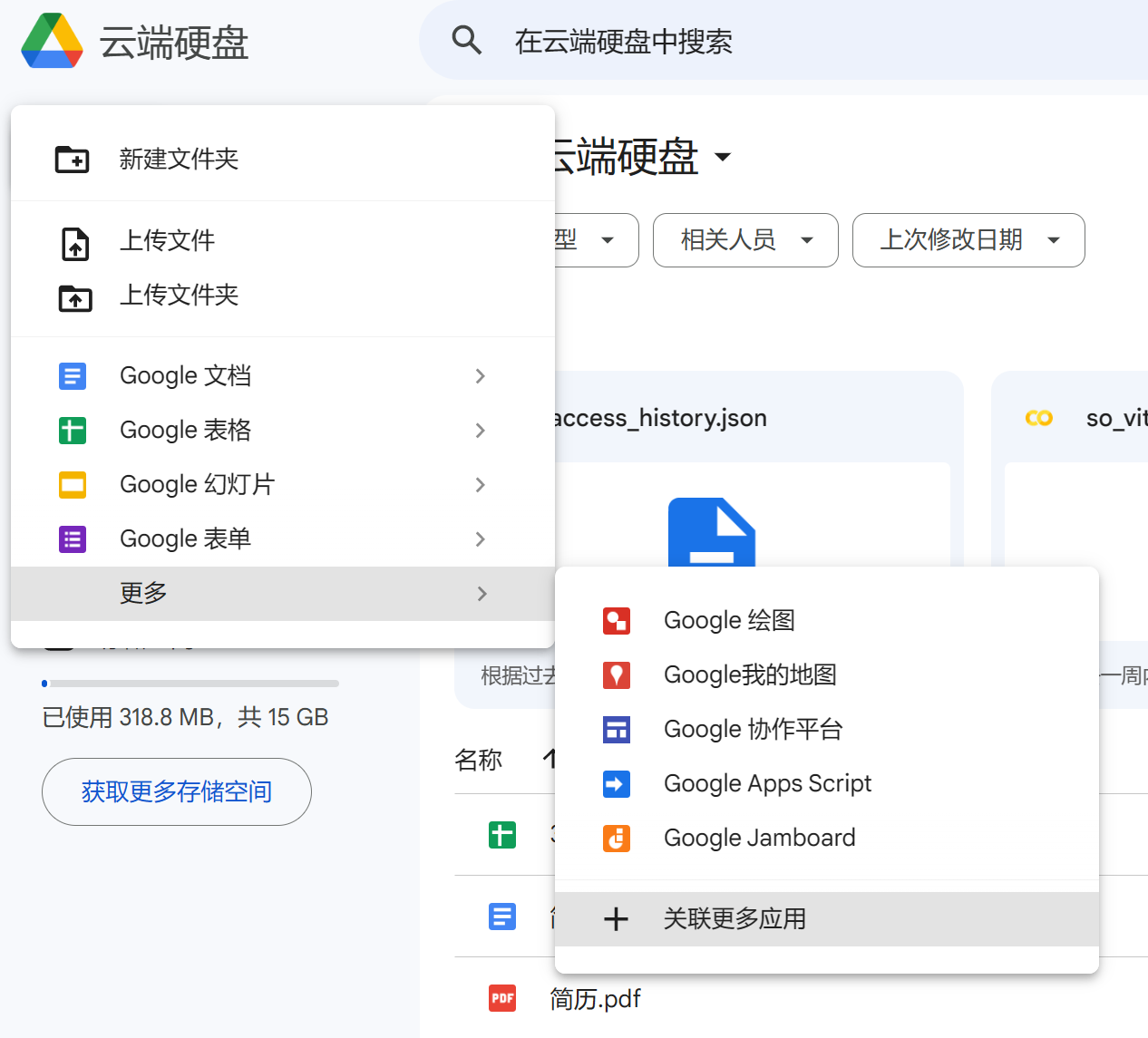

随后点击新建,选择关联更多应用:

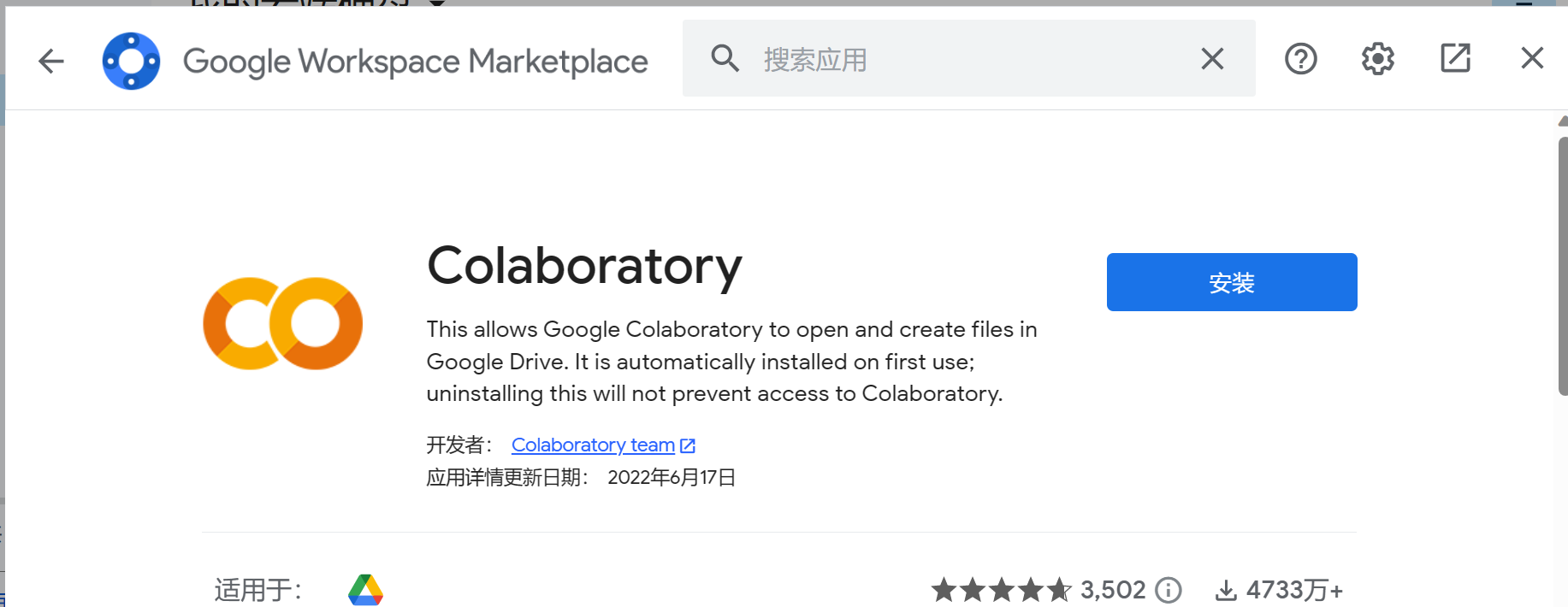

接着安装Colab即可:

至此,云盘和Colab就关联好了,现在我们可以新建一个脚本文件my_sovits.ipynb文件,键入代码:

hello colab

随后,按快捷键 ctrl + 回车,即可运行代码:

这里需要注意的是,Colab使用的是基于Jupyter Notebook的ipynb格式的Python代码。

Jupyter Notebook是以网页的形式打开,可以在网页页面中直接编写代码和运行代码,代码的运行结果也会直接在代码块下显示。如在编程过程中需要编写说明文档,可在同一个页面中直接编写,便于作及时的说明和解释。

随后设置一下显卡类型:

接着运行命令,查看GPU版本:

!/usr/local/cuda/bin/nvcc --version

!nvidia-smi

程序返回:

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2022 NVIDIA Corporation

Built on Wed_Sep_21_10:33:58_PDT_2022

Cuda compilation tools, release 11.8, V11.8.89

Build cuda_11.8.r11.8/compiler.31833905_0

Tue May 16 04:49:23 2023

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 525.85.12 Driver Version: 525.85.12 CUDA Version: 12.0 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 Tesla T4 Off | 00000000:00:04.0 Off | 0 |

| N/A 65C P8 13W / 70W | 0MiB / 15360MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

这里建议选择Tesla T4的显卡类型,性能更突出。

至此Colab就配置好了。

配置So-vits

下面我们配置so-vits环境,可以通过pip命令安装一些基础依赖:

!pip install pyworld==0.3.2

!pip install numpy==1.23.5

注意jupyter语言是通过叹号来运行命令。

注意,由于不是本地环境,有的时候colab会提醒:

Looking in indexes: https://pypi.org/simple, https://us-python.pkg.dev/colab-wheels/public/simple/

Collecting numpy==1.23.5

Downloading numpy-1.23.5-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (17.1 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 17.1/17.1 MB 80.1 MB/s eta 0:00:00

Installing collected packages: numpy

Attempting uninstall: numpy

Found existing installation: numpy 1.22.4

Uninstalling numpy-1.22.4:

Successfully uninstalled numpy-1.22.4

Successfully installed numpy-1.23.5

WARNING: The following packages were previously imported in this runtime:

[numpy]

You must restart the runtime in order to use newly installed versions.

此时numpy库需要重启runtime才可以导入操作。

重启runtime后,需要再重新安装一次,直到系统提示依赖已经存在:

Looking in indexes: https://pypi.org/simple, https://us-python.pkg.dev/colab-wheels/public/simple/

Requirement already satisfied: numpy==1.23.5 in /usr/local/lib/python3.10/dist-packages (1.23.5)

随后,克隆so-vits项目,并且安装项目的依赖:

import os

import glob

!git clone https://github.com/effusiveperiscope/so-vits-svc -b eff-4.0

os.chdir('/content/so-vits-svc')

# install requirements one-at-a-time to ignore exceptions

!cat requirements.txt | xargs -n 1 pip install --extra-index-url https://download.pytorch.org/whl/cu117

!pip install praat-parselmouth

!pip install ipywidgets

!pip install huggingface_hub

!pip install pip==23.0.1 # fix pip version for fairseq install

!pip install fairseq==0.12.2

!jupyter nbextension enable --py widgetsnbextension

existing_files = glob.glob('/content/**/*.*', recursive=True)

!pip install --upgrade protobuf==3.9.2

!pip uninstall -y tensorflow

!pip install tensorflow==2.11.0

安装好依赖之后,定义一些前置工具方法:

os.chdir('/content/so-vits-svc') # force working-directory to so-vits-svc - this line is just for safety and is probably not required

import tarfile

import os

from zipfile import ZipFile

# taken from https://github.com/CookiePPP/cookietts/blob/master/CookieTTS/utils/dataset/extract_unknown.py

def extract(path):

if path.endswith(".zip"):

with ZipFile(path, 'r') as zipObj:

zipObj.extractall(os.path.split(path)[0])

elif path.endswith(".tar.bz2"):

tar = tarfile.open(path, "r:bz2")

tar.extractall(os.path.split(path)[0])

tar.close()

elif path.endswith(".tar.gz"):

tar = tarfile.open(path, "r:gz")

tar.extractall(os.path.split(path)[0])

tar.close()

elif path.endswith(".tar"):

tar = tarfile.open(path, "r:")

tar.extractall(os.path.split(path)[0])

tar.close()

elif path.endswith(".7z"):

import py7zr

archive = py7zr.SevenZipFile(path, mode='r')

archive.extractall(path=os.path.split(path)[0])

archive.close()

else:

raise NotImplementedError(f"{path} extension not implemented.")

# taken from https://github.com/CookiePPP/cookietts/tree/master/CookieTTS/_0_download/scripts

# megatools download urls

win64_url = "https://megatools.megous.com/builds/builds/megatools-1.11.1.20230212-win64.zip"

win32_url = "https://megatools.megous.com/builds/builds/megatools-1.11.1.20230212-win32.zip"

linux_url = "https://megatools.megous.com/builds/builds/megatools-1.11.1.20230212-linux-x86_64.tar.gz"

# download megatools

from sys import platform

import os

import urllib.request

import subprocess

from time import sleep

if platform == "linux" or platform == "linux2":

dl_url = linux_url

elif platform == "darwin":

raise NotImplementedError('MacOS not supported.')

elif platform == "win32":

dl_url = win64_url

else:

raise NotImplementedError ('Unknown Operating System.')

dlname = dl_url.split("/")[-1]

if dlname.endswith(".zip"):

binary_folder = dlname[:-4] # remove .zip

elif dlname.endswith(".tar.gz"):

binary_folder = dlname[:-7] # remove .tar.gz

else:

raise NameError('downloaded megatools has unknown archive file extension!')

if not os.path.exists(binary_folder):

print('"megatools" not found. Downloading...')

if not os.path.exists(dlname):

urllib.request.urlretrieve(dl_url, dlname)

assert os.path.exists(dlname), 'failed to download.'

extract(dlname)

sleep(0.10)

os.unlink(dlname)

print("Done!")

binary_folder = os.path.abspath(binary_folder)

def megadown(download_link, filename='.', verbose=False):

"""Use megatools binary executable to download files and folders from MEGA.nz ."""

filename = ' --path "'+os.path.abspath(filename)+'"' if filename else ""

wd_old = os.getcwd()

os.chdir(binary_folder)

try:

if platform == "linux" or platform == "linux2":

subprocess.call(f'./megatools dl{filename}{" --debug http" if verbose else ""} {download_link}', shell=True)

elif platform == "win32":

subprocess.call(f'megatools.exe dl{filename}{" --debug http" if verbose else ""} {download_link}', shell=True)

except:

os.chdir(wd_old) # don't let user stop download without going back to correct directory first

raise

os.chdir(wd_old)

return filename

import urllib.request

from tqdm import tqdm

import gdown

from os.path import exists

def request_url_with_progress_bar(url, filename):

class DownloadProgressBar(tqdm):

def update_to(self, b=1, bsize=1, tsize=None):

if tsize is not None:

self.total = tsize

self.update(b * bsize - self.n)

def download_url(url, filename):

with DownloadProgressBar(unit='B', unit_scale=True,

miniters=1, desc=url.split('/')[-1]) as t:

filename, headers = urllib.request.urlretrieve(url, filename=filename, reporthook=t.update_to)

print("Downloaded to "+filename)

download_url(url, filename)

def download(urls, dataset='', filenames=None, force_dl=False, username='', password='', auth_needed=False):

assert filenames is None or len(urls) == len(filenames), f"number of urls does not match filenames. Expected {len(filenames)} urls, containing the files listed below.\n{filenames}"

assert not auth_needed or (len(username) and len(password)), f"username and password needed for {dataset} Dataset"

if filenames is None:

filenames = [None,]*len(urls)

for i, (url, filename) in enumerate(zip(urls, filenames)):

print(f"Downloading File from {url}")

#if filename is None:

# filename = url.split("/")[-1]

if filename and (not force_dl) and exists(filename):

print(f"{filename} Already Exists, Skipping.")

continue

if 'drive.google.com' in url:

assert 'https://drive.google.com/uc?id=' in url, 'Google Drive links should follow the format "https://drive.google.com/uc?id=1eQAnaoDBGQZldPVk-nzgYzRbcPSmnpv6".\nWhere id=XXXXXXXXXXXXXXXXX is the Google Drive Share ID.'

gdown.download(url, filename, quiet=False)

elif 'mega.nz' in url:

megadown(url, filename)

else:

#urllib.request.urlretrieve(url, filename=filename) # no progress bar

request_url_with_progress_bar(url, filename) # with progress bar

import huggingface_hub

import os

import shutil

class HFModels:

def __init__(self, repo = "therealvul/so-vits-svc-4.0",

model_dir = "hf_vul_models"):

self.model_repo = huggingface_hub.Repository(local_dir=model_dir,

clone_from=repo, skip_lfs_files=True)

self.repo = repo

self.model_dir = model_dir

self.model_folders = os.listdir(model_dir)

self.model_folders.remove('.git')

self.model_folders.remove('.gitattributes')

def list_models(self):

return self.model_folders

# Downloads model;

# copies config to target_dir and moves model to target_dir

def download_model(self, model_name, target_dir):

if not model_name in self.model_folders:

raise Exception(model_name + " not found")

model_dir = self.model_dir

charpath = os.path.join(model_dir,model_name)

gen_pt = next(x for x in os.listdir(charpath) if x.startswith("G_"))

cfg = next(x for x in os.listdir(charpath) if x.endswith("json"))

try:

clust = next(x for x in os.listdir(charpath) if x.endswith("pt"))

except StopIteration as e:

print("Note - no cluster model for "+model_name)

clust = None

if not os.path.exists(target_dir):

os.makedirs(target_dir, exist_ok=True)

gen_dir = huggingface_hub.hf_hub_download(repo_id = self.repo,

filename = model_name + "/" + gen_pt) # this is a symlink

if clust is not None:

clust_dir = huggingface_hub.hf_hub_download(repo_id = self.repo,

filename = model_name + "/" + clust) # this is a symlink

shutil.move(os.path.realpath(clust_dir), os.path.join(target_dir, clust))

clust_out = os.path.join(target_dir, clust)

else:

clust_out = None

shutil.copy(os.path.join(charpath,cfg),os.path.join(target_dir, cfg))

shutil.move(os.path.realpath(gen_dir), os.path.join(target_dir, gen_pt))

return {"config_path": os.path.join(target_dir,cfg),

"generator_path": os.path.join(target_dir,gen_pt),

"cluster_path": clust_out}

# Example usage

# vul_models = HFModels()

# print(vul_models.list_models())

# print("Applejack (singing)" in vul_models.list_models())

# vul_models.download_model("Applejack (singing)","models/Applejack (singing)")

print("Finished!")

这些方法可以帮助我们下载、解压和加载模型。

音色模型下载和线上推理

接着将特朗普的音色模型和配置文件进行下载,下载地址是:

https://huggingface.co/Nardicality/so-vits-svc-4.0-models/tree/main/Trump18.5k

随后模型文件放到项目的models文件夹,配置文件则放入config文件夹。

接着将需要转换的歌曲上传到和项目平行的目录中。

运行代码:

import os

import glob

import json

import copy

import logging

import io

from ipywidgets import widgets

from pathlib import Path

from IPython.display import Audio, display

os.chdir('/content/so-vits-svc')

import torch

from inference import infer_tool

from inference import slicer

from inference.infer_tool import Svc

import soundfile

import numpy as np

MODELS_DIR = "models"

def get_speakers():

speakers = []

for _,dirs,_ in os.walk(MODELS_DIR):

for folder in dirs:

cur_speaker = {}

# Look for G_****.pth

g = glob.glob(os.path.join(MODELS_DIR,folder,'G_*.pth'))

if not len(g):

print("Skipping "+folder+", no G_*.pth")

continue

cur_speaker["model_path"] = g[0]

cur_speaker["model_folder"] = folder

# Look for *.pt (clustering model)

clst = glob.glob(os.path.join(MODELS_DIR,folder,'*.pt'))

if not len(clst):

print("Note: No clustering model found for "+folder)

cur_speaker["cluster_path"] = ""

else:

cur_speaker["cluster_path"] = clst[0]

# Look for config.json

cfg = glob.glob(os.path.join(MODELS_DIR,folder,'*.json'))

if not len(cfg):

print("Skipping "+folder+", no config json")

continue

cur_speaker["cfg_path"] = cfg[0]

with open(cur_speaker["cfg_path"]) as f:

try:

cfg_json = json.loads(f.read())

except Exception as e:

print("Malformed config json in "+folder)

for name, i in cfg_json["spk"].items():

cur_speaker["name"] = name

cur_speaker["id"] = i

if not name.startswith('.'):

speakers.append(copy.copy(cur_speaker))

return sorted(speakers, key=lambda x:x["name"].lower())

logging.getLogger('numba').setLevel(logging.WARNING)

chunks_dict = infer_tool.read_temp("inference/chunks_temp.json")

existing_files = []

slice_db = -40

wav_format = 'wav'

class InferenceGui():

def __init__(self):

self.speakers = get_speakers()

self.speaker_list = [x["name"] for x in self.speakers]

self.speaker_box = widgets.Dropdown(

options = self.speaker_list

)

display(self.speaker_box)

def convert_cb(btn):

self.convert()

def clean_cb(btn):

self.clean()

self.convert_btn = widgets.Button(description="Convert")

self.convert_btn.on_click(convert_cb)

self.clean_btn = widgets.Button(description="Delete all audio files")

self.clean_btn.on_click(clean_cb)

self.trans_tx = widgets.IntText(value=0, description='Transpose')

self.cluster_ratio_tx = widgets.FloatText(value=0.0,

description='Clustering Ratio')

self.noise_scale_tx = widgets.FloatText(value=0.4,

description='Noise Scale')

self.auto_pitch_ck = widgets.Checkbox(value=False, description=

'Auto pitch f0 (do not use for singing)')

display(self.trans_tx)

display(self.cluster_ratio_tx)

display(self.noise_scale_tx)

display(self.auto_pitch_ck)

display(self.convert_btn)

display(self.clean_btn)

def convert(self):

trans = int(self.trans_tx.value)

speaker = next(x for x in self.speakers if x["name"] ==

self.speaker_box.value)

spkpth2 = os.path.join(os.getcwd(),speaker["model_path"])

print(spkpth2)

print(os.path.exists(spkpth2))

svc_model = Svc(speaker["model_path"], speaker["cfg_path"],

cluster_model_path=speaker["cluster_path"])

input_filepaths = [f for f in glob.glob('/content/**/*.*', recursive=True)

if f not in existing_files and

any(f.endswith(ex) for ex in ['.wav','.flac','.mp3','.ogg','.opus'])]

for name in input_filepaths:

print("Converting "+os.path.split(name)[-1])

infer_tool.format_wav(name)

wav_path = str(Path(name).with_suffix('.wav'))

wav_name = Path(name).stem

chunks = slicer.cut(wav_path, db_thresh=slice_db)

audio_data, audio_sr = slicer.chunks2audio(wav_path, chunks)

audio = []

for (slice_tag, data) in audio_data:

print(f'#=====segment start, '

f'{round(len(data)/audio_sr, 3)}s======')

length = int(np.ceil(len(data) / audio_sr *

svc_model.target_sample))

if slice_tag:

print('jump empty segment')

_audio = np.zeros(length)

else:

# Padding "fix" for noise

pad_len = int(audio_sr * 0.5)

data = np.concatenate([np.zeros([pad_len]),

data, np.zeros([pad_len])])

raw_path = io.BytesIO()

soundfile.write(raw_path, data, audio_sr, format="wav")

raw_path.seek(0)

_cluster_ratio = 0.0

if speaker["cluster_path"] != "":

_cluster_ratio = float(self.cluster_ratio_tx.value)

out_audio, out_sr = svc_model.infer(

speaker["name"], trans, raw_path,

cluster_infer_ratio = _cluster_ratio,

auto_predict_f0 = bool(self.auto_pitch_ck.value),

noice_scale = float(self.noise_scale_tx.value))

_audio = out_audio.cpu().numpy()

pad_len = int(svc_model.target_sample * 0.5)

_audio = _audio[pad_len:-pad_len]

audio.extend(list(infer_tool.pad_array(_audio, length)))

res_path = os.path.join('/content/',

f'{wav_name}_{trans}_key_'

f'{speaker["name"]}.{wav_format}')

soundfile.write(res_path, audio, svc_model.target_sample,

format=wav_format)

display(Audio(res_path, autoplay=True)) # display audio file

pass

def clean(self):

input_filepaths = [f for f in glob.glob('/content/**/*.*', recursive=True)

if f not in existing_files and

any(f.endswith(ex) for ex in ['.wav','.flac','.mp3','.ogg','.opus'])]

for f in input_filepaths:

os.remove(f)

inference_gui = InferenceGui()

此时系统会自动在根目录,也就是content下寻找音乐文件,包含但不限于wav、flac、mp3等等,随后根据下载的模型进行推理,推理之前会自动对文件进行背景音分离以及降噪和切片等操作。

推理结束之后,会自动播放转换后的歌曲。

结语

如果是刚开始使用Colab,默认分配的显存是15G左右,完全可以胜任大多数训练和推理任务,但是如果经常用它挂机运算,能分配到的显卡配置就会渐进式地降低,如果需要长时间并且相对稳定的GPU资源,还是需要付费订阅Colab pro服务,另外Google云盘的免费使用空间也是15G,如果模型下多了,导致云盘空间不足,运行代码也会报错,所以最好定期清理Google云盘,以此保证深度学习任务的正常运行。

云端炼丹,算力白嫖,基于云端GPU(Colab)使用So-vits库制作AI特朗普演唱《国际歌》的更多相关文章

- 深度学习,机器学习神器,白嫖免费GPU

深度学习,机器学习神器,白嫖免费GPU! 最近在学习计算机视觉,自己的小本本没有那么高的算力,层级尝试过Google的Colab,以及移动云的GPU算力,都不算理想.如果数据集比较小,可以试试Cola ...

- 基于云端的通用权限管理系统,SAAS服务,基于SAAS的权限管理,基于SAAS的单点登录SSO,企业单点登录,企业系统监控,企业授权认证中心

基于云端的通用权限管理系统 SAAS服务 基于SAAS的权限管理 基于SAAS的单点登录SSO 基于.Net的SSO,单点登录系统,提供SAAS服务 基于Extjs 4.2 的企业信息管理系统 基于E ...

- Spring配置类深度剖析-总结篇(手绘流程图,可白嫖)

生命太短暂,不要去做一些根本没有人想要的东西.本文已被 https://www.yourbatman.cn 收录,里面一并有Spring技术栈.MyBatis.JVM.中间件等小而美的专栏供以免费学习 ...

- math type白嫖教程

math type作为一款很方便的公式编辑器,缺陷就是要收费,只有30天的免费试用.这里有一个白嫖的方法,当你30天的期限过了之后,只需要删除HKEY_CURRENT_USER\Software\In ...

- 如何白嫖微软Azure12个月及避坑指南

Azure是微软提供的一个云服务平台.是全球除了AWS外最大的云服务提供商.Azure是微软除了windows之外另外一个王牌,微软错过了移动端,还好抓住了云服务.这里的Azure是Azure国际不是 ...

- 5分钟白嫖我常用的免费效率软件/工具!效率300% up!

Mac 免费效率软件/工具推荐 1. uTools(Windows/Mac) 还在为了翻译 English 而专门下载一个翻译软件吗? 还在为了格式某个 json 文本.时间戳转换而打开网址百度地址吗 ...

- 白嫖码云Pages,两分钟的事,就能搭个百度能搜到的个人博客平台

为了攒点钱让女儿做个富二代(笑),我就没掏钱买服务器,白嫖 GitHub Pages 搭了一个博客平台.不过遗憾的是,GitHub Pages 只能被谷歌收录,无法被百度收录,这就白白损失了一大波流量 ...

- 白嫖党看番神器 AnimeSeacher

- Anime Searcher - 简介 通过整合第三方网站的视频和弹幕资源, 给白嫖党提供最舒适的看番体验~ 目前有 4 个资源搜索引擎和 2 个弹幕搜索引擎, 资源丰富, 更新超快, 不用下载, ...

- 教你怎么"白嫖"图床

本次白嫖适用于有自己域名的. 访问 又拍云,注册 注册好后,访问又拍云联盟 按照说明申请即可 结束 静等通过即可,经过我与又拍云联系核实他们审核通过都会在每周五的下午18:00统一发送审核结果邮件通知 ...

- Xray高级版白嫖破解指南

啊,阿Sir,免费的还想白嫖?? 好啦好啦不开玩笑 Xray是从长亭洞鉴核心引擎中提取出的社区版漏洞扫描神器,支持主动.被动多种扫描方式,自备盲打平台.可以灵活定义 POC,功能丰富,调用简单,支持 ...

随机推荐

- 基于LAMP搭建WordPress博客

1.安装Apache. 1)执行如下命令,安装Apache服务及其扩展包. yum -y install httpd mod_ssl mod_perl mod_auth_mysql 2)执行如下命令, ...

- AD域安全攻防实践(附攻防矩阵图)

以域控为基础架构,通过域控实现对用户和计算机资源的统一管理,带来便利的同时也成为了最受攻击者重点攻击的集权系统. 01.攻击篇 针对域控的攻击技术,在Windows通用攻击技术的基础上自成一套技术体系 ...

- 三天吃透Spring Cloud面试八股文

本文已经收录到Github仓库,该仓库包含计算机基础.Java基础.多线程.JVM.数据库.Redis.Spring.Mybatis.SpringMVC.SpringBoot.分布式.微服务.设计模式 ...

- 使用ASP.NET CORE SignalR实现APP扫描登录

使用signalr实现APP扫码登录 1. 背景介绍 在移动化时代,web开发很多时候都会带着移动端开发,这个时候为了减少重复输入账号密码以及安全性,很多APP端都会提供一个扫码登录功能,web端生成 ...

- 从零开始学Java系列之Java是什么?它到底是个啥?

全文大约[5000]字,不说废话,只讲可以让你学到技术.明白原理的纯干货!文章带有丰富案例及配图,只为让你更好的理解和运用文中的技术概念,给你带来具有足够的思想启迪...... ----------- ...

- NEFUOJ P903字符串去星问题

Description 有一个字符串(长度小于100),要统计其中有多少个,并输出该字符串去掉后的新字符串. Input 输入数据有多组,每组1个连续的字符串; Output 在1行内输出该串内有多少 ...

- 各类电商平台批量获取商品信息 API 详细操作说明

前言获取商品信息可以更加快捷的查看商品的详请参数,同理批量获取商品信息的话就可以查看多个商品的信息参数,便于我们查看整个店铺的数据情况方便运营管理.具体操作如下:先获取一个key和secret,登入测 ...

- [Linux]常用命令之【mkdir/touch/cp/rm/ls/mv】

cp 将来源文件夹packageA下的所有目录及文件复制到新文件夹packageB下,形成: /packageB/... # cp -r /home/packageA/* /home/cp/packa ...

- HTTP.sys漏洞的检测和修复(附补丁包下载)

关于这个 HTTP.sys 漏洞,查了一些资料,没有一个写的比较全的,下面我来整理下. 这个漏洞主要存在Windows+IIS的环境下,任何安装了微软IIS 6.0以上的Windows Server ...

- python对图片进行最大边大小缩放

def split_image_bs4(file, max_len=720): """ 切割图片 :param file: 二进制文件 :param max_len: 最 ...