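live555 Development Notes (2): Source Walkthrough of Creating an RTSP Server with live555, and a Summary of the Basic Workflow

Preface

This post walks through the source code of a live555-based streaming media server to learn the basic steps for putting the code together.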

Demo

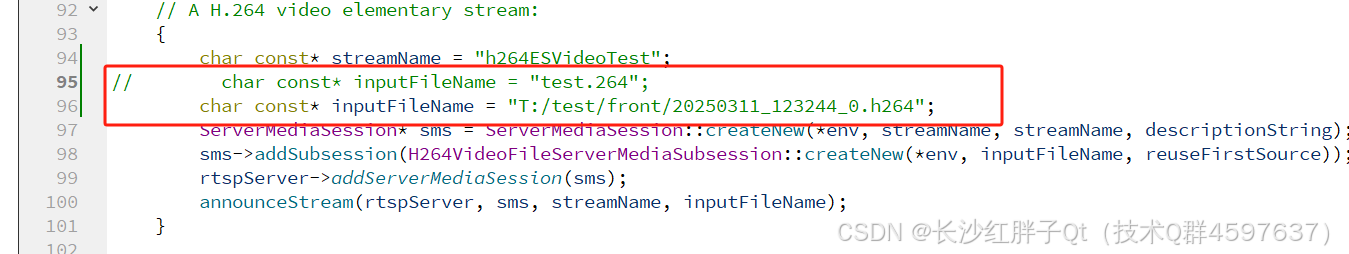

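About .h264 and .265

I have not looked into this in depth, but after changing the extension of an H.264 file from .264 to .h264, connecting to the streaming server and playing the RTSP stream still worked.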

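Reading the examples

This step is unavoidable when learning live555. live555 is a framework: we have to set up the service following its predefined flow and implement the predefined virtual functions ourselves. The documentation and other material say very little about this, so the practical approach is to work through the examples step by step.

Studying the testOnDemandRTSPServer.cpp example

Start from the main function; whenever a new class appears, jump into it, analyze it, and then come back. I recommend at least two or three passes so that the earlier and later parts of the analysis connect.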

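Global variables

// Usage environment (used for output and logging)

UsageEnvironment* env;

// To make the second and subsequent client for each stream reuse the same input stream as the first client (rather than playing the file from the start for each client), change the following "False" to "True":

Boolean reuseFirstSource = False;

// To stream *only* MPEG-1 or 2 video "I" frames (e.g., to reduce network bandwidth), change the following "False" to "True":

Boolean iFramesOnly = False;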

Code walkthrough

First create the task scheduler, then set up the usage environment (note: every live555 program needs this boilerplate):

TaskScheduler* scheduler = BasicTaskScheduler::createNew();

env = BasicUsageEnvironment::createNew(*scheduler);

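Next comes access control. If no access control is needed, leave the database pointer as NULL:

// Data structure for optional user/password authentication:

UserAuthenticationDatabase* authDB = NULL;

The following macro decides whether access control is enabled, and the block under it registers the allowed user names and passwords:

// Whether to control access to the server

//#define ACCESS_CONTROL // commented out, so the macro is undefined and access control is disabled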

#ifdef ACCESS_CONTROL

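// To implement client access control to the RTSP server, do the following:

authDB = new UserAuthenticationDatabase;

// Add a record for each <username>, <password> that is allowed to access the server

authDB->addUserRecord("username1", "password1");

// Repeat the above for each <username>, <password> that you wish to allow access to the server.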

#endif

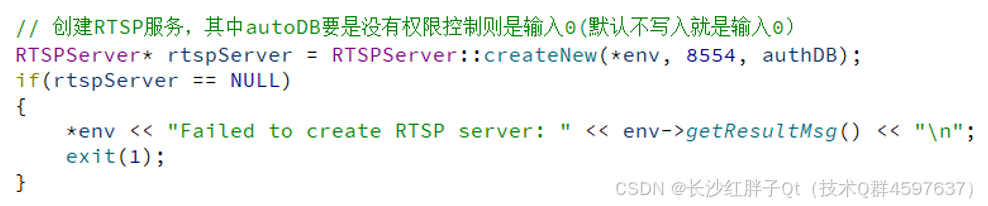

Create the RTSP server:

// Create the RTSP server. Without access control, authDB is NULL (which is also the default when the argument is omitted)

RTSPServer* rtspServer = RTSPServer::createNew(*env, 8554, authDB);

if (rtspServer == NULL)

{

*env << "Failed to create RTSP server: " << env->getResultMsg() << "\n";

exit(1);

}

Create the description string; this is optional:

// Description string for the sessions

char const* descriptionString = "Session streamed by \"testOnDemandRTSPServer\"";

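Next comes the key step: adding streams to the RTSP server. There are many kinds of streams. This chapter was meant to test mainly MP4 files, and I wanted to use MP4 for the explanation, but there is no MP4 entry available, so the H.264 entry is used instead:

// ===== Create the actual streams below; there are many of them, one per format =====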

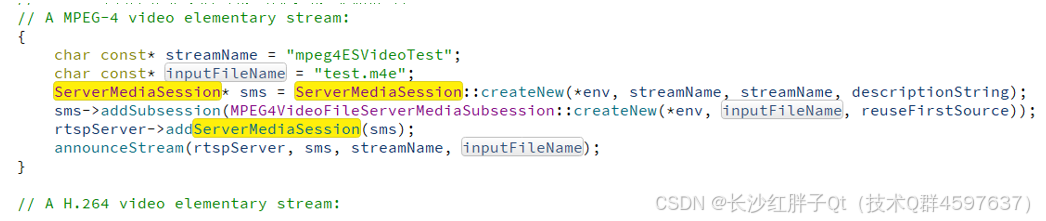

// A MPEG-4 video elementary stream:

{

char const* streamName = "mpeg4ESVideoTest";

char const* inputFileName = "test.m4e";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

sms->addSubsession(MPEG4VideoFileServerMediaSubsession::createNew(*env, inputFileName, reuseFirstSource));

rtspServer->addServerMediaSession(sms);

announceStream(rtspServer, sms, streamName, inputFileName);

}

// A H.264 video elementary stream:

{

char const* streamName = "h264ESVideoTest";

char const* inputFileName = "test.264";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

sms->addSubsession(H264VideoFileServerMediaSubsession::createNew(*env, inputFileName, reuseFirstSource));

rtspServer->addServerMediaSession(sms);

announceStream(rtspServer, sms, streamName, inputFileName);

}

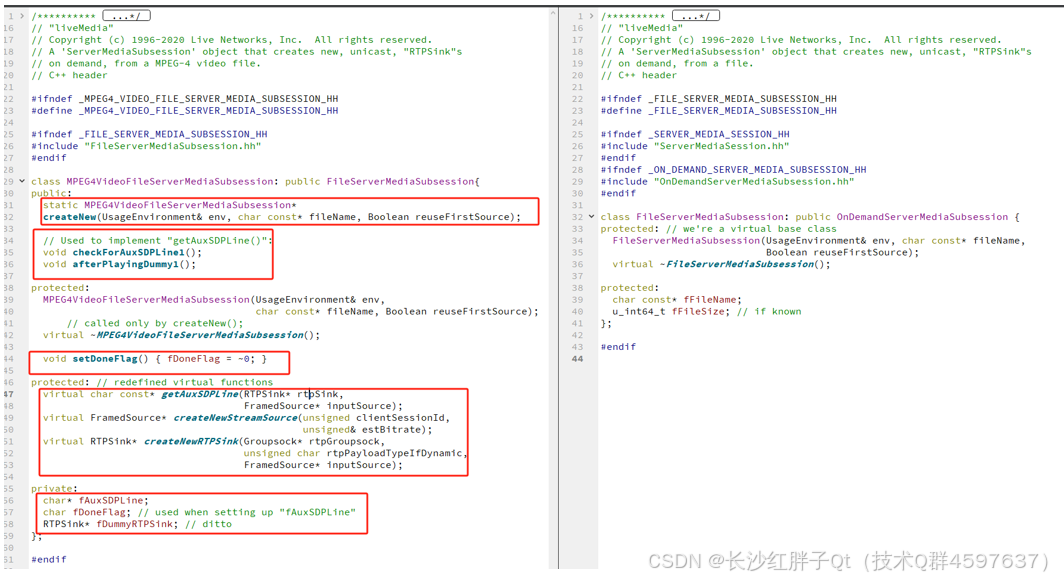

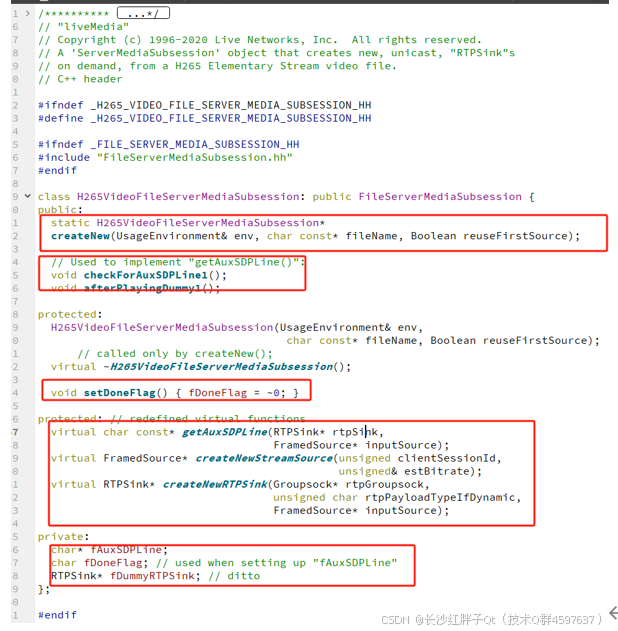

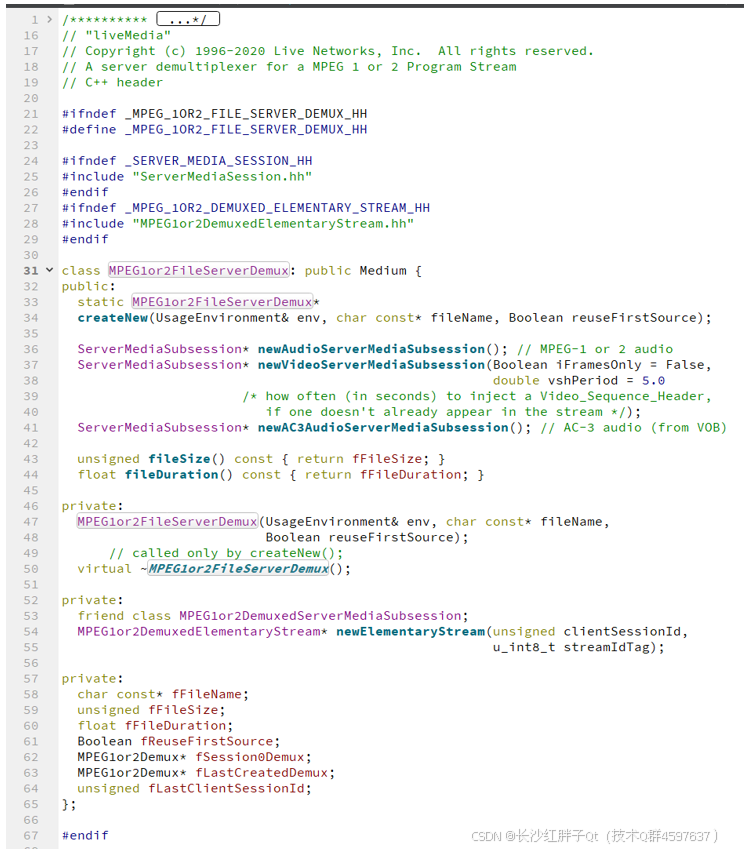

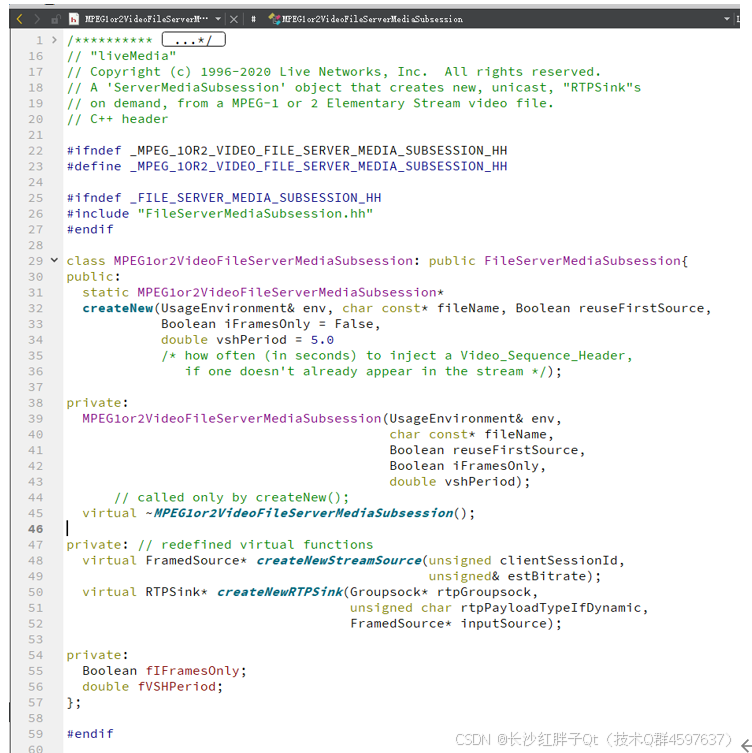

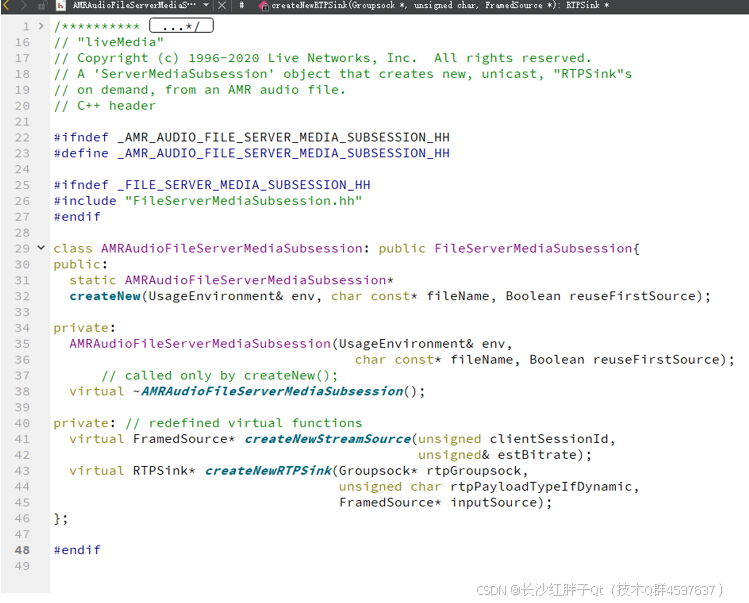

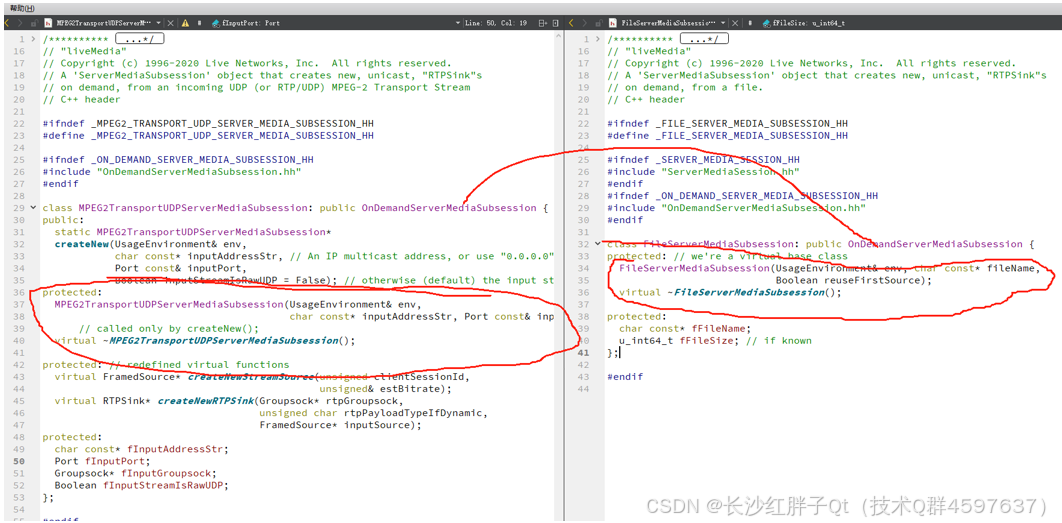

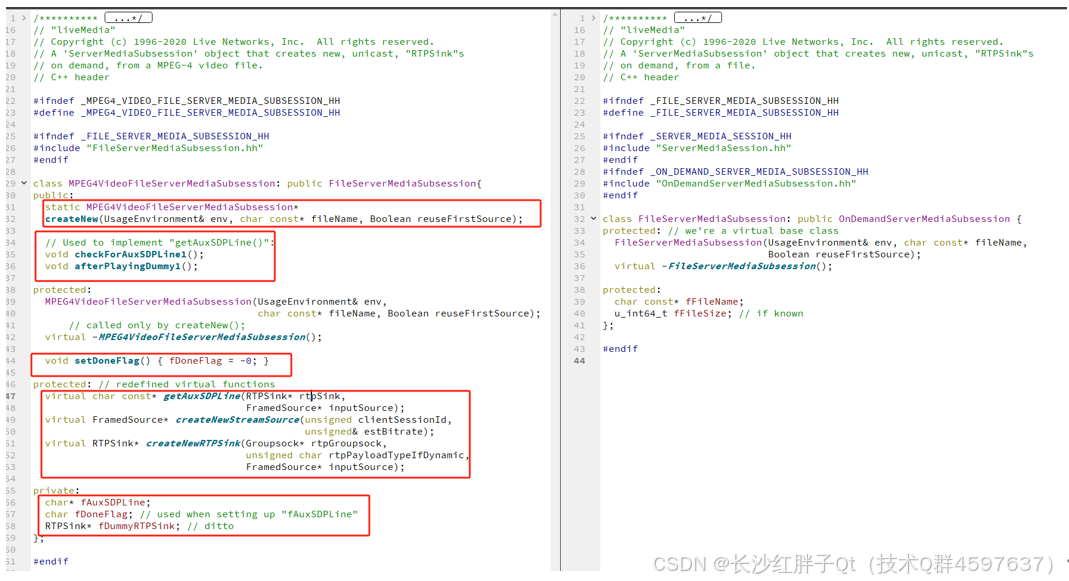

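The announceStream function only prints the stream name, the input file name, and the playback URL, so it can be ignored. The rest of the code shows how a stream is created and added to the server, and most of the later blocks follow the same pattern. The concrete file classes all inherit from a few main classes. I went through all of the subsession-related subclasses used in this code: to make the streaming server process our own data, we need to override these file subsessions. I captured screenshots of them, which are included in this section.

So the direct base classes come in three kinds (in live555, FileServerMediaSubsession itself derives from OnDemandServerMediaSubsession, which in turn derives from ServerMediaSubsession and ultimately from Medium):

• Inherits from: FileServerMediaSubsession

• Inherits from: OnDemandServerMediaSubsession

• Inherits from: Medium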

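The above amounts to setting parameters the way the framework expects, such as frame rate, resolution, audio stream data, and video stream data. What gets sent is the video stream data itself, so any encoding or decoding has to be handled by us.

Think it through: if a local file is served by the streaming server, then when a client requests the RTSP stream the server prepares its source and starts reading, and each time data is requested it produces one frame and sends it out. That is roughly the flow. (For the elementary-stream files in this demo, live555 parses and packetizes frames that are already encoded; it does not decode or re-encode them.)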
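To make the "one frame at a time" idea concrete, here is a minimal sketch of splitting an H.264 elementary stream into NAL units. It assumes the file uses the Annex B byte-stream format, where units are separated by `00 00 01` or `00 00 00 01` start codes. The names `splitAnnexB` and `nalType` are hypothetical helpers for illustration, not live555 functions, and the sketch ignores details such as trailing zero padding.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// One NAL unit, stored without its Annex B start code.
using NalUnit = std::vector<std::uint8_t>;

// Returns the length of the start code beginning at index i (3 or 4),
// or 0 if there is no start code at that position.
static std::size_t startCodeLength(const std::vector<std::uint8_t>& d, std::size_t i) {
    if (i + 3 <= d.size() && d[i] == 0 && d[i + 1] == 0 && d[i + 2] == 1) return 3;
    if (i + 4 <= d.size() && d[i] == 0 && d[i + 1] == 0 && d[i + 2] == 0 && d[i + 3] == 1) return 4;
    return 0;
}

// Splits an Annex B byte stream into NAL units (hypothetical helper).
std::vector<NalUnit> splitAnnexB(const std::vector<std::uint8_t>& data) {
    std::vector<NalUnit> units;
    std::size_t i = 0;
    // Skip any bytes that precede the first start code.
    while (i < data.size() && startCodeLength(data, i) == 0) ++i;
    while (i < data.size()) {
        i += startCodeLength(data, i);  // step over the start code
        const std::size_t begin = i;
        // Advance to the next start code or to the end of the data.
        while (i < data.size() && startCodeLength(data, i) == 0) ++i;
        if (i > begin) units.emplace_back(data.begin() + begin, data.begin() + i);
    }
    return units;
}

// For H.264, the NAL unit type is the low 5 bits of the first byte
// (for example 7 = SPS, 8 = PPS, 5 = IDR slice). Returns -1 for an empty unit.
int nalType(const NalUnit& n) { return n.empty() ? -1 : (n[0] & 0x1F); }
```

A server built this way would hand out one element of the returned vector each time the sending side asks for data, instead of reading the whole file at once.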

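Subsessions are not explored further here. Continuing with the rest of the code:

// Also attempt to create an HTTP server for RTSP-over-HTTP tunneling. Try first with the default HTTP port (80), and then with the alternative HTTP port numbers (8000 and 8080)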

if( rtspServer->setUpTunnelingOverHTTP(80)

|| rtspServer->setUpTunnelingOverHTTP(8000)

|| rtspServer->setUpTunnelingOverHTTP(8080))

{

// Print the port that was chosen

*env << "\n(We use port " << rtspServer->httpServerPortNum() << " for optional RTSP-over-HTTP tunneling.)\n";

}else{

*env << "\n(RTSP-over-HTTP tunneling is not available.)\n";

}

This sets up the HTTP port used for optional RTSP-over-HTTP tunneling.
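The chained `||` calls above rely on short-circuit evaluation: the next port is tried only if the previous attempt failed. A fallback is useful because binding to port 80 typically fails for non-privileged processes on Unix-like systems. The same pattern can be sketched as a small helper; `firstUsablePort` and the `tryBind` callback are hypothetical names that stand in for a call such as `setUpTunnelingOverHTTP(port)`.

```cpp
#include <functional>
#include <initializer_list>

// Tries each candidate port in order and returns the first one for which
// tryBind reports success, or 0 if every attempt fails.
// tryBind is a hypothetical stand-in for the real bind attempt.
unsigned firstUsablePort(std::initializer_list<unsigned> candidates,
                         const std::function<bool(unsigned)>& tryBind) {
    for (unsigned port : candidates) {
        if (tryBind(port)) return port;  // stop at the first success
    }
    return 0;
}
```

Called as `firstUsablePort({80, 8000, 8080}, tryBind)`, it stops calling `tryBind` as soon as one port succeeds, just like the `||` chain.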

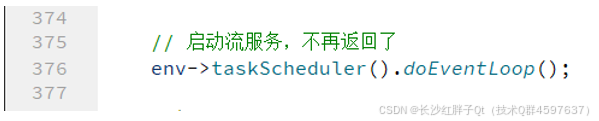

// Start the event loop; this call does not return

env->taskScheduler().doEventLoop();

At this point the program enters the RTSP server's event loop and blocks here.
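The full listing also calls `doEventLoop(&newDemuxWatchVariable)` to wait until a Matroska or Ogg demux has been created: the loop keeps running until the variable it watches becomes nonzero. The toy scheduler below is a simplified model of that idea only. `ToyScheduler` is a hypothetical class, and unlike a real server loop it returns when its task queue is empty instead of blocking on network events.

```cpp
#include <cstddef>
#include <functional>
#include <queue>
#include <utility>

// A toy single-threaded event loop with an optional watch variable.
class ToyScheduler {
public:
    void schedule(std::function<void()> task) { tasks_.push(std::move(task)); }

    // Runs queued tasks one at a time and returns how many were executed.
    // If watch is non-null, the loop stops as soon as *watch is nonzero.
    int run(volatile char* watch = nullptr) {
        int executed = 0;
        while (!tasks_.empty()) {
            if (watch != nullptr && *watch != 0) break;
            std::function<void()> task = std::move(tasks_.front());
            tasks_.pop();
            task();
            ++executed;
        }
        return executed;
    }

    std::size_t pending() const { return tasks_.size(); }

private:
    std::queue<std::function<void()>> tasks_;
};
```

A callback that sets the watch variable to 1, as `onMatroskaDemuxCreation` does in the listing, is what lets the caller regain control after such a loop.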

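Basic workflow for implementing a live555 streaming service

Step 1: Create the RTSP server

Create an RTSP server instance. ServerMediaSession instances are added to it afterwards.

Step 2: Add a ServerMediaSession instance

Create a ServerMediaSession instance, which represents one streaming session. The actual media streams within that session are created separately, as ServerMediaSubsession instances.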
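The relationship between the server, its sessions, and their subsessions can be modeled in a few lines. This is only a sketch with hypothetical stand-in types (`ToyServer`, `ToySession`, `ToySubsession`), not the live555 classes. The host address is made up, and the URL shape is an assumption based on what the demo prints for a server on port 8554.

```cpp
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-ins, not live555 classes.
struct ToySubsession {
    std::string mediaType;  // for example "video" or "audio"
};

struct ToySession {
    std::string streamName;
    std::vector<ToySubsession> subsessions;
    void addSubsession(ToySubsession s) { subsessions.push_back(std::move(s)); }
};

class ToyServer {
public:
    ToyServer(std::string host, unsigned port) : host_(std::move(host)), port_(port) {}

    // Registers a session under its stream name.
    void addSession(ToySession s) {
        std::string key = s.streamName;  // copy the key before moving the session
        sessions_[std::move(key)] = std::move(s);
    }

    // Returns the session for a stream name, or nullptr if it is unknown.
    const ToySession* lookup(const std::string& name) const {
        auto it = sessions_.find(name);
        return it == sessions_.end() ? nullptr : &it->second;
    }

    // Builds the URL a client would use to request the stream.
    std::string url(const std::string& streamName) const {
        return "rtsp://" + host_ + ":" + std::to_string(port_) + "/" + streamName;
    }

private:
    std::string host_;
    unsigned port_;
    std::map<std::string, ToySession> sessions_;
};
```

One session with a video and an audio subsession corresponds to the `mpeg1or2AudioVideoTest` entry in the demo, where a single stream name carries two tracks.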

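Step 3: Implement the ServerMediaSubsession instance (the key part)

A ServerMediaSubsession is the instance that serves a concrete stream. Its subclasses implement the stream's operation functions, which determine the parameters and actions used in response to the corresponding streaming commands.

They fall into three categories:

- Inherits from: FileServerMediaSubsession

- Inherits from: OnDemandServerMediaSubsession

- Inherits from: Medium

I have not yet worked out exactly which functions must be implemented, but the essence is to obtain the stream's raw data and feed it out.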
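As a rough model of what such a subclass does, the sketch below uses hypothetical stand-in classes (`ToyFrameSource`, `ToySubsessionBase`, `ToyFileSubsession`) rather than live555 types. To my understanding, the corresponding hooks on live555's `OnDemandServerMediaSubsession` are the virtual functions `createNewStreamSource()` and `createNewRTPSink()`; only the first is modeled here, together with the effect of the `reuseFirstSource` flag described earlier.

```cpp
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical frame source: hands out pre-split frames one at a time.
class ToyFrameSource {
public:
    explicit ToyFrameSource(std::vector<std::string> frames) : frames_(std::move(frames)) {}

    // Copies the next frame into out; returns false when none are left.
    bool nextFrame(std::string& out) {
        if (pos_ >= frames_.size()) return false;
        out = frames_[pos_++];
        return true;
    }

private:
    std::vector<std::string> frames_;
    std::size_t pos_ = 0;
};

// Hypothetical base class: decides whether a new client gets a fresh source.
class ToySubsessionBase {
public:
    explicit ToySubsessionBase(bool reuseFirstSource) : reuse_(reuseFirstSource) {}
    virtual ~ToySubsessionBase() = default;

    std::shared_ptr<ToyFrameSource> sourceForNewClient() {
        if (reuse_ && shared_) return shared_;  // later clients share the first source
        std::shared_ptr<ToyFrameSource> src = createNewStreamSource();
        if (reuse_) shared_ = src;
        return src;
    }

protected:
    // The hook a concrete subsession must implement.
    virtual std::shared_ptr<ToyFrameSource> createNewStreamSource() = 0;

private:
    bool reuse_;
    std::shared_ptr<ToyFrameSource> shared_;
};

// Hypothetical file-backed subsession: every new source starts at the first frame.
class ToyFileSubsession : public ToySubsessionBase {
public:
    ToyFileSubsession(std::vector<std::string> frames, bool reuseFirstSource)
        : ToySubsessionBase(reuseFirstSource), frames_(std::move(frames)) {}

protected:
    std::shared_ptr<ToyFrameSource> createNewStreamSource() override {
        return std::make_shared<ToyFrameSource>(frames_);
    }

private:
    std::vector<std::string> frames_;
};
```

With the flag set to false, each client plays from the start of the file. With it set to true, a second client joins wherever the first client's source currently is, which is usually what a live input needs.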

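Step 4: Set up the service port

Optionally set up an HTTP port for RTSP-over-HTTP tunneling. The RTSP server itself already listens on the port passed to RTSPServer::createNew (8554 in this example), which is the port clients connect to.

Step 5: Run the server

The server enters its event loop, and the current thread blocks here (in a single-threaded program this is the main thread, and therefore effectively the whole process).

That is the flow for setting up an RTSP server with live555. The core lies in the data handling inside the subsession: send out the raw stream data in the format of the stream being served, and the client can play it.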

Cleaned-up source code with comments

#include "liveMedia.hh"

#include "BasicUsageEnvironment.hh"

// Used for WebM and MKV files

static char newDemuxWatchVariable;

static MatroskaFileServerDemux* matroskaDemux;

static void onMatroskaDemuxCreation(MatroskaFileServerDemux* newDemux, void* /*clientData*/)

{

matroskaDemux = newDemux;

newDemuxWatchVariable = 1;

}

// Used for Ogg files

static OggFileServerDemux* oggDemux;

static void onOggDemuxCreation(OggFileServerDemux* newDemux, void* /*clientData*/)

{

oggDemux = newDemux;

newDemuxWatchVariable = 1;

}

// Print information about a stream

static void announceStream(RTSPServer* rtspServer,

ServerMediaSession* sms,

char const* streamName,

char const* inputFileName)

{

// Get the RTSP URL for this session

char* url = rtspServer->rtspURL(sms);

// Get the usage environment

UsageEnvironment& env = rtspServer->envir();

// Print the stream name, the input file name, and the playback URL

env << "\n\"" << streamName << "\" stream, from the file \"" << inputFileName << "\"\n";

env << "Play this stream using the URL \"" << url << "\"\n";

delete[] url;

}

int main(int argc, char** argv)

{

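// Usage environment (used for output and logging)

UsageEnvironment* env;

// To make the second and subsequent client for each stream reuse the same input stream as the first client (rather than playing the file from the start for each client), change the following "False" to "True":

Boolean reuseFirstSource = False;

// To stream *only* MPEG-1 or 2 video "I" frames (e.g., to reduce network bandwidth), change the following "False" to "True":

Boolean iFramesOnly = False;

// Create the task scheduler and set up the usage environment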

TaskScheduler* scheduler = BasicTaskScheduler::createNew();

env = BasicUsageEnvironment::createNew(*scheduler);

// Data structure for optional user/password authentication:

UserAuthenticationDatabase* authDB = NULL;

// Whether to control access to the server

//#define ACCESS_CONTROL

#ifdef ACCESS_CONTROL

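// To implement client access control to the RTSP server, do the following:

authDB = new UserAuthenticationDatabase;

// Add a record for each <username>, <password> that is allowed to access the server

authDB->addUserRecord("username1", "password1");

// Repeat the above for each <username>, <password> that you wish to allow access to the server.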

#endif

// Create the RTSP server. Without access control, authDB is NULL (which is also the default when the argument is omitted)

RTSPServer* rtspServer = RTSPServer::createNew(*env, 8554, authDB);

if(rtspServer == NULL)

{

*env << "Failed to create RTSP server: " << env->getResultMsg() << "\n";

exit(1);

}

// Description string for the sessions

char const* descriptionString = "Session streamed by \"testOnDemandRTSPServer\"";

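// Set up each of the possible streams that can be served by the RTSP server.

// Each such stream is implemented using a "ServerMediaSession" object,

// plus one or more "ServerMediaSubsession" objects for each audio/video substream.

// ===== Create the actual streams below; there are many of them, one per format =====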

// A MPEG-4 video elementary stream:

{

char const* streamName = "mpeg4ESVideoTest";

char const* inputFileName = "test.m4e";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

sms->addSubsession(MPEG4VideoFileServerMediaSubsession::createNew(*env, inputFileName, reuseFirstSource));

rtspServer->addServerMediaSession(sms);

announceStream(rtspServer, sms, streamName, inputFileName);

}

// A H.264 video elementary stream:

{

char const* streamName = "h264ESVideoTest";

char const* inputFileName = "test.264";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

sms->addSubsession(H264VideoFileServerMediaSubsession::createNew(*env, inputFileName, reuseFirstSource));

rtspServer->addServerMediaSession(sms);

announceStream(rtspServer, sms, streamName, inputFileName);

}

// A H.265 video elementary stream:

{

char const* streamName = "h265ESVideoTest";

char const* inputFileName = "test.265";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

sms->addSubsession(H265VideoFileServerMediaSubsession::createNew(*env, inputFileName, reuseFirstSource));

rtspServer->addServerMediaSession(sms);

announceStream(rtspServer, sms, streamName, inputFileName);

}

// A MPEG-1 or 2 audio+video program stream:

{

char const* streamName = "mpeg1or2AudioVideoTest";

char const* inputFileName = "test.mpg";

// NOTE: This *must* be a Program Stream; not an Elementary Stream

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

MPEG1or2FileServerDemux* demux = MPEG1or2FileServerDemux::createNew(*env, inputFileName, reuseFirstSource);

sms->addSubsession(demux->newVideoServerMediaSubsession(iFramesOnly));

sms->addSubsession(demux->newAudioServerMediaSubsession());

rtspServer->addServerMediaSession(sms);

announceStream(rtspServer, sms, streamName, inputFileName);

}

// A MPEG-1 or 2 video elementary stream:

{

char const* streamName = "mpeg1or2ESVideoTest";

char const* inputFileName = "testv.mpg";

// NOTE: This *must* be a Video Elementary Stream; not a Program Stream

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

sms->addSubsession(MPEG1or2VideoFileServerMediaSubsession::createNew(*env, inputFileName, reuseFirstSource, iFramesOnly));

rtspServer->addServerMediaSession(sms);

announceStream(rtspServer, sms, streamName, inputFileName);

}

// A MP3 audio stream (actually, any MPEG-1 or 2 audio file will work):

// To stream using 'ADUs' rather than raw MP3 frames, uncomment the following:

//#define STREAM_USING_ADUS 1

// To also reorder ADUs before streaming, uncomment the following:

//#define INTERLEAVE_ADUS 1

// (For more information about ADUs and interleaving,

// see <http://www.live555.com/rtp-mp3/>)

{

char const* streamName = "mp3AudioTest";

char const* inputFileName = "test.mp3";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

Boolean useADUs = False;

Interleaving* interleaving = NULL;

#ifdef STREAM_USING_ADUS

useADUs = True;

#ifdef INTERLEAVE_ADUS

unsigned char interleaveCycle[] = {0,2,1,3}; // or choose your own...

unsigned const interleaveCycleSize = (sizeof interleaveCycle)/(sizeof (unsigned char));

interleaving = new Interleaving(interleaveCycleSize, interleaveCycle);

#endif

#endif

sms->addSubsession(MP3AudioFileServerMediaSubsession::createNew(*env, inputFileName, reuseFirstSource, useADUs, interleaving));

rtspServer->addServerMediaSession(sms);

announceStream(rtspServer, sms, streamName, inputFileName);

}

// A WAV audio stream:

{

char const* streamName = "wavAudioTest";

char const* inputFileName = "test.wav";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

// To convert 16-bit PCM data to 8-bit u-law, prior to streaming,

// change the following to True:

Boolean convertToULaw = False;

sms->addSubsession(WAVAudioFileServerMediaSubsession::createNew(*env, inputFileName, reuseFirstSource, convertToULaw));

rtspServer->addServerMediaSession(sms);

announceStream(rtspServer, sms, streamName, inputFileName);

}

// An AMR audio stream:

{

char const* streamName = "amrAudioTest";

char const* inputFileName = "test.amr";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

sms->addSubsession(AMRAudioFileServerMediaSubsession::createNew(*env, inputFileName, reuseFirstSource));

rtspServer->addServerMediaSession(sms);

announceStream(rtspServer, sms, streamName, inputFileName);

}

// A 'VOB' file (e.g., from an unencrypted DVD):

{

char const* streamName = "vobTest";

char const* inputFileName = "test.vob";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

// Note: VOB files are MPEG-2 Program Stream files, but using AC-3 audio

MPEG1or2FileServerDemux* demux = MPEG1or2FileServerDemux::createNew(*env, inputFileName, reuseFirstSource);

sms->addSubsession(demux->newVideoServerMediaSubsession(iFramesOnly));

sms->addSubsession(demux->newAC3AudioServerMediaSubsession());

rtspServer->addServerMediaSession(sms);

announceStream(rtspServer, sms, streamName, inputFileName);

}

// A MPEG-2 Transport Stream:

{

char const* streamName = "mpeg2TransportStreamTest";

char const* inputFileName = "test.ts";

char const* indexFileName = "test.tsx";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

sms->addSubsession(MPEG2TransportFileServerMediaSubsession::createNew(*env, inputFileName, indexFileName, reuseFirstSource));

rtspServer->addServerMediaSession(sms);

announceStream(rtspServer, sms, streamName, inputFileName);

}

// An AAC audio stream (ADTS-format file):

{

char const* streamName = "aacAudioTest";

char const* inputFileName = "test.aac";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

sms->addSubsession(ADTSAudioFileServerMediaSubsession::createNew(*env, inputFileName, reuseFirstSource));

rtspServer->addServerMediaSession(sms);

announceStream(rtspServer, sms, streamName, inputFileName);

}

// A DV video stream:

{

// First, make sure that the RTPSinks' buffers will be large enough to handle the huge size of DV frames (as big as 288000).

OutPacketBuffer::maxSize = 300000;

char const* streamName = "dvVideoTest";

char const* inputFileName = "test.dv";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

sms->addSubsession(DVVideoFileServerMediaSubsession::createNew(*env, inputFileName, reuseFirstSource));

rtspServer->addServerMediaSession(sms);

announceStream(rtspServer, sms, streamName, inputFileName);

}

// An AC-3 audio elementary stream:

{

char const* streamName = "ac3AudioTest";

char const* inputFileName = "test.ac3";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

sms->addSubsession(AC3AudioFileServerMediaSubsession::createNew(*env, inputFileName, reuseFirstSource));

rtspServer->addServerMediaSession(sms);

announceStream(rtspServer, sms, streamName, inputFileName);

}

// A Matroska ('.mkv') file, with video+audio+subtitle streams:

{

char const* streamName = "matroskaFileTest";

char const* inputFileName = "test.mkv";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

newDemuxWatchVariable = 0;

MatroskaFileServerDemux::createNew(*env, inputFileName, onMatroskaDemuxCreation, NULL);

env->taskScheduler().doEventLoop(&newDemuxWatchVariable);

Boolean sessionHasTracks = False;

ServerMediaSubsession* smss;

while ((smss = matroskaDemux->newServerMediaSubsession()) != NULL)

{

sms->addSubsession(smss);

sessionHasTracks = True;

}

if (sessionHasTracks)

{

rtspServer->addServerMediaSession(sms);

}

// otherwise, because the stream has no tracks, we don't add a ServerMediaSession to the server.

announceStream(rtspServer, sms, streamName, inputFileName);

}

// A WebM ('.webm') file, with video(VP8)+audio(Vorbis) streams:

// (Note: ".webm' files are special types of Matroska files, so we use the same code as the Matroska ('.mkv') file code above.)

{

char const* streamName = "webmFileTest";

char const* inputFileName = "test.webm";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

newDemuxWatchVariable = 0;

MatroskaFileServerDemux::createNew(*env, inputFileName, onMatroskaDemuxCreation, NULL);

env->taskScheduler().doEventLoop(&newDemuxWatchVariable);

Boolean sessionHasTracks = False;

ServerMediaSubsession* smss;

while((smss = matroskaDemux->newServerMediaSubsession()) != NULL)

{

sms->addSubsession(smss);

sessionHasTracks = True;

}

if(sessionHasTracks)

{

rtspServer->addServerMediaSession(sms);

}

// otherwise, because the stream has no tracks, we don't add a ServerMediaSession to the server.

announceStream(rtspServer, sms, streamName, inputFileName);

}

// An Ogg ('.ogg') file, with video and/or audio streams:

{

char const* streamName = "oggFileTest";

char const* inputFileName = "test.ogg";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

newDemuxWatchVariable = 0;

OggFileServerDemux::createNew(*env, inputFileName, onOggDemuxCreation, NULL);

env->taskScheduler().doEventLoop(&newDemuxWatchVariable);

Boolean sessionHasTracks = False;

ServerMediaSubsession* smss;

while ((smss = oggDemux->newServerMediaSubsession()) != NULL)

{

sms->addSubsession(smss);

sessionHasTracks = True;

}

if (sessionHasTracks)

{

rtspServer->addServerMediaSession(sms);

}

// otherwise, because the stream has no tracks, we don't add a ServerMediaSession to the server.

announceStream(rtspServer, sms, streamName, inputFileName);

}

// An Opus ('.opus') audio file:

// (Note: ".opus' files are special types of Ogg files, so we use the same code as the Ogg ('.ogg') file code above.)

{

char const* streamName = "opusFileTest";

char const* inputFileName = "test.opus";

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

newDemuxWatchVariable = 0;

OggFileServerDemux::createNew(*env, inputFileName, onOggDemuxCreation, NULL);

env->taskScheduler().doEventLoop(&newDemuxWatchVariable);

Boolean sessionHasTracks = False;

ServerMediaSubsession* smss;

while((smss = oggDemux->newServerMediaSubsession()) != NULL)

{

sms->addSubsession(smss);

sessionHasTracks = True;

}

if(sessionHasTracks)

{

rtspServer->addServerMediaSession(sms);

}

// otherwise, because the stream has no tracks, we don't add a ServerMediaSession to the server.

announceStream(rtspServer, sms, streamName, inputFileName);

}

// A MPEG-2 Transport Stream, coming from a live UDP (raw-UDP or RTP/UDP) source:

{

char const* streamName = "mpeg2TransportStreamFromUDPSourceTest";

char const* inputAddressStr = "239.255.42.42";

// This causes the server to take its input from the stream sent by the "testMPEG2TransportStreamer" demo application.

// (Note: If the input UDP source is unicast rather than multicast, then change this to NULL.)

portNumBits const inputPortNum = 1234;

// This causes the server to take its input from the stream sent by the "testMPEG2TransportStreamer" demo application.

Boolean const inputStreamIsRawUDP = False;

ServerMediaSession* sms = ServerMediaSession::createNew(*env, streamName, streamName, descriptionString);

sms->addSubsession(MPEG2TransportUDPServerMediaSubsession::createNew(*env, inputAddressStr, inputPortNum, inputStreamIsRawUDP));

rtspServer->addServerMediaSession(sms);

char* url = rtspServer->rtspURL(sms);

*env << "\n\"" << streamName << "\" stream, from a UDP Transport Stream input source \n\t(";

if (inputAddressStr != NULL)

{

*env << "IP multicast address " << inputAddressStr << ",";

} else {

*env << "unicast;";

}

*env << " port " << inputPortNum << ")\n";

*env << "Play this stream using the URL \"" << url << "\"\n";

delete[] url;

}

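// Also attempt to create an HTTP server for RTSP-over-HTTP tunneling. Try first with the default HTTP port (80), and then with the alternative HTTP port numbers (8000 and 8080)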

if( rtspServer->setUpTunnelingOverHTTP(80)

|| rtspServer->setUpTunnelingOverHTTP(8000)

|| rtspServer->setUpTunnelingOverHTTP(8080))

{

// Print the port that was chosen

*env << "\n(We use port " << rtspServer->httpServerPortNum() << " for optional RTSP-over-HTTP tunneling.)\n";

}else{

*env << "\n(RTSP-over-HTTP tunneling is not available.)\n";

}

// Start the event loop; this call does not return

env->taskScheduler().doEventLoop();

return 0;

}

Project template v1.1.0
