Container Orchestration System K8s: The APIService Resource

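In the previous post we covered how to use CRD resources on k8s; for a refresher see https://www.cnblogs.com/qiuhom-1874/p/14267400.html. Today we look at k8s's second extension mechanism, the custom apiserver, and at the related APIService resource.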

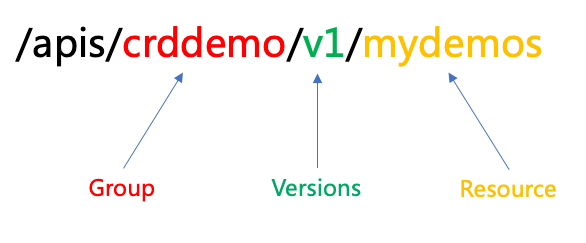

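Before getting into custom apiservers, let's first look at the native k8s apiserver. The apiserver is essentially an HTTPS server. With the kubectl tool we send HTTPS requests to it to create, delete, view and otherwise manage resources. Each operation maps to a RESTful HTTP method, and each resource maps to a URL path. For example, to create a pod, kubectl submits the resource definition to the apiserver with the POST method. The pod itself is addressed as a named pod in a given namespace, under a given version of a given API group.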
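Here is a minimal Python sketch of that correspondence. It was written for illustration and is not taken from kubectl: it is a lookup table from the standard Kubernetes API verbs to the HTTP methods they are sent with. Note that watch is not a separate method; it is a get or list request carrying the watch=true query parameter.

```python
# Illustrative lookup table (not taken from kubectl's source): the standard
# Kubernetes API verbs and the HTTP method each one is sent with.
VERB_TO_METHOD = {
    "create": "POST",
    "get": "GET",
    "list": "GET",
    "watch": "GET",          # a get/list request with ?watch=true
    "update": "PUT",
    "patch": "PATCH",
    "delete": "DELETE",
    "deletecollection": "DELETE",
}


def http_method(verb: str) -> str:
    """Return the HTTP method used for a Kubernetes API verb."""
    try:
        return VERB_TO_METHOD[verb.lower()]
    except KeyError:
        raise ValueError(f"unknown verb: {verb!r}") from None


# Creating a pod with `kubectl create -f pod.yaml` is a POST to the pods collection.
print(http_method("create"))  # POST
```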

How the apiserver organizes resources

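Tip: clients address resources on the apiserver following the layout in the figure above. For example, a pod named mypod in the default namespace lives at /api/v1/namespaces/default/pods/mypod. Pods belong to the core (legacy) group, whose paths start with /api/v1. Resources in named groups live under /apis/<group>/<version>/namespaces/<namespace>/<resource>/<name>. The resource part of the path therefore includes the namespace as well as the resource type.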
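The path rule can be expressed in a few lines of Python. This is a minimal sketch: resource_path is a hypothetical helper. It covers only the two prefixes (/api for the core group, /apis/<group> for named groups), a namespace segment and an optional object name. Subresources such as pods/log and query parameters are left out.

```python
from typing import Optional


def resource_path(group: str, version: str, resource: str,
                  namespace: Optional[str] = None,
                  name: Optional[str] = None) -> str:
    """Build the REST path of a resource (hypothetical helper, no subresources)."""
    # The core (legacy) group has an empty name and is served under /api;
    # every named group is served under /apis/<group>.
    if group == "":
        parts = ["/api", version]
    else:
        parts = ["/apis", group, version]
    if namespace is not None:
        parts += ["namespaces", namespace]   # only for namespaced resources
    parts.append(resource)                    # plural resource type, e.g. "pods"
    if name is not None:
        parts.append(name)
    return "/".join(parts)


print(resource_path("", "v1", "pods", "default", "mypod"))
# /api/v1/namespaces/default/pods/mypod
```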

Components of the native k8s apiserver

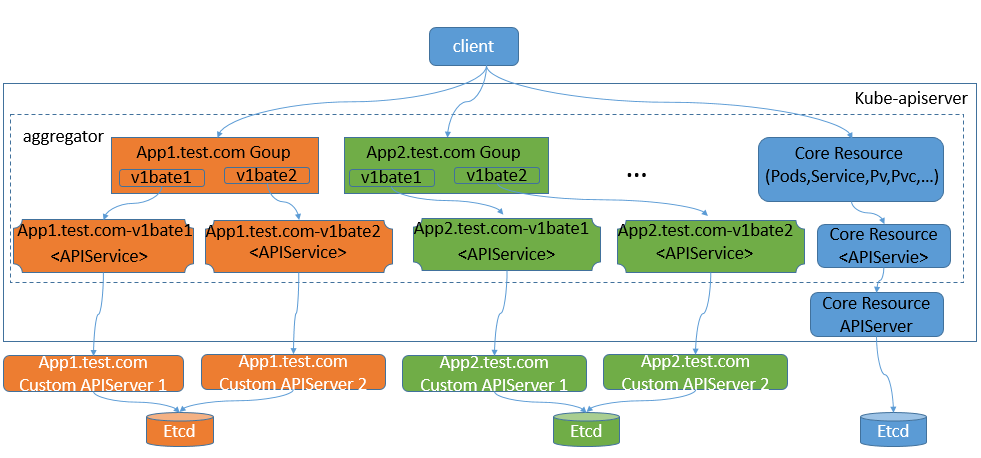

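The native k8s apiserver is made up mainly of two components:

- the aggregator, which works much like a web proxy;
- the actual apiserver.

A user request first reaches the aggregator, which routes it to an apiserver according to the resource being requested. In short, the aggregator's main job is request routing. By default it routes every request to the native apiserver. (Strictly speaking, CRDs are served by a third built-in component, the apiextensions-apiserver, which does not matter for this discussion.)

To add a custom apiserver, we create an APIService resource on the default aggregator. This registers the custom apiserver with the native apiserver, so that the matching user requests are routed to the custom apiserver. This is shown in the figure below: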

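Tip: the apiserver is the only entry point into k8s, and by default every client operation is sent to it. For clients to reach a custom apiserver, the aggregator inside the built-in apiserver must hold routing information that forwards requests for the relevant path to that apiserver. This routing information is defined by the built-in k8s APIService resource. In short, an APIService resource defines a route on the aggregator of the native apiserver: requests for a given endpoint (an API group/version) are routed to the corresponding apiserver.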
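A toy Python model of the aggregator's lookup makes the routing idea concrete. It is a sketch under stated assumptions:

- ROUTES and route are hypothetical names.
- "Local" stands for the built-in apiserver. This is the same word kubectl get apiservice prints in its SERVICE column for built-in groups.
- The real kube-aggregator also serves discovery, proxies over TLS and tracks availability. None of that is modeled.

```python
from typing import Dict, Optional, Tuple

# Hypothetical routing table: (group, version) -> backend. "Local" stands for
# the built-in apiserver; anything else is a "namespace/name" Service reference.
ROUTES: Dict[Tuple[str, str], str] = {
    ("", "v1"): "Local",
    ("apps", "v1"): "Local",
    ("metrics.k8s.io", "v1beta1"): "kube-system/metrics-server",
}


def route(path: str, routes: Dict[Tuple[str, str], str] = ROUTES) -> Optional[str]:
    """Return the backend for a resource path, or None if no entry matches."""
    segments = [s for s in path.split("/") if s]
    if len(segments) >= 2 and segments[0] == "api":
        key = ("", segments[1])                 # /api/<version>/...
    elif len(segments) >= 3 and segments[0] == "apis":
        key = (segments[1], segments[2])       # /apis/<group>/<version>/...
    else:
        return None                            # discovery roots etc. are not modeled
    return routes.get(key)


print(route("/apis/metrics.k8s.io/v1beta1/nodes"))  # kube-system/metrics-server
```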

Viewing the group/version information on the native apiserver

[root@master01 ~]# kubectl api-versions

admissionregistration.k8s.io/v1

admissionregistration.k8s.io/v1beta1

apiextensions.k8s.io/v1

apiextensions.k8s.io/v1beta1

apiregistration.k8s.io/v1

apiregistration.k8s.io/v1beta1

apps/v1

authentication.k8s.io/v1

authentication.k8s.io/v1beta1

authorization.k8s.io/v1

authorization.k8s.io/v1beta1

autoscaling/v1

autoscaling/v2beta1

autoscaling/v2beta2

batch/v1

batch/v1beta1

certificates.k8s.io/v1

certificates.k8s.io/v1beta1

coordination.k8s.io/v1

coordination.k8s.io/v1beta1

crd.projectcalico.org/v1

discovery.k8s.io/v1beta1

events.k8s.io/v1

events.k8s.io/v1beta1

extensions/v1beta1

flowcontrol.apiserver.k8s.io/v1beta1

mongodb.com/v1

networking.k8s.io/v1

networking.k8s.io/v1beta1

node.k8s.io/v1

node.k8s.io/v1beta1

policy/v1beta1

rbac.authorization.k8s.io/v1

rbac.authorization.k8s.io/v1beta1

scheduling.k8s.io/v1

scheduling.k8s.io/v1beta1

stable.example.com/v1

storage.k8s.io/v1

storage.k8s.io/v1beta1

v1

[root@master01 ~]#

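Tip: only the group/versions listed above can be accessed by clients (the bare v1 entry is the core group). In other words, clients can only access resources in these group/versions; a group/version that does not appear in the list cannot be reached.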
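kubectl api-versions prints one group/version per line, with the core group shown as the bare v1, so the list is easy to check programmatically. This is a minimal Python sketch. parse_api_versions and is_served are hypothetical helpers, and the sample string is an excerpt of the output above.

```python
from typing import Dict, Set


def parse_api_versions(output: str) -> Dict[str, Set[str]]:
    """Group the lines printed by `kubectl api-versions` by API group."""
    served: Dict[str, Set[str]] = {}
    for line in output.splitlines():
        line = line.strip()
        if not line:
            continue                      # skip blank lines
        # "apps/v1" -> ("apps", "v1"); the bare "v1" is the core group "".
        group, _, version = line.rpartition("/")
        served.setdefault(group, set()).add(version)
    return served


def is_served(served: Dict[str, Set[str]], group_version: str) -> bool:
    """True if `group_version` (e.g. "apps/v1" or "v1") appears in the list."""
    group, _, version = group_version.rpartition("/")
    return version in served.get(group, set())


sample = "apps/v1\nautoscaling/v1\nautoscaling/v2beta2\nv1\n"
served = parse_api_versions(sample)
print(sorted(served["autoscaling"]))                # ['v1', 'v2beta2']
print(is_served(served, "metrics.k8s.io/v1beta1"))  # False
```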

Using the APIService resource

Example: creating an APIService resource

[root@master01 ~]# cat apiservice-demo.yaml
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  name: v2beta1.auth.ilinux.io
spec:
  insecureSkipTLSVerify: true
  group: auth.ilinux.io
  groupPriorityMinimum: 1000
  versionPriority: 15
  service:
    name: auth-api
    namespace: default
  version: v2beta1
[root@master01 ~]#

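Tip: the APIService resource belongs to the apiregistration.k8s.io/v1 group, and its kind is APIService. The fields used above are:

- spec.insecureSkipTLSVerify controls whether the aggregator skips verifying the backend apiserver's HTTPS serving certificate. true means it is not verified; false means it is, in which case spec.caBundle can supply the CA to verify against.
- group names the API group served by the custom apiserver.
- groupPriorityMinimum sets the priority of that group.
- versionPriority sets the priority of this version within the group. For both priority fields, a higher number is preferred.
- service names the Service that requests for this group/version are routed to, i.e. the Service in front of the custom apiserver.
- version is the version of the group that this apiserver serves.

Note that metadata.name must take the form <version>.<group>, here v2beta1.auth.ilinux.io.

The manifest above registers the endpoint auth.ilinux.io/v2beta1 on the aggregator, with the auth-api Service in the default namespace as its backend. Any client request for resources under auth.ilinux.io/v2beta1 is routed to that Service.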
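The two priority fields decide the order in which group/versions are advertised to clients. The APIService API reference describes the ordering as follows:

- Groups are sorted by groupPriorityMinimum, highest first. A group effectively takes the highest value that any of its versions declares.
- Versions within a group are sorted by versionPriority, highest first.

The Python sketch below models that ordering plus the <version>.<group> naming rule. APIServiceSpec and preferred_order are hypothetical names. Tie-breaking is simplified to plain string order, whereas the real apiserver ranks "kube-like" version strings (v1 before v1beta1 before v1alpha1) specially.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class APIServiceSpec:
    group: str
    version: str
    group_priority_minimum: int
    version_priority: int

    @property
    def expected_name(self) -> str:
        # metadata.name of an APIService must be "<version>.<group>".
        return f"{self.version}.{self.group}"


def preferred_order(specs: List[APIServiceSpec]) -> List[str]:
    """Order group/versions by priority (simplified tie-breaking)."""
    # A group's effective priority is the highest groupPriorityMinimum
    # declared by any of its versions.
    group_prio: Dict[str, int] = {}
    for s in specs:
        group_prio[s.group] = max(group_prio.get(s.group, s.group_priority_minimum),
                                  s.group_priority_minimum)
    ordered = sorted(
        specs,
        # Higher numbers first; ties fall back to plain string order.
        key=lambda s: (-group_prio[s.group], s.group, -s.version_priority, s.version),
    )
    return [f"{s.group}/{s.version}" for s in ordered]


demo = APIServiceSpec("auth.ilinux.io", "v2beta1", 1000, 15)     # this post's demo
metrics = APIServiceSpec("metrics.k8s.io", "v1beta1", 100, 100)  # metrics-server
print(demo.expected_name)                # v2beta1.auth.ilinux.io
print(preferred_order([metrics, demo]))  # ['auth.ilinux.io/v2beta1', 'metrics.k8s.io/v1beta1']
```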

Apply the manifest

[root@master01 ~]# kubectl apply -f apiservice-demo.yaml

apiservice.apiregistration.k8s.io/v2beta1.auth.ilinux.io created

[root@master01 ~]# kubectl get apiservice |grep auth.ilinux.io

v2beta1.auth.ilinux.io default/auth-api False (ServiceNotFound) 16s

[root@master01 ~]# kubectl api-versions |grep auth.ilinux.io

auth.ilinux.io/v2beta1

[root@master01 ~]#

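Tip: once the manifest is applied, the new endpoint appears in the api-versions output, even though kubectl get apiservice reports it as unavailable (False, ServiceNotFound).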

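The manifest above only demonstrates how the APIService resource is used; creating it has no practical effect. The default namespace contains neither the auth-api Service nor a custom apiserver behind it. In practice, the APIService resource is what integrates a custom apiserver into the native apiserver. Next we deploy a real custom apiserver.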
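Whether an APIService actually has a working backend is recorded in its Available condition. That condition is what the AVAILABLE column of kubectl get apiservice shows, e.g. False (ServiceNotFound) for the demo above.

This minimal Python sketch lists unavailable APIServices from kubectl get apiservice -o json output. unavailable_apiservices is a hypothetical helper. The sample JSON is hand-written to mirror the two APIServices in this post and was not captured from the cluster.

```python
import json
from typing import Dict


def unavailable_apiservices(kubectl_json: str) -> Dict[str, str]:
    """Map APIService name -> reason for entries whose Available condition is not True.

    Expects the output of `kubectl get apiservice -o json` (an APIServiceList).
    """
    result: Dict[str, str] = {}
    for item in json.loads(kubectl_json).get("items", []):
        name = item["metadata"]["name"]
        conditions = item.get("status", {}).get("conditions", [])
        available = next((c for c in conditions if c.get("type") == "Available"), None)
        if available is None:
            result[name] = "NoAvailableCondition"   # hypothetical label for this sketch
        elif available.get("status") != "True":
            result[name] = available.get("reason", "")
    return result


# Hand-written sample mirroring this post; not real cluster output.
sample = json.dumps({
    "kind": "APIServiceList",
    "items": [
        {"metadata": {"name": "v1beta1.metrics.k8s.io"},
         "status": {"conditions": [{"type": "Available", "status": "True",
                                    "reason": "Passed"}]}},
        {"metadata": {"name": "v2beta1.auth.ilinux.io"},
         "status": {"conditions": [{"type": "Available", "status": "False",
                                    "reason": "ServiceNotFound"}]}},
    ],
})
print(unavailable_apiservices(sample))  # {'v2beta1.auth.ilinux.io': 'ServiceNotFound'}
```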

Deploying metrics-server

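metrics-server is a third-party apiserver that extends k8s. It collects resource metrics (CPU and memory usage) for pods and nodes from the kubelets, and exposes them through an API that the kubectl top command queries: the Metrics API, group metrics.k8s.io.

By default kubectl top does not work, because the default apiserver offers no interface serving these core metrics for pods or nodes. kubectl top shows the CPU and memory usage of pods and nodes (for nodes also as a percentage), and it only works when the Metrics API is available. Its help text mentions storage, but the Metrics API reports only CPU and memory.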
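The Metrics API is an ordinary group/version served through the aggregator, so kubectl top ultimately reads paths like the ones below. This is a minimal sketch, assuming the v1beta1 version that this metrics-server release registers; metrics_path is a hypothetical helper.

Any of these paths can also be fetched directly with kubectl get --raw, e.g. kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes. Once metrics-server is running, that returns the raw NodeMetricsList JSON. Before it is deployed, the request fails, because nothing serves metrics.k8s.io.

```python
from typing import Optional

METRICS_PREFIX = "/apis/metrics.k8s.io/v1beta1"


def metrics_path(resource: str, namespace: Optional[str] = None) -> str:
    """Path of the Metrics API collection that kubectl top reads (sketch)."""
    if resource == "nodes":
        return f"{METRICS_PREFIX}/nodes"           # NodeMetrics are cluster-scoped
    if resource == "pods":
        if namespace:
            return f"{METRICS_PREFIX}/namespaces/{namespace}/pods"
        return f"{METRICS_PREFIX}/pods"            # pods in all namespaces
    raise ValueError(f"Metrics API serves only nodes and pods, not {resource!r}")


print(metrics_path("pods", "kube-system"))
# /apis/metrics.k8s.io/v1beta1/namespaces/kube-system/pods
```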

Without metrics-server deployed, try kubectl top pod/node to view the CPU and memory usage of pods or nodes

[root@master01 ~]# kubectl top
Display Resource (CPU/Memory/Storage) usage.

 The top command allows you to see the resource consumption for nodes or pods.

 This command requires Metrics Server to be correctly configured and working on the server.

Available Commands:
  node        Display Resource (CPU/Memory/Storage) usage of nodes
  pod         Display Resource (CPU/Memory/Storage) usage of pods

Usage:
  kubectl top [flags] [options]

Use "kubectl <command> --help" for more information about a given command.
Use "kubectl options" for a list of global command-line options (applies to all commands).
[root@master01 ~]# kubectl top pod
error: Metrics API not available
[root@master01 ~]# kubectl top node
error: Metrics API not available
[root@master01 ~]#

Tip: without metrics-server deployed, kubectl top pod/node tells us that the Metrics API is not available.

Deploying metrics server

Download the deployment manifest

[root@master01 ~]# mkdir metrics-server

[root@master01 ~]# cd metrics-server

[root@master01 metrics-server]# wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.4.0/components.yaml

--2021-01-14 23:54:30-- https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.4.0/components.yaml

Resolving github.com (github.com)... 52.74.223.119

Connecting to github.com (github.com)|52.74.223.119|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://github-production-release-asset-2e65be.s3.amazonaws.com/92132038/c700f080-1f7e-11eb-9e30-864a63f442f4?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20210114%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20210114T155432Z&X-Amz-Expires=300&X-Amz-Signature=fc5a6f41ca50ec22e87074a778d2cb35e716ae6c3231afad17dfaf8a02203e35&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=92132038&response-content-disposition=attachment%3B%20filename%3Dcomponents.yaml&response-content-type=application%2Foctet-stream [following]

--2021-01-14 23:54:32-- https://github-production-release-asset-2e65be.s3.amazonaws.com/92132038/c700f080-1f7e-11eb-9e30-864a63f442f4?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20210114%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20210114T155432Z&X-Amz-Expires=300&X-Amz-Signature=fc5a6f41ca50ec22e87074a778d2cb35e716ae6c3231afad17dfaf8a02203e35&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=92132038&response-content-disposition=attachment%3B%20filename%3Dcomponents.yaml&response-content-type=application%2Foctet-stream

Resolving github-production-release-asset-2e65be.s3.amazonaws.com (github-production-release-asset-2e65be.s3.amazonaws.com)... 52.217.39.44

Connecting to github-production-release-asset-2e65be.s3.amazonaws.com (github-production-release-asset-2e65be.s3.amazonaws.com)|52.217.39.44|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 3962 (3.9K) [application/octet-stream]

Saving to: ‘components.yaml’

100%[===========================================================================================>] 3,962 11.0KB/s in 0.4s

2021-01-14 23:54:35 (11.0 KB/s) - ‘components.yaml’ saved [3962/3962]

[root@master01 metrics-server]# ls

components.yaml

[root@master01 metrics-server]#

Edit the deployment manifest

[root@master01 metrics-server]# cat components.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --kubelet-insecure-tls
        image: k8s.gcr.io/metrics-server/metrics-server:v0.4.0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          periodSeconds: 10
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100
[root@master01 metrics-server]#

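Tip: in the Deployment, the --kubelet-insecure-tls option was added to spec.template.spec.containers[].args. It tells metrics-server not to verify the CA of the serving certificates presented by the kubelets. That is convenient in a lab whose kubelets use self-signed certificates, but it is intended for testing only.

Overall, the manifest does three things:

- It uses a Deployment to run metrics-server as a pod.
- It grants the metrics-server ServiceAccount read-only access (get, list, watch) to pods, nodes and the other resources listed.
- It registers metrics.k8s.io/v1beta1 with the native apiserver through an APIService, so that client requests for resources under metrics.k8s.io are routed to the metrics-server Service.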
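Requests reach metrics-server in two hops:

1. The APIService names the metrics-server Service but no port, so the aggregator uses port 443, the default for spec.service.port in apiregistration.k8s.io/v1.
2. The Service's targetPort: https refers by name to the container port named https. That is 4443, the port set with --secure-port=4443.

Below is a minimal sketch of that named-port lookup, using values copied from the manifest. resolve_target_port is a hypothetical helper, not kube-proxy code.

```python
from typing import Dict, List, Union


def resolve_target_port(target_port: Union[int, str],
                        container_ports: List[Dict]) -> int:
    """Resolve a Service targetPort against a pod's container ports."""
    if isinstance(target_port, int):
        return target_port                       # numeric targetPort is used as-is
    for port in container_ports:
        if port.get("name") == target_port:      # named targetPort matches ports[].name
            return port["containerPort"]
    raise LookupError(f"no container port named {target_port!r}")


# Values copied from components.yaml above.
service_port = {"name": "https", "port": 443, "protocol": "TCP", "targetPort": "https"}
container_ports = [{"containerPort": 4443, "name": "https", "protocol": "TCP"}]
print(service_port["port"], "->",
      resolve_target_port(service_port["targetPort"], container_ports))
# 443 -> 4443
```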

Apply the manifest

[root@master01 metrics-server]# kubectl apply -f components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

[root@master01 metrics-server]#

Verify: does the native apiserver now serve metrics.k8s.io/v1beta1?

[root@master01 metrics-server]# kubectl api-versions|grep metrics

metrics.k8s.io/v1beta1

[root@master01 metrics-server]#

Tip: the metrics.k8s.io/v1beta1 group/version is now registered with the native apiserver.

Is the metrics-server pod running normally?

[root@master01 metrics-server]# kubectl get pods -n kube-system
NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-744cfdf676-kh6rm   1/1     Running   4          5d7h
canal-5bt88                                2/2     Running   20         11d
canal-9ldhl                                2/2     Running   22         11d
canal-fvts7                                2/2     Running   20         11d
canal-mwtg4                                2/2     Running   23         11d
canal-rt8nn                                2/2     Running   21         11d
coredns-7f89b7bc75-k9gdt                   1/1     Running   32         37d
coredns-7f89b7bc75-kp855                   1/1     Running   31         37d
etcd-master01.k8s.org                      1/1     Running   36         37d
kube-apiserver-master01.k8s.org            1/1     Running   14         13d
kube-controller-manager-master01.k8s.org   1/1     Running   43         37d
kube-flannel-ds-fnd2w                      1/1     Running   5          5d5h
kube-flannel-ds-k9l4k                      1/1     Running   7          5d5h
kube-flannel-ds-s7w2j                      1/1     Running   4          5d5h
kube-flannel-ds-vm4mr                      1/1     Running   6          5d5h
kube-flannel-ds-zgq92                      1/1     Running   37         37d
kube-proxy-74fxn                           1/1     Running   10         10d
kube-proxy-fbl6c                           1/1     Running   8          10d
kube-proxy-n82sf                           1/1     Running   10         10d
kube-proxy-ndww5                           1/1     Running   11         10d
kube-proxy-v8dhk                           1/1     Running   11         10d
kube-scheduler-master01.k8s.org            1/1     Running   39         37d
metrics-server-58fcfcc9d-drbw2             1/1     Running   0          32s
[root@master01 metrics-server]#

Tip: the pod is up and running.

Do the pod's logs look normal?

[root@master01 metrics-server]# kubectl logs metrics-server-58fcfcc9d-drbw2 -n kube-system

I0114 17:52:03.601493 1 serving.go:325] Generated self-signed cert (/tmp/apiserver.crt, /tmp/apiserver.key)

E0114 17:52:04.140587 1 pathrecorder.go:107] registered "/metrics" from goroutine 1 [running]:

runtime/debug.Stack(0x1942e80, 0xc00069aed0, 0x1bb58b5)

/usr/local/go/src/runtime/debug/stack.go:24 +0x9d

k8s.io/apiserver/pkg/server/mux.(*PathRecorderMux).trackCallers(0xc00028afc0, 0x1bb58b5, 0x8)

/go/pkg/mod/k8s.io/apiserver@v0.19.2/pkg/server/mux/pathrecorder.go:109 +0x86

k8s.io/apiserver/pkg/server/mux.(*PathRecorderMux).Handle(0xc00028afc0, 0x1bb58b5, 0x8, 0x1e96f00, 0xc0006d88d0)

/go/pkg/mod/k8s.io/apiserver@v0.19.2/pkg/server/mux/pathrecorder.go:173 +0x84

k8s.io/apiserver/pkg/server/routes.MetricsWithReset.Install(0xc00028afc0)

/go/pkg/mod/k8s.io/apiserver@v0.19.2/pkg/server/routes/metrics.go:43 +0x5d

k8s.io/apiserver/pkg/server.installAPI(0xc00000a1e0, 0xc000589b00)

/go/pkg/mod/k8s.io/apiserver@v0.19.2/pkg/server/config.go:711 +0x6c

k8s.io/apiserver/pkg/server.completedConfig.New(0xc000589b00, 0x1f099c0, 0xc0001449b0, 0x1bbdb5a, 0xe, 0x1ef29e0, 0x2cef248, 0x0, 0x0, 0x0)

/go/pkg/mod/k8s.io/apiserver@v0.19.2/pkg/server/config.go:657 +0xb45

sigs.k8s.io/metrics-server/pkg/server.Config.Complete(0xc000589b00, 0xc000599440, 0xc000599b00, 0xdf8475800, 0xc92a69c00, 0x0, 0x0, 0xdf8475800)

/go/src/sigs.k8s.io/metrics-server/pkg/server/config.go:52 +0x312

sigs.k8s.io/metrics-server/cmd/metrics-server/app.runCommand(0xc00001c6e0, 0xc000114600, 0x0, 0x0)

/go/src/sigs.k8s.io/metrics-server/cmd/metrics-server/app/start.go:66 +0x157

sigs.k8s.io/metrics-server/cmd/metrics-server/app.NewMetricsServerCommand.func1(0xc0000d9340, 0xc0005a4cd0, 0x0, 0x5, 0x0, 0x0)

/go/src/sigs.k8s.io/metrics-server/cmd/metrics-server/app/start.go:37 +0x33

github.com/spf13/cobra.(*Command).execute(0xc0000d9340, 0xc00013a130, 0x5, 0x5, 0xc0000d9340, 0xc00013a130)

/go/pkg/mod/github.com/spf13/cobra@v1.0.0/command.go:842 +0x453

github.com/spf13/cobra.(*Command).ExecuteC(0xc0000d9340, 0xc00013a180, 0x0, 0x0)

/go/pkg/mod/github.com/spf13/cobra@v1.0.0/command.go:950 +0x349

github.com/spf13/cobra.(*Command).Execute(...)

/go/pkg/mod/github.com/spf13/cobra@v1.0.0/command.go:887

main.main()

/go/src/sigs.k8s.io/metrics-server/cmd/metrics-server/metrics-server.go:38 +0xae

I0114 17:52:04.266492 1 requestheader_controller.go:169] Starting RequestHeaderAuthRequestController

I0114 17:52:04.267021 1 shared_informer.go:240] Waiting for caches to sync for RequestHeaderAuthRequestController

I0114 17:52:04.266641 1 secure_serving.go:197] Serving securely on [::]:4443

I0114 17:52:04.266670 1 tlsconfig.go:240] Starting DynamicServingCertificateController

I0114 17:52:04.266682 1 dynamic_serving_content.go:130] Starting serving-cert::/tmp/apiserver.crt::/tmp/apiserver.key

I0114 17:52:04.266688 1 configmap_cafile_content.go:202] Starting client-ca::kube-system::extension-apiserver-authentication::client-ca-file

I0114 17:52:04.267120 1 shared_informer.go:240] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::client-ca-file

I0114 17:52:04.266692 1 configmap_cafile_content.go:202] Starting client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file

I0114 17:52:04.267301 1 shared_informer.go:240] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file

I0114 17:52:04.367448 1 shared_informer.go:247] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file

I0114 17:52:04.367472 1 shared_informer.go:247] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::client-ca-file

I0114 17:52:04.367462 1 shared_informer.go:247] Caches are synced for RequestHeaderAuthRequestController

[root@master01 metrics-server]#

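Tip: the container is running normally as long as the metrics-server logs contain no errors that stop it from working, such as a failure to start or to register. The log above does contain one E-level entry from pathrecorder.go (registered "/metrics" from goroutine 1), followed by a stack trace, logged during startup. However, the server then goes on to serve securely on :4443 and its caches sync, so here the entry did not affect metrics-server's operation.

Verify: use kubectl top to view the CPU and memory usage of nodes and pods, and check that the command now works.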
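To scan a longer log for problems, match the klog header format seen above with a regular expression. The header consists of:

- a severity letter (I/W/E/F) followed by MMDD;
- the time;
- the thread id;
- the source file:line, then ].

This is a minimal sketch, assuming that header layout. severity_counts is a hypothetical helper, and continuation lines such as the Go stack trace are simply skipped. You could feed it the output of kubectl logs -n kube-system deploy/metrics-server.

```python
import re
from collections import Counter
from typing import Iterable

# klog header: <severity><MMDD> <hh:mm:ss.micros> <thread id> <file:line>] <message>
KLOG_HEADER = re.compile(
    r"^(?P<sev>[IWEF])\d{4} \d{2}:\d{2}:\d{2}\.\d{6}\s+\d+ (?P<src>[^\]]+)\] (?P<msg>.*)$"
)


def severity_counts(lines: Iterable[str]) -> Counter:
    """Count log lines by klog severity; continuation lines (stack traces) are skipped."""
    counts: Counter = Counter()
    for line in lines:
        match = KLOG_HEADER.match(line.strip())
        if match:
            counts[match.group("sev")] += 1
    return counts


log = """\
I0114 17:52:03.601493 1 serving.go:325] Generated self-signed cert (/tmp/apiserver.crt, /tmp/apiserver.key)
E0114 17:52:04.140587 1 pathrecorder.go:107] registered "/metrics" from goroutine 1 [running]:
runtime/debug.Stack(0x1942e80, 0xc00069aed0, 0x1bb58b5)
I0114 17:52:04.266641 1 secure_serving.go:197] Serving securely on [::]:4443
"""
print(dict(severity_counts(log.splitlines())))  # {'I': 2, 'E': 1}
```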

[root@master01 metrics-server]# kubectl top node
NAME               CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
master01.k8s.org   235m         11%    1216Mi          70%
node01.k8s.org     140m         3%     747Mi           20%
node02.k8s.org     120m         3%     625Mi           17%
node03.k8s.org     133m         3%     594Mi           16%
node04.k8s.org     125m         3%     700Mi           19%
[root@master01 metrics-server]# kubectl top pods -n kube-system
NAME                                       CPU(cores)   MEMORY(bytes)
calico-kube-controllers-744cfdf676-kh6rm   2m           23Mi
canal-5bt88                                50m          118Mi
canal-9ldhl                                22m          86Mi
canal-fvts7                                49m          106Mi
canal-mwtg4                                57m          113Mi
canal-rt8nn                                56m          113Mi
coredns-7f89b7bc75-k9gdt                   3m           12Mi
coredns-7f89b7bc75-kp855                   3m           15Mi
etcd-master01.k8s.org                      25m          72Mi
kube-apiserver-master01.k8s.org            99m          410Mi
kube-controller-manager-master01.k8s.org   14m          88Mi
kube-flannel-ds-fnd2w                      3m           45Mi
kube-flannel-ds-k9l4k                      3m           27Mi
kube-flannel-ds-s7w2j                      4m           46Mi
kube-flannel-ds-vm4mr                      3m           45Mi
kube-flannel-ds-zgq92                      2m           19Mi
kube-proxy-74fxn                           1m           27Mi
kube-proxy-fbl6c                           1m           23Mi
kube-proxy-n82sf                           1m           25Mi
kube-proxy-ndww5                           1m           25Mi
kube-proxy-v8dhk                           2m           23Mi
kube-scheduler-master01.k8s.org            3m           33Mi
metrics-server-58fcfcc9d-drbw2             6m           23Mi
[root@master01 metrics-server]#

Tip: kubectl top now runs normally, which shows that metrics-server was deployed successfully.
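The CPU(cores) and MEMORY(bytes) columns are Kubernetes quantities. "m" means thousandths of a core (235m = 0.235 cores), and "Mi" means mebibytes (2^20 bytes).

Below is a minimal Python sketch for turning them into numbers, e.g. to total the node CPU usage shown above. parse_quantity is a hypothetical helper that handles only the common suffixes, not the full quantity grammar (exponent forms such as 1e3 are not supported).

```python
from decimal import Decimal

# Multipliers for common Kubernetes quantity suffixes (a subset only).
_SUFFIXES = {
    "Ki": Decimal(2) ** 10, "Mi": Decimal(2) ** 20,
    "Gi": Decimal(2) ** 30, "Ti": Decimal(2) ** 40,
    "m": Decimal("0.001"),
    "k": Decimal(10) ** 3, "M": Decimal(10) ** 6,
    "G": Decimal(10) ** 9, "T": Decimal(10) ** 12,
}


def parse_quantity(quantity: str) -> Decimal:
    """Convert a quantity such as "235m" (CPU) or "1216Mi" (memory) to a number."""
    # Two-letter binary suffixes are tried before the one-letter ones.
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if quantity.endswith(suffix):
            return Decimal(quantity[: -len(suffix)]) * _SUFFIXES[suffix]
    return Decimal(quantity)          # plain number, e.g. "2" cores


node_cpu = ["235m", "140m", "120m", "133m", "125m"]   # CPU(cores) column above
print(sum(parse_quantity(q) for q in node_cpu))      # 0.753
print(parse_quantity("1216Mi"))                      # 1275068416
```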

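That completes the example of extending k8s with an APIService resource plus a custom apiserver. To sum up, the main job of an APIService resource is to create a route on the aggregator. That route forwards requests for the corresponding endpoint (group/version) to the Service of the custom apiserver.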
