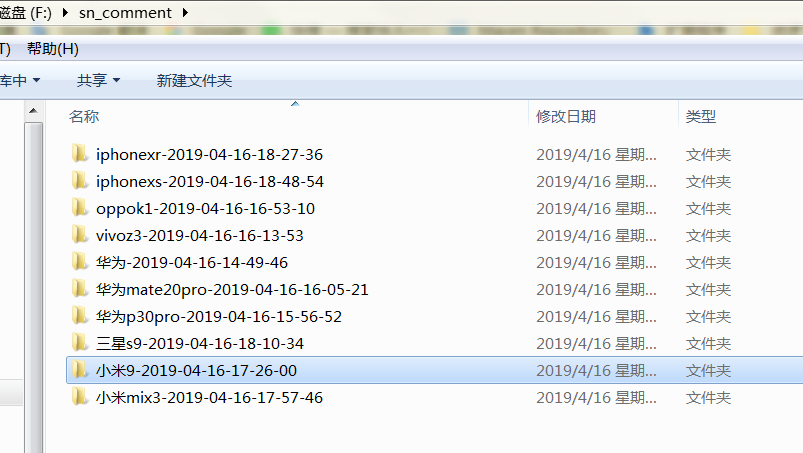

Graduation Project, Part 1: Scraping Suning Product Reviews with Python

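My graduation project needs a large volume of product reviews, and the datasets I found online are fairly old, so I scraped them myself.

Proxy pool: proxy_pool, GitHub: https://github.com/jhao104/proxy_pool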
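As a rough sketch of how an address from the proxy pool can be turned into settings for `requests`: the helper names `parse_proxy_body` and `build_proxies` are hypothetical, and the JSON response shape with a `proxy` field is an assumption about newer proxy_pool releases (the script in this post reads the body as plain `ip:port` text). Since `requests` picks a proxy by URL scheme, the dict covers both `http` and `https`.

```python
import json


def parse_proxy_body(body):
    """Return "ip:port" from the body of a proxy_pool /get/ response.

    Assumption: the body is either plain "ip:port" text or a JSON object
    with a "proxy" field, depending on the proxy_pool version.
    """
    body = body.strip()
    if body.startswith("{"):
        return json.loads(body)["proxy"]
    return body


def build_proxies(address):
    """Build a requests-style proxies dict that covers both URL schemes."""
    proxy_url = "http://" + address
    return {"http": proxy_url, "https": proxy_url}


# Placeholder address, not a real proxy
proxies = build_proxies(parse_proxy_body('{"proxy": "1.2.3.4:8080"}'))
print(proxies)
```

The resulting dict can be passed as `proxies=proxies` to `requests.get`.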

User-Agent: fake_useragent

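# Stores with many reviews (Suning recommendations)

https://tuijian.suning.com/recommend-portal/recommendv2/biz.jsonp?parameter=%E5%8D%8E%E4%B8%BA&sceneIds=2-1&count=10

# Reviews

https://review.suning.com/ajax/cluster_review_lists/general-30259269-000000010748901691-0000000000-total-1-default-10-----reviewList.htm?callback=reviewList

# clusterId

# It appears in the href of the "view all reviews" link. Testing showed that this href is added by JavaScript at runtime, so there are two options:

# 1. Match the clusterId directly in the page's JavaScript.

# 2. Or use selenium/phantomjs to request the page, let the JavaScript run, and then parse the resulting HTML to get the href.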
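Option 1 can be sketched as a single regular-expression search over the raw page source. The pattern is the one the script uses (an 8-character clusterId); the helper name `extract_cluster_id` and the sample fragment are hypothetical, and the real page source may be laid out differently.

```python
import re

# An 8-character clusterId embedded in the page's JavaScript
CLUSTER_ID_RE = re.compile(r'"clusterId":"(.{8})"')


def extract_cluster_id(html):
    """Return the clusterId found in the page source, or None if absent."""
    match = CLUSTER_ID_RE.search(html)
    return match.group(1) if match else None


# Hypothetical fragment standing in for the product page source
sample = 'var sn = {"clusterId":"30259269"};'
print(extract_cluster_id(sample))        # 30259269
print(extract_cluster_id("<html></html>"))  # None
```

Returning `None` instead of raising lets the caller skip products whose page has no clusterId.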

Code:

# -*- coding: utf-8 -*-

# @author: Tele

# @Time : 2019/04/15 下午 8:20

import time

import requests

import os

import json

import re

from fake_useragent import UserAgent class SNSplider:

flag = True

regex_cluser_id = re.compile("\"clusterId\":\"(.{8})\"")

regex_comment = re.compile("reviewList\((.*)\)") @staticmethod

def get_proxy():

return requests.get("http://127.0.0.1:5010/get/").content.decode() @staticmethod

def get_ua():

ua = UserAgent()

return ua.random def __init__(self, kw_list):

self.kw_list = kw_list

# 评论url 参数顺序:cluser_id,sugGoodsCode,页码

self.url_temp = "https://review.suning.com/ajax/cluster_review_lists/general-{}-{}-0000000000-total-{}-default-10-----reviewList.htm"

self.headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36",

}

self.proxies = {

"http": None

}

self.parent_dir = None

self.file_dir = None # ua,proxy

def check(self):

self.headers["User-Agent"] = SNSplider.get_ua()

proxy = "http://" + SNSplider.get_proxy()

self.proxies["http"] = proxy

print("ua:", self.headers["User-Agent"])

print("proxy:", self.proxies["http"]) # 评论

def parse_url(self, cluster_id, sugGoodsCode, page):

url = self.url_temp.format(cluster_id, sugGoodsCode, page)

response = requests.get(url, headers=self.headers, proxies=self.proxies, verify=False)

if response.status_code == 200:

print(url)

if len(response.content) < 0:

return

data = json.loads(SNSplider.regex_comment.findall(response.content.decode())[0])

if "commodityReviews" in data:

# 评论

comment_list = data["commodityReviews"]

if len(comment_list) > 0:

item_list = list()

for comment in comment_list:

item = dict()

try:

# 商品名

item["referenceName"] = comment["commodityInfo"]["commodityName"]

except:

item["referenceName"] = None

# 评论时间

item["creationTime"] = comment["publishTime"]

# 内容

item["content"] = comment["content"]

# label

item["label"] = comment["labelNames"]

item_list.append(item) # 保存

with open(self.file_dir, "a", encoding="utf-8") as file:

file.write(json.dumps(item_list, ensure_ascii=False, indent=2))

file.write("\n")

time.sleep(5)

else:

SNSplider.flag = False

else:

print("评论页出错") # 提取商品信息

def get_product_info(self):

url_temp = "https://tuijian.suning.com/recommend-portal/recommendv2/biz.jsonp?parameter={}&sceneIds=2-1&count=10"

result_list = list()

for kw in self.kw_list:

url = url_temp.format(kw)

response = requests.get(url, headers=self.headers, proxies=self.proxies, verify=False)

if response.status_code == 200:

kw_dict = dict()

id_list = list()

data = json.loads(response.content.decode())

skus_list = data["sugGoods"][0]["skus"]

if len(skus_list) > 0:

for skus in skus_list:

item = dict()

sugGoodsCode = skus["sugGoodsCode"]

# 请求cluserId

item["sugGoodsCode"] = sugGoodsCode

item["cluster_id"] = self.get_cluster_id(sugGoodsCode)

id_list.append(item)

kw_dict["title"] = kw

kw_dict["id_list"] = id_list

result_list.append(kw_dict)

else:

pass

return result_list # cluserid

def get_cluster_id(self, sugGoodsCode):

self.check()

url = "https://product.suning.com/0000000000/{}.html".format(sugGoodsCode[6::])

response = requests.get(url, headers=self.headers, proxies=self.proxies, verify=False)

if response.status_code == 200:

cluser_id = None

try:

cluser_id = SNSplider.regex_cluser_id.findall(response.content.decode())[0]

except:

pass

return cluser_id

else:

print("请求cluster id出错") def get_comment(self, item_list):

if len(item_list) > 0:

for item in item_list:

id_list = item["id_list"]

item_title = item["title"]

if len(id_list) > 0:

self.parent_dir = "f:/sn_comment/" + item_title + time.strftime("-%Y-%m-%d-%H-%M-%S",

time.localtime(time.time()))

if not os.path.exists(self.parent_dir):

os.makedirs(self.parent_dir)

for product_code in id_list:

# 检查proxy,ua

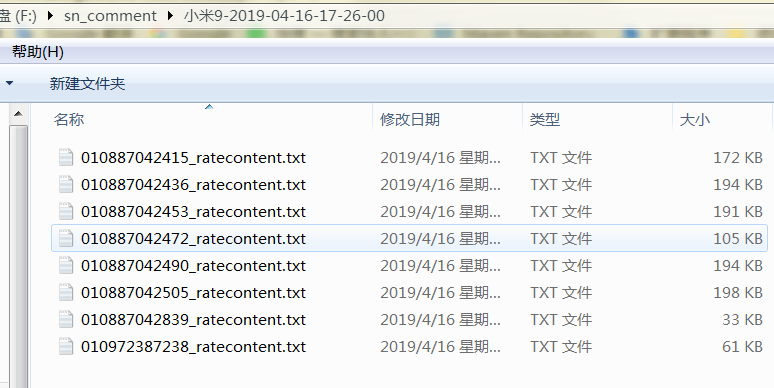

sugGoodsCode = product_code["sugGoodsCode"]

cluster_id = product_code["cluster_id"]

if not cluster_id:

continue

page = 1

# 检查目录

self.file_dir = self.parent_dir + "/" + sugGoodsCode[6::] + "_ratecontent.txt"

self.check()

while SNSplider.flag:

self.parse_url(cluster_id, sugGoodsCode, page)

page += 1

SNSplider.flag = True

else:

print("---error,empty id list---")

else:

print("---error,empty item list---") def run(self):

self.check()

item_list = self.get_product_info()

print(item_list)

self.get_comment(item_list) def main():

# , "华为mate20pro", "vivoz3", "oppok1", "荣耀8x", "小米9", "小米mix3", "三星s9", "iphonexr", "iphonexs"

# "华为p30pro", "华为mate20pro", "vivoz3""oppok1""荣耀8x", "小米9"

kw_list = ["小米mix3", "三星s9", "iphonexr", "iphonexs"]

splider = SNSplider(kw_list)

splider.run() if __name__ == '__main__':

main()
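The two pieces the script relies on most, building the review URL and unwrapping the `reviewList(...)` JSONP response, can be tried offline with a short sketch. The helper names `build_review_url` and `unwrap_jsonp` are hypothetical, and the sample payload is invented for illustration (only the `commodityReviews`, `content`, and `publishTime` field names come from the script above).

```python
import json
import re

REVIEW_URL = ("https://review.suning.com/ajax/cluster_review_lists/"
              "general-{}-{}-0000000000-total-{}-default-10-----reviewList.htm")
# re.S lets the payload span several lines
JSONP_RE = re.compile(r"reviewList\((.*)\)", re.S)


def build_review_url(cluster_id, sug_goods_code, page):
    """Fill the template in the order: cluster_id, sugGoodsCode, page."""
    return REVIEW_URL.format(cluster_id, sug_goods_code, page)


def unwrap_jsonp(text):
    """Return the parsed JSON inside reviewList(...), or None if not wrapped."""
    match = JSONP_RE.search(text)
    return json.loads(match.group(1)) if match else None


# Invented payload standing in for a real response body
sample = 'reviewList({"commodityReviews": [{"content": "ok", "publishTime": "2019-04-15 20:20:00"}]})'
data = unwrap_jsonp(sample)
print(build_review_url("30259269", "000000010748901691", 1))
print(len(data["commodityReviews"]))
```

An empty `commodityReviews` list is a natural signal to stop paging for the current product.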
