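A Detailed Guide to the requests Library (Python 3)

This article covers the basic usage of the requests library. I hope you find it helpful.

Official requests documentation: https://2.python-requests.org/en/master/

I. Requests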

1. GET request

# coding:utf8
import requests

response = requests.get('http://www.httpbin.org/get')
print(response.text)
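A GET request often carries query-string parameters. As a minimal sketch, the `params` keyword of `requests` builds the query string from a dict. The request is only prepared here, not sent, so the resulting URL can be inspected offline. The parameter names and values are made up for illustration.

```python
import requests

# Hypothetical query parameters, used only for illustration.
params = {'name': 'Thanlon', 'age': 22}

# Prepare the request without sending it, so no network access is needed.
prepared = requests.Request('GET', 'http://www.httpbin.org/get', params=params).prepare()

print(prepared.url)  # the base URL followed by ?name=Thanlon&age=22
```

Passing the same dict as `requests.get(url, params=params)` sends the request with that URL.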

2. POST request

# coding:utf8
import requests

data = {
    'name': 'Thanlon',
    'age': 22,
    'sex': '男'  # "male"
}
response = requests.post('http://httpbin.org/post', data=data)
print(response.text)
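To see what `data=` actually puts on the wire, the request can be prepared without being sent. The sketch below compares it with the `json=` keyword, which the example above does not use. It is shown only for contrast, and the payload is illustrative.

```python
import json

import requests

payload = {'name': 'Thanlon', 'age': 22}

# Build both requests without sending them over the network.
form_req = requests.Request('POST', 'http://httpbin.org/post', data=payload).prepare()
json_req = requests.Request('POST', 'http://httpbin.org/post', json=payload).prepare()

print(form_req.headers['Content-Type'])  # application/x-www-form-urlencoded
print(form_req.body)                     # name=Thanlon&age=22
print(json_req.headers['Content-Type'])  # application/json
print(json.loads(json_req.body))         # the original dict, decoded from the JSON body
```

In short, `data=` with a dict sends a form-encoded body, while `json=` serializes the dict to JSON and sets the matching content type.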

3. Parsing JSON

# coding:utf8
import requests, json

response = requests.get('http://www.httpbin.org/get')
print(type(response.text))  # <class 'str'>
# print(response.text)
print(response.json())  # equivalent to json.loads(response.text)
print(type(response.json()))  # <class 'dict'>
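`response.json()` raises an error when the body is not valid JSON (for example, an HTML error page). A small defensive wrapper might look like the following. `safe_json` is a hypothetical helper name, not part of `requests`.

```python
def safe_json(response, default=None):
    """Return the decoded JSON body, or `default` if the body is not valid JSON."""
    try:
        return response.json()
    except ValueError:
        # The decoding errors raised by response.json() derive from ValueError.
        return default
```

It would be called as `safe_json(requests.get('http://www.httpbin.org/get'), default={})`.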

4. Fetching binary data

# coding:utf8
import requests

response = requests.get('https://www.baidu.com/img/dong_5af13a1a6fd9fb2c587e68ca5038a3c8.gif')
print(type(response.text))  # <class 'str'>
print(type(response.content))  # <class 'bytes'>
print(response.text)  # the bytes decoded as text (unreadable for an image)
print(response.content)  # the raw bytes

5. Saving binary files (images, video)

# coding:utf8
import requests

response = requests.get('https://www.baidu.com/img/dong_5af13a1a6fd9fb2c587e68ca5038a3c8.gif')
# The with statement closes the file automatically, so no explicit f.close() is needed.
with open('image.gif', 'wb') as f:
    f.write(response.content)
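`response.content` holds the whole body in memory, which is fine for a small image but wasteful for a large video. One possible alternative is to stream the body in chunks with `stream=True` and `iter_content`. The helper name `download`, the chunk size, and the 10-second timeout are illustrative choices.

```python
import requests


def download(url, path, chunk_size=8192):
    """Stream `url` to `path` in chunks and return the number of bytes written."""
    written = 0
    # stream=True defers downloading the body until it is iterated.
    with requests.get(url, stream=True, timeout=10) as response:
        response.raise_for_status()  # stop early on 4xx/5xx responses
        with open(path, 'wb') as f:
            for chunk in response.iter_content(chunk_size=chunk_size):
                f.write(chunk)
                written += len(chunk)
    return written
```

It would be called as `download(image_url, 'image.gif')` with the GIF URL from the example above.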

6. Adding headers (some sites, such as Zhihu, cannot be fetched unless request headers are supplied)

# coding:utf8
# GET request with headers
import requests

headers = {
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.109 Safari/537.36'
}
response = requests.get('https://www.zhihu.com/explore', headers=headers)
print(response.text)

# coding:utf8
# POST request with headers
import requests

data = {
    'name': 'Thanlon',
    'age': 22,
    'sex': '男'  # "male"
}
headers = {
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.109 Safari/537.36'
}
response = requests.post('http://httpbin.org/post', data=data, headers=headers)
print(response.text)
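The reason a site may reject a plain request is visible in the default User-Agent, which identifies the client as `python-requests`. The sketch below prints that default and shows a per-request header overriding it. Nothing is sent over the network, and the shortened browser string is a stand-in for illustration.

```python
import requests

# The User-Agent that requests sends when none is supplied.
print(requests.utils.default_user_agent())  # starts with "python-requests/"

session = requests.Session()
# Shortened, illustrative browser string (an assumption, not a required value).
custom = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64)'}

# prepare_request merges the session defaults with the per-request headers.
prepared = session.prepare_request(
    requests.Request('GET', 'https://www.zhihu.com/explore', headers=custom)
)
print(prepared.headers['User-Agent'])  # the custom value; header names are case-insensitive
```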

II. Responses

1. Response attributes

# coding:utf8
import requests

response = requests.get('http://httpbin.org')
print(type(response.status_code), response.status_code)  # status code, <class 'int'>
print(type(response.headers), response.headers)  # response headers, <class 'requests.structures.CaseInsensitiveDict'>
print(type(response.cookies), response.cookies)  # cookies, <class 'requests.cookies.RequestsCookieJar'>
print(type(response.url), response.url)  # the requested URL, <class 'str'>
print(type(response.history), response.history)  # redirect history, <class 'list'>
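The `CaseInsensitiveDict` type used for `response.headers` means header lookups do not depend on letter case. A small offline sketch, using a hand-built dict rather than a real response:

```python
from requests.structures import CaseInsensitiveDict

# Hand-built stand-in for response.headers.
headers = CaseInsensitiveDict({'Content-Type': 'text/html; charset=utf-8'})

print(headers['content-type'])      # lookup ignores case
print(headers.get('CONTENT-TYPE'))  # same value
print('content-type' in headers)    # True
print(list(headers.keys()))         # the original casing is preserved
```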

2. Checking the status code

# coding:utf8
import requests

response = requests.get('http://httpbin.org')
# requests.codes.ok is equivalent to 200
if response.status_code == requests.codes.ok:
    print('Request successful')
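`requests.codes` maps readable names to numeric status codes. The sketch below looks up two of them and adds a helper for the common case of accepting any 2xx code. `is_success` is a hypothetical name, not part of `requests`.

```python
import requests

print(requests.codes.ok)         # 200
print(requests.codes.not_found)  # 404
print(requests.codes['ok'])      # item access works as well


def is_success(status_code):
    """Return True for any 2xx status code, not only 200."""
    return 200 <= status_code < 300
```

For a quick check on a real response, `response.ok` is true for status codes below 400, and `response.raise_for_status()` raises `HTTPError` for 4xx and 5xx responses.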

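3. File upload

# coding:utf8
import requests

# Open the file in a with block so that it is closed after the upload.
with open('image.gif', 'rb') as f:
    files = {'file': f}  # the field name 'file' can be anything
    response = requests.post('http://httpbin.org/post', files=files)
print(response.text)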

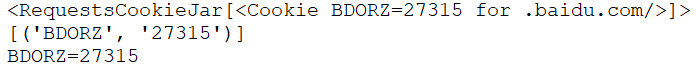
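Under the hood, `files=` produces a `multipart/form-data` body. The following sketch prepares such a request offline, using in-memory bytes in place of a real file. The `(filename, content, content type)` tuple form is used for that purpose, and the byte string is a placeholder.

```python
import requests

# In-memory stand-in for a file: (filename, content, content type).
files = {'file': ('image.gif', b'GIF89a', 'image/gif')}

# Prepare the upload without sending it.
prepared = requests.Request('POST', 'http://httpbin.org/post', files=files).prepare()

print(prepared.headers['Content-Type'])          # multipart/form-data; boundary=...
print(b'filename="image.gif"' in prepared.body)  # True
```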

4. Getting cookies

# coding:utf8
import requests

response = requests.get('http://www.baidu.com')
print(response.cookies)
print(response.cookies.items())  # [('BDORZ', '27315')]
for key, value in response.cookies.items():
    print(key + '=' + value)
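`response.cookies` is a `RequestsCookieJar`, which behaves much like a dict. As an offline sketch, the jar below is filled by hand with the cookie from the example output. The domain and path are assumptions made for illustration.

```python
import requests
from requests.cookies import RequestsCookieJar

jar = RequestsCookieJar()
# Domain and path are illustrative assumptions.
jar.set('BDORZ', '27315', domain='.baidu.com', path='/')

print(jar.get('BDORZ'))                         # 27315
print(jar.items())                              # [('BDORZ', '27315')]
print(requests.utils.dict_from_cookiejar(jar))  # {'BDORZ': '27315'}
```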

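5. Session persistence: simulating a login (a Session behaves like a single browser making the requests)

# coding:utf8
import requests

s = requests.Session()
s.get('http://httpbin.org/cookies/set/BDORZ/123456')  # the server sets a cookie
response = s.get('http://httpbin.org/cookies')  # the same session sends the cookie back
print(response.text)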
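What the session does on the second request can be shown offline: cookies stored in `s.cookies` are attached to every later request made through that session. In this sketch the cookie is seeded by hand rather than set by the server, which is an assumption made so the example runs without network access.

```python
import requests

s = requests.Session()
# Seed the jar by hand; with a live server, the Set-Cookie response does this.
s.cookies.set('BDORZ', '123456', domain='httpbin.org', path='/')

# Prepare (but do not send) a later request through the same session.
prepared = s.prepare_request(requests.Request('GET', 'http://httpbin.org/cookies'))
print(prepared.headers.get('Cookie'))  # the stored cookie is attached automatically
```

Two separate `requests.get` calls share no such jar, which is why the cookie would be lost between them.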

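6. Certificate verification

# coding:utf8
import requests

# Verification is on by default; an invalid certificate raises requests.exceptions.SSLError.
response = requests.get('https://www.12306.cn')
print(response.status_code)

# coding:utf8
import requests, urllib3

urllib3.disable_warnings()  # suppress the warning emitted for unverified requests
response = requests.get('https://www.12306.cn', verify=False)  # verify defaults to True
print(response.status_code)  # the certificate is not verified; without disable_warnings() a warning is printed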

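7. Specifying a client certificate

# coding:utf8
import requests

# cert takes a (certificate, key) tuple; a set would lose the order of the two paths.
response = requests.get('https://www.12306.cn', cert=('/path/server.crt', '/path/key'))
print(response.status_code)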

8. Setting proxies

# coding:utf8
import requests

proxies = {
    'http': 'http://127.0.0.1:9743',
    'https': 'https://127.0.0.1:9743'
}
response = requests.get('https://www.taobao.com', proxies=proxies)
print(response.status_code)
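The keys of the `proxies` dict are matched against the scheme of the target URL, and a `scheme://host` key can override the proxy for a single host. The sketch below inspects that choice offline with `requests.utils.select_proxy`, a utility function used here only for illustration. The per-host entry and its port 9744 are hypothetical.

```python
import requests

proxies = {
    'http': 'http://127.0.0.1:9743',
    'https': 'https://127.0.0.1:9743',
    # Hypothetical per-host override: only this host goes through port 9744.
    'https://www.taobao.com': 'http://127.0.0.1:9744',
}

print(requests.utils.select_proxy('https://www.taobao.com', proxies))  # per-host entry
print(requests.utils.select_proxy('https://example.com', proxies))     # 'https' entry
print(requests.utils.select_proxy('http://example.com', proxies))      # 'http' entry
```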

9. Setting proxies (when a username and password are required)

# coding:utf8
import requests

proxies = {
    'http': 'http://user:password@127.0.0.1:9743',
    'https': 'https://user:password@127.0.0.1:9743'
}
response = requests.get('https://www.taobao.com', proxies=proxies)
print(response.status_code)

10. SOCKS proxies

# coding:utf8
# SOCKS support requires the extra dependency: pip install requests[socks]
import requests

proxies = {
    'http': 'socks5://127.0.0.1:9743',
    'https': 'socks5://127.0.0.1:9743'
}
response = requests.get('https://www.taobao.com', proxies=proxies)
print(response.status_code)

11. Setting a timeout

# coding:utf8
import requests

response = requests.get('http://httpbin.org', timeout=1)  # timeout in seconds
print(response.status_code)
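A single number applies to both the connect and the read phase. As a sketch, a `(connect, read)` tuple sets the two separately, and catching `Timeout` covers both cases. The helper name `fetch_status` and the default values are illustrative.

```python
import requests


def fetch_status(url, connect_timeout=3.05, read_timeout=10):
    """Return the status code, or None if the request timed out."""
    try:
        # A (connect, read) tuple sets the two timeouts separately.
        response = requests.get(url, timeout=(connect_timeout, read_timeout))
    except requests.exceptions.Timeout:
        # Timeout is the base class of ConnectTimeout and ReadTimeout.
        return None
    return response.status_code
```

It would be called as `fetch_status('http://httpbin.org')`.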

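12. Authentication

If you get a 401 error (Unauthorized: the request requires authentication), pass the auth parameter.

# coding:utf8
import requests
from requests.auth import HTTPBasicAuth

response = requests.get('https://api.github.com/user', auth=HTTPBasicAuth('user', 'pass'))
# Shorthand: a (user, pass) tuple is treated as HTTP Basic Auth
# response = requests.get('https://api.github.com/user', auth=('user', 'pass'))
print(response.status_code)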
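Both forms produce the same `Authorization` header, which is simply `user:pass` encoded in Base64. The sketch below checks this offline by preparing the requests without sending them. The credentials are the placeholders from the example above.

```python
import base64

import requests
from requests.auth import HTTPBasicAuth

url = 'https://api.github.com/user'
explicit = requests.Request('GET', url, auth=HTTPBasicAuth('user', 'pass')).prepare()
shorthand = requests.Request('GET', url, auth=('user', 'pass')).prepare()

# Basic Auth is "Basic " followed by base64("user:pass").
expected = 'Basic ' + base64.b64encode(b'user:pass').decode('ascii')

print(explicit.headers['Authorization'])
print(explicit.headers['Authorization'] == expected)   # True
print(shorthand.headers['Authorization'] == expected)  # True
```

Because Base64 is an encoding and not encryption, Basic Auth should only be sent over HTTPS.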

13. Exception handling

# coding:utf8
import requests
from requests.exceptions import ReadTimeout, HTTPError, RequestException, ConnectionError

try:
    response = requests.get('http://httpbin.org', timeout=0.3)
    print(response.status_code)
except ReadTimeout:
    print('Timeout')
except HTTPError:
    # Only raised if response.raise_for_status() is called on a 4xx/5xx response.
    print('Http Error')
# except ConnectionError:
#     print('Connection Error')
except RequestException:
    # Base class of all requests exceptions, so it must come last.
    print('Request Error')
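The order of the `except` clauses matters because the exception classes form a hierarchy: a clause for a base class placed first would swallow the more specific ones. The relationships can be checked directly:

```python
from requests.exceptions import (
    ConnectionError, ConnectTimeout, HTTPError, ReadTimeout, RequestException, Timeout,
)

# Each line prints True.
print(issubclass(ReadTimeout, Timeout))
print(issubclass(ConnectTimeout, Timeout))
print(issubclass(ConnectTimeout, ConnectionError))
print(issubclass(Timeout, RequestException))
print(issubclass(HTTPError, RequestException))
print(issubclass(ConnectionError, RequestException))
```

Note that `ConnectTimeout` is both a `ConnectionError` and a `Timeout`, so it is not caught by `except ReadTimeout` and falls through to `RequestException` in the example above.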
