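ES data node drops out of the cluster; system log reports a 120-second timeout

Fault description: a node (a different one each time) keeps dropping out of the cluster for no apparent reason. The node's load spikes above 100, even shell commands hang, and only a forced reboot fixes it. The problem got worse after force_merge was added.

Background: Previously this happened roughly once a month. A while ago I added a scheduled job that runs force_merge=1 starting at 1 a.m. every day; each run takes about 12 hours to complete. That made the problem far more frequent. Failures mostly occurred between 4 and 6 a.m., at least once a week and sometimes several times, and cluster write throughput dropped sharply, leaving the cluster half-usable (writes backed up and data was no longer real-time). The problem escalated right when the merge job was added. Several days of investigation turned up nothing, and since there is little demand for querying historical data, I turned the scheduled job off. The underlying problem, however, has never been solved.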
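For readers unfamiliar with the scheduled job described above, here is a minimal sketch of what such a force merge call might look like. It assumes that "force_merge=1" means the `_forcemerge` API with `max_num_segments=1`; the host and index name are hypothetical placeholders, not values from this cluster.

```python
from urllib.parse import quote, urlencode
from urllib.request import Request


def forcemerge_request(base_url, index, max_num_segments=1):
    """Build (but do not send) a POST request for the _forcemerge API.

    base_url and index are illustrative placeholders; the original post
    does not say which indices the nightly job merged.
    """
    query = urlencode({"max_num_segments": max_num_segments})
    url = f"{base_url.rstrip('/')}/{quote(index, safe='')}/_forcemerge?{query}"
    return Request(url, method="POST")


if __name__ == "__main__":
    req = forcemerge_request("http://localhost:9200", "logs-2018.08.03")
    print(req.get_method(), req.full_url)
    # Sending it would be urllib.request.urlopen(req); the call blocks until
    # the merge finishes, which the post reports took about 12 hours per run.
```

A force merge rewrites whole segments, so it generates heavy sequential disk I/O for as long as it runs; that is worth keeping in mind when reading the I/O-related kernel traces later in the post.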

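There are currently two questions:

1. Why do nodes drop out of the cluster? It still happens from time to time, with no pattern in when it occurs.

2. After one node drops out, why does the whole cluster's throughput fall so sharply, from a write QPS of 700k+ to around 300k?

Hardware problems have been ruled out: the node recovers after a reboot, and colleagues from the systems team checked and found no hardware alarms. I would appreciate suggestions from anyone who has run into this or has ideas on how to investigate. Below is the information I have collected.

Information 1: At the time of the problem (22:52), /var/log/messages contained a large number of entries like the following: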

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994585] INFO: task java:104611 blocked for more than 120 seconds.

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994630] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994682] java D ffffffffffffffff 0 104611 1 0x00000100

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994685] ffff88013f05fc20 0000000000000082 ffff88001e6ee780 ffff88013f05ffd8

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994691] ffff88013f05ffd8 ffff88013f05ffd8 ffff88001e6ee780 ffff88013f05fd68

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994696] ffff88013f05fd70 7fffffffffffffff ffff88001e6ee780 ffffffffffffffff

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994701] Call Trace:

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994706] [<ffffffff8163a909>] schedule+0x29/0x70

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994710] [<ffffffff816385f9>] schedule_timeout+0x209/0x2d0

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994715] [<ffffffff8101c829>] ? read_tsc+0x9/0x10

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994720] [<ffffffff810d814c>] ? ktime_get_ts64+0x4c/0xf0

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994723] [<ffffffff8112882f>] ? delayacct_end+0x8f/0xb0

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994728] [<ffffffff8163acd6>] wait_for_completion+0x116/0x170

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994733] [<ffffffff810b8c10>] ? wake_up_state+0x20/0x20

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994737] [<ffffffff8109e7ac>] flush_work+0xfc/0x1c0

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994741] [<ffffffff8109a7e0>] ? move_linked_works+0x90/0x90

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994768] [<ffffffffa03a143a>] xlog_cil_force_lsn+0x8a/0x210 [xfs]

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994793] [<ffffffffa039fa7e>] _xfs_log_force_lsn+0x6e/0x2f0 [xfs]

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994798] [<ffffffff81639b12>] ? down_read+0x12/0x30

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994823] [<ffffffffa03824d0>] xfs_file_fsync+0x1b0/0x200 [xfs]

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994829] [<ffffffff8120f975>] do_fsync+0x65/0xa0

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994834] [<ffffffff8120fc63>] SyS_fdatasync+0x13/0x20

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994839] [<ffffffff81645b12>] tracesys+0xdd/0xe2

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994854] INFO: task java:67513 blocked for more than 120 seconds.

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994898] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994951] java D ffff88001f8128a8 0 67513 1 0x00000100

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994954] ffff880054a63c20 0000000000000082 ffff880116971700 ffff880054a63fd8

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994959] ffff880054a63fd8 ffff880054a63fd8 ffff880116971700 ffff88001f8128a0

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994964] ffff88001f8128a4 ffff880116971700 00000000ffffffff ffff88001f8128a8

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994970] Call Trace:

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994975] [<ffffffff8163b9e9>] schedule_preempt_disabled+0x29/0x70

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994979] [<ffffffff816396e5>] __mutex_lock_slowpath+0xc5/0x1c0

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994983] [<ffffffff811e8a87>] ? unlazy_walk+0x87/0x140

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994987] [<ffffffff81638b4f>] mutex_lock+0x1f/0x2f

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994992] [<ffffffff8163251e>] lookup_slow+0x33/0xa7

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.994996] [<ffffffff811edf13>] path_lookupat+0x773/0x7a0

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.995001] [<ffffffff811c0e65>] ? kmem_cache_alloc+0x35/0x1d0

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.995005] [<ffffffff811eec0f>] ? getname_flags+0x4f/0x1a0

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.995008] [<ffffffff811edf6b>] filename_lookup+0x2b/0xc0

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.995013] [<ffffffff811efd37>] user_path_at_empty+0x67/0xc0

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.995018] [<ffffffff81101072>] ? from_kgid_munged+0x12/0x20

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.995023] [<ffffffff811e3aef>] ? cp_new_stat+0x14f/0x180

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.995027] [<ffffffff811efda1>] user_path_at+0x11/0x20

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.995032] [<ffffffff811e35e3>] vfs_fstatat+0x63/0xc0

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.995036] [<ffffffff811e3bb1>] SYSC_newlstat+0x31/0x60

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.995042] [<ffffffff810222fd>] ? syscall_trace_enter+0x17d/0x220

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.995047] [<ffffffff81645ab3>] ? tracesys+0x7e/0xe2

Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.995052] [<ffffffff811e3e3e>] SyS_newlstat+0xe/0x10

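Aug 4 22:52:54 tjtx135-6-226 kernel: [4981123.995056] [<ffffffff81645b12>] tracesys+0xdd/0xe2

Based on the errors above, I searched and found the article below on fixing hung_task_timeout_secs and "blocked for more than 120 seconds". I changed the recommended parameters, but the problem still occurs.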
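With this many hung-task reports in the log, it can help to summarize them rather than read each trace. The following is a rough sketch, not a general kernel log parser: it assumes the message format shown above and skips frames marked with `?`, which the kernel flags as unreliable.

```python
import re

# Patterns are tuned to the log format quoted in this post (an assumption).
HUNG_RE = re.compile(r"INFO: task (\S+):(\d+) blocked for more than (\d+) seconds")
FRAME_RE = re.compile(r"\[<[0-9a-f]+>\] (\?\s)?(\S+?)\+0x")


def summarize_hung_tasks(lines):
    """Return one (task, pid, [reliable call frames]) tuple per hung-task report."""
    reports = []
    current = None
    for line in lines:
        hung = HUNG_RE.search(line)
        if hung:
            current = (hung.group(1), int(hung.group(2)), [])
            reports.append(current)
            continue
        frame = FRAME_RE.search(line)
        # Keep only frames without the "?" marker.
        if frame and current is not None and not frame.group(1):
            current[2].append(frame.group(2))
    return reports


if __name__ == "__main__":
    with open("/var/log/messages", errors="replace") as fh:
        for task, pid, frames in summarize_hung_tasks(fh):
            fs_frames = [f for f in frames if f.startswith("xfs_")]
            print(task, pid, "xfs frames:", fs_frames or "none")
```

In the excerpt above, the first report (pid 104611) is waiting inside `xfs_file_fsync`, while the second (pid 67513) is waiting on a mutex during a path lookup, so both are blocked in file system code paths.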

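How to fix hung_task_timeout_secs and "blocked for more than 120 seconds" on Linux

The Linux system stops responding, and /var/log/messages fills with “"echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.” and “blocked for more than 120 seconds” errors.

Cause:

By default, Linux uses up to 40% of available memory as file system cache. Once this threshold is exceeded, the file system flushes all of the cached data to disk, and subsequent I/O requests become synchronous. Flushing the cache to disk has a default timeout of 120 seconds. The problem above occurs because the I/O subsystem is not fast enough to write all of the cached data to disk within 120 seconds. With I/O responding slowly, more and more requests pile up until all system memory is consumed and the system stops responding.

Solution:

Tune the vm.dirty_ratio and vm.dirty_background_ratio parameters according to the application's workload. For example, the following settings are recommended: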

# sysctl -w vm.dirty_ratio=10

# sysctl -w vm.dirty_background_ratio=5

# sysctl -p

To make the settings permanent, edit /etc/sysctl.conf and add the following two lines:

# vi /etc/sysctl.conf

vm.dirty_background_ratio = 5

vm.dirty_ratio = 10

The change takes effect after a reboot.
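To check whether dirty pages are actually piling up before changing these parameters, one option is to sample `/proc/meminfo`. The sketch below is only a rough indicator: it reports `Dirty` plus `Writeback` as a percentage of `MemTotal`, whereas the kernel evaluates `vm.dirty_ratio` against its own estimate of dirtyable memory, so the two numbers are not directly comparable.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a {field: value_in_kB} dict."""
    values = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue
        fields = rest.split()
        if fields and fields[0].isdigit():
            values[key.strip()] = int(fields[0])
    return values


def dirty_percent(meminfo):
    """Dirty + Writeback as a percentage of MemTotal (an approximation only)."""
    pending = meminfo["Dirty"] + meminfo["Writeback"]
    return 100.0 * pending / meminfo["MemTotal"]


if __name__ == "__main__":
    with open("/proc/meminfo") as fh:
        info = parse_meminfo(fh.read())
    print(f"Dirty: {info['Dirty']} kB, Writeback: {info['Writeback']} kB")
    print(f"about {dirty_percent(info):.2f}% of MemTotal")
```

Running this periodically (for example from cron) in the minutes before a failure would show whether dirty pages climb toward the configured ratio or stay flat, which helps decide whether the dirty-page explanation fits this cluster at all.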

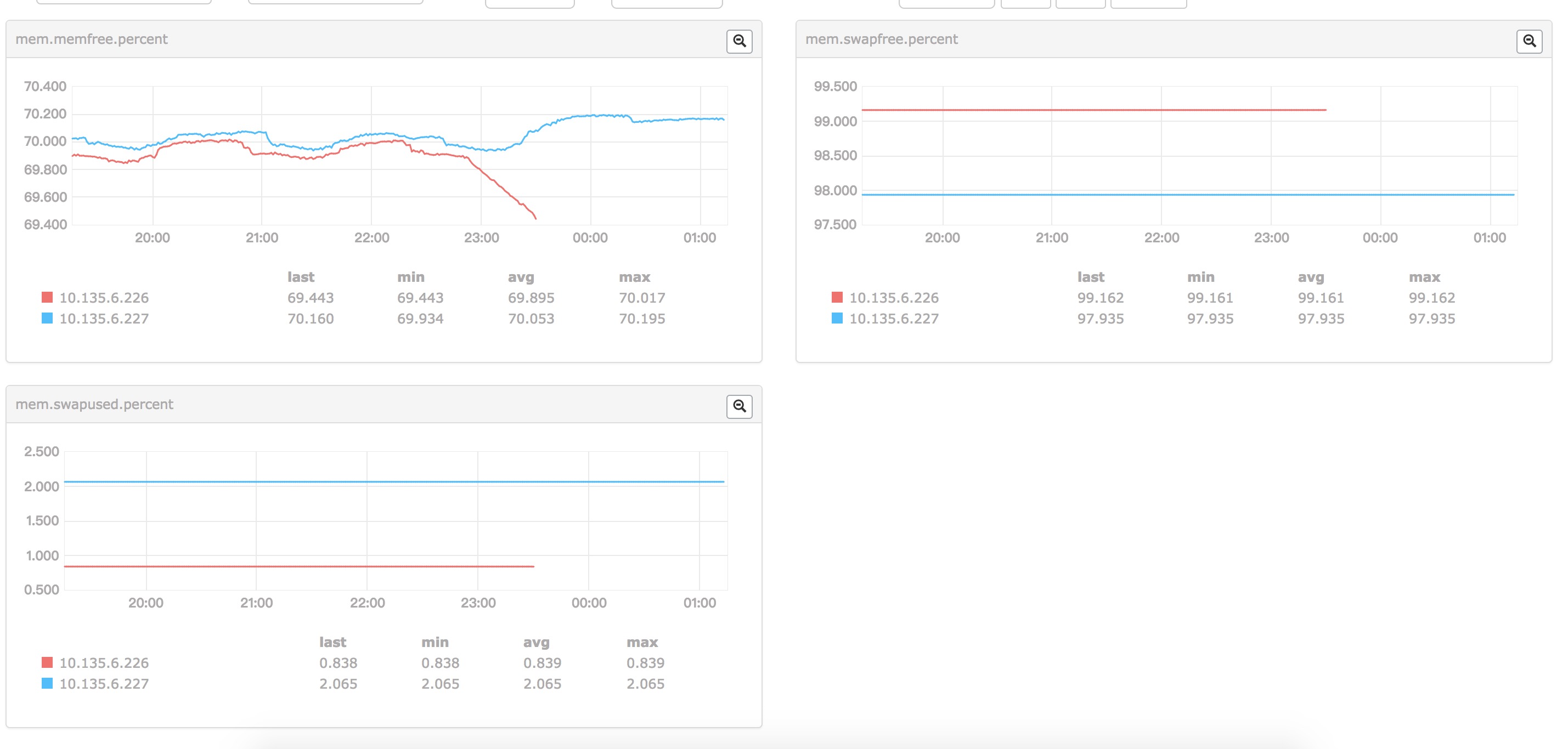

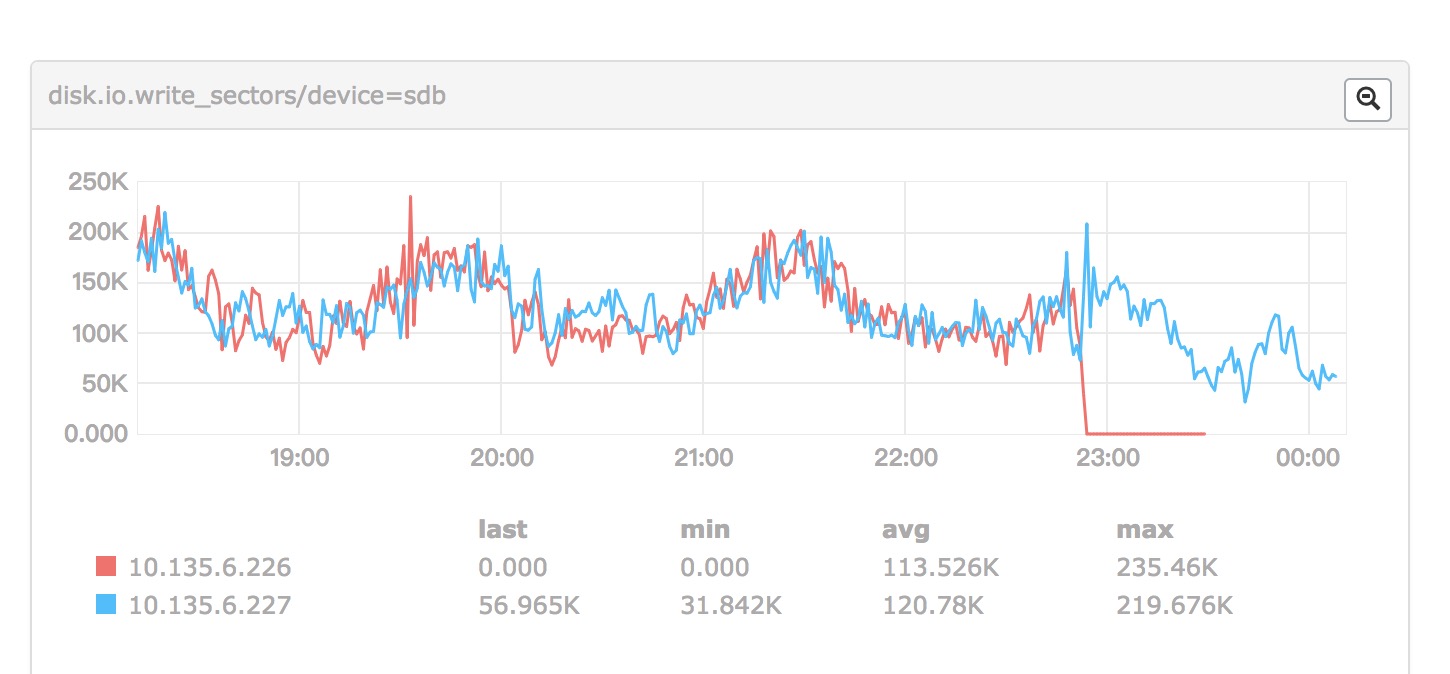

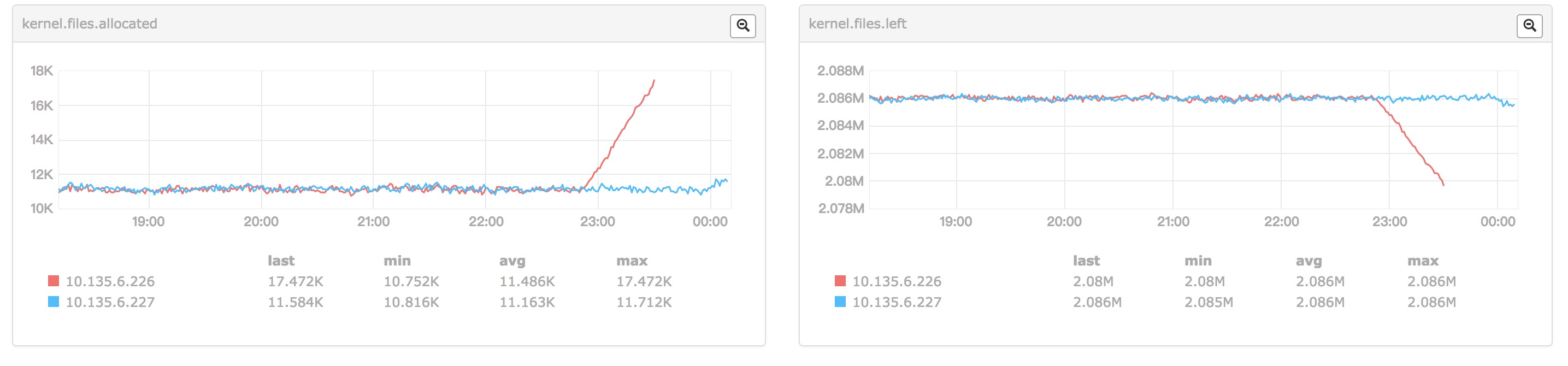

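Based on the blog's conclusion, I suspected the problem might be related to available memory being used as file system cache. However, I compared the system monitoring before and after the failure, and nothing looked abnormal before the failure occurred.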

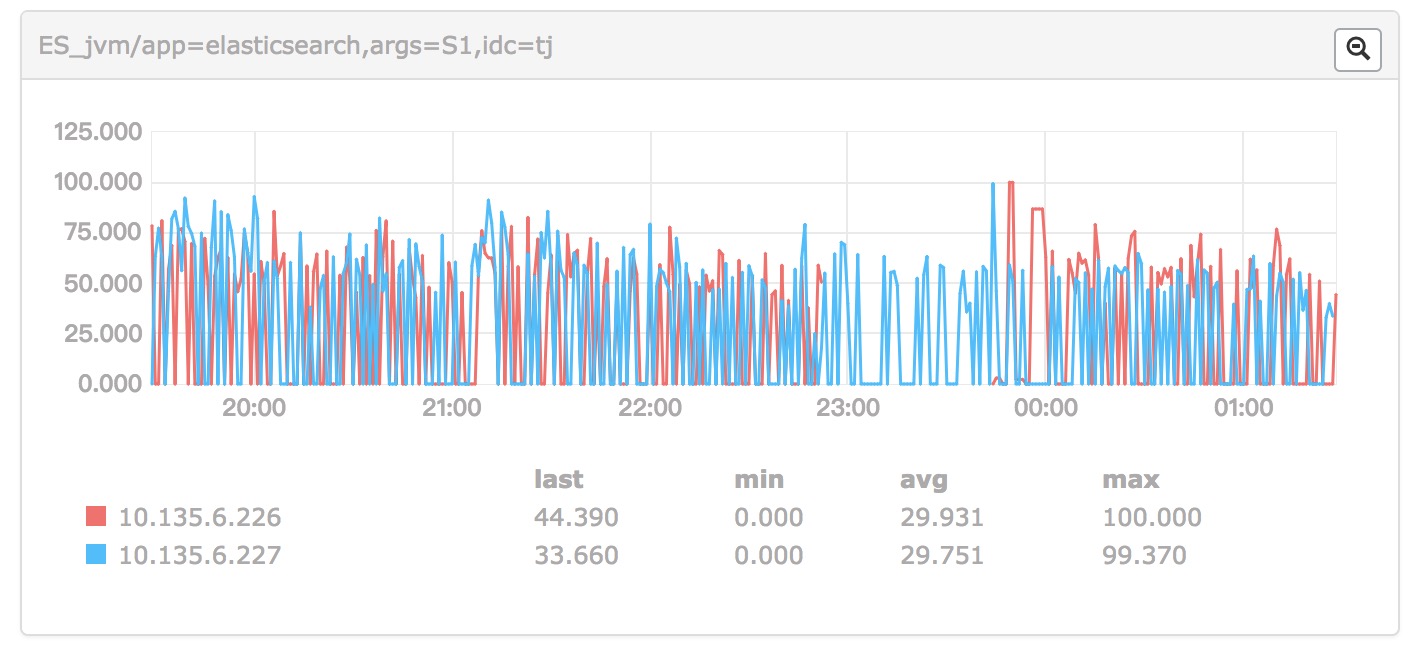

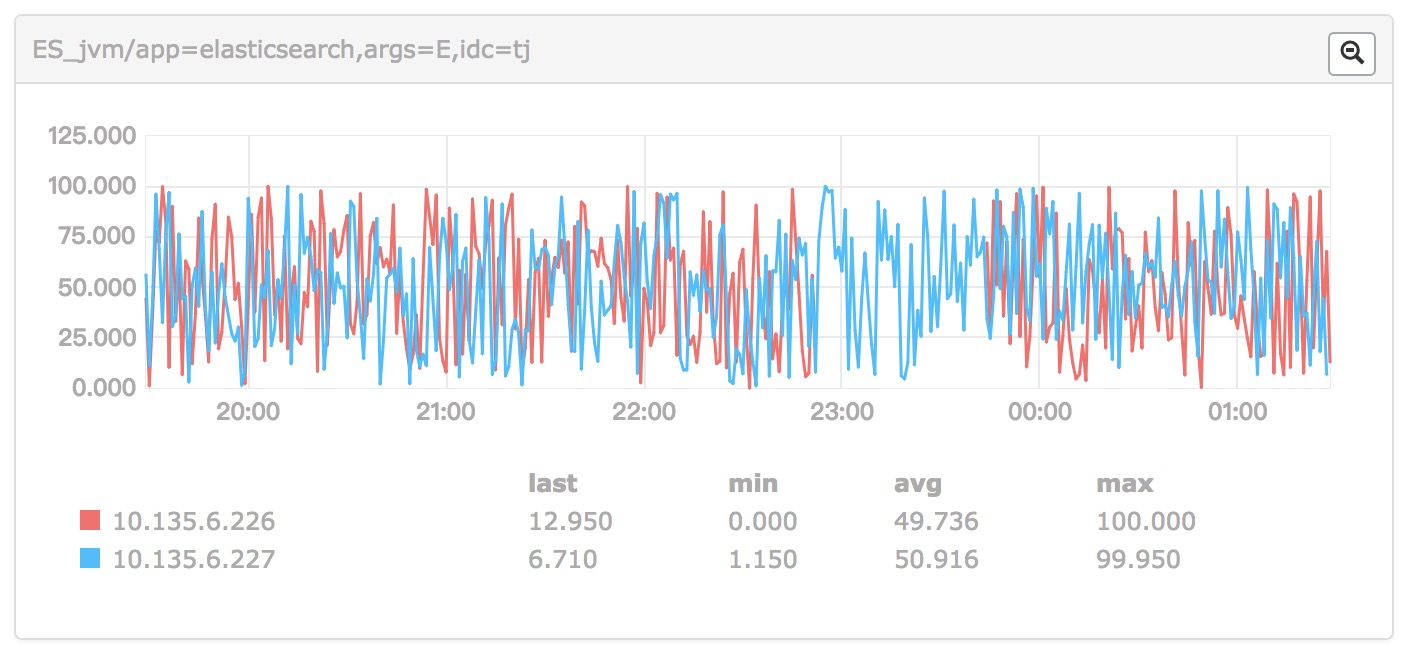

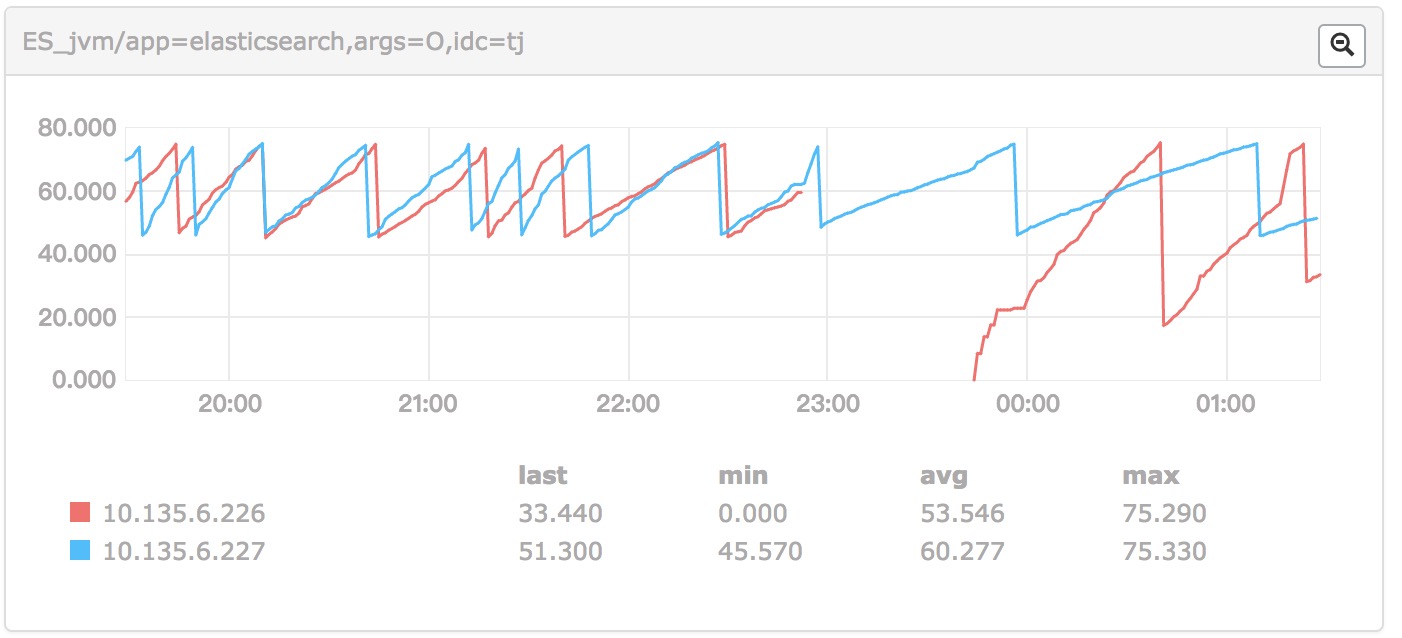

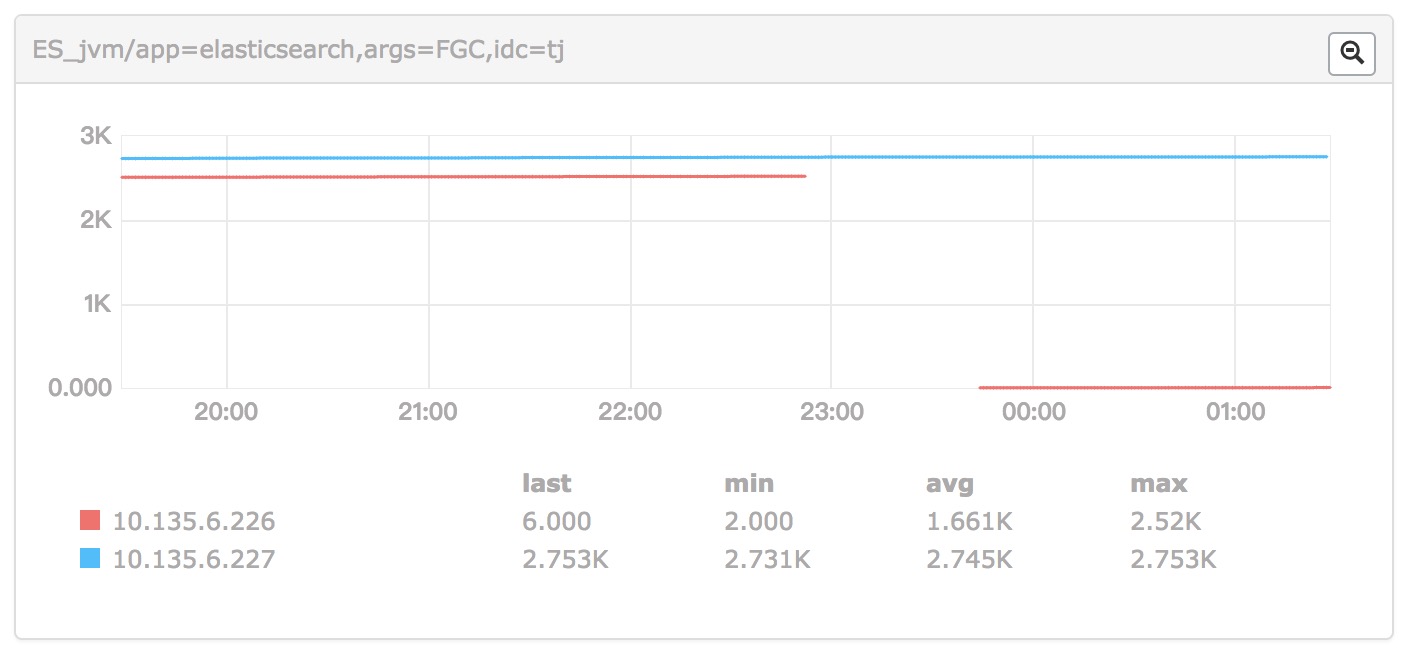

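The following charts compare the monitoring of a healthy node (10.135.6.227) and the abnormal node (10.135.6.226):

Memory:

I/O:

Load:

The kernel metrics also differ at the point of failure; otherwise there is basically no difference between the two nodes.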

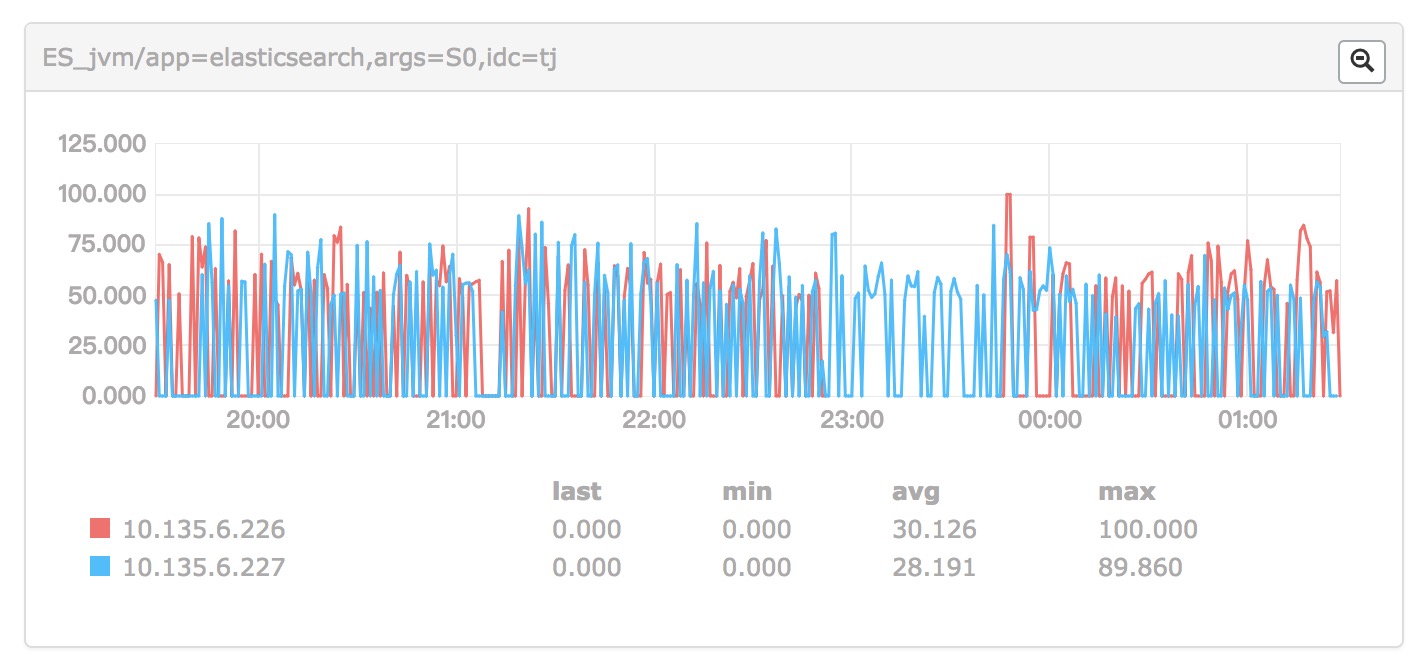

A few JVM monitoring charts as well:

Master node log:

Log from the failed node:

[2018-08-04T06:49:12,265][WARN ][o.e.m.j.JvmGcMonitorService] [10.135.6.226] [gc][young][1013831][93448] duration [1.1s], collections [1]/[7s], total [1.1s]/[1.2h], memory [22.8gb]->[9.4gb]/[29.2gb], all_pools {[young] [6.1gb]->[1.9mb]/[6.4gb]}{[survivor] [633.8mb]->[0b]/[819.1mb]}{[old] [16.1gb]->[9.4gb]/[22gb]}

[2018-08-04T06:49:12,275][INFO ][o.e.m.j.JvmGcMonitorService] [10.135.6.226] [gc][old][1013831][1217] duration [5.1s], collections [1]/[7s], total [5.1s]/[4.3m], memory [22.8gb]->[9.4gb]/[29.2gb], all_pools {[young] [6.1gb]->[1.9mb]/[6.4gb]}{[survivor] [633.8mb]->[0b]/[819.1mb]}{[old] [16.1gb]->[9.4gb]/[22gb]}

[2018-08-04T06:49:12,275][WARN ][o.e.m.j.JvmGcMonitorService] [10.135.6.226] [gc][1013831] overhead, spent [6.3s] collecting in the last [7s]
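The `overhead` line above is easier to judge as a ratio. The sketch below extracts the time spent in GC from such lines, assuming the `JvmGcMonitorService` message format shown in this log; the unit handling covers only `ms`, `s`, `m` and `h`.

```python
import re

# Matches e.g. "overhead, spent [6.3s] collecting in the last [7s]".
OVERHEAD_RE = re.compile(
    r"overhead, spent \[([\d.]+)(ms|s|m|h)\] collecting in the last \[([\d.]+)(ms|s|m|h)\]"
)
UNIT_SECONDS = {"ms": 0.001, "s": 1.0, "m": 60.0, "h": 3600.0}


def gc_overhead_ratio(line):
    """Return the fraction of the interval spent in GC, or None if no match."""
    match = OVERHEAD_RE.search(line)
    if match is None:
        return None
    spent = float(match.group(1)) * UNIT_SECONDS[match.group(2)]
    window = float(match.group(3)) * UNIT_SECONDS[match.group(4)]
    return spent / window if window > 0 else None


if __name__ == "__main__":
    line = (
        "[2018-08-04T06:49:12,275][WARN ][o.e.m.j.JvmGcMonitorService] "
        "[10.135.6.226] [gc][1013831] overhead, spent [6.3s] collecting in the last [7s]"
    )
    print(f"GC overhead: {gc_overhead_ratio(line):.0%}")
```

For the line quoted above this works out to roughly 90% of a 7-second window. Note that this GC entry is timestamped 06:49, about 16 hours before the 22:51 failure, so on its own it does not explain the node dropping out.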

[2018-08-04T22:51:04,451][ERROR][o.e.x.m.c.n.NodeStatsCollector] [10.135.6.226] collector [node_stats] timed out when collecting data

[2018-08-04T22:51:14,468][ERROR][o.e.a.b.TransportBulkAction] [10.135.6.226] failed to execute pipeline for a bulk request

org.elasticsearch.common.util.concurrent.EsRejectedExecutionException: rejected execution of org.elasticsearch.ingest.PipelineExecutionService$2@57621aaa on EsThreadPoolExecutor[name = 10.135.6.226/bulk, queue capacity = 200, org.elasticsearch.common.util.concurrent.EsThreadPoolExecutor@19accc58[Running, pool size = 32, active threads = 32, queued tasks = 305, completed tasks = 160486966]]

at org.elasticsearch.common.util.concurrent.EsAbortPolicy.rejectedExecution(EsAbortPolicy.java:48) ~[elasticsearch-6.2.2.jar:6.2.2]

at java.util.concurrent.ThreadPoolExecutor.reject(ThreadPoolExecutor.java:823) ~[?:1.8.0_66]

at java.util.concurrent.ThreadPoolExecutor.execute(ThreadPoolExecutor.java:1369) ~[?:1.8.0_66]

at org.elasticsearch.common.util.concurrent.EsThreadPoolExecutor.doExecute(EsThreadPoolExecutor.java:98) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.common.util.concurrent.EsThreadPoolExecutor.execute(EsThreadPoolExecutor.java:93) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.ingest.PipelineExecutionService.executeBulkRequest(PipelineExecutionService.java:75) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.bulk.TransportBulkAction.processBulkIndexIngestRequest(TransportBulkAction.java:496) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.bulk.TransportBulkAction.doExecute(TransportBulkAction.java:135) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.bulk.TransportBulkAction.doExecute(TransportBulkAction.java:86) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.support.TransportAction$RequestFilterChain.proceed(TransportAction.java:167) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.security.action.filter.SecurityActionFilter.apply(SecurityActionFilter.java:133) ~[?:?]

at org.elasticsearch.action.support.TransportAction$RequestFilterChain.proceed(TransportAction.java:165) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.support.TransportAction.execute(TransportAction.java:139) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.support.TransportAction.execute(TransportAction.java:81) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.client.node.NodeClient.executeLocally(NodeClient.java:83) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.client.node.NodeClient.doExecute(NodeClient.java:72) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.client.support.AbstractClient.execute(AbstractClient.java:405) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.client.support.AbstractClient.bulk(AbstractClient.java:482) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.core.ClientHelper.executeAsyncWithOrigin(ClientHelper.java:73) ~[x-pack-core-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.local.LocalBulk.doFlush(LocalBulk.java:120) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk.flush(ExportBulk.java:72) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$Compound.lambda$doFlush$1(ExportBulk.java:166) ~[?:?]

at org.elasticsearch.xpack.core.common.IteratingActionListener.run(IteratingActionListener.java:93) [x-pack-core-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$Compound.doFlush(ExportBulk.java:182) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk.flushAndClose(ExportBulk.java:96) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk.close(ExportBulk.java:86) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.Exporters.export(Exporters.java:205) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.MonitoringService$MonitoringExecution$1.doRun(MonitoringService.java:231) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37) [elasticsearch-6.2.2.jar:6.2.2]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_66]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) [?:1.8.0_66]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:573) [elasticsearch-6.2.2.jar:6.2.2]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [?:1.8.0_66]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [?:1.8.0_66]

at java.lang.Thread.run(Thread.java:745) [?:1.8.0_66]

[2018-08-04T22:51:14,473][WARN ][o.e.x.m.MonitoringService] [10.135.6.226] monitoring execution failed

org.elasticsearch.xpack.monitoring.exporter.ExportException: Exception when closing export bulk

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$1$1.<init>(ExportBulk.java:107) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$1.onFailure(ExportBulk.java:105) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$Compound$1.onResponse(ExportBulk.java:218) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$Compound$1.onResponse(ExportBulk.java:212) ~[?:?]

at org.elasticsearch.xpack.core.common.IteratingActionListener.onResponse(IteratingActionListener.java:108) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$Compound.lambda$doFlush$0(ExportBulk.java:176) ~[?:?]

at org.elasticsearch.action.ActionListener$1.onFailure(ActionListener.java:68) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.local.LocalBulk.lambda$doFlush$1(LocalBulk.java:127) ~[?:?]

at org.elasticsearch.action.ActionListener$1.onFailure(ActionListener.java:68) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.support.ContextPreservingActionListener.onFailure(ContextPreservingActionListener.java:50) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.support.TransportAction$1.onFailure(TransportAction.java:91) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.bulk.TransportBulkAction.lambda$processBulkIndexIngestRequest$4(TransportBulkAction.java:503) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.ingest.PipelineExecutionService$2.onFailure(PipelineExecutionService.java:79) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.onRejection(AbstractRunnable.java:63) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingAbstractRunnable.onRejection(ThreadContext.java:662) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.common.util.concurrent.EsThreadPoolExecutor.doExecute(EsThreadPoolExecutor.java:104) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.common.util.concurrent.EsThreadPoolExecutor.execute(EsThreadPoolExecutor.java:93) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.ingest.PipelineExecutionService.executeBulkRequest(PipelineExecutionService.java:75) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.bulk.TransportBulkAction.processBulkIndexIngestRequest(TransportBulkAction.java:496) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.bulk.TransportBulkAction.doExecute(TransportBulkAction.java:135) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.bulk.TransportBulkAction.doExecute(TransportBulkAction.java:86) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.support.TransportAction$RequestFilterChain.proceed(TransportAction.java:167) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.security.action.filter.SecurityActionFilter.apply(SecurityActionFilter.java:133) ~[?:?]

at org.elasticsearch.action.support.TransportAction$RequestFilterChain.proceed(TransportAction.java:165) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.support.TransportAction.execute(TransportAction.java:139) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.support.TransportAction.execute(TransportAction.java:81) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.client.node.NodeClient.executeLocally(NodeClient.java:83) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.client.node.NodeClient.doExecute(NodeClient.java:72) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.client.support.AbstractClient.execute(AbstractClient.java:405) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.client.support.AbstractClient.bulk(AbstractClient.java:482) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.core.ClientHelper.executeAsyncWithOrigin(ClientHelper.java:73) ~[x-pack-core-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.local.LocalBulk.doFlush(LocalBulk.java:120) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk.flush(ExportBulk.java:72) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$Compound.lambda$doFlush$1(ExportBulk.java:166) ~[?:?]

at org.elasticsearch.xpack.core.common.IteratingActionListener.run(IteratingActionListener.java:93) [x-pack-core-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$Compound.doFlush(ExportBulk.java:182) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk.flushAndClose(ExportBulk.java:96) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk.close(ExportBulk.java:86) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.Exporters.export(Exporters.java:205) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.MonitoringService$MonitoringExecution$1.doRun(MonitoringService.java:231) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37) [elasticsearch-6.2.2.jar:6.2.2]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_66]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) [?:1.8.0_66]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:573) [elasticsearch-6.2.2.jar:6.2.2]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [?:1.8.0_66]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [?:1.8.0_66]

at java.lang.Thread.run(Thread.java:745) [?:1.8.0_66]

Caused by: org.elasticsearch.xpack.monitoring.exporter.ExportException: failed to flush export bulks

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$Compound.lambda$doFlush$0(ExportBulk.java:168) ~[?:?]

... 41 more

Caused by: org.elasticsearch.xpack.monitoring.exporter.ExportException: failed to flush export bulk [default_local]

... 40 more

Caused by: org.elasticsearch.common.util.concurrent.EsRejectedExecutionException: rejected execution of org.elasticsearch.ingest.PipelineExecutionService$2@57621aaa on EsThreadPoolExecutor[name = 10.135.6.226/bulk, queue capacity = 200, org.elasticsearch.common.util.concurrent.EsThreadPoolExecutor@19accc58[Running, pool size = 32, active threads = 32, queued tasks = 305, completed tasks = 160486966]]

at org.elasticsearch.common.util.concurrent.EsAbortPolicy.rejectedExecution(EsAbortPolicy.java:48) ~[elasticsearch-6.2.2.jar:6.2.2]

at java.util.concurrent.ThreadPoolExecutor.reject(ThreadPoolExecutor.java:823) ~[?:1.8.0_66]

at java.util.concurrent.ThreadPoolExecutor.execute(ThreadPoolExecutor.java:1369) ~[?:1.8.0_66]

at org.elasticsearch.common.util.concurrent.EsThreadPoolExecutor.doExecute(EsThreadPoolExecutor.java:98) ~[elasticsearch-6.2.2.jar:6.2.2]

... 31 more

[2018-08-04T22:51:24,430][ERROR][o.e.a.b.TransportBulkAction] [10.135.6.226] failed to execute pipeline for a bulk request

org.elasticsearch.common.util.concurrent.EsRejectedExecutionException: rejected execution of org.elasticsearch.ingest.PipelineExecutionService$2@7cfc78f3 on EsThreadPoolExecutor[name = 10.135.6.226/bulk, queue capacity = 200, org.elasticsearch.common.util.concurrent.EsThreadPoolExecutor@19accc58[Running, pool size = 32, active threads = 32, queued tasks = 305, completed tasks = 160486966]]

at org.elasticsearch.common.util.concurrent.EsAbortPolicy.rejectedExecution(EsAbortPolicy.java:48) ~[elasticsearch-6.2.2.jar:6.2.2]

at java.util.concurrent.ThreadPoolExecutor.reject(ThreadPoolExecutor.java:823) ~[?:1.8.0_66]

at java.util.concurrent.ThreadPoolExecutor.execute(ThreadPoolExecutor.java:1369) ~[?:1.8.0_66]

at org.elasticsearch.common.util.concurrent.EsThreadPoolExecutor.doExecute(EsThreadPoolExecutor.java:98) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.common.util.concurrent.EsThreadPoolExecutor.execute(EsThreadPoolExecutor.java:93) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.ingest.PipelineExecutionService.executeBulkRequest(PipelineExecutionService.java:75) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.bulk.TransportBulkAction.processBulkIndexIngestRequest(TransportBulkAction.java:496) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.bulk.TransportBulkAction.doExecute(TransportBulkAction.java:135) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.bulk.TransportBulkAction.doExecute(TransportBulkAction.java:86) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.support.TransportAction$RequestFilterChain.proceed(TransportAction.java:167) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.security.action.filter.SecurityActionFilter.apply(SecurityActionFilter.java:133) ~[?:?]

at org.elasticsearch.action.support.TransportAction$RequestFilterChain.proceed(TransportAction.java:165) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.support.TransportAction.execute(TransportAction.java:139) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.support.TransportAction.execute(TransportAction.java:81) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.client.node.NodeClient.executeLocally(NodeClient.java:83) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.client.node.NodeClient.doExecute(NodeClient.java:72) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.client.support.AbstractClient.execute(AbstractClient.java:405) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.client.support.AbstractClient.bulk(AbstractClient.java:482) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.core.ClientHelper.executeAsyncWithOrigin(ClientHelper.java:73) ~[x-pack-core-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.local.LocalBulk.doFlush(LocalBulk.java:120) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk.flush(ExportBulk.java:72) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$Compound.lambda$doFlush$1(ExportBulk.java:166) ~[?:?]

at org.elasticsearch.xpack.core.common.IteratingActionListener.run(IteratingActionListener.java:93) [x-pack-core-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$Compound.doFlush(ExportBulk.java:182) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk.flushAndClose(ExportBulk.java:96) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk.close(ExportBulk.java:86) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.Exporters.export(Exporters.java:205) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.MonitoringService$MonitoringExecution$1.doRun(MonitoringService.java:231) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37) [elasticsearch-6.2.2.jar:6.2.2]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_66]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) [?:1.8.0_66]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:573) [elasticsearch-6.2.2.jar:6.2.2]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [?:1.8.0_66]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [?:1.8.0_66]

at java.lang.Thread.run(Thread.java:745) [?:1.8.0_66]

[2018-08-04T22:51:24,434][WARN ][o.e.x.m.MonitoringService] [10.135.6.226] monitoring execution failed

org.elasticsearch.xpack.monitoring.exporter.ExportException: Exception when closing export bulk

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$1$1.<init>(ExportBulk.java:107) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$1.onFailure(ExportBulk.java:105) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$Compound$1.onResponse(ExportBulk.java:218) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$Compound$1.onResponse(ExportBulk.java:212) ~[?:?]

at org.elasticsearch.xpack.core.common.IteratingActionListener.onResponse(IteratingActionListener.java:108) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$Compound.lambda$doFlush$0(ExportBulk.java:176) ~[?:?]

at org.elasticsearch.action.ActionListener$1.onFailure(ActionListener.java:68) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.local.LocalBulk.lambda$doFlush$1(LocalBulk.java:127) ~[?:?]

at org.elasticsearch.action.ActionListener$1.onFailure(ActionListener.java:68) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.support.ContextPreservingActionListener.onFailure(ContextPreservingActionListener.java:50) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.support.TransportAction$1.onFailure(TransportAction.java:91) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.bulk.TransportBulkAction.lambda$processBulkIndexIngestRequest$4(TransportBulkAction.java:503) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.ingest.PipelineExecutionService$2.onFailure(PipelineExecutionService.java:79) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.onRejection(AbstractRunnable.java:63) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingAbstractRunnable.onRejection(ThreadContext.java:662) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.common.util.concurrent.EsThreadPoolExecutor.doExecute(EsThreadPoolExecutor.java:104) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.common.util.concurrent.EsThreadPoolExecutor.execute(EsThreadPoolExecutor.java:93) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.ingest.PipelineExecutionService.executeBulkRequest(PipelineExecutionService.java:75) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.bulk.TransportBulkAction.processBulkIndexIngestRequest(TransportBulkAction.java:496) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.bulk.TransportBulkAction.doExecute(TransportBulkAction.java:135) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.bulk.TransportBulkAction.doExecute(TransportBulkAction.java:86) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.support.TransportAction$RequestFilterChain.proceed(TransportAction.java:167) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.security.action.filter.SecurityActionFilter.apply(SecurityActionFilter.java:133) ~[?:?]

at org.elasticsearch.action.support.TransportAction$RequestFilterChain.proceed(TransportAction.java:165) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.support.TransportAction.execute(TransportAction.java:139) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.action.support.TransportAction.execute(TransportAction.java:81) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.client.node.NodeClient.executeLocally(NodeClient.java:83) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.client.node.NodeClient.doExecute(NodeClient.java:72) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.client.support.AbstractClient.execute(AbstractClient.java:405) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.client.support.AbstractClient.bulk(AbstractClient.java:482) ~[elasticsearch-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.core.ClientHelper.executeAsyncWithOrigin(ClientHelper.java:73) ~[x-pack-core-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.local.LocalBulk.doFlush(LocalBulk.java:120) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk.flush(ExportBulk.java:72) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$Compound.lambda$doFlush$1(ExportBulk.java:166) ~[?:?]

at org.elasticsearch.xpack.core.common.IteratingActionListener.run(IteratingActionListener.java:93) [x-pack-core-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$Compound.doFlush(ExportBulk.java:182) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk.flushAndClose(ExportBulk.java:96) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk.close(ExportBulk.java:86) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.exporter.Exporters.export(Exporters.java:205) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.xpack.monitoring.MonitoringService$MonitoringExecution$1.doRun(MonitoringService.java:231) [x-pack-monitoring-6.2.2.jar:6.2.2]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37) [elasticsearch-6.2.2.jar:6.2.2]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_66]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) [?:1.8.0_66]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:573) [elasticsearch-6.2.2.jar:6.2.2]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [?:1.8.0_66]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [?:1.8.0_66]

at java.lang.Thread.run(Thread.java:745) [?:1.8.0_66]

Caused by: org.elasticsearch.xpack.monitoring.exporter.ExportException: failed to flush export bulks

at org.elasticsearch.xpack.monitoring.exporter.ExportBulk$Compound.lambda$doFlush$0(ExportBulk.java:168) ~[?:?]

... 41 more

Caused by: org.elasticsearch.xpack.monitoring.exporter.ExportException: failed to flush export bulk [default_local]

... 40 more

Caused by: org.elasticsearch.common.util.concurrent.EsRejectedExecutionException: rejected execution of org.elasticsearch.ingest.PipelineExecutionService$2@7cfc78f3 on EsThreadPoolExecutor[name = 10.135.6.226/bulk, queue capacity = 200, org.elasticsearch.common.util.concurrent.EsThreadPoolExecutor@19accc58[Running, pool size = 32, active threads = 32, queued tasks = 305, completed tasks = 160486966]]

at org.elasticsearch.common.util.concurrent.EsAbortPolicy.rejectedExecution(EsAbortPolicy.java:48) ~[elasticsearch-6.2.2.jar:6.2.2]

at java.util.concurrent.ThreadPoolExecutor.reject(ThreadPoolExecutor.java:823) ~[?:1.8.0_66]

at java.util.concurrent.ThreadPoolExecutor.execute(ThreadPoolExecutor.java:1369) ~[?:1.8.0_66]

at org.elasticsearch.common.util.concurrent.EsThreadPoolExecutor.doExecute(EsThreadPoolExecutor.java:98) ~[elasticsearch-6.2.2.jar:6.2.2]

... 31 more

[2018-08-04T22:51:34,430][ERROR][o.e.a.b.TransportBulkAction] [10.135.6.226] failed to execute pipeline for a bulk request
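The `EsRejectedExecutionException` messages in the failed node's log carry the bulk thread pool's state at the moment of rejection. A small sketch for pulling those numbers out follows; it assumes the message format shown in this log, and the field names in the returned dict are my own.

```python
import re

# Dict keys are illustrative names, not Elasticsearch identifiers.
FIELDS = {
    "queue_capacity": r"queue capacity = (\d+)",
    "pool_size": r"pool size = (\d+)",
    "active_threads": r"active threads = (\d+)",
    "queued_tasks": r"queued tasks = (\d+)",
    "completed_tasks": r"completed tasks = (\d+)",
}


def parse_rejection(line):
    """Extract thread pool stats from a rejection message, or None if absent."""
    if "EsRejectedExecutionException" not in line:
        return None
    stats = {}
    for key, pattern in FIELDS.items():
        match = re.search(pattern, line)
        if match is None:
            return None
        stats[key] = int(match.group(1))
    name = re.search(r"name = ([^,\]]+)", line)
    stats["name"] = name.group(1) if name else None
    return stats


def is_saturated(stats):
    """True when every thread is busy and the queue is at or over capacity."""
    return (
        stats["active_threads"] >= stats["pool_size"]
        and stats["queued_tasks"] >= stats["queue_capacity"]
    )
```

In the two rejections logged at 22:51:14 and 22:51:24, all 32 bulk threads are active, 305 tasks are queued against a capacity of 200, and `completed tasks` is 160486966 both times. A completed count that does not move over 10 seconds would be consistent with the bulk threads being stuck, possibly in the blocked fsync calls seen in the kernel log, though that link is an inference rather than something the logs prove.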