Dynamic Storage Management in k8s with GlusterFS

1. Install the GlusterFS client on the nodes (Heketi requires a GlusterFS cluster of at least three nodes)

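Remove the master taint so that Pods can also be scheduled on the master:

```shell
kubectl taint nodes --all node-role.kubernetes.io/master-
```

Then run `kubectl describe node k8s` and check that `Taints` is empty.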

Check whether kube-apiserver allows privileged containers (the GlusterFS Pods below run privileged):

```shell
ps -ef | grep kube | grep allow
```
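The `grep allow` check only shows that some argument containing "allow" is present. A minimal sketch of a stricter check, assuming the flag is passed literally as `--allow-privileged=true` on the kube-apiserver command line; `apiserver_allows_privileged` is a hypothetical helper name:

```shell
# Hypothetical helper: reads `ps -ef` output on stdin and succeeds only if a
# kube-apiserver process line contains --allow-privileged=true.
apiserver_allows_privileged() {
  grep -- 'kube-apiserver' | grep -q -- '--allow-privileged=true'
}

# Usage on the master:
#   ps -ef | apiserver_allows_privileged && echo "privileged mode allowed"
```

Matching the full `flag=value` pair avoids a false positive when the flag is present but set to `false`.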

Label each node that will run GlusterFS:

```shell
kubectl label node k8s storagenode=glusterfs
kubectl label node k8s-node1 storagenode=glusterfs
kubectl label node k8s-node2 storagenode=glusterfs
```

2. Make sure a GlusterFS management service runs on every node

cat glusterfs.yaml

```yaml
kind: DaemonSet
apiVersion: apps/v1          # extensions/v1beta1 was removed in Kubernetes 1.16
metadata:
  name: glusterfs
  labels:
    glusterfs: daemonset
  annotations:
    description: GlusterFS DaemonSet
    tags: glusterfs
spec:
  selector:                  # required by apps/v1; must match the Pod labels
    matchLabels:
      glusterfs-node: pod
  template:
    metadata:
      name: glusterfs
      labels:
        glusterfs-node: pod
    spec:
      nodeSelector:
        storagenode: glusterfs
      hostNetwork: true
      containers:
      - image: gluster/gluster-centos:latest
        name: glusterfs
        volumeMounts:
        - name: glusterfs-heketi
          mountPath: "/var/lib/heketi"
        - name: glusterfs-run
          mountPath: "/run"
        - name: glusterfs-lvm
          mountPath: "/run/lvm"
        - name: glusterfs-etc
          mountPath: "/etc/glusterfs"
        - name: glusterfs-logs
          mountPath: "/var/log/glusterfs"
        - name: glusterfs-config
          mountPath: "/var/lib/glusterd"
        - name: glusterfs-dev
          mountPath: "/dev"
        - name: glusterfs-misc
          mountPath: "/var/lib/misc/glusterfsd"
        - name: glusterfs-cgroup
          mountPath: "/sys/fs/cgroup"
          readOnly: true
        - name: glusterfs-ssl
          mountPath: "/etc/ssl"
          readOnly: true
        securityContext:
          capabilities: {}
          privileged: true
        readinessProbe:
          timeoutSeconds: 3
          initialDelaySeconds: 60
          exec:
            command:
            - "/bin/bash"
            - "-c"
            - systemctl status glusterd.service
        livenessProbe:
          timeoutSeconds: 3
          initialDelaySeconds: 60
          exec:
            command:
            - "/bin/bash"
            - "-c"
            - systemctl status glusterd.service
      volumes:
      - name: glusterfs-heketi
        hostPath:
          path: "/var/lib/heketi"
      - name: glusterfs-run
        emptyDir: {}         # no source was given; make the emptyDir explicit
      - name: glusterfs-lvm
        hostPath:
          path: "/run/lvm"
      - name: glusterfs-etc
        hostPath:
          path: "/etc/glusterfs"
      - name: glusterfs-logs
        hostPath:
          path: "/var/log/glusterfs"
      - name: glusterfs-config
        hostPath:
          path: "/var/lib/glusterd"
      - name: glusterfs-dev
        hostPath:
          path: "/dev"
      - name: glusterfs-misc
        hostPath:
          path: "/var/lib/misc/glusterfsd"
      - name: glusterfs-cgroup
        hostPath:
          path: "/sys/fs/cgroup"
      - name: glusterfs-ssl
        hostPath:
          path: "/etc/ssl"
```

Create the DaemonSet and inspect its Pods:

```shell
kubectl create -f glusterfs.yaml && kubectl describe pods <pod_name>
```
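Because Heketi needs at least three GlusterFS nodes, it helps to confirm that the DaemonSet has a ready Pod on each labeled node before moving on. A sketch, assuming the Pod label `glusterfs-node=pod` from the DaemonSet and the default `kubectl get pods --no-headers` column order (NAME, READY, STATUS, ...); the helper name is hypothetical:

```shell
# Hypothetical helper: reads `kubectl get pods --no-headers` output on stdin,
# counts Pods whose READY column is n/n (n > 0) and whose STATUS is Running,
# and succeeds only if there are at least MIN of them (default 3).
enough_ready_glusterfs_pods() {
  awk -v min="${1:-3}" '
    {
      split($2, r, "/")                  # READY column, e.g. "1/1"
      if (r[1] == r[2] && r[1] > 0 && $3 == "Running") ready++
    }
    END { exit !(ready + 0 >= min + 0) }
  '
}

# Usage:
#   kubectl get pods -l glusterfs-node=pod --no-headers | enough_ready_glusterfs_pods 3
```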

2. Create the Heketi service

Create a ServiceAccount:

cat heketi-service.yaml

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: heketi-service-account
```

```shell
kubectl create -f heketi-service.yaml
```

Deploy Heketi:

cat heketi-svc.yaml

```yaml
---
kind: Deployment
apiVersion: apps/v1          # extensions/v1beta1 was removed in Kubernetes 1.16
metadata:
  name: deploy-heketi
  labels:
    glusterfs: heketi-deployment
    deploy-heketi: heketi-deployment
  annotations:
    description: Defines how to deploy Heketi
spec:
  replicas: 1
  selector:                  # required by apps/v1; must match the Pod labels
    matchLabels:
      name: deploy-heketi
  template:
    metadata:
      name: deploy-heketi
      labels:
        glusterfs: heketi-pod
        name: deploy-heketi
    spec:
      serviceAccountName: heketi-service-account
      containers:
      - image: heketi/heketi
        imagePullPolicy: IfNotPresent
        name: deploy-heketi
        env:
        - name: HEKETI_EXECUTOR
          value: kubernetes
        - name: HEKETI_FSTAB
          value: "/var/lib/heketi/fstab"
        - name: HEKETI_SNAPSHOT_LIMIT
          value: '14'
        - name: HEKETI_KUBE_GLUSTER_DAEMONSET
          value: "y"
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: db
          mountPath: "/var/lib/heketi"
        readinessProbe:
          timeoutSeconds: 3
          initialDelaySeconds: 3
          httpGet:
            path: "/hello"
            port: 8080
        livenessProbe:
          timeoutSeconds: 3
          initialDelaySeconds: 30
          httpGet:
            path: "/hello"
            port: 8080
      volumes:
      - name: db
        hostPath:
          path: "/heketi-data"
---
kind: Service
apiVersion: v1
metadata:
  name: deploy-heketi
  labels:
    glusterfs: heketi-service
    deploy-heketi: support
  annotations:
    description: Exposes Heketi Service
spec:
  selector:
    name: deploy-heketi
  ports:
  - name: deploy-heketi
    port: 8080
    targetPort: 8080
```

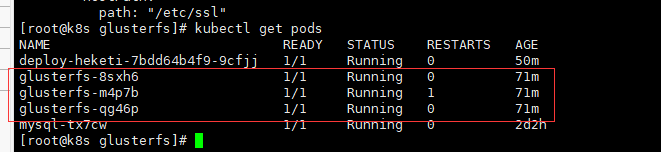

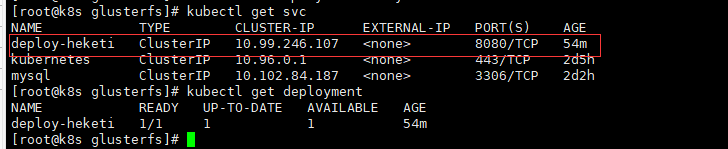

```shell
kubectl create -f heketi-svc.yaml && kubectl get svc && kubectl get deployment
```

Run `kubectl describe pod deploy-heketi` to see which node the Heketi Pod is running on.

3. Install Heketi

```shell
yum install -y centos-release-gluster
yum install -y heketi heketi-client
```
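The topology.json file lists, for each node, its management hostname, storage IP and raw devices. For larger clusters it can be generated instead of written by hand. A minimal sketch; `gen_topology` and its `hostname:ip:device` argument format are hypothetical, every node is placed in zone 1 as in this article, and each node gets exactly one device:

```shell
# Hypothetical helper: prints a Heketi topology.json for a single cluster.
# Each argument is "manage-hostname:storage-ip:device",
# e.g. k8s:192.168.66.86:/dev/vdb. Refuses fewer than three nodes.
gen_topology() {
  if [ "$#" -lt 3 ]; then
    echo "gen_topology: need at least 3 nodes, got $#" >&2
    return 1
  fi
  printf '{\n  "clusters": [\n    {\n      "nodes": [\n'
  i=0
  for spec in "$@"; do
    i=$((i + 1))
    manage=${spec%%:*}       # text before the first ':'
    rest=${spec#*:}
    storage=${rest%%:*}      # text between the first and second ':'
    device=${rest#*:}        # everything after the second ':'
    sep=","
    if [ "$i" -eq "$#" ]; then
      sep=""                 # no trailing comma after the last node
    fi
    printf '        {\n'
    printf '          "node": {\n'
    printf '            "hostnames": {\n'
    printf '              "manage": ["%s"],\n' "$manage"
    printf '              "storage": ["%s"]\n' "$storage"
    printf '            },\n'
    printf '            "zone": 1\n'
    printf '          },\n'
    printf '          "devices": ["%s"]\n' "$device"
    printf '        }%s\n' "$sep"
  done
  printf '      ]\n    }\n  ]\n}\n'
}
```

For the three nodes used here: `gen_topology k8s:192.168.66.86:/dev/vdb k8s-node1:192.168.66.87:/dev/vdb k8s-node2:192.168.66.84:/dev/vdb > topology.json`.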

cat topology.json

{

"clusters": [

{

"nodes": [

{

"node": {

"hostnames": {

"manage": [

"k8s"

],

"storage": [

"192.168.66.86"

]

},

"zone": 1

},

"devices": [

"/dev/vdb"

]

},

{

"node": {

"hostnames": {

"manage": [

"k8s-node1"

],

"storage": [

"192.168.66.87"

]

},

"zone": 1

},

"devices": [

"/dev/vdb"

]

},

{

"node": {

"hostnames": {

"manage": [

"k8s-node2"

],

"storage": [

"192.168.66.84"

]

},

"zone": 1

},

"devices": [

"/dev/vdb"

]

}

]

}

]

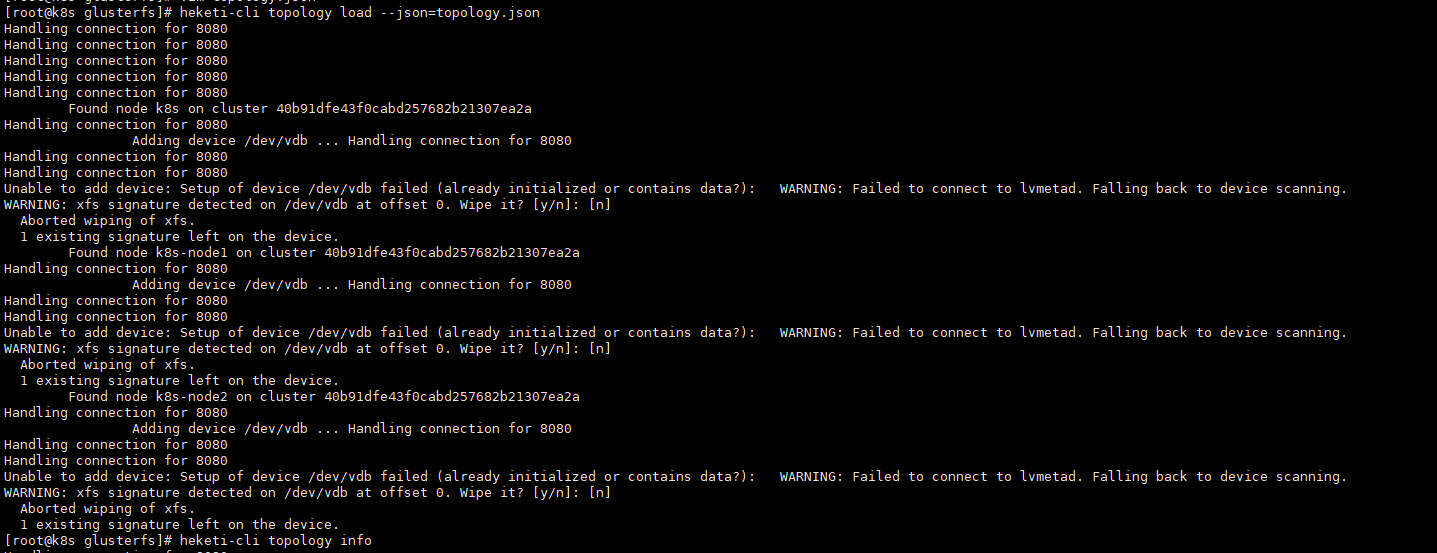

} HEKETI_BOOTSTRAP_POD=$(kubectl get pods | grep deploy-heketi | awk '{print $1}')

kubectl port-forward $HEKETI_BOOTSTRAP_POD 8080:8080 &后台启动

export HEKETI_CLI_SERVER=http://localhost:8080

heketi-cli topology load --json=topology.json

heketi-cli topology info
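The `grep deploy-heketi | awk '{print $1}'` pipeline returns every Pod whose name merely contains `deploy-heketi`, so it can yield several names (for example an old Pod that is still terminating). A stricter sketch; `first_running_pod` is a hypothetical helper that prints only the first Running Pod whose name starts with a given prefix:

```shell
# Hypothetical helper: reads `kubectl get pods --no-headers` output on stdin
# and prints the first Running Pod whose name starts with the prefix
# (default "deploy-heketi-"). Returns non-zero if nothing matches.
first_running_pod() {
  awk -v p="${1:-deploy-heketi-}" '
    index($1, p) == 1 && $3 == "Running" { print $1; found = 1; exit }
    END { exit !found }
  '
}

# Usage:
#   HEKETI_BOOTSTRAP_POD=$(kubectl get pods --no-headers | first_running_pod)
```

Since the Deployment labels its Pod with `name: deploy-heketi`, selecting by label is another option: `kubectl get pods -l name=deploy-heketi -o jsonpath='{.items[0].metadata.name}'`.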

4. Troubleshooting

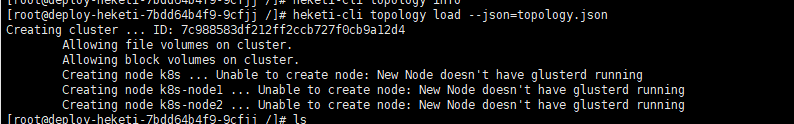

4.1: Running `heketi-cli topology load --json=topology.json` fails with:

```text
Creating cluster ... ID: 76576f2209ccd75a0ab1e44fc38fd393
Allowing file volumes on cluster.
Allowing block volumes on cluster.
Creating node k8s ... Unable to create node: New Node doesn't have glusterd running
Creating node k8s-node1 ... Unable to create node: New Node doesn't have glusterd running
Creating node k8s-node2 ... Unable to create node: New Node doesn't have glusterd running
```

解决:kubectl create clusterrole fao --verb=get,list,watch,create --resource=pods,pods/status,pods/exec如还报错

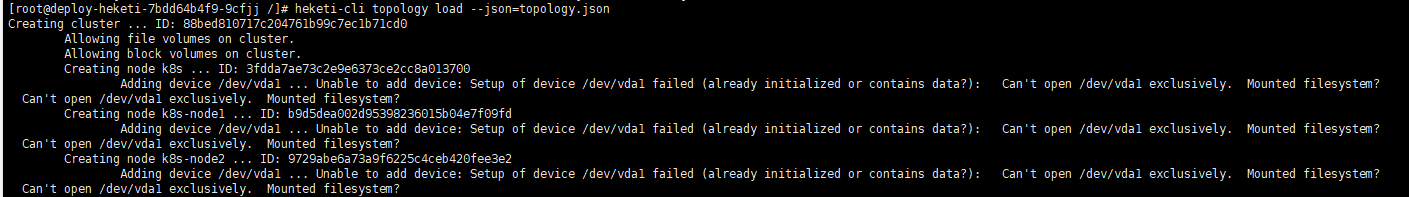

kubectl create clusterrolebinding heketi-gluster-admin --clusterrole=edit --serviceaccount=default:heketi-service-account 4.2:执行heketi-cli topology load --json=topology.json报错如下

```text
Found node k8s on cluster 88bed810717c204761b99c7ec1b71cd0
Adding device /dev/vdb ... Unable to add device: Setup of device /dev/vdb failed (already initialized or contains data?): Can't open /dev/vdb exclusively. Mounted filesystem?
Can't open /dev/vdb exclusively. Mounted filesystem?
Found node k8s-node1 on cluster 88bed810717c204761b99c7ec1b71cd0
Adding device /dev/vdb ... Unable to add device: Setup of device /dev/vdb failed (already initialized or contains data?): Can't open /dev/vdb exclusively. Mounted filesystem?
Can't open /dev/vdb exclusively. Mounted filesystem?
Found node k8s-node2 on cluster 88bed810717c204761b99c7ec1b71cd0
Adding device /dev/vdb ... Unable to add device: Setup of device /dev/vdb failed (already initialized or contains data?): Can't open /dev/vdb exclusively. Mounted filesystem?
Can't open /dev/vdb exclusively. Mounted filesystem?
```

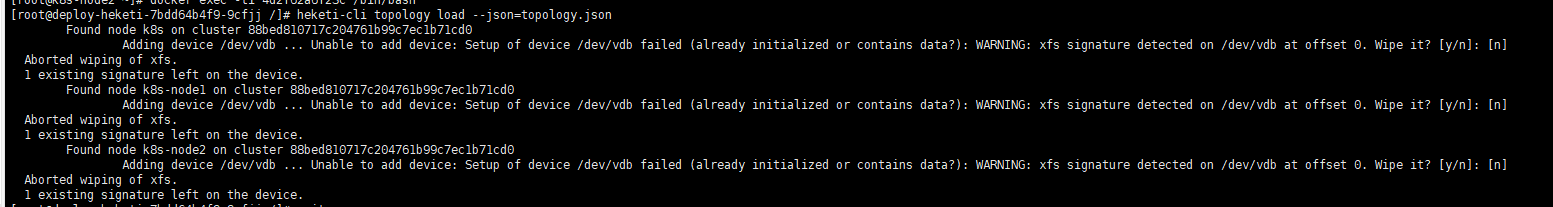

Fix: format the disk on each node (`-f` forces the operation):

```shell
mkfs.xfs -f /dev/vdb
```

4.3: Running `heketi-cli topology load --json=topology.json` then fails with:

```text
Found node k8s on cluster 88bed810717c204761b99c7ec1b71cd0
Adding device /dev/vdb ... Unable to add device: Setup of device /dev/vdb failed (already initialized or contains data?): WARNING: xfs signature detected on /dev/vdb at offset 0. Wipe it? [y/n]: [n]
Aborted wiping of xfs.
1 existing signature left on the device.
Found node k8s-node1 on cluster 88bed810717c204761b99c7ec1b71cd0
Adding device /dev/vdb ... Unable to add device: Setup of device /dev/vdb failed (already initialized or contains data?): WARNING: xfs signature detected on /dev/vdb at offset 0. Wipe it? [y/n]: [n]
Aborted wiping of xfs.
1 existing signature left on the device.
Found node k8s-node2 on cluster 88bed810717c204761b99c7ec1b71cd0
Adding device /dev/vdb ... Unable to add device: Setup of device /dev/vdb failed (already initialized or contains data?): WARNING: xfs signature detected on /dev/vdb at offset 0. Wipe it? [y/n]: [n]
Aborted wiping of xfs.
1 existing signature left on the device
```

Fix: inside the glusterfs container on each node, run:

```shell
pvcreate -ff --metadatasize=128M --dataalignment=256K /dev/vdb
```
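The errors in 4.2 and 4.3 come from a device that is either in use or carries an existing filesystem signature. A commonly used alternative to `mkfs.xfs` followed by `pvcreate -ff` (this is not what was run above) is to make sure the disk is not mounted and then clear all signatures with `wipefs -a` from util-linux. A minimal sketch; both helper names are hypothetical, the mount check only looks at `/proc/mounts` (it will not see LVM or other holders), and `wipefs -a` irreversibly destroys what is on the device:

```shell
# Hypothetical helper: succeeds if DEVICE, or any partition whose name starts
# with it (e.g. /dev/vdb1), is a mount source in the mounts file
# (default /proc/mounts). Prefix matching errs on the side of refusing.
device_mounted() {
  awk -v d="$1" 'index($1, d) == 1 { found = 1 } END { exit !found }' "${2:-/proc/mounts}"
}

# Hypothetical helper: removes all filesystem/LVM signatures from DEVICE,
# but only if it is not mounted. DESTRUCTIVE: all data on DEVICE is lost.
wipe_unmounted_device() {
  if device_mounted "$1"; then
    echo "$1 (or a partition of it) is mounted; unmount it first" >&2
    return 1
  fi
  wipefs -a "$1"
}

# Usage on each storage node, before heketi-cli topology load:
#   wipe_unmounted_device /dev/vdb
```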

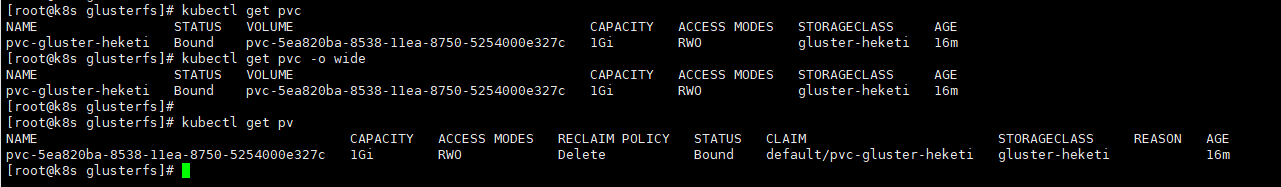

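5. Define a StorageClass

Use `netstat -anp | grep 8080` to find the address to use for `resturl`. `resturl` must be an address at which the Kubernetes control plane can reach the Heketi service; the in-tree `kubernetes.io/glusterfs` provisioner runs inside kube-controller-manager, so that process must be able to connect to it.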
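`http://127.0.0.1:8080` only works while the `kubectl port-forward` started earlier keeps running on the same host as the component that calls Heketi. A sketch of an alternative, assuming the `deploy-heketi` Service defined earlier and that its ClusterIP is reachable from the control-plane host (this depends on the cluster's network setup); `render_heketi_sc` is a hypothetical helper that prints the same StorageClass with a given `resturl`:

```shell
# Hypothetical helper: prints the gluster-heketi StorageClass with resturl
# set to the first argument, e.g. http://10.96.12.34:8080.
render_heketi_sc() {
  cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gluster-heketi
provisioner: kubernetes.io/glusterfs
parameters:
  resturl: "$1"
  restauthenabled: "false"
EOF
}

# Usage: read the Service's ClusterIP and apply the rendered manifest.
#   ip=$(kubectl get svc deploy-heketi -o jsonpath='{.spec.clusterIP}')
#   render_heketi_sc "http://$ip:8080" | kubectl apply -f -
```

A Service address survives Heketi Pod restarts, whereas a port forward dies with the `kubectl` process that created it.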

cat storageclass-gluster-heketi.yaml

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gluster-heketi
provisioner: kubernetes.io/glusterfs   # must be set to kubernetes.io/glusterfs
parameters:
  resturl: "http://127.0.0.1:8080"
  restauthenabled: "false"
```

```shell
kubectl create -f storageclass-gluster-heketi.yaml
```

6. Define a PVC

cat storageclass.yaml

```yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: pvc-gluster-heketi
spec:
  storageClassName: gluster-heketi
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```

```shell
kubectl create -f storageclass.yaml
kubectl get pvc
```

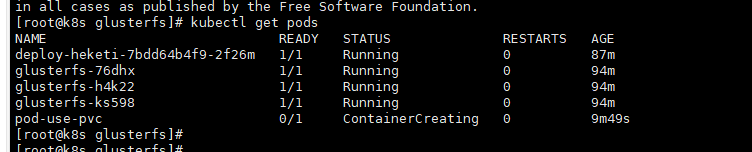
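Provisioning happens asynchronously, so the claim may show `Pending` for a while before Heketi has created the volume. A small sketch for waiting on it, assuming the default `kubectl get pvc --no-headers` column order (NAME, STATUS, ...); `pvc_bound` is a hypothetical helper:

```shell
# Hypothetical helper: reads `kubectl get pvc --no-headers` output on stdin
# and succeeds if the claim named $1 has STATUS "Bound" (second column).
pvc_bound() {
  awk -v n="$1" '$1 == n && $2 == "Bound" { ok = 1 } END { exit !ok }'
}

# Usage: poll until the dynamically provisioned volume is bound.
#   until kubectl get pvc --no-headers | pvc_bound pvc-gluster-heketi; do sleep 5; done
```

`kubectl get pvc pvc-gluster-heketi -o jsonpath='{.status.phase}'` returns the same phase without relying on column positions.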

7. Use the PVC in a Pod

cat pod-pvc.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-use-pvc
spec:
  containers:
  - name: pod-pvc
    image: busybox
    command:
    - sleep
    - "3600"
    volumeMounts:
    - name: gluster-volume
      mountPath: "/mnt"
      readOnly: false
  volumes:
  - name: gluster-volume
    persistentVolumeClaim:
      claimName: pvc-gluster-heketi
```

```shell
kubectl create -f pod-pvc.yaml
```

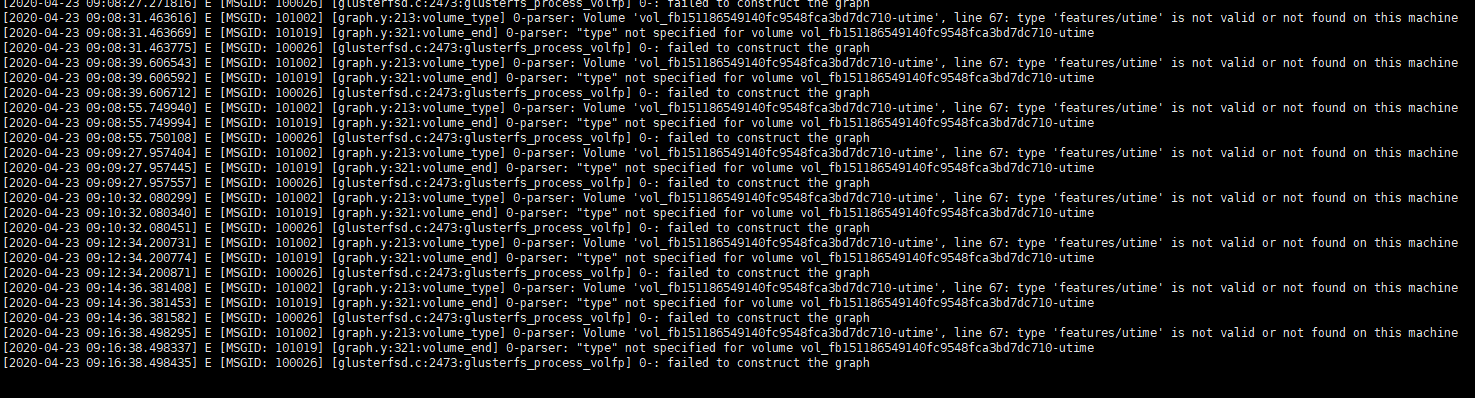

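The Pod fails with:

```text
Warning  FailedMount  9m34s  kubelet, k8s-node2  ****: mount failed: mount failed: exit status 1
```

Check the mount log on k8s-node2:

```shell
tail -100f /var/lib/kubelet/plugins/kubernetes.io/glusterfs/pvc-5ea820ba-8538-11ea-8750-5254000e327c/pod-use-pvc-glusterfs.log
```

```text
line 67: type 'features/utime' is not valid or not found on this machine
```

Fix: the clock on the node differed from the clocks in the glusterfs and k8s_glusterfs containers, and the GlusterFS versions did not match either. After installing ntp and synchronizing against ntp1.aliyun.com, the glusterfs container's clock was in sync. Next, upgrade GlusterFS on the node: before the upgrade the node had 3.12.x, while the container had 7.1. This matches the error above: the older client on the node does not recognize the `features/utime` translator used by the newer server.

```shell
yum install centos-release-gluster -y
yum install glusterfs-client -y
```

After the upgrade the node's version is 7.5.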

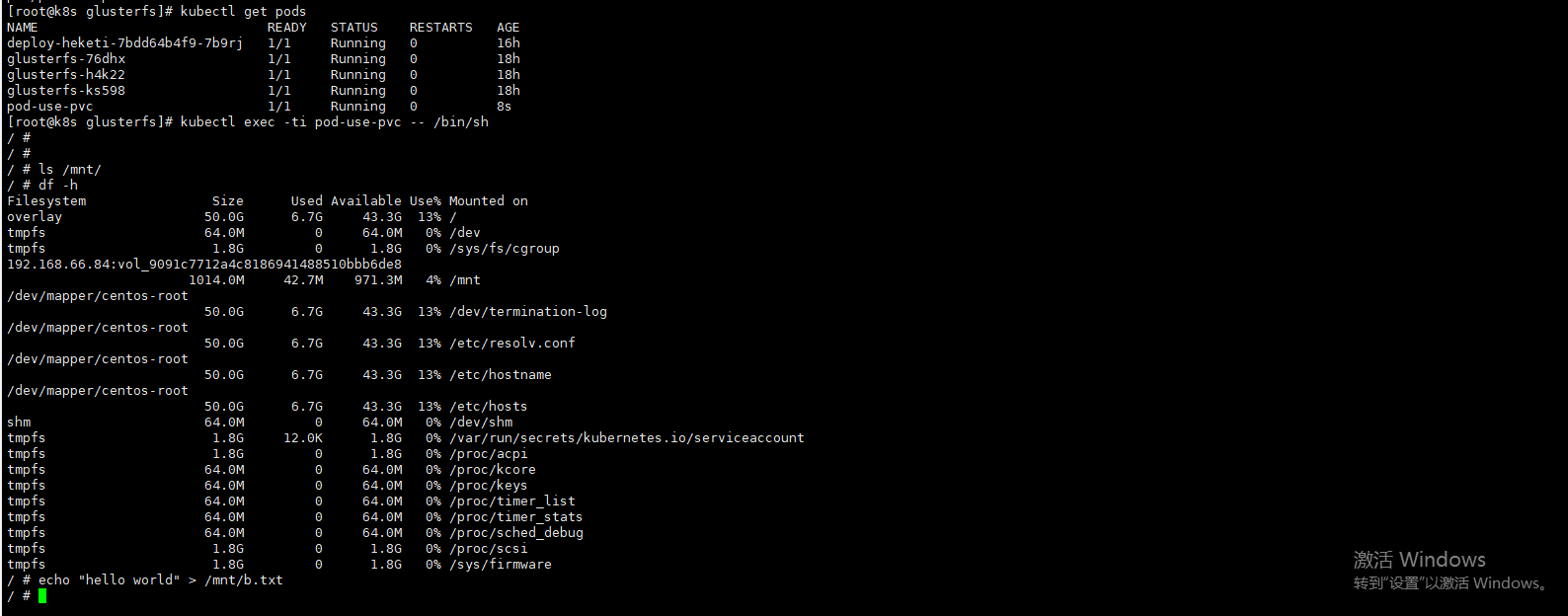
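Since the mount failure came from a client/server version gap, a quick comparison of the node's client with the server in a GlusterFS Pod can catch it early. A sketch, assuming the first line of `glusterfs --version` looks like `glusterfs 7.5`; both helpers are hypothetical and compare only the major version:

```shell
# Hypothetical helper: extracts the major version from the first line of
# `glusterfs --version` output, e.g. "glusterfs 7.5" -> 7.
gluster_major() {
  printf '%s\n' "$1" | awk 'NR == 1 { split($2, v, "."); print v[1] }'
}

# Hypothetical helper: succeeds if both version strings share a major version.
gluster_majors_match() {
  [ "$(gluster_major "$1")" = "$(gluster_major "$2")" ]
}

# Usage (node client vs. one GlusterFS Pod):
#   node_v=$(glusterfs --version | head -n 1)
#   pod_v=$(kubectl exec glusterfs-h4k22 -- glusterfs --version | head -n 1)
#   gluster_majors_match "$node_v" "$pod_v" || echo "client/server version mismatch"
```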

8. Create a file to verify that it works

On the k8s master:

```shell
kubectl exec -ti pod-use-pvc -- /bin/sh
# inside the Pod:
echo "hello world" > /mnt/b.txt
df -h    # shows which GlusterFS node the volume is mounted from
```

On that node, enter the glusterfs container and look for the file:

```shell
docker exec -ti 89f927aa2110 /bin/bash
# inside the container:
find / -name b.txt
cat /var/lib/heketi/mounts/vg_22e127efbdefc1bbb315ab0fcf90e779/brick_97de1365f98b19ee3b93ce8ecb588366/brick/b.txt
```

Alternatively, from the k8s master, enter the corresponding GlusterFS node's Pod:

```shell
kubectl exec -ti glusterfs-h4k22 -- /bin/sh
# inside the Pod:
find / -name b.txt
cat /var/lib/heketi/mounts/vg_22e127efbdefc1bbb315ab0fcf90e779/brick_97de1365f98b19ee3b93ce8ecb588366/brick/b.txt
```
