Kubernetes Rook

Rook

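Rook is an open-source cloud-native storage orchestrator. It provides the platform, framework, and support that let a range of storage solutions integrate natively with cloud-native environments.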

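Rook turns storage software into self-managing, self-scaling, and self-healing storage services. It does this by automating deployment, bootstrapping, configuration, provisioning, scaling, upgrading, migration, disaster recovery, monitoring, and resource management.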

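Rook implements these functions with the facilities provided by the underlying cloud-native container management, scheduling, and orchestration platform.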

At the time of writing, Rook supports Ceph, NFS, Minio Object Store, and CockroachDB.

Setting up Rook for Ceph

Step 1: Create the Rook Operator

kubectl apply -f operator.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: rook-ceph-system
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: clusters.ceph.rook.io
spec:
  group: ceph.rook.io
  names:
    kind: Cluster
    listKind: ClusterList
    plural: clusters
    singular: cluster
    shortNames:
    - rcc
  scope: Namespaced
  version: v1beta1
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: filesystems.ceph.rook.io
spec:
  group: ceph.rook.io
  names:
    kind: Filesystem
    listKind: FilesystemList
    plural: filesystems
    singular: filesystem
    shortNames:
    - rcfs
  scope: Namespaced
  version: v1beta1
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: objectstores.ceph.rook.io
spec:
  group: ceph.rook.io
  names:
    kind: ObjectStore
    listKind: ObjectStoreList
    plural: objectstores
    singular: objectstore
    shortNames:
    - rco
  scope: Namespaced
  version: v1beta1
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: pools.ceph.rook.io
spec:
  group: ceph.rook.io
  names:
    kind: Pool
    listKind: PoolList
    plural: pools
    singular: pool
    shortNames:
    - rcp
  scope: Namespaced
  version: v1beta1
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: volumes.rook.io
spec:
  group: rook.io
  names:
    kind: Volume
    listKind: VolumeList
    plural: volumes
    singular: volume
    shortNames:
    - rv
  scope: Namespaced
  version: v1alpha2
---

# The cluster role for managing all the cluster-specific resources in a namespace
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: rook-ceph-cluster-mgmt
  labels:
    operator: rook
    storage-backend: ceph
rules:
- apiGroups:
  - ""
  resources:
  - secrets
  - pods
  - services
  - configmaps
  verbs:
  - get
  - list
  - watch
  - patch
  - create
  - update
  - delete
- apiGroups:
  - extensions
  resources:
  - deployments
  - daemonsets
  - replicasets
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - delete
---
# The role for the operator to manage resources in the system namespace
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: rook-ceph-system
  namespace: rook-ceph-system
  labels:
    operator: rook
    storage-backend: ceph
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - configmaps
  verbs:
  - get
  - list
  - watch
  - patch
  - create
  - update
  - delete
- apiGroups:
  - extensions
  resources:
  - daemonsets
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - delete
---
# The cluster role for managing the Rook CRDs
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: rook-ceph-global
  labels:
    operator: rook
    storage-backend: ceph
rules:
- apiGroups:
  - ""
  resources:
  # Pod access is needed for fencing
  - pods
  # Node access is needed for determining nodes where mons should run
  - nodes
  - nodes/proxy
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  # PVs and PVCs are managed by the Rook provisioner
  - persistentvolumes
  - persistentvolumeclaims
  verbs:
  - get
  - list
  - watch
  - patch
  - create
  - update
  - delete
- apiGroups:
  - storage.k8s.io
  resources:
  - storageclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - batch
  resources:
  - jobs
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - delete
- apiGroups:
  - ceph.rook.io
  resources:
  - "*"
  verbs:
  - "*"
- apiGroups:
  - rook.io
  resources:
  - "*"
  verbs:
  - "*"
---
# The rook system service account used by the operator, agent, and discovery pods
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rook-ceph-system
  namespace: rook-ceph-system
  labels:
    operator: rook
    storage-backend: ceph
---
# Grant the operator, agent, and discovery agents access to resources in the rook-ceph-system namespace
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: rook-ceph-system
  namespace: rook-ceph-system
  labels:
    operator: rook
    storage-backend: ceph
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rook-ceph-system
subjects:
- kind: ServiceAccount
  name: rook-ceph-system
  namespace: rook-ceph-system
---
# Grant the rook system daemons cluster-wide access to manage the Rook CRDs, PVCs, and storage classes
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: rook-ceph-global
  namespace: rook-ceph-system
  labels:
    operator: rook
    storage-backend: ceph
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: rook-ceph-global
subjects:
- kind: ServiceAccount
  name: rook-ceph-system
  namespace: rook-ceph-system
---

# The deployment for the rook operator
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: rook-ceph-operator
  namespace: rook-ceph-system
  labels:
    operator: rook
    storage-backend: ceph
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: rook-ceph-operator
    spec:
      serviceAccountName: rook-ceph-system
      containers:
      - name: rook-ceph-operator
        # "master" is a moving tag; these manifests use the v0.8-era ceph.rook.io/v1beta1 API,
        # so pin a matching 0.8.x release image for reproducible installs
        image: rook/ceph:master
        args: ["ceph", "operator"]
        volumeMounts:
        - mountPath: /var/lib/rook
          name: rook-config
        - mountPath: /etc/ceph
          name: default-config-dir
        env:
        # To disable RBAC, uncomment the following:
        # - name: RBAC_ENABLED
        #   value: "false"
        # Rook Agent toleration. Will tolerate all taints with all keys.
        # Choose between NoSchedule, PreferNoSchedule and NoExecute:
        # - name: AGENT_TOLERATION
        #   value: "NoSchedule"
        # (Optional) Rook Agent toleration key. Set this to the key of the taint you want to tolerate
        # - name: AGENT_TOLERATION_KEY
        #   value: "<KeyOfTheTaintToTolerate>"
        # Set the path where the Rook agent can find the flex volumes
        # - name: FLEXVOLUME_DIR_PATH
        #   value: "<PathToFlexVolumes>"
        # Rook Discover toleration. Will tolerate all taints with all keys.
        # Choose between NoSchedule, PreferNoSchedule and NoExecute:
        # - name: DISCOVER_TOLERATION
        #   value: "NoSchedule"
        # (Optional) Rook Discover toleration key. Set this to the key of the taint you want to tolerate
        # - name: DISCOVER_TOLERATION_KEY
        #   value: "<KeyOfTheTaintToTolerate>"
        # Allow rook to create multiple file systems. Note: This is considered
        # an experimental feature in Ceph as described at
        # http://docs.ceph.com/docs/master/cephfs/experimental-features/#multiple-filesystems-within-a-ceph-cluster
        # which might cause mons to crash as seen in https://github.com/rook/rook/issues/1027
        - name: ROOK_ALLOW_MULTIPLE_FILESYSTEMS
          value: "false"
        # The logging level for the operator: INFO | DEBUG
        - name: ROOK_LOG_LEVEL
          value: "INFO"
        # The interval to check if every mon is in the quorum.
        - name: ROOK_MON_HEALTHCHECK_INTERVAL
          value: "45s"
        # The duration to wait before trying to failover or remove/replace the
        # current mon with a new mon (useful for compensating flapping network).
        - name: ROOK_MON_OUT_TIMEOUT
          value: "300s"
        # Whether to start pods as privileged that mount a host path, which includes the Ceph mon and osd pods.
        # This is necessary to workaround the anyuid issues when running on OpenShift.
        # For more details see https://github.com/rook/rook/issues/1314#issuecomment-355799641
        - name: ROOK_HOSTPATH_REQUIRES_PRIVILEGED
          value: "false"
        # The name of the node to pass with the downward API
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        # The pod name to pass with the downward API
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        # The pod namespace to pass with the downward API
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
      volumes:
      - name: rook-config
        emptyDir: {}
      - name: default-config-dir
        emptyDir: {}

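The optional settings in operator.yaml are switched on by uncommenting the corresponding env entries. A minimal sketch, assuming you want debug logging and want the Rook agent and discovery pods to tolerate NoSchedule taints (for example on tainted master nodes); these values are illustrative choices, not part of the original setup. Only the changed entries are shown, and the list has to be indented to match the rook-ceph-operator container.

```yaml
# Fragment of the rook-ceph-operator container's env list (indent to match operator.yaml).
# Only changed/uncommented entries are shown; keep every other entry as it is.
env:
# Verbose operator logging (INFO | DEBUG)
- name: ROOK_LOG_LEVEL
  value: "DEBUG"
# Agent pods tolerate all NoSchedule taints, whatever their key (per the operator.yaml comments)
- name: AGENT_TOLERATION
  value: "NoSchedule"
# Discover pods tolerate all NoSchedule taints, whatever their key
- name: DISCOVER_TOLERATION
  value: "NoSchedule"
```

After editing, re-run kubectl apply -f operator.yaml so the Deployment rolls out a new operator pod with the updated environment.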

Step 2: Create the Rook Cluster

kubectl apply -f cluster.yaml

#################################################################################
# This example first defines some necessary namespace and RBAC security objects.
# The actual Ceph Cluster CRD example can be found at the bottom of this example.
#################################################################################
apiVersion: v1
kind: Namespace
metadata:
  name: rook-ceph
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rook-ceph-cluster
  namespace: rook-ceph
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: rook-ceph-cluster
  namespace: rook-ceph
rules:
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: [ "get", "list", "watch", "create", "update", "delete" ]
---
# Allow the operator to create resources in this cluster's namespace
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: rook-ceph-cluster-mgmt
  namespace: rook-ceph
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: rook-ceph-cluster-mgmt
subjects:
- kind: ServiceAccount
  name: rook-ceph-system
  namespace: rook-ceph-system
---
# Allow the pods in this namespace to work with configmaps
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: rook-ceph-cluster
  namespace: rook-ceph
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rook-ceph-cluster
subjects:
- kind: ServiceAccount
  name: rook-ceph-cluster
  namespace: rook-ceph
---
#################################################################################
# The Ceph Cluster CRD example
#################################################################################
apiVersion: ceph.rook.io/v1beta1
kind: Cluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  dataDirHostPath: /var/lib/rook
  dashboard:
    enabled: true
  storage:
    useAllNodes: true
    useAllDevices: false
    config:
      databaseSizeMB: ""
      journalSizeMB: ""

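The Cluster resource above lets Rook use every node (useAllNodes: true) without taking over all raw devices (useAllDevices: false). To restrict Ceph to particular nodes and disks, the v0.8-era Cluster spec accepts a nodes list once useAllNodes is false. A sketch under assumptions: the node names node01/node02, the disk sdb, and the directory path are hypothetical, each node name must match that node's kubernetes.io/hostname label, and the field names should be checked against the Rook release you actually run.

```yaml
apiVersion: ceph.rook.io/v1beta1
kind: Cluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  dataDirHostPath: /var/lib/rook
  dashboard:
    enabled: true
  storage:
    # Only the nodes listed below are used for storage
    useAllNodes: false
    useAllDevices: false
    nodes:
    # Hypothetical node: hand Ceph a whole, empty disk
    - name: "node01"
      devices:
      - name: "sdb"
    # Hypothetical node: keep OSD data in a host directory instead
    - name: "node02"
      directories:
      - path: "/rook/storage-dir"
```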

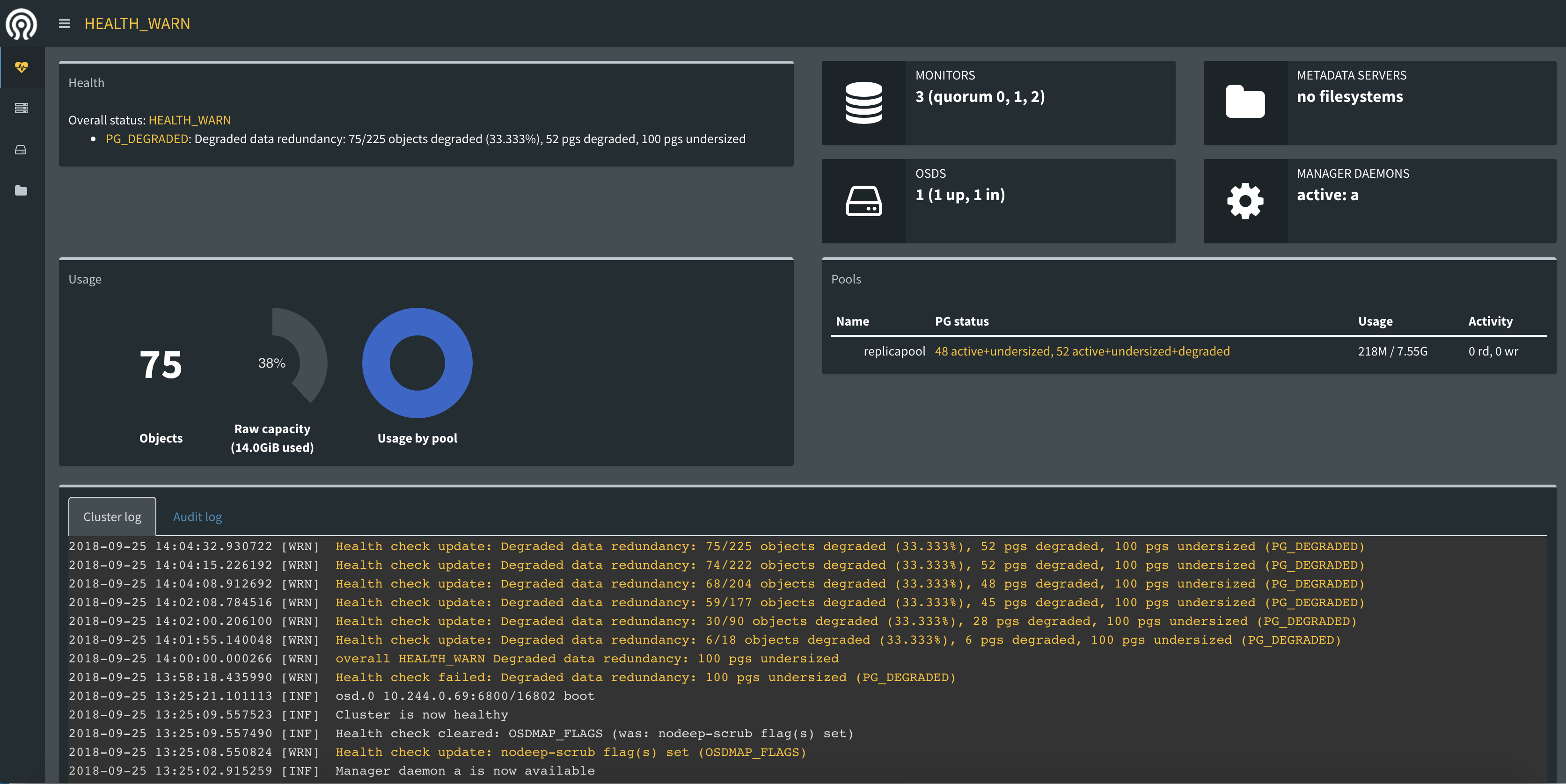

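Step 3: Enable the Ceph Dashboard

1. Enable the dashboard in cluster.yaml (the example above already sets this):

spec:
  dashboard:
    enabled: true

2. Change the type of the dashboard Service to NodePort (in the editor, change "type: ClusterIP" to "type: NodePort"):

kubectl edit svc --namespace=rook-ceph rook-ceph-mgr-dashboard

3. Access the Ceph Dashboard:

[root@node01 rook]# kubectl get svc --namespace=rook-ceph
NAME                      TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
rook-ceph-mgr             ClusterIP   10.108.75.252    <none>        9283/TCP         12d
rook-ceph-mgr-dashboard   NodePort    10.102.61.3      <none>        7000:32400/TCP   12d
rook-ceph-mon-a           ClusterIP   10.111.7.51      <none>        6790/TCP         12d
rook-ceph-mon-b           ClusterIP   10.102.239.101   <none>        6790/TCP         12d
rook-ceph-mon-c           ClusterIP   10.105.212.119   <none>        6790/TCP         12d

Find the port exposed by rook-ceph-mgr-dashboard. In this output the dashboard's port 7000 is mapped to NodePort 32400, so the dashboard is reachable at http://<node IP>:32400.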
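Instead of editing the generated Service by hand, you can create a separate NodePort Service that points at the mgr pods. A sketch, assuming the mgr pods carry the labels app: rook-ceph-mgr and rook_cluster: rook-ceph (confirm with kubectl -n rook-ceph get svc rook-ceph-mgr-dashboard -o yaml and copy its selector); the Service name is hypothetical.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: rook-ceph-mgr-dashboard-external   # hypothetical name
  namespace: rook-ceph
spec:
  type: NodePort
  # Assumed selector: copy it from the existing rook-ceph-mgr-dashboard Service
  selector:
    app: rook-ceph-mgr
    rook_cluster: rook-ceph
  ports:
  - name: dashboard
    port: 7000          # dashboard port shown in the kubectl get svc output
    targetPort: 7000
    protocol: TCP
```

After applying it, kubectl -n rook-ceph get svc rook-ceph-mgr-dashboard-external shows the NodePort that Kubernetes allocated.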

Step 4: Create a StorageClass

kubectl apply -f storageclass.yaml

apiVersion: ceph.rook.io/v1beta1
kind: Pool
metadata:
  name: replicapool
  namespace: rook-ceph
spec:
  replicated:
    size: 3
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-ceph-block
provisioner: ceph.rook.io/block
parameters:
  pool: replicapool
  # The value of "clusterNamespace" MUST be the same as the one in which your rook cluster exists
  clusterNamespace: rook-ceph
  # Specify the filesystem type of the volume. If not specified, it will use `ext4`.
  fstype: xfs
# Optional, default reclaimPolicy is "Delete". The other option is "Retain" ("Recycle" is not
# accepted for a StorageClass), as documented in https://kubernetes.io/docs/concepts/storage/storage-classes/
reclaimPolicy: Retain

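With replicated size 3, Ceph keeps three copies of every object, which typically needs OSDs on at least three nodes because replicas are usually spread across hosts. For a single-node test cluster, a sketch of the same Pool with one replica; this is an assumption for lab use only and gives no redundancy at all.

```yaml
apiVersion: ceph.rook.io/v1beta1
kind: Pool
metadata:
  name: replicapool
  namespace: rook-ceph
spec:
  replicated:
    # One copy only: data is lost if the OSD holding it fails
    size: 1
```

The StorageClass stays unchanged, since it refers to the pool only by name (pool: replicapool).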

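Step 5: Create PVCs in an application

The example below deploys WordPress and MySQL; each claims a 2Gi volume from the rook-ceph-block StorageClass.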

kubectl apply -f wordpress_mysql.yaml

apiVersion: v1
kind: Service
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  ports:
  - port: 80
  selector:
    app: wordpress
    tier: frontend
  type: LoadBalancer
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: wp-pv-claim
  labels:
    app: wordpress
spec:
  storageClassName: rook-ceph-block
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wordpress
        tier: frontend
    spec:
      containers:
      - image: wordpress:4.6.1-apache
        name: wordpress
        env:
        - name: WORDPRESS_DB_HOST
          value: wordpress-mysql
        - name: WORDPRESS_DB_PASSWORD
          value: changeme
        ports:
        - containerPort: 80
          name: wordpress
        volumeMounts:
        - name: wordpress-persistent-storage
          mountPath: /var/www/html
      volumes:
      - name: wordpress-persistent-storage
        persistentVolumeClaim:
          claimName: wp-pv-claim
---
apiVersion: v1
kind: Service
metadata:
  name: wordpress-mysql
  labels:
    app: wordpress
spec:
  ports:
  - port: 3306
  selector:
    app: wordpress
    tier: mysql
  clusterIP: None
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pv-claim
  labels:
    app: wordpress
spec:
  storageClassName: rook-ceph-block
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
---
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: wordpress-mysql
  labels:
    app: wordpress
spec:
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wordpress
        tier: mysql
    spec:
      containers:
      - image: mysql:5.6
        name: mysql
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: changeme
        ports:
        - containerPort: 3306
          name: mysql
        volumeMounts:
        - name: mysql-persistent-storage
          mountPath: /var/lib/mysql
      volumes:
      - name: mysql-persistent-storage
        persistentVolumeClaim:
          claimName: mysql-pv-claim

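The manifest above writes the MySQL password in plain text into both Deployments. A sketch that keeps it in a Secret instead; the Secret name mysql-pass and the key password are hypothetical, and changeme should be replaced with a real password.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysql-pass        # hypothetical name
type: Opaque
stringData:
  password: changeme      # replace with a real password
# Then, in both Deployments, replace the literal "value: changeme" with a reference:
#   - name: MYSQL_ROOT_PASSWORD      (WORDPRESS_DB_PASSWORD in the wordpress container)
#     valueFrom:
#       secretKeyRef:
#         name: mysql-pass
#         key: password
```

Create the Secret before applying wordpress_mysql.yaml. Kubernetes stores stringData base64-encoded under data, so no manual encoding is needed.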

Step 6: Verify

Check that the PVs were provisioned dynamically and that both PVCs are Bound:

[root@node01 rook]# kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                    STORAGECLASS      REASON   AGE
pvc-8bc2a268-c0cb-11e8-a65a-000c29b52823   2Gi        RWO            Retain           Bound    default/wp-pv-claim      rook-ceph-block            25m
pvc-8bcc6847-c0cb-11e8-a65a-000c29b52823   2Gi        RWO            Retain           Bound    default/mysql-pv-claim   rook-ceph-block            25m
[root@node01 rook]# kubectl get pvc
NAME             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
mysql-pv-claim   Bound    pvc-8bcc6847-c0cb-11e8-a65a-000c29b52823   2Gi        RWO            rook-ceph-block   25m
wp-pv-claim      Bound    pvc-8bc2a268-c0cb-11e8-a65a-000c29b52823   2Gi        RWO            rook-ceph-block   25m
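To test the StorageClass on its own, independently of WordPress, a minimal sketch: one PVC plus a throwaway Pod that writes a file to the volume and reads it back. The names rook-test-pvc and rook-test-pod are hypothetical, and busybox is simply a convenient small image.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rook-test-pvc            # hypothetical name
spec:
  storageClassName: rook-ceph-block
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: rook-test-pod            # hypothetical name
spec:
  restartPolicy: Never
  containers:
  - name: writer
    image: busybox
    # Write a file onto the Ceph-backed volume, then read it back
    command: ["sh", "-c", "echo hello-rook > /data/test.txt && cat /data/test.txt"]
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: rook-test-pvc
```

Once the Pod has completed, kubectl logs rook-test-pod should print hello-rook. Because the StorageClass uses reclaimPolicy: Retain, deleting the PVC leaves the PV and its backing storage in place until you remove them manually.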

Other:

Using volumeClaimTemplates in a StatefulSet

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx"
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
          name: web
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi
      storageClassName: rook-ceph-block
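serviceName: "nginx" names the headless Service that governs the StatefulSet and gives each Pod a stable DNS name; the StatefulSet manifest does not create it. A sketch of that Service, following the standard Kubernetes StatefulSet example:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx              # must match spec.serviceName of the StatefulSet
  labels:
    app: nginx
spec:
  clusterIP: None          # headless: no virtual IP, DNS records per Pod
  selector:
    app: nginx
  ports:
  - port: 80
    name: web
```

Each replica gets its own PVC from the template, named <template>-<statefulset>-<ordinal>, here www-web-0 and www-web-1, each provisioned dynamically from rook-ceph-block.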

References:

https://rook.github.io/docs/rook/master/ceph-quickstart.html

https://github.com/rook/rook
