k8s Cluster Deployment (3)

I. Preparing the environment for deploying a kubernetes cluster with ansible

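The cluster is deployed from binaries and automated with ansible-playbook. A one-step install script is provided, and each component can also be installed step by step, with the main parameters and caveats explained at every step. Deploying from binaries helps you understand how the system components interact and get familiar with their startup flags, which makes it easier to track down and fix real problems quickly.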

Component versions:

wget http://download2.yunwei.edu/shell/yum-repo.sh

bash yum-repo.sh

2° Download and install docker:

wget http://download2.yunwei.edu/shell/docker.tar.gz

After extracting, change into the docker directory

Run the docker.sh script

Check that the docker service has started

docker images
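Listing images only proves that the client can talk to the daemon at that moment. A slightly more explicit check, sketched below, asks systemd for the unit state and then queries the daemon itself. This is an illustration and not part of docker.sh; the function name `check_docker` is made up for this example.

```shell
#!/bin/bash
# Hypothetical helper (not part of docker.sh): report whether docker is usable.
check_docker() {
  # The client binary must be on PATH before anything else can work.
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker binary not found in PATH"
    return 1
  fi
  # `systemctl is-active` prints the unit state (active, inactive, failed, ...).
  echo "docker.service: $(systemctl is-active docker.service 2>/dev/null)"
  # `docker info` queries the daemon, so it fails when dockerd is not running.
  if docker info >/dev/null 2>&1; then
    echo "docker daemon is reachable"
  else
    echo "docker daemon is not reachable"
    return 1
  fi
}
```

Calling `check_docker` right after docker.sh finishes gives a clear pass or fail before moving on to the next step.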

#!/bin/bash
# Unpack the docker binaries and trust the private registry's CA
tar zxvf docker-app.tar.gz -C /usr/local/bin/
mkdir -p /etc/docker
mkdir -p /etc/docker/certs.d/reg.yunwei.edu
cp ca.crt /etc/docker/certs.d/reg.yunwei.edu/
echo "172.16.254.20 reg.yunwei.edu">>/etc/hosts
# Daemon configuration
cat <<EOF>/etc/docker/daemon.json
{
  "registry-mirrors": ["http://cc83932c.m.daocloud.io"],
  "max-concurrent-downloads": ,
  "log-driver": "json-file",
  "log-level": "warn",
  "log-opts": {
    "max-size": "10m",
    "max-file": ""
  }
}
EOF
# systemd unit; \$MAINPID is escaped so the shell does not expand it inside the heredoc
cat <<EOF>/etc/systemd/system/docker.service
[Unit]
Description=Docker Application Container Engine
Documentation=http://docs.docker.io
[Service]
Environment="PATH=/usr/local/bin:/bin:/sbin:/usr/bin:/usr/sbin"
ExecStart=/usr/local/bin/dockerd
ExecStartPost=/sbin/iptables -I FORWARD -s 0.0.0.0/ -j ACCEPT
ExecReload=/bin/kill -s HUP \$MAINPID
Restart=on-failure
RestartSec=
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
Delegate=yes
KillMode=process
[Install]
WantedBy=multi-user.target
EOF
# Register, enable and start the service
systemctl daemon-reload && systemctl enable docker.service && systemctl start docker.service

docker.sh

3° Download and run the dockerized ansible:

docker pull reg.yunwei.edu/learn/ansible:alpine3

docker run -itd -v /etc/ansible:/etc/ansible -v /etc/kubernetes/:/etc/kubernetes/ -v /root/.kube:/root/.kube -v /usr/local/bin/:/usr/local/bin/ 1acb4fd5df5b /bin/sh
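The `docker run` line above refers to the image by ID (`1acb4fd5df5b`), and a later step enters the container by ID as well; those IDs differ from machine to machine. One option, sketched here as an assumption rather than what the original setup did, is to start from the image tag pulled above and give the container a fixed name (`ansible` is a name chosen for this example). With `DRY_RUN=1` the function only prints the command.

```shell
#!/bin/bash
IMAGE="reg.yunwei.edu/learn/ansible:alpine3"   # tag pulled in the step above
NAME="ansible"                                  # hypothetical container name

start_ansible() {
  # Same bind mounts as the original command, so playbooks, certificates,
  # kubeconfig and binaries are shared between host and container.
  set -- docker run -itd --name "$NAME" \
    -v /etc/ansible:/etc/ansible \
    -v /etc/kubernetes/:/etc/kubernetes/ \
    -v /root/.kube:/root/.kube \
    -v /usr/local/bin/:/usr/local/bin/ \
    "$IMAGE" /bin/sh
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"    # print only
  else
    "$@"         # actually start the container
  fi
}
```

After `start_ansible`, `docker exec -it ansible /bin/sh` enters the container without looking up an ID first.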

4° Configure hostname resolution between all machines
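For this step, every machine needs the same name-to-address mapping in `/etc/hosts`. The text does not say which address carries which name, so the pairs below (`cicd` and `node1` to `node3` matched to the four addresses used in this article) are assumptions for illustration; adjust them to the real layout. The function appends only lines that are missing, so it can be re-run safely.

```shell
#!/bin/bash
# Assumed name/address pairs; the article does not spell out this mapping.
HOST_ENTRIES="192.168.42.30 cicd
192.168.42.121 node1
192.168.42.122 node2
192.168.42.172 node3"

# Append each entry to the given hosts file unless the exact line exists.
add_host_entries() {
  local file="${1:-/etc/hosts}"
  printf '%s\n' "$HOST_ENTRIES" | while read -r ip name; do
    # -x: whole-line match, -F: fixed string, -q: quiet
    grep -qxF "$ip $name" "$file" 2>/dev/null || echo "$ip $name" >> "$file"
  done
}
```

Running `add_host_entries` with no argument on each machine updates `/etc/hosts`; passing a path writes to another file, which is handy for a trial run.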

5° Enter the ansible container and set up passwordless SSH login
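This step boils down to generating a key pair inside the container and copying the public key to every node. A minimal sketch, assuming root logins, the four addresses used in this article, and that `ssh-keygen` and `ssh-copy-id` are available inside the container (the text does not confirm this). `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/bin/bash
NODES="192.168.42.30 192.168.42.121 192.168.42.122 192.168.42.172"
KEY="$HOME/.ssh/id_rsa"

run() {
  # Print the command in dry-run mode, execute it otherwise.
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

setup_ssh_keys() {
  # Create a key pair without a passphrase unless one already exists.
  [ -f "$KEY" ] || run ssh-keygen -t rsa -N "" -f "$KEY"
  # Install the public key on every node; each call prompts for the password once.
  for node in $NODES; do
    run ssh-copy-id -i "$KEY.pub" "root@$node"
  done
}
```

Once `setup_ssh_keys` has completed, ansible can reach every node over SSH without a password prompt.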

Test that every node responds

ansible all -m ping

3. Upload the ansible working files to the deploy node:

wget http://download2.yunwei.edu/shell/kubernetes.tar.gz

After extracting:

bash harbor-offline-installer-v1.4.0.tgz k8s197.tar.gz scope.yaml

ca.tar.gz image.tar.gz kube-yunwei-197.tar.gz sock-shop

Extract kube-yunwei-197.tar.gz, move all files under the extracted kube-yunwei- directory into the ansible directory, then delete that directory

[root@localhost kubernetes]# tar xf kube-yunwei-.tar.gz

[root@localhost kubernetes]# ls

bash harbor-offline-installer-v1.4.0.tgz k8s197.tar.gz kube-yunwei-.tar.gz sock-shop

ca.tar.gz image.tar.gz kube-yunwei- scope.yaml

[root@localhost kubernetes]# cd kube-yunwei-

[root@localhost kube-yunwei-]# ls

.prepare.yml .docker.yml .kube-node.yml .clean.yml bin manifests tools

.etcd.yml .kube-master.yml .network.yml ansible.cfg example roles

[root@localhost kube-yunwei-]# mv * /etc/ansible/

Extract k8s197.tar.gz, move all files under bin into the bin directory under ansible, then delete the bin directory under kubernetes

[root@localhost kubernetes]# tar xf k8s197.tar.gz

[root@localhost kubernetes]# ls

bash ca.tar.gz image k8s197.tar.gz kube-yunwei-.tar.gz sock-shop

bin harbor-offline-installer-v1.4.0.tgz image.tar.gz kube-yunwei- scope.yaml

[root@localhost kubernetes]# cd bin

[root@localhost bin]# ls

bridge docker dockerd etcdctl kubectl portmap

calicoctl docker-compose docker-init flannel kubelet

cfssl docker-containerd docker-proxy host-local kube-proxy

cfssl-certinfo docker-containerd-ctr docker-runc kube-apiserver kube-scheduler

cfssljson docker-containerd-shim etcd kube-controller-manager loopback

[root@localhost bin]# mv * /etc/ansible/bin/

[root@localhost bin]# ls

[root@localhost bin]# cd /etc/ansible/bin/

[root@localhost bin]# ls

bridge docker dockerd etcdctl kubectl portmap

calicoctl docker-compose docker-init flannel kubelet VERSION.md

cfssl docker-containerd docker-proxy host-local kube-proxy

cfssl-certinfo docker-containerd-ctr docker-runc kube-apiserver kube-scheduler

cfssljson docker-containerd-shim etcd kube-controller-manager loopback

Change into the example directory, copy hosts.s-master.example into the ansible directory, and rename it to hosts

[root@localhost kubernetes]# cd /etc/ansible/

[root@localhost ansible]# ls

.prepare.yml .docker.yml .kube-node.yml .clean.yml bin manifests tools

.etcd.yml .kube-master.yml .network.yml ansible.cfg example roles

[root@localhost ansible]# cd example/

[root@localhost example]# ls

hosts.s-master.example

[root@localhost example]# cp hosts.s-master.example ../hosts

[root@localhost example]# cd ..

[root@localhost ansible]# ls

.prepare.yml .docker.yml .kube-node.yml .clean.yml bin hosts roles

.etcd.yml .kube-master.yml .network.yml ansible.cfg example manifests tools

[root@localhost ansible]# vim hosts

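# Generate the CA certificates and the kubedns.yaml config file on the deploy node
- hosts: deploy
  roles:
  - deploy

# Common configuration tasks for all cluster nodes
- hosts:
  - kube-master
  - kube-node
  - deploy
  - etcd
  - lb
  roles:
  - prepare

# [Optional] Load balancer configuration for multi-master deployments
- hosts: lb
  roles:
  - lb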

01.prepare.yml

- hosts: etcd
  roles:
  - etcd

02.etcd.yml

- hosts:
  - kube-master
  - kube-node
  roles:
  - docker

03.docker.yml

- hosts: kube-master
  roles:
  - kube-master
  - kube-node
  # Keep workload pods from being scheduled onto the master node
  tasks:
  - name: Keep workload pods from being scheduled onto the master node
    shell: "{{ bin_dir }}/kubectl cordon {{ NODE_IP }} "
    when: DEPLOY_MODE != "allinone"
    ignore_errors: true

04.kube-master.yml
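The task in 04.kube-master.yml runs `kubectl cordon` against the master, which marks the node unschedulable without evicting anything already running there. One way to confirm the result, offered as a sketch: `kubectl get node` reports cordoned nodes with `SchedulingDisabled` in the STATUS column, so a small filter over that output lists them. The function name `cordoned_nodes` is invented for this example.

```shell
#!/bin/bash
# Read `kubectl get node --no-headers` output on stdin and print the
# names of nodes whose STATUS column contains SchedulingDisabled.
cordoned_nodes() {
  awk '$2 ~ /SchedulingDisabled/ { print $1 }'
}
```

Typical use is `kubectl get node --no-headers | cordoned_nodes`; `kubectl uncordon` followed by the node name makes a node schedulable again.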

- hosts: kube-node
  roles:
  - kube-node

05.kube-node.yml

# Cluster network plugin deployment; only one plugin may be installed
- hosts:
  - kube-master
  - kube-node
  roles:
  - { role: calico, when: "CLUSTER_NETWORK == 'calico'" }
  - { role: flannel, when: "CLUSTER_NETWORK == 'flannel'" }

06.network.yml

Edit the hosts file

# Deploy node: the node that runs the ansible scripts
[deploy]
192.168.42.30

# For the etcd cluster, provide the NODE_NAME and NODE_IP variables below; note that an etcd cluster must have an odd number of nodes
[etcd]
192.168.42.121 NODE_NAME=etcd1 NODE_IP="192.168.42.121"
192.168.42.122 NODE_NAME=etcd2 NODE_IP="192.168.42.122"
192.168.42.172 NODE_NAME=etcd3 NODE_IP="192.168.42.172"

[kube-master]
192.168.42.121 NODE_IP="192.168.42.121"

[kube-node]
192.168.42.121 NODE_IP="192.168.42.121"
192.168.42.122 NODE_IP="192.168.42.122"
192.168.42.172 NODE_IP="192.168.42.172"

[all:vars]
# --------- Main cluster parameters ---------------
# Cluster deployment mode: allinone, single-master, multi-master
DEPLOY_MODE=single-master

# Cluster MASTER IP
MASTER_IP="192.168.42.121"

# Cluster APISERVER
KUBE_APISERVER="https://192.168.42.121:6443"

# Token used for TLS Bootstrapping, generated with: head -c /dev/urandom | od -An -t x | tr -d ' '
BOOTSTRAP_TOKEN="d18f94b5fa585c7123f56803d925d2e7"

# Cluster network plugin; calico and flannel are currently supported
CLUSTER_NETWORK="calico"

# Some calico settings; for the full set, customize roles/calico/templates/calico.yaml.j2
# Setting CALICO_IPV4POOL_IPIP="off" can improve network performance; for the restrictions see .安装calico网络组件.md
CALICO_IPV4POOL_IPIP="always"
# Host IP used by calico-node; BGP peers connect through this address. Specify the interface manually with "interface=eth0" or use the auto-detection below
IP_AUTODETECTION_METHOD="can-reach=223.5.5.5"

# Some flannel settings; see roles/flannel/templates/kube-flannel.yaml.j2
FLANNEL_BACKEND="vxlan"

# Service network (Service CIDR): not routable before deployment; reachable inside the cluster via IP:Port after deployment
SERVICE_CIDR="10.68.0.0/16"

# Pod network (Cluster CIDR): not routable before deployment, **routable after deployment**
CLUSTER_CIDR="172.20.0.0/16"

# Service port range (NodePort Range)
NODE_PORT_RANGE="20000-40000"

# kubernetes service IP (pre-allocated, usually the first IP in SERVICE_CIDR)
CLUSTER_KUBERNETES_SVC_IP="10.68.0.1"

# Cluster DNS service IP (pre-allocated from SERVICE_CIDR)
CLUSTER_DNS_SVC_IP="10.68.0.2"

# Cluster DNS domain
CLUSTER_DNS_DOMAIN="cluster.local."

# IPs and ports for communication between etcd members, **set according to the actual etcd cluster members**
ETCD_NODES="etcd1=https://192.168.42.121:2380,etcd2=https://192.168.42.122:2380,etcd3=https://192.168.42.172:2380"

# etcd cluster service address list, **set according to the actual etcd cluster members**
ETCD_ENDPOINTS="https://192.168.42.121:2379,https://192.168.42.122:2379,https://192.168.42.172:2379"

# Username and password for cluster basic auth
BASIC_AUTH_USER="admin"
BASIC_AUTH_PASS="admin"

# --------- Additional parameters --------------------
# Default directory for binaries
bin_dir="/usr/local/bin"

# Certificate directory
ca_dir="/etc/kubernetes/ssl"

# Deployment directory, i.e. the ansible working directory
base_dir="/etc/ansible"
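Two mistakes are easy to make in this file: an even number of etcd members, and a `NODE_IP` value that does not match the host address on the same line (a comma typed for a dot, for instance). The following sketch checks both before any playbook runs. It is an illustration that assumes the inventory keeps a one-host-per-line layout with section headers on their own lines; the function names are hypothetical.

```shell
#!/bin/bash
# Count the host lines under one section of an Ansible INI inventory.
count_hosts() {
  local section="$1" file="$2"
  awk -v want="[$section]" '
    /^\[/ { in_section = ($1 == want); next }   # a header switches sections
    in_section && NF && $1 !~ /^#/ { n++ }      # non-empty, non-comment line
    END { print n + 0 }
  ' "$file"
}

# Print "host -> value" for every line whose NODE_IP differs from the host.
mismatched_node_ips() {
  awk '
    /^\[/ || /^#/ { next }
    match($0, /NODE_IP="[^"]*"/) {
      ip = substr($0, RSTART + 9, RLENGTH - 10)  # text between the quotes
      if (ip != $1) print $1 " -> " ip
    }
  ' "$1"
}

check_inventory() {
  local file="${1:-/etc/ansible/hosts}" n bad
  n=$(count_hosts etcd "$file")
  if [ $((n % 2)) -eq 0 ]; then
    echo "etcd has $n members; an odd number is required"
    return 1
  fi
  bad=$(mismatched_node_ips "$file")
  if [ -n "$bad" ]; then
    echo "NODE_IP mismatch: $bad"
    return 1
  fi
  echo "inventory looks consistent ($n etcd members)"
}
```

Running `check_inventory` inside the ansible container, where the file lives at /etc/ansible/hosts, catches both problems in well under a second, which is far cheaper than a failed etcd play.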

II. Deploying kubernetes

Enter the container, check that the files are present under the ansible directory, and check that the other nodes respond to ping

[root@localhost ansible]# docker exec -it 0918862b8730 /bin/sh

/ # cd /etc/ansible/

/etc/ansible # ls

.prepare.yml .network.yml hosts

.etcd.yml .clean.yml manifests

.docker.yml ansible.cfg roles

.kube-master.yml bin tools

.kube-node.yml example

/etc/ansible # ansible all -m ping

192.168.42.122 | SUCCESS => {

"changed": false,

"ping": "pong"

}

192.168.42.172 | SUCCESS => {

"changed": false,

"ping": "pong"

}

192.168.42.121 | SUCCESS => {

"changed": false,

"ping": "pong"

}

192.168.42.30 | SUCCESS => {

"changed": false,

"ping": "pong"

}

ansible-playbook 01.prepare.yml

ansible-playbook 02.etcd.yml

ansible-playbook 03.docker.yml

ansible-playbook 04.kube-master.yml

ansible-playbook 05.kube-node.yml
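The five commands above have to run in this order, and there is little point continuing after one of them fails. A small wrapper, sketched here with the playbook names 01.prepare.yml through 05.kube-node.yml as they are captioned earlier in this article, stops at the first failure. `DRY_RUN=1` only prints what would run.

```shell
#!/bin/bash
PLAYBOOKS="01.prepare.yml 02.etcd.yml 03.docker.yml 04.kube-master.yml 05.kube-node.yml"

run_playbooks() {
  local pb
  for pb in $PLAYBOOKS; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "ansible-playbook $pb"
    elif ! ansible-playbook "$pb"; then
      # A failed play can leave nodes half-configured; stop instead of piling on.
      echo "stopped: $pb failed" >&2
      return 1
    fi
  done
}
```

Run `run_playbooks` from /etc/ansible inside the container so the relative playbook names resolve.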

Before running 06.network.yml, make sure the other nodes have the images, so extract image.tar.gz

[root@cicd kubernetes]# ls

bash ca.tar.gz image k8s197.tar.gz kube-yunwei- scope.yaml

bin harbor-offline-installer-v1.4.0.tgz image.tar.gz kubernetes.tar.gz kube-yunwei-.tar.gz sock-shop

[root@cicd kubernetes]# cd image

[root@cicd image]# ls

calico-cni-v2.0.5.tar coredns-1.0..tar.gz influxdb-v1.3.3.tar

calico-kube-controllers-v2.0.4.tar grafana-v4.4.3.tar kubernetes-dashboard-amd64-v1.8.3.tar.gz

calico-node-v3.0.6.tar heapster-v1.5.1.tar pause-amd64-3.1.tar

[root@cicd image]# scp ./* node1:/root/image

[root@cicd image]# scp ./* node2:/root/image

[root@cicd image]# scp ./* node3:/root/image

On each node:

[root@node1 image]# for i in `ls`;do docker load -i $i;done
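The three `scp` commands and the per-node `docker load` loop can be folded into one loop run from the deploy node. This is a sketch under assumptions: the names `node1` to `node3` resolve and accept key-based SSH from the deploy node, and `/root/image` already exists on each of them. Matching on `*.tar` and `*.tar.gz` avoids handing stray files to `docker load`, which a bare `ls` loop would do.

```shell
#!/bin/bash
NODES="node1 node2 node3"
REMOTE_DIR="/root/image"

run() {
  # Print the command in dry-run mode, execute it otherwise.
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

push_and_load_images() {
  local src="${1:-.}" node
  for node in $NODES; do
    # Copy every image archive from the local directory to the node.
    run scp "$src"/*.tar "$src"/*.tar.gz "$node:$REMOTE_DIR/"
    # Load each archive into the node's local docker image store.
    run ssh "$node" "for f in $REMOTE_DIR/*.tar $REMOTE_DIR/*.tar.gz; do docker load -i \"\$f\"; done"
  done
}
```

Calling `push_and_load_images` from inside the extracted image directory covers all three nodes in one pass; `DRY_RUN=1` shows the exact commands first.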

On the deploy node:

ansible-playbook 06.network.yml

CoreDNS is a DNS server that uses the SkyDNS library to serve DNS requests for Kubernetes pods and services.

/etc/ansible # ls

.prepare.yml .docker.yml .network.yml bin manifests

.etcd.retry .kube-master.yml .clean.yml example roles

.etcd.yml .kube-node.yml ansible.cfg hosts tools

/etc/ansible # cd manifests/

/etc/ansible/manifests # ls

coredns dashboard efk heapster ingress kubedns

/etc/ansible/manifests # cd coredns/

/etc/ansible/manifests/coredns # ls

coredns.yaml

/etc/ansible/manifests/coredns # kubectl create -f .

serviceaccount "coredns" created

clusterrole "system:coredns" created

clusterrolebinding "system:coredns" created

configmap "coredns" created

deployment "coredns" created

service "coredns" created

/etc/ansible/manifests # ls

coredns dashboard efk heapster ingress kubedns

/etc/ansible/manifests # cd dashboard/

/etc/ansible/manifests/dashboard # ls

1.6.3 kubernetes-dashboard.yaml ui-read-rbac.yaml

admin-user-sa-rbac.yaml ui-admin-rbac.yaml

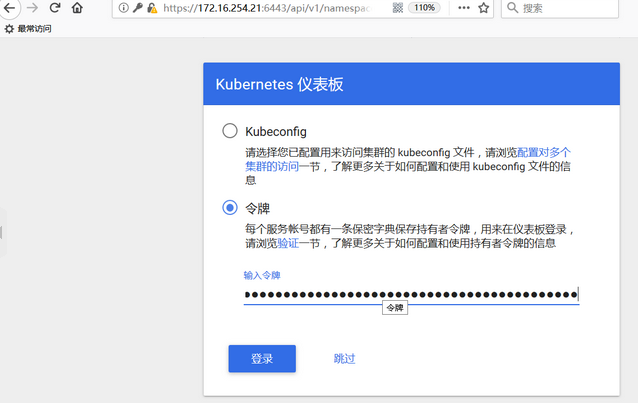

/etc/ansible/manifests/dashboard # kubectl create -f .

serviceaccount "admin-user" created

clusterrolebinding "admin-user" created

secret "kubernetes-dashboard-certs" created

serviceaccount "kubernetes-dashboard" created

role "kubernetes-dashboard-minimal" created

rolebinding "kubernetes-dashboard-minimal" created

deployment "kubernetes-dashboard" created

service "kubernetes-dashboard" created

clusterrole "ui-admin" created

rolebinding "ui-admin-binding" created

clusterrole "ui-read" created

rolebinding "ui-read-binding" created

[root@cicd ansible]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE

coredns-6ff7588dc6-l8q4h / Running 7m 172.20.0.2 192.168.42.122

coredns-6ff7588dc6-x2jq5 / Running 7m 172.20.1.2 192.168.42.172

kube-flannel-ds-c688h / Running 14m 192.168.42.172 192.168.42.172

kube-flannel-ds-d4p4j / Running 14m 192.168.42.122 192.168.42.122

kube-flannel-ds-f8gp2 / Running 14m 192.168.42.121 192.168.42.121

kubernetes-dashboard-545b66db97-z9nr4 / Running 1m 172.20.1.3 192.168.42.172
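Scanning the STATUS column by eye gets tedious while pods are still starting. A tiny filter, sketched here, prints only the pods that are not yet `Running`; it reads `kubectl get pod --no-headers` output from stdin, where STATUS is the third column. The function name is hypothetical.

```shell
#!/bin/bash
# Print "name status" for every pod whose STATUS is not Running.
pods_not_running() {
  awk '$3 != "Running" { print $1, $3 }'
}
```

Typical use is `kubectl get pod -n kube-system --no-headers | pods_not_running`; empty output means every listed pod reports Running.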

[root@cicd ansible]# kubectl cluster-info

Kubernetes master is running at https://192.168.42.121:6443

CoreDNS is running at https://192.168.42.121:6443/api/v1/namespaces/kube-system/services/coredns:dns/proxy

kubernetes-dashboard is running at https://192.168.42.121:6443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
