Kernel Methods for Deep Learning

@article{cho2009kernel,
  title={Kernel Methods for Deep Learning},
  author={Cho, Youngmin and Saul, Lawrence K},
  pages={342--350},
  year={2009}}

Introduction

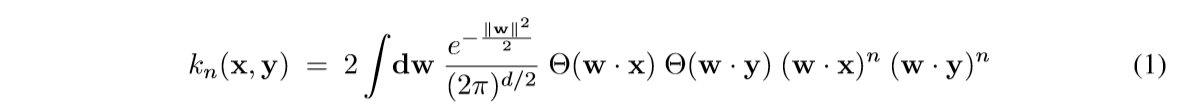

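This paper introduces a new family of kernel functions, the arc-cosine kernels, inspired by the computations performed in neural networks. The kernel of degree \(n\) is defined as

\[
k_n(\mathbf{x}, \mathbf{y}) = 2 \int \mathrm{d}\mathbf{w} \, \frac{e^{-\frac{\|\mathbf{w}\|^2}{2}}}{(2\pi)^{d/2}} \, \Theta(\mathbf{w} \cdot \mathbf{x}) \, \Theta(\mathbf{w} \cdot \mathbf{y}) \, (\mathbf{w} \cdot \mathbf{x})^n (\mathbf{w} \cdot \mathbf{y})^n,
\tag{1}
\]

where \(\Theta(z)=\frac{1}{2}(1+\mathrm{sign}(z))\) is the Heaviside step function.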

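Main content

The key property is that equation (1) can be written in closed form:

\[
k_n(\mathbf{x}, \mathbf{y}) = \frac{1}{\pi} \|\mathbf{x}\|^n \|\mathbf{y}\|^n J_n(\theta),
\tag{2}
\]

where:

\[
J_n(\theta) = (-1)^n (\sin \theta)^{2n+1} \left( \frac{1}{\sin \theta} \frac{\partial}{\partial \theta} \right)^n \left( \frac{\pi - \theta}{\sin \theta} \right),
\tag{3}
\]

\[
\theta = \cos^{-1} \left( \frac{\mathbf{x} \cdot \mathbf{y}}{\|\mathbf{x}\| \|\mathbf{y}\|} \right).
\tag{4}
\]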

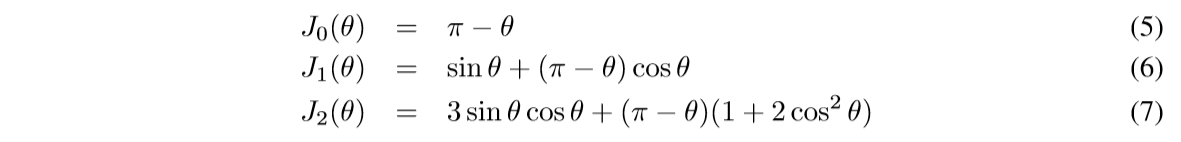

In particular:

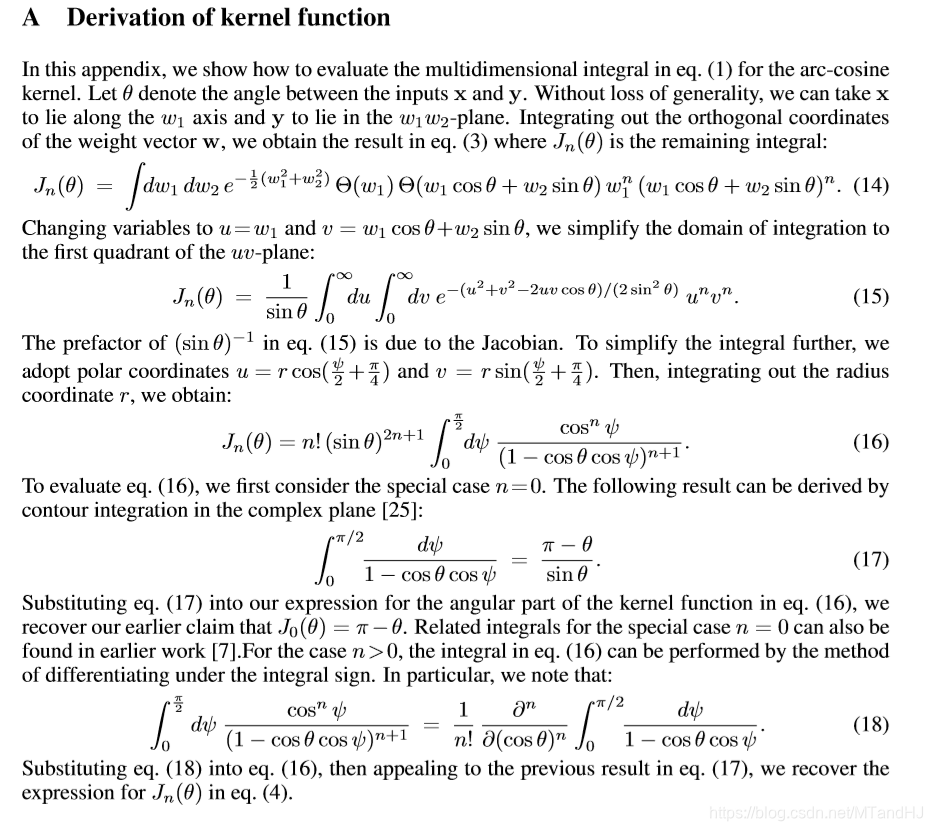
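As a worked check of the first two special cases, the following sketch assumes the closed form from Cho and Saul, \(J_n(\theta) = (-1)^n (\sin\theta)^{2n+1} \left(\frac{1}{\sin\theta}\frac{\partial}{\partial\theta}\right)^n \left(\frac{\pi-\theta}{\sin\theta}\right)\), and evaluates it for \(n=0\) and \(n=1\):

\[
\begin{aligned}
J_0(\theta) &= \sin\theta \cdot \frac{\pi - \theta}{\sin\theta} = \pi - \theta, \\
J_1(\theta) &= -\sin^3\theta \cdot \frac{1}{\sin\theta} \frac{\partial}{\partial\theta} \left( \frac{\pi - \theta}{\sin\theta} \right)
= -\sin^2\theta \cdot \frac{-\sin\theta - (\pi - \theta)\cos\theta}{\sin^2\theta}
= \sin\theta + (\pi - \theta)\cos\theta.
\end{aligned}
\]

These agree with the expressions used for `kernel0` and `kernel1` in the code later in this post.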

The proof is as follows:

I did not work through the proof of equation (17), because I am not yet familiar with contour integration.

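An attentive reader may notice that the final result is expressed with \(\frac{\partial^n}{\partial(\cos \theta)^n}\) rather than with derivatives in \(\theta\). For a function \(f(\cos \theta)\), set \(g(\theta) = f(\cos \theta)\); then by the chain rule:

\[
\frac{\partial g}{\partial \theta} = -\sin \theta \, \frac{\partial f}{\partial (\cos \theta)}
\quad \Longrightarrow \quad
\frac{\partial f}{\partial (\cos \theta)} = -\frac{1}{\sin \theta} \frac{\partial g}{\partial \theta}.
\]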

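Moreover, since \(\frac{\partial}{\partial(\cos \theta)} = -\frac{1}{\sin \theta}\frac{\partial}{\partial \theta}\), applying it \(n\) times gives

\[
\frac{\partial^n}{\partial (\cos \theta)^n} = (-1)^n \left( \frac{1}{\sin \theta} \frac{\partial}{\partial \theta} \right)^n,
\]

which yields the result.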

Connection to deep learning

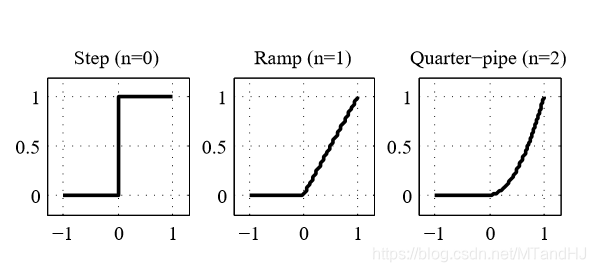

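Focus on a single layer, with input \(\mathbf{x}\) and weight matrix \(W\). Its output is:

\[
\mathbf{f}(\mathbf{x}) = g(W \mathbf{x}),
\]

where \(g(z) = \Theta(z) z^n\) is the activation function, applied elementwise. Its shape depends on \(n\):

\(n=0\) is the step function, and \(n=1\) is the familiar ReLU.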
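The activation family \(g(z) = \Theta(z) z^n\) can be sketched in a few lines of NumPy. This is only an illustration: the function name `activation` is made up here, and \(\Theta(0) = \frac{1}{2}\) follows from the definition \(\Theta(z)=\frac{1}{2}(1+\mathrm{sign}(z))\).

```python
import numpy as np


def activation(z, n):
    """One-sided polynomial activation g(z) = Theta(z) * z**n.

    Theta(z) = (1 + sign(z)) / 2, so Theta(0) = 1/2.
    `activation` is a hypothetical helper name used for illustration.
    """
    z = np.asarray(z, dtype=float)
    theta = 0.5 * (1.0 + np.sign(z))  # Heaviside step function
    return theta * z ** n


z = np.array([-2.0, 0.0, 3.0])
print(activation(z, 0))  # step function
print(activation(z, 1))  # ReLU
print(activation(z, 2))  # one-sided quadratic
```

For \(n=1\) the output coincides with `np.maximum(z, 0)`, which is the usual ReLU.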

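Consider the inner product of the outputs \(\mathbf{f}(\mathbf{x}),\mathbf{f}(\mathbf{y})\) for two inputs \(\mathbf{x},\mathbf{y}\). Writing \(\mathbf{w}_i\) for the \(i\)-th row of \(W\) and \(m\) for the number of output units:

\[
\mathbf{f}(\mathbf{x}) \cdot \mathbf{f}(\mathbf{y}) = \sum_{i=1}^{m} \Theta(\mathbf{w}_i \cdot \mathbf{x}) \, \Theta(\mathbf{w}_i \cdot \mathbf{y}) \, (\mathbf{w}_i \cdot \mathbf{x})^n (\mathbf{w}_i \cdot \mathbf{y})^n.
\]

If every weight \(W_{ij}\) is drawn independently from the standard normal distribution, then:

\[
\lim_{m \rightarrow \infty} \frac{2}{m} \, \mathbf{f}(\mathbf{x}) \cdot \mathbf{f}(\mathbf{y}) = k_n(\mathbf{x}, \mathbf{y}).
\]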
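This limit can be checked numerically for \(n=1\). The sketch below compares the closed form \(k_1(\mathbf{x},\mathbf{y}) = \frac{1}{\pi}\|\mathbf{x}\|\|\mathbf{y}\|(\sin\theta + (\pi-\theta)\cos\theta)\) with the scaled inner product \(\frac{2}{m}\mathbf{f}(\mathbf{x})\cdot\mathbf{f}(\mathbf{y})\) of a wide random ReLU layer. The helper names, the test vectors, the width `m` and the seed are all arbitrary choices made for illustration.

```python
import numpy as np


def arc_cosine_k1(x, y):
    """Closed form of the degree-1 arc-cosine kernel."""
    nx, ny = np.linalg.norm(x), np.linalg.norm(y)
    # Clip so that rounding error cannot push the cosine outside [-1, 1].
    cos_t = np.clip(x @ y / (nx * ny), -1.0, 1.0)
    theta = np.arccos(cos_t)
    return nx * ny * (np.sin(theta) + (np.pi - theta) * cos_t) / np.pi


def random_relu_estimate(x, y, m=400_000, seed=0):
    """Monte Carlo estimate (2/m) f(x).f(y) with standard normal weights."""
    rng = np.random.default_rng(seed)
    W = rng.standard_normal((m, x.shape[0]))  # one row per hidden unit
    fx = np.maximum(W @ x, 0.0)               # ReLU outputs for x
    fy = np.maximum(W @ y, 0.0)               # ReLU outputs for y
    return 2.0 * (fx @ fy) / m


x = np.array([1.0, 2.0, -0.5])
y = np.array([0.5, 1.0, 1.0])
exact = arc_cosine_k1(x, y)
approx = random_relu_estimate(x, y)
print(exact, approx)
```

The two numbers should agree up to Monte Carlo error, which shrinks roughly like \(1/\sqrt{m}\).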

Experiments

The experiment failed. The code is below.

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.svm import NuSVC


# Arc-cosine kernel
class Arc_cosine:

    def __init__(self, n=1):
        self.n = n
        self.own_kernel = self.kernels(n)

    def kernel0(self, x, y):
        norm_x = np.linalg.norm(x)
        norm_y = np.linalg.norm(y)
        # Clip: rounding can push the cosine slightly outside [-1, 1],
        # which would make arccos return NaN (e.g. when x == y).
        cos_value = np.clip(x @ y / (norm_x * norm_y), -1.0, 1.0)
        angle = np.arccos(cos_value)
        return 1 - angle / np.pi

    def kernel1(self, x, y):
        norm_x = np.linalg.norm(x)
        norm_y = np.linalg.norm(y)
        cos_value = np.clip(x @ y / (norm_x * norm_y), -1.0, 1.0)
        angle = np.arccos(cos_value)
        sin_value = np.sin(angle)
        return (norm_x * norm_y) ** self.n * \
            (sin_value + (np.pi - angle) * cos_value) / np.pi

    def kernel2(self, x, y):
        norm_x = np.linalg.norm(x)
        norm_y = np.linalg.norm(y)
        cos_value = np.clip(x @ y / (norm_x * norm_y), -1.0, 1.0)
        angle = np.arccos(cos_value)
        sin_value = np.sin(angle)
        # The whole J_2 term must be parenthesized and divided by pi.
        return (norm_x * norm_y) ** self.n * \
            (3 * sin_value * cos_value +
             (np.pi - angle) * (1 + 2 * cos_value ** 2)) / np.pi

    def kernels(self, n):
        # Compare integers with ==, not with `is`.
        if n == 0:
            return self.kernel0
        elif n == 1:
            return self.kernel1
        elif n == 2:
            return self.kernel2
        else:
            raise ValueError("No such kernel, n should be "
                             "0, 1 or 2")

    def kernel(self, X, Y):
        # Gram matrix between the rows of X and the rows of Y.
        m = X.shape[0]
        n = Y.shape[0]
        C = np.zeros((m, n))
        for i in range(m):
            for j in range(n):
                C[i, j] = self.own_kernel(
                    X[i], Y[j]
                )
        return C

    def __call__(self, X, Y):
        return self.kernel(X, Y)
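The double loop above evaluates the kernel one pair at a time, which is slow for a few hundred samples. A vectorized sketch of the same Gram matrix is given below; the function name `arc_cosine_gram` and the `1e-12` guard against zero-norm rows are assumptions introduced here, not part of the original code. It also clips the cosine into \([-1, 1]\) before calling `np.arccos`, since rounding error can otherwise produce NaN entries. Whether that alone explains the failed experiment is not established here.

```python
import numpy as np


def arc_cosine_gram(X, Y, n=1):
    """Gram matrix of the arc-cosine kernel of degree n in {0, 1, 2}.

    Rows of X and Y are samples. `arc_cosine_gram` is a hypothetical
    helper written for illustration.
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    norm_x = np.linalg.norm(X, axis=1)[:, None]  # shape (m, 1)
    norm_y = np.linalg.norm(Y, axis=1)[None, :]  # shape (1, k)
    norms = norm_x * norm_y                      # shape (m, k)
    # Avoid division by zero, then clip rounding error out of the cosine.
    cos_t = np.clip((X @ Y.T) / np.maximum(norms, 1e-12), -1.0, 1.0)
    theta = np.arccos(cos_t)
    sin_t = np.sin(theta)
    if n == 0:
        j = np.pi - theta
    elif n == 1:
        j = sin_t + (np.pi - theta) * cos_t
    elif n == 2:
        j = 3 * sin_t * cos_t + (np.pi - theta) * (1 + 2 * cos_t ** 2)
    else:
        raise ValueError("n should be 0, 1 or 2")
    return norms ** n * j / np.pi


rng = np.random.default_rng(0)
X_demo = rng.standard_normal((5, 3))
K_demo = arc_cosine_gram(X_demo, X_demo, n=1)
print(K_demo.shape)
```

Since scikit-learn's SVM classes accept a callable that maps two sample arrays to a Gram matrix, a function like this could be passed as `kernel=lambda A, B: arc_cosine_gram(A, B, n=1)`.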

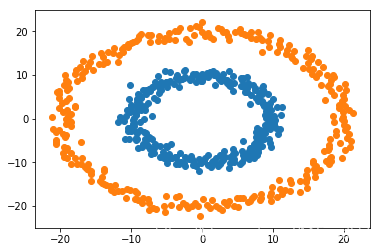

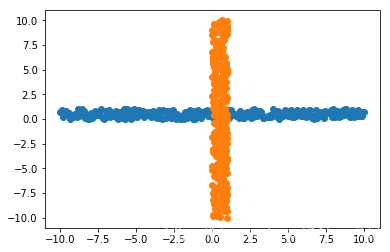

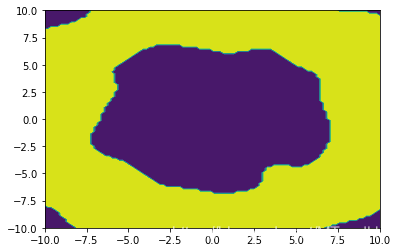

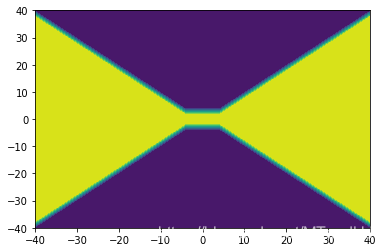

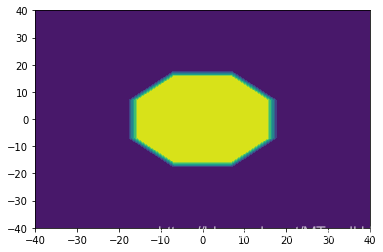

The SVM is run on two datasets, shown below:

Running the SVM:

'''
# Generate ring-shaped data (disabled; kept as a string)
def generate_data(circle, r1, r2, nums=300):
    variance = 1
    rs1 = np.random.randn(nums) * variance + r1
    rs2 = np.random.randn(nums) * variance + r2
    angles = np.linspace(0, 2*np.pi, nums)
    data1 = (rs1 * np.sin(angles) + circle[0],
             rs1 * np.cos(angles) + circle[1])
    data2 = (rs2 * np.sin(angles) + circle[0],
             rs2 * np.cos(angles) + circle[1])
    df1 = pd.DataFrame({'x': data1[0], 'y': data1[1],
                        'label': np.ones(nums)})
    df2 = pd.DataFrame({'x': data2[0], 'y': data2[1],
                        'label': -np.ones(nums)})
    return df1, df2
'''


# Generate cross-shaped data
def generate_data(left, right, down, up,
                  circle=(0., 0.), nums=300):
    variance = 1
    # Horizontal bar: x spans [left, right], y is uniform noise.
    y1 = np.random.rand(nums) * variance + circle[1]
    # Vertical bar: y spans [down, up], x is uniform noise.
    x2 = np.random.rand(nums) * variance + circle[0]
    x1 = np.linspace(left, right, nums)
    y2 = np.linspace(down, up, nums)
    df1 = pd.DataFrame(
        {'x': x1,
         'y': y1,
         'label': np.ones_like(x1)}
    )
    df2 = pd.DataFrame(
        {'x': x2,
         'y': y2,
         'label': -np.ones_like(x2)}
    )
    return df1, df2


def pre_test(left, right, func, nums=100):
    # Evaluate func on a nums x nums grid between the two corner points.
    x1, y1 = left
    x2, y2 = right
    x = np.linspace(x1, x2, nums)
    y = np.linspace(y1, y2, nums)
    X, Y = np.meshgrid(x, y)
    m, n = X.shape
    Z = func(np.vstack((X.reshape(1, -1),
                        Y.reshape(1, -1))).T).reshape(m, n)
    return X, Y, Z


df1, df2 = generate_data(-10, 10, -10, 10)
# DataFrame.append was removed in pandas 2.0; use pd.concat instead.
df = pd.concat([df1, df2])

classifer2 = NuSVC(kernel=Arc_cosine(n=1))
# Fit on a plain array so the callable kernel receives NumPy rows.
classifer2.fit(df.iloc[:, :2].to_numpy(), df['label'])

X, Y, Z = pre_test((-10, -10), (10, 10), classifer2.predict)
plt.contourf(X, Y, Z)
plt.show()

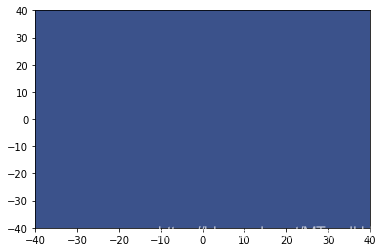

The predictions on both datasets look like this:

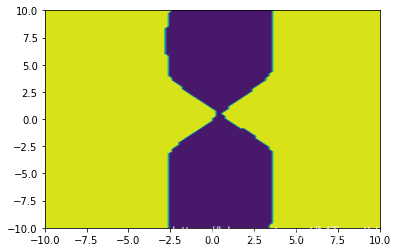

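With the ordinary RBF kernel, by contrast, the results are good on both:

The polynomial kernel also works fine:

If anyone can spot the mistake in the code, please point it out.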
