Keepalived: building master/backup and master/master architectures, a worked example

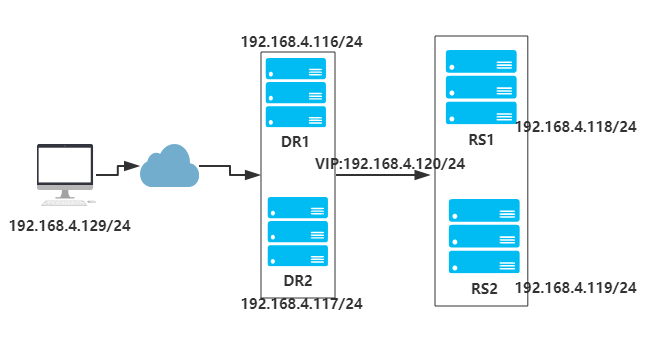

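Topology of the example:

DR1 and DR2 run Keepalived and LVS in either a master/backup or a master/master architecture; RS1 and RS2 run nginx to serve the web site.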

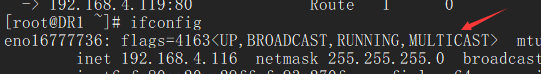

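Note: the clocks on all nodes must be synchronized (ntpdate ntp1.aliyun.com). Stop and disable firewalld (systemctl stop firewalld.service, systemctl disable firewalld.service) and set SELinux to permissive (setenforce 0). Also make sure the NICs on DR1 and DR2 support MULTICAST, because the VRRP advertisements in this setup are sent to a multicast group. The flags field in the ifconfig output shows whether MULTICAST is enabled.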
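The multicast check can be scripted instead of read by eye. The following is a minimal sketch, not part of the original setup: `has_multicast` is a hypothetical helper name, and it only inspects the flag list between angle brackets as printed by `ifconfig` or `ip link show`.

```shell
#!/bin/bash
# Succeeds if the interface flags read from stdin include MULTICAST.
# Expected input: the flags line of `ifconfig <dev>` or `ip link show <dev>`.
has_multicast() {
    # Flags appear between angle brackets, e.g. <UP,BROADCAST,RUNNING,MULTICAST>
    grep -Eq '<([A-Z0-9_-]+,)*MULTICAST(,[A-Z0-9_-]+)*>'
}

# Example (interface name taken from this article; adjust to your system):
# ip link show eno16777736 | has_multicast && echo "multicast OK"
```

Run it on both DR1 and DR2 before starting keepalived.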

Keepalived master/backup architecture

Setting up RS1:

[root@RS1 ~]# yum -y install nginx # install nginx

[root@RS1 ~]# vim /usr/share/nginx/html/index.html # edit the home page

<h1> 192.168.4.118 RS1 server </h1>

[root@RS1 ~]# systemctl start nginx.service # start the nginx service

[root@RS1 ~]# vim RS.sh # script that configures this real server for LVS-DR

#!/bin/bash

#

vip=192.168.4.120

mask=255.255.255.255

case $1 in

start)

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

ifconfig lo:0 $vip netmask $mask broadcast $vip up

route add -host $vip dev lo:0

;;

stop)

ifconfig lo:0 down

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

;;

*)

echo "Usage $(basename $0) start|stop"

exit 1

;;

esac

[root@RS1 ~]# bash RS.sh start
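After `RS.sh start` it is worth confirming that the ARP kernel parameters really hold the values the script wrote (arp_ignore=1, arp_announce=2 for `all` and `lo`). A small sketch follows; `check_arp_params` is a hypothetical helper, and its optional directory argument exists only so it can be exercised outside /proc.

```shell
#!/bin/bash
# Verify arp_ignore=1 and arp_announce=2 for the "all" and "lo" entries.
# $1: base directory (defaults to the real /proc path)
check_arp_params() {
    local base=${1:-/proc/sys/net/ipv4/conf}
    local rc=0 iface
    for iface in all lo; do
        [ "$(cat "$base/$iface/arp_ignore" 2>/dev/null)" = "1" ] \
            || { echo "$iface/arp_ignore is not 1"; rc=1; }
        [ "$(cat "$base/$iface/arp_announce" 2>/dev/null)" = "2" ] \
            || { echo "$iface/arp_announce is not 2"; rc=1; }
    done
    return $rc
}

# Example: check_arp_params && echo "ARP settings OK"
```

These settings keep the real server from answering or announcing ARP for the VIP bound to its loopback, so only the director receives client traffic for that address.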

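Set up RS2 the same way as RS1, changing the home page text so that it identifies RS2 (192.168.4.119).

Setting up DR1:

[root@DR1 ~]# yum -y install ipvsadm keepalived # install ipvsadm and keepalived

[root@DR1 ~]# vim /etc/keepalived/keepalived.conf # edit the keepalived.conf configuration file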

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id 192.168.4.116

vrrp_skip_check_adv_addr

vrrp_mcast_group4 224.0.0.10

}

vrrp_instance VIP_1 {

state MASTER

interface eno16777736

virtual_router_id 1

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass %&hhjj99

}

virtual_ipaddress {

192.168.4.120/24 dev eno16777736 label eno16777736:0

}

}

virtual_server 192.168.4.120 80 {

delay_loop 6

lb_algo rr

lb_kind DR

protocol TCP

real_server 192.168.4.118 80 {

weight 1

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.4.119 80 {

weight 1

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}
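The two real_server stanzas above are identical apart from the IP address, so they can be generated rather than typed twice. This is only a convenience sketch: `real_server_block` is a hypothetical helper, and the health-check values are copied from the configuration above.

```shell
#!/bin/bash
# Print one real_server stanza with the HTTP_GET health check used above.
# $1: real server IP, $2: port (default 80), $3: weight (default 1)
real_server_block() {
    local ip=$1 port=${2:-80} weight=${3:-1}
    cat <<EOF
    real_server $ip $port {
        weight $weight
        HTTP_GET {
            url {
                path /index.html
                status_code 200
            }
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
EOF
}

# Example: emit the stanzas for both real servers in this article.
# for ip in 192.168.4.118 192.168.4.119; do real_server_block "$ip"; done
```

The output is meant to be pasted inside the virtual_server block; it does not write to keepalived.conf by itself.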

[root@DR1 ~]# systemctl start keepalived

[root@DR1 ~]# ifconfig

eno16777736: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.4.116 netmask 255.255.255.0 broadcast 192.168.4.255

inet6 fe80::20c:29ff:fe93:270f prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:93:27:0f txqueuelen 1000 (Ethernet)

RX packets 14604 bytes 1376647 (1.3 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 6722 bytes 653961 (638.6 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eno16777736:0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.4.120 netmask 255.255.255.0 broadcast 0.0.0.0

ether 00:0c:29:93:27:0f txqueuelen 1000 (Ethernet)

[root@DR1 ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.4.120:80 rr

-> 192.168.4.118:80 Route 1 0 0

-> 192.168.4.119:80 Route 1 0 0
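The `ipvsadm -ln` table can be condensed to one line per virtual service, which makes it easy to see that both real servers were registered. A minimal sketch, assuming IPv4 services and the output layout shown above; `ipvs_summary` is a hypothetical helper name.

```shell
#!/bin/bash
# Summarize `ipvsadm -ln` output read from stdin.
# Prints one line per virtual service: "<vip:port> <scheduler> <real-server count>"
ipvs_summary() {
    awk '
        $1 == "TCP" || $1 == "UDP" {          # a virtual service line
            if (svc != "") print svc, sched, n
            svc = $2; sched = $3; n = 0
            next
        }
        $1 == "->" && $2 ~ /^[0-9]/ { n++ }   # a real server line (skips the header row)
        END { if (svc != "") print svc, sched, n }
    '
}

# Example (requires root on a director): ipvsadm -ln | ipvs_summary
```

A real server that fails its HTTP_GET check is removed from the table by keepalived, so the count drops when a back end is down.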

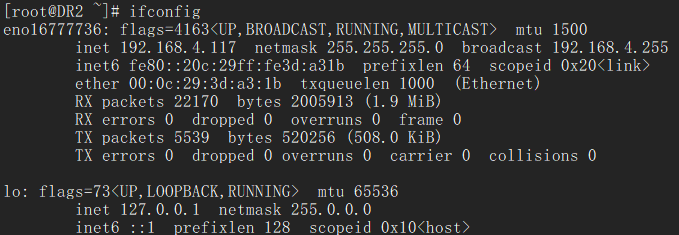

DR2 is set up almost exactly like DR1. The main change is in /etc/keepalived/keepalived.conf, where state and priority become state BACKUP and priority 90. Note that DR2, as the backup, does not bring up the eno16777736:0 interface.
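Because DR2's file differs from DR1's only in state and priority, it can be derived mechanically from DR1's copy. The sketch below assumes the single-instance master/backup configuration of this section (state MASTER, priority 100); `to_backup` is a hypothetical helper name.

```shell
#!/bin/bash
# Rewrite a MASTER keepalived.conf (stdin) into its BACKUP counterpart (stdout):
# "state MASTER" becomes "state BACKUP" and "priority 100" becomes "priority 90".
# All other lines, including indentation, pass through unchanged.
to_backup() {
    awk '
        $1 == "state"    && $2 == "MASTER" { sub(/MASTER/, "BACKUP") }
        $1 == "priority" && $2 == "100"    { sub(/100/, "90") }
        { print }
    '
}

# Example: to_backup < keepalived.conf > keepalived.conf.dr2
```

Review the generated file before copying it to DR2; it is not suitable for the master/master layout, where each director is MASTER for one instance and BACKUP for the other.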

Testing from the client:

[root@client ~]# for i in {1..20};do curl http://192.168.4.120;done # the client can access the site normally

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>
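Twenty alternating lines are easier to judge as counts. The following sketch tallies identical responses; `tally` is a hypothetical helper, and the curl loop in the comment is the same one used above with `-s` added to silence progress output.

```shell
#!/bin/bash
# Count how many times each distinct line appears on stdin.
# Output: "<count> <line>", ordered by line content.
tally() {
    sort | uniq -c | sed -E 's/^ *([0-9]+) /\1 /'
}

# Example:
# for i in {1..20}; do curl -s http://192.168.4.120; done | tally
```

With the rr scheduler and two healthy real servers of equal weight, the two counts should be equal or differ by at most one.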

[root@DR1 ~]# systemctl stop keepalived.service # stop the keepalived service on DR1

[root@DR2 ~]# systemctl status keepalived.service # check DR2: it has entered the MASTER state

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: active (running) since Tue 2018-09-04 11:33:04 CST; 7min ago

Process: 12983 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 12985 (keepalived)

CGroup: /system.slice/keepalived.service

├─12985 /usr/sbin/keepalived -D

├─12988 /usr/sbin/keepalived -D

└─12989 /usr/sbin/keepalived -D

Sep 04 11:37:41 happiness Keepalived_healthcheckers[12988]: SMTP alert successfully sent.

Sep 04 11:40:22 happiness Keepalived_vrrp[12989]: VRRP_Instance(VIP_1) Transition to MASTER STATE

Sep 04 11:40:23 happiness Keepalived_vrrp[12989]: VRRP_Instance(VIP_1) Entering MASTER STATE

Sep 04 11:40:23 happiness Keepalived_vrrp[12989]: VRRP_Instance(VIP_1) setting protocol VIPs.

Sep 04 11:40:23 happiness Keepalived_vrrp[12989]: Sending gratuitous ARP on eno16777736 for 192.168.4.120

Sep 04 11:40:23 happiness Keepalived_vrrp[12989]: VRRP_Instance(VIP_1) Sending/queueing gratuitous ARPs on eno16777736 for 192.168.4.120

Sep 04 11:40:23 happiness Keepalived_vrrp[12989]: Sending gratuitous ARP on eno16777736 for 192.168.4.120

Sep 04 11:40:23 happiness Keepalived_vrrp[12989]: Sending gratuitous ARP on eno16777736 for 192.168.4.120

Sep 04 11:40:23 happiness Keepalived_vrrp[12989]: Sending gratuitous ARP on eno16777736 for 192.168.4.120

Sep 04 11:40:23 happiness Keepalived_vrrp[12989]: Sending gratuitous ARP on eno16777736 for 192.168.4.120

[root@client ~]# for i in {1..20};do curl http://192.168.4.120;done # the client can still access the site

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>
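The failover can also be confirmed from the logs without reading the whole status output. This sketch extracts the most recent state logged for an instance; it assumes the message format shown in the DR2 log above ("VRRP_Instance(NAME) Entering STATE STATE"), which may differ in other keepalived versions, and `last_vrrp_state` is a hypothetical helper name.

```shell
#!/bin/bash
# Print the most recent VRRP state logged for an instance.
# stdin: keepalived log lines; $1: instance name (e.g. VIP_1)
# Returns non-zero if no "Entering ..." message for that instance is found.
last_vrrp_state() {
    awk -v inst="$1" '
        index($0, "VRRP_Instance(" inst ") Entering ") {
            # the state is the word that follows "Entering"
            for (i = 1; i < NF; i++) if ($i == "Entering") state = $(i + 1)
        }
        END { if (state != "") print state; else exit 1 }
    '
}

# Example: journalctl -u keepalived.service --no-pager | last_vrrp_state VIP_1
```

On DR2 this should report MASTER after DR1's keepalived is stopped, and BACKUP again once DR1 returns, since DR1 has the higher priority.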

Keepalived master/master architecture

Modify RS1 and RS2 to add a new VIP:

[root@RS1 ~]# cp RS.sh RS_bak.sh

[root@RS1 ~]# vim RS_bak.sh # add the new VIP

#!/bin/bash

#

vip=192.168.4.121

mask=255.255.255.255

case $1 in

start)

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

ifconfig lo:1 $vip netmask $mask broadcast $vip up

route add -host $vip dev lo:1

;;

stop)

ifconfig lo:1 down

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

;;

*)

echo "Usage $(basename $0) start|stop"

exit 1

;;

esac

[root@RS1 ~]# bash RS_bak.sh start

[root@RS1 ~]# ifconfig

...

lo:0: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 192.168.4.120 netmask 255.255.255.255

loop txqueuelen 0 (Local Loopback)

lo:1: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 192.168.4.121 netmask 255.255.255.255

loop txqueuelen 0 (Local Loopback)

[root@RS1 ~]# scp RS_bak.sh root@192.168.4.119:~

root@192.168.4.119's password:

RS_bak.sh 100% 693 0.7KB/s 00:00

[root@RS2 ~]# bash RS_bak.sh start # run the script to add the new VIP

[root@RS2 ~]# ifconfig

...

lo:0: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 192.168.4.120 netmask 255.255.255.255

loop txqueuelen 0 (Local Loopback)

lo:1: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 192.168.4.121 netmask 255.255.255.255

loop txqueuelen 0 (Local Loopback)
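RS.sh and RS_bak.sh differ only in the VIP and the loopback alias, and running `RS_bak.sh stop` resets the ARP parameters to 0 while lo:0 may still hold the first VIP. One way to avoid both issues is a single script that handles every VIP together. The sketch below is an assumption of mine rather than part of the original setup: `rs_vip_cmds` and `arp_cmds` are hypothetical helpers that only print the commands, so they can be reviewed first and then piped to `bash` as root.

```shell
#!/bin/bash
# Print the four ARP sysctl writes. $1: arp_ignore value, $2: arp_announce value
arp_cmds() {
    local iface
    for iface in all lo; do
        echo "echo $1 > /proc/sys/net/ipv4/conf/$iface/arp_ignore"
        echo "echo $2 > /proc/sys/net/ipv4/conf/$iface/arp_announce"
    done
}

# Print (do not run) the commands that bind VIPs to lo:0, lo:1, ...
# Usage: rs_vip_cmds start|stop VIP [VIP...]
rs_vip_cmds() {
    local action=$1 i=0 vip
    shift
    case $action in
    start)
        arp_cmds 1 2
        for vip in "$@"; do
            echo "ifconfig lo:$i $vip netmask 255.255.255.255 broadcast $vip up"
            echo "route add -host $vip dev lo:$i"
            i=$((i + 1))
        done
        ;;
    stop)
        for vip in "$@"; do
            echo "ifconfig lo:$i down"
            i=$((i + 1))
        done
        # Restore the ARP defaults only after every VIP alias is down.
        arp_cmds 0 0
        ;;
    *)
        echo "Usage: rs_vip_cmds start|stop VIP [VIP...]" >&2
        return 1
        ;;
    esac
}

# Example: rs_vip_cmds start 192.168.4.120 192.168.4.121 | bash
```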

Modify DR1 and DR2:

[root@DR1 ~]# vim /etc/keepalived/keepalived.conf # edit DR1's configuration: add a new instance and configure a virtual server group

...

vrrp_instance VIP_2 {

state BACKUP

interface eno16777736

virtual_router_id 2

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass UU**99^^

}

virtual_ipaddress {

192.168.4.121/24 dev eno16777736 label eno16777736:1

}

}

virtual_server_group ngxsrvs {

192.168.4.120 80

192.168.4.121 80

}

virtual_server group ngxsrvs {

...

}

[root@DR1 ~]# systemctl restart keepalived.service # restart the service

[root@DR1 ~]# ifconfig # eno16777736:1 is visible on DR1 at this point because DR2 has not been configured yet

eno16777736: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.4.116 netmask 255.255.255.0 broadcast 192.168.4.255

inet6 fe80::20c:29ff:fe93:270f prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:93:27:0f txqueuelen 1000 (Ethernet)

RX packets 54318 bytes 5480463 (5.2 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 38301 bytes 3274990 (3.1 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eno16777736:0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.4.120 netmask 255.255.255.0 broadcast 0.0.0.0

ether 00:0c:29:93:27:0f txqueuelen 1000 (Ethernet)

eno16777736:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.4.121 netmask 255.255.255.0 broadcast 0.0.0.0

ether 00:0c:29:93:27:0f txqueuelen 1000 (Ethernet)

[root@DR1 ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.4.120:80 rr

-> 192.168.4.118:80 Route 1 0 0

-> 192.168.4.119:80 Route 1 0 0

TCP 192.168.4.121:80 rr

-> 192.168.4.118:80 Route 1 0 0

-> 192.168.4.119:80 Route 1 0 0

[root@DR2 ~]# vim /etc/keepalived/keepalived.conf # edit DR2's configuration: add the instance and configure the virtual server group

...

vrrp_instance VIP_2 {

state MASTER

interface eno16777736

virtual_router_id 2

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass UU**99^^

}

virtual_ipaddress {

192.168.4.121/24 dev eno16777736 label eno16777736:1

}

}

virtual_server_group ngxsrvs {

192.168.4.120 80

192.168.4.121 80

}

virtual_server group ngxsrvs {

...

}

[root@DR2 ~]# systemctl restart keepalived.service # restart the service

[root@DR2 ~]# ifconfig

eno16777736: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.4.117 netmask 255.255.255.0 broadcast 192.168.4.255

inet6 fe80::20c:29ff:fe3d:a31b prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:3d:a3:1b txqueuelen 1000 (Ethernet)

RX packets 67943 bytes 6314537 (6.0 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 23250 bytes 2153847 (2.0 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eno16777736:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.4.121 netmask 255.255.255.0 broadcast 0.0.0.0

ether 00:0c:29:3d:a3:1b txqueuelen 1000 (Ethernet)

[root@DR2 ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.4.120:80 rr

-> 192.168.4.118:80 Route 1 0 0

-> 192.168.4.119:80 Route 1 0 0

TCP 192.168.4.121:80 rr

-> 192.168.4.118:80 Route 1 0 0

-> 192.168.4.119:80 Route 1 0 0
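In the master/master layout each director is MASTER for one instance and BACKUP for the other, with mirrored priorities (VIP_1: DR1 100, DR2 90; VIP_2: DR1 90, DR2 100). A quick way to compare the two configuration files is to list the role of every instance. This is a sketch with a hypothetical helper name, `vrrp_roles`; it assumes each instance block contains one state line and one priority line.

```shell
#!/bin/bash
# List "<instance> <state> <priority>" for every vrrp_instance
# found in a keepalived.conf read from stdin.
vrrp_roles() {
    awk '
        # find "vrrp_instance NAME" anywhere on the line
        { for (i = 1; i < NF; i++)
              if ($i == "vrrp_instance") { name = $(i + 1); state = prio = "" } }
        $1 == "state"    { state = $2 }
        $1 == "priority" { prio = $2 }
        # print once per instance, as soon as both values are known
        name != "" && state != "" && prio != "" { print name, state, prio; name = "" }
    '
}

# Example: vrrp_roles < /etc/keepalived/keepalived.conf
```

Run it on DR1 and DR2: each instance should be MASTER on exactly one director, and that director should carry the higher priority for it.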

Client test:

[root@client ~]# for i in {1..20};do curl http://192.168.4.120;done

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

[root@client ~]# for i in {1..20};do curl http://192.168.4.121;done

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>

<h1> 192.168.4.119 RS2 server</h1>

<h1> 192.168.4.118 RS1 server </h1>
