Data Deduplication Workflow Part 1

Data deduplication provides a new approach to store data and eliminate duplicate data in chunk level.

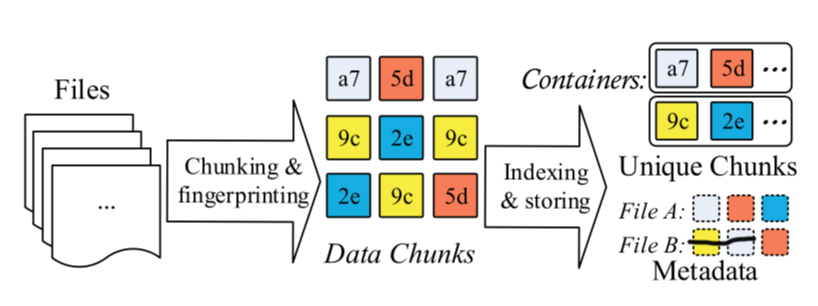

A typical data deduplication workflow can be explained like this.

File metadata describes how to restore the file use unique chunks.

Chunk level deduplication approach has five key stages.

- Chunking

- Fingerprinting

- Indexing fringerprints

- Further compression

- Storage management

Different Stage has its own challenges, which may become the bottleneck for restoring file or compressing files.

Chunking

At the chunking stage, we should split the data stream into chunks, which can be presented at the fingerprints.

The different method splitting data streaming has different result and different efficiency.

The splitting method can be divided into two categories:

Fixed Size Chunking, which just split the data stream into fixed size chunk, simply and easily .

Content Defined Chunking, which split the data into variable size chunk, depending on the content.

Although fixed size chunking is simple and quick, the biggest problem is Boundary Shift. Boundary Shift Problem is when little part of data stream is modified, all the subsequent chunks will be changed, because of the boundary is shifted.

Content defined chunking uses a sliding-window technique on the content of data stream and computes a hash value of the window. If the hash value is satisfied some predefined conditions, it will generate a chunk.

Chunk's size can also be optimized. If we use CDC (content defined chunking), the size of chunking can not be in charge. On some extremely condition, it will generate too large or too small chunking. If a chunking is too large, the compression ratio will decrease. Because the large chunk can hide duplicates from being detected. If a chunking is too small, the file metadata will increase. What's more, it can cause indexing fingerprints problem. So we can define the max and min chunk size.

Chunking still has some problems such as how to detect the deduplicate accurately, how to accelerate computing time cost.

Data Deduplication Workflow Part 1的更多相关文章

- Data De-duplication

偶尔看到data deduplication的博客,还挺有意思,记录之 http://blog.csdn.net/liuben/article/details/5829083?reload http: ...

- 大数据去重(data deduplication)方案

数据去重(data deduplication)是大数据领域司空见惯的问题了.除了统计UV等传统用法之外,去重的意义更在于消除不可靠数据源产生的脏数据--即重复上报数据或重复投递数据的影响,使计算产生 ...

- Note: Transparent data deduplication in the cloud

What Design and implement ClearBox which allows a storage service provider to transparently attest t ...

- 论文阅读 Prefetch-aware fingerprint cache management for data deduplication systems

论文链接 https://link.springer.com/article/10.1007/s11704-017-7119-0 这篇论文试图解决的问题是在cache 环节之前,prefetch-ca ...

- Data Deduplication in Windows Server 2012

https://blogs.technet.microsoft.com/filecab/2012/05/20/introduction-to-data-deduplication-in-windows ...

- Note: File Recipe Compression in Data Deduplication Systems

Zero-Chunk Suppression 检测全0数据块,将其用预先计算的自身的指纹信息代替. Detect zero chunks and replace them with a special ...

- SharePoint 2013 create workflow by SharePoint Designer 2013

这篇文章主要基于上一篇http://www.cnblogs.com/qindy/p/6242714.html的基础上,create a sample workflow by SharePoint De ...

- Seven Python Tools All Data Scientists Should Know How to Use

Seven Python Tools All Data Scientists Should Know How to Use If you’re an aspiring data scientist, ...

- 重复数据删除(De-duplication)技术研究(SourceForge上发布dedup util)

dedup util是一款开源的轻量级文件打包工具,它基于块级的重复数据删除技术,可以有效缩减数据容量,节省用户存储空间.目前已经在Sourceforge上创建项目,并且源码正在不断更新中.该工具生成 ...

随机推荐

- Sublime Text 3 配置 Phpcs

Phpcs 插件介绍 可以为 Sublime Text 编辑器提供代码格式检测的功能,使用以下工具(全部可选): PHP_CodeSniffer (phpcs) Linter (php -l) PHP ...

- 确认自己所用的python版本

总结: 目前有两个版本的python处于活跃状态:python2,python3 有多种流行的python运行环境:cpython(应用最广泛的python解释器,如无对解释器有要求,一般用这个,默认 ...

- Python读取excel 数据

1.安装xlrd 2.官网 通过官网来查看如何使用python读取Excel,python excel官网: http://www.python-excel.org/ 实例: (1)Excel内容 把 ...

- 游戏服务器和Web服务器的区别

用Go语言写游戏服务器也有一个多月了,也能够明显的感受到两者的区别.这篇文章就是想具体的聊聊其中的区别.当然,在了解区别之间,我们先简单的了解一下Go语言本身. 1. Go语言的特点 Go语言跟其他的 ...

- 02-19 k近邻算法(鸢尾花分类)

[TOC] 更新.更全的<机器学习>的更新网站,更有python.go.数据结构与算法.爬虫.人工智能教学等着你:https://www.cnblogs.com/nickchen121/ ...

- A-08 拉格朗日对偶性

目录 拉格朗日对偶性 一.原始问题 1.1 约束最优化问题 1.2 广义拉格朗日函数 1.3 约束条件的考虑 二.对偶问题 三.原始问题和对偶问题的关系 3.1 定理1 3.2 推论1 3.3 定理2 ...

- 洛谷 P3745 [六省联考2017]期末考试

题目描述 有 nnn 位同学,每位同学都参加了全部的 mmm 门课程的期末考试,都在焦急的等待成绩的公布. 第 iii 位同学希望在第 tit_iti 天或之前得知所有课程的成绩.如果在第 tit_ ...

- 阿里云学生服务器+WordPress搭建个人博客

搭建过程: 第一步:首先你需要一台阿里云服务器ECS,如果你是学生,可以享受学生价9.5元/月 (阿里云翼计划:https://promotion.aliyun.com/ntms/act/campus ...

- 算法学习之剑指offer(十)

一 题目描述 请实现一个函数用来判断字符串是否表示数值(包括整数和小数).例如,字符串"+100","5e2","-123","3 ...

- 创建SSM项目所需

一.mybatis所需: 1.相关jar包 2.创数据库+Javabean类 3.接口+写SQL的xml映射文件 4.核心配置文件:SqlMapConfig.xml 二.springMVC所需: 1. ...