使用R语言和XML包抓取网页数据-Scraping data from web pages in R with XML package

In the last years a lot of data has been released publicly in different formats, but sometimes the data we're interested in are still inside the HTML of a web page: let's see how to get those data.

One of the existing packages for doing this job is the XML package. This package allows us to read and create XML and HTML documents; among the many features, there's a function called readHTMLTable() that analyze the parsed HTML and returns the tables present in the page. The details of the package are available in the official documentation of the package.

Let's start.

Suppose we're interested in the italian demographic info present in this page http://sdw.ecb.europa.eu/browse.do?node=2120803 from the EU website. We start loading and parsing the page:

page <- "http://sdw.ecb.europa.eu/browse.do?node=2120803"

parsed <- htmlParse(page)

Now that we have parsed HTML, we can use the readHTMLTable() function to return a list with all the tables present in the page; we'll call the function with these parameters:

- parsed: the parsed HTML

- skip.rows: the rows we want to skip (at the beginning of this table there are a couple of rows that don't contain data but just formatting elements)

- colClasses: the datatype of the different columns of the table (in our case all the columns have integer values); the rep() function is used to replicate 31 times the "integer" value

table <- readHTMLTable(parsed, skip.rows=c(1,3,4,5), colClasses = c(rep("integer", 31)))

As we can see from the page source code, this web page contains six HTML tables; the one that contains the data we're interested in is the fifth, so we extract that one from the list of tables, as a data frame:

values <- as.data.frame(table[5])

Just for convenience, we rename the columns with the period and italian data:

# renames the columns for the period and Italy

colnames(values)[1] <- 'Period'

colnames(values)[19] <- 'Italy'

The italian data lasts from 1990 to 2014, so we have to subset only those rows and, of course, only the two columns of period and italian data:

# subsets the data: we are interested only in the first and the 19th column (period and italian info)

ids <- values[c(1,19)] # Italy has only 25 years of info, so we cut away the other rows

ids <- as.data.frame(ids[1:25,])

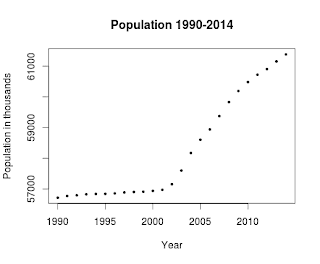

Now we can plot these data calling the plot function with these parameters:

- ids: the data to plot

- xlab: the label of the X axis

- ylab: the label of the Y axis

- main: the title of the plot

- pch: the symbol to draw for evey point (19 is a solid circle: look here for an overview)

- cex: the size of the symbol

plot(ids, xlab="Year", ylab="Population in thousands", main="Population 1990-2014", pch=19, cex=0.5)

and here is the result:

Here's the full code, also available on my github:

library(XML) # sets the URL

url <- "http://sdw.ecb.europa.eu/browse.do?node=2120803" # let the XML library parse the HTMl of the page

parsed <- htmlParse(url) # reads the HTML table present inside the page, paying attention

# to the data types contained in the HTML table

table <- readHTMLTable(parsed, skip.rows=c(1,3,4,5), colClasses = c(rep("integer", 31) )) # this web page contains seven HTML pages, but the one that contains the data

# is the fifth

values <- as.data.frame(table[5]) # renames the columns for the period and Italy

colnames(values)[1] <- 'Period'

colnames(values)[19] <- 'Italy' # now subsets the data: we are interested only in the first and

# the 19th column (period and Italy info)

ids <- values[c(1,19)] # Italy has only 25 year of info, so we cut away the others

ids <- as.data.frame(ids[1:25,]) # plots the data

plot(ids, xlab="Year", ylab="Population in thousands", main="Population 1990-2014", pch=19, cex=0.5) from: http://andreaiacono.blogspot.com/2014/01/scraping-data-from-web-pages-in-r-with.html

使用R语言和XML包抓取网页数据-Scraping data from web pages in R with XML package的更多相关文章

- java抓取网页数据,登录之后抓取数据。

最近做了一个从网络上抓取数据的一个小程序.主要关于信贷方面,收集的一些黑名单网站,从该网站上抓取到自己系统中. 也找了一些资料,觉得没有一个很好的,全面的例子.因此在这里做个笔记提醒自己. 首先需要一 ...

- Asp.net 使用正则和网络编程抓取网页数据(有用)

Asp.net 使用正则和网络编程抓取网页数据(有用) Asp.net 使用正则和网络编程抓取网页数据(有用) /// <summary> /// 抓取网页对应内容 /// </su ...

- 使用JAVA抓取网页数据

一.使用 HttpClient 抓取网页数据 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 ...

- 使用HtmlAgilityPack批量抓取网页数据

原文:使用HtmlAgilityPack批量抓取网页数据 相关软件点击下载登录的处理.因为有些网页数据需要登陆后才能提取.这里要使用ieHTTPHeaders来提取登录时的提交信息.抓取网页 Htm ...

- web scraper 抓取网页数据的几个常见问题

如果你想抓取数据,又懒得写代码了,可以试试 web scraper 抓取数据. 相关文章: 最简单的数据抓取教程,人人都用得上 web scraper 进阶教程,人人都用得上 如果你在使用 web s ...

- c#抓取网页数据

写了一个简单的抓取网页数据的小例子,代码如下: //根据Url地址得到网页的html源码 private string GetWebContent(string Url) { string strRe ...

- 【iOS】正則表達式抓取网页数据制作小词典

版权声明:本文为博主原创文章,未经博主同意不得转载. https://blog.csdn.net/xn4545945/article/details/37684127 应用程序不一定要自己去提供数据. ...

- 01 UIPath抓取网页数据并导出Excel(非Table表单)

上次转载了一篇<UIPath抓取网页数据并导出Excel>的文章,因为那个导出的是table标签中的数据,所以相对比较简单.现实的网页中,有许多不是通过table标签展示的,那又该如何处理 ...

- Node.js的学习--使用cheerio抓取网页数据

打算要写一个公开课网站,缺少数据,就决定去网易公开课去抓取一些数据. 前一阵子看过一段时间的Node.js,而且Node.js也比较适合做这个事情,就打算用Node.js去抓取数据. 关键是抓取到网页 ...

随机推荐

- 在linux下安装sbt

1.到官方网站下载deb包,下载地址:https://dl.bintray.com/sbt/debian/sbt-1.0.3.deb 2.点击下载的deb包进行安装 3.安装完成后,在terminal ...

- thinkphp5.0 配置格式

ThinkPHP支持多种格式的配置格式,但最终都是解析为PHP数组的方式. PHP数组定义 返回PHP数组的方式是默认的配置定义格式,例如: //项目配置文件 return [ // 默认模块名 'd ...

- .htaccess文件

前言 看了几篇文章,发现自己对于如何维护普通的服务器安全完全不会,先从简单的.htaccess来研究吧 .htaccess文件的作用,就是更改httpd.ini文件中的配置,但作用范围仅限当前文件夹 ...

- python3 爬虫教学之爬取链家二手房(最下面源码) //以更新源码

前言 作为一只小白,刚进入Python爬虫领域,今天尝试一下爬取链家的二手房,之前已经爬取了房天下的了,看看链家有什么不同,马上开始. 一.分析观察爬取网站结构 这里以广州链家二手房为例:http:/ ...

- Python函数系列-一个简单的生成器的例子

def consumer(): while True: x = yield print('处理了数据:',x) def producer(): pass c = consumer() #构建一个生成器 ...

- 【JAVAWEB学习笔记】19_事务概述、操作、特性和隔离级别

事务 学习目标 案例-完成转账 一.事务概述 1.什么是事务 一件事情有n个组成单元 要不这n个组成单元同时成功 要不n个单元就同时失败 就是将n个组成单元放到一个事务中 2.mysql的事务 默认的 ...

- 关于maven工程的几个BUG

换了个新的环境,重新导入的maven工程出现了2个BUG: 1.Could not calculate build plan: Plugin org.apache.maven.plugins:mave ...

- 详解Ubuntu Server下启动/停止/重启MySQL数据库的三种方式(ubuntu 16.04)

启动mysql: 方式一:sudo /etc/init.d/mysql start 方式二:sudo service mysql start 停止mysql: 方式一:sudo /etc/init.d ...

- 再谈mobile web retina 下 1px 边框解决方案

本文实际上想说的是ios8下 1px解决方案. 1px的边框在devicePixelRatio = 2的retina屏下会显示成2px,在iphone 6 plug 下,更显示成3px.由其影响美感. ...

- 「2017 山东一轮集训 Day4」基因

设置 \(\sqrt{n}\) 个关键点,维护出关键点到每个右端点之间的答案以及Pam的左指针,每次暴力向左插入元素即可,为了去重,还需要记录一下Pam上每个节点在每个关键点为左端点插入到时候到最左边 ...