On Using Very Large Target Vocabulary for Neural Machine Translation Candidate Sampling Sampled Softmax

【softmax分类器的加速器】

https://www.tensorflow.org/api_docs/python/tf/nn/sampled_softmax_loss

This is a faster way to train a softmax classifier over a huge number of classes.

【分类的结果集过大,选取子集】

https://www.tensorflow.org/api_guides/python/nn#Candidate_Sampling

Do you want to train a multiclass or multilabel model with thousands or millions of output classes (for example, a language model with a large vocabulary)? Training with a full Softmax is slow in this case, since all of the classes are evaluated for every training example. Candidate Sampling training algorithms can speed up your step times by only considering a small randomly-chosen subset of contrastive classes (called candidates) for each batch of training examples.

https://www.tensorflow.org/extras/candidate_sampling.pdf

【 compute F(x, y) for every class y ∈ L for every training example----耗时点,这是要解决的问题】

What is Candidate Sampling Say we have a multiclass or multilabel problem where each training example (x , ) consists of i Ti a context xi a small (multi)set of target classes Ti out of a large universe L of possible classes. For example, the problem might be to predicting the next word (or the set of future words) in a sentence given the previous words.

We wish to learn a compatibility function F(x, y) which says something about the compatibility of a class y with a context x . For example the probability of the class given the context.

“Exhaustive” training methods such as softmax and logistic regression require us to compute F(x, y) for every class y ∈ L for every training example. When |L| is very large, this can be prohibitively expensive.

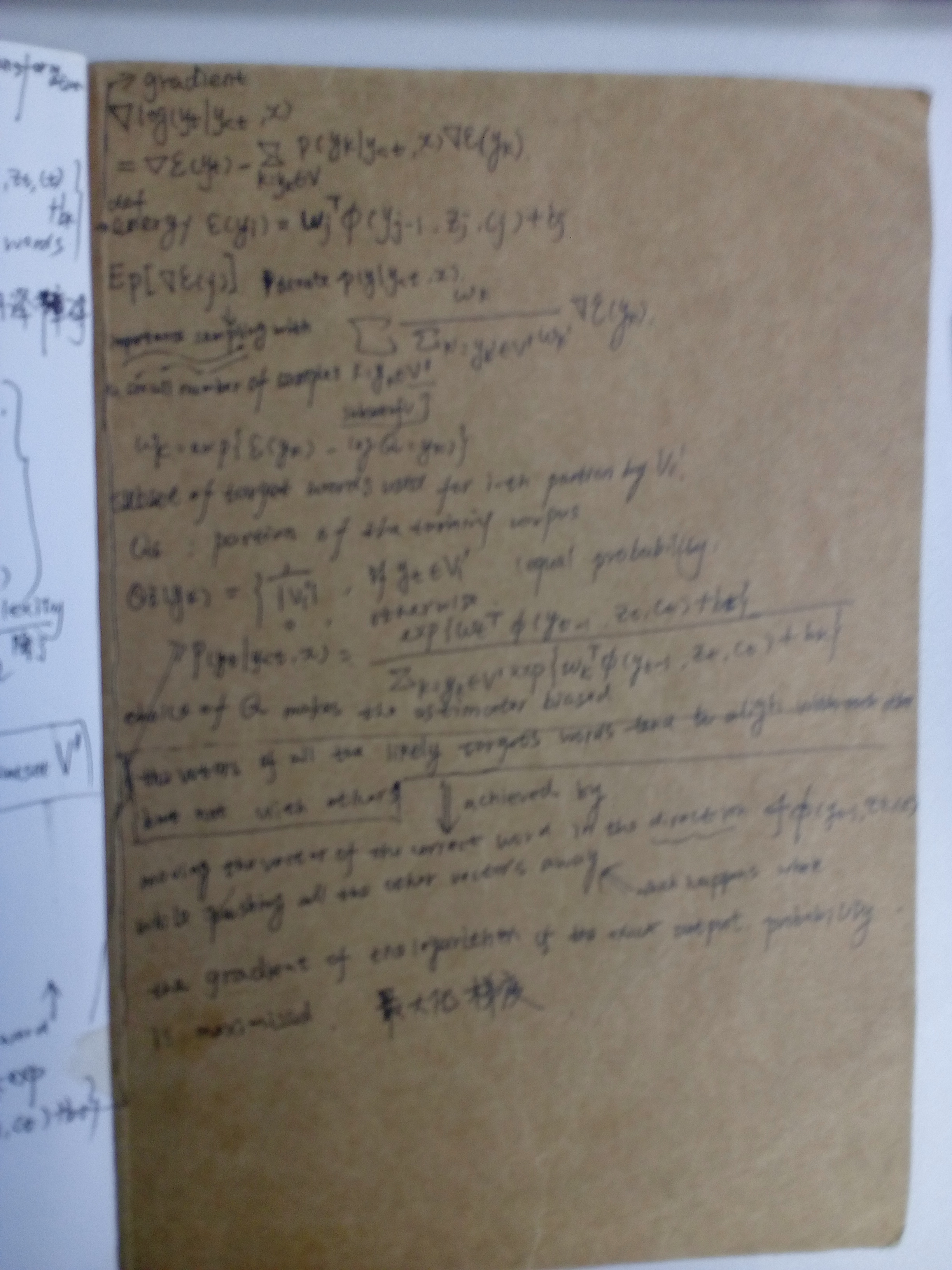

【the model having a very large target vocabulary by selecting only a small subset of the whole target vocabulary:子集】

https://arxiv.org/pdf/1412.2007.pdf

Neural machine translation, a recently proposed approach to machine translation based purely on neural networks, has shown promising results compared to the existing approaches such as phrase-based statistical machine translation. Despite its recent success, neural machine translation has its limitation in handling a larger vocabulary, as training complexity as well as decoding complexity increase proportionally to the number of target words. In this paper, we propose a method that allows us to use a very large target vocabulary without increasing training complexity, based on importance sampling. We show that decoding can be efficiently done even with the model having a very large target vocabulary by selecting only a small subset of the whole target vocabulary. The models trained by the proposed approach are empirically found to outperform the baseline models with a small vocabulary as well as the LSTM-based neural machine translation models. Furthermore, when we use the ensemble of a few models with very large target vocabularies, we achieve the state-of-the-art translation performance (measured by BLEU) on the English->German translation and almost as high performance as state-of-the-art English->French translation system.

On Using Very Large Target Vocabulary for Neural Machine Translation Candidate Sampling Sampled Softmax的更多相关文章

- 课程五(Sequence Models),第三周(Sequence models & Attention mechanism) —— 1.Programming assignments:Neural Machine Translation with Attention

Neural Machine Translation Welcome to your first programming assignment for this week! You will buil ...

- Sequence Models Week 3 Neural Machine Translation

Neural Machine Translation Welcome to your first programming assignment for this week! You will buil ...

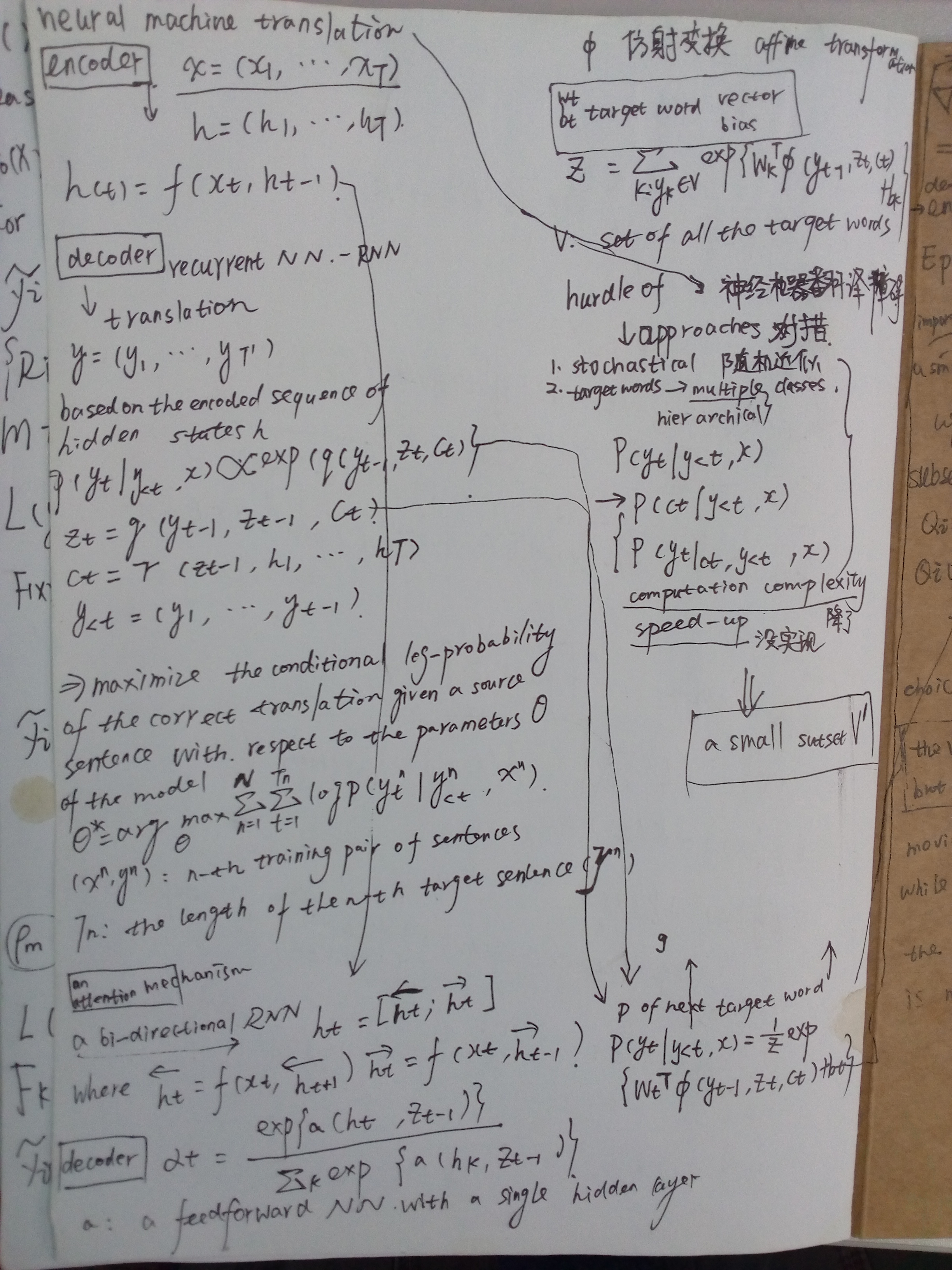

- 神经机器翻译 - NEURAL MACHINE TRANSLATION BY JOINTLY LEARNING TO ALIGN AND TRANSLATE

论文:NEURAL MACHINE TRANSLATION BY JOINTLY LEARNING TO ALIGN AND TRANSLATE 综述 背景及问题 背景: 翻译: 翻译模型学习条件分布 ...

- 对Neural Machine Translation by Jointly Learning to Align and Translate论文的详解

读论文 Neural Machine Translation by Jointly Learning to Align and Translate 这个论文是在NLP中第一个使用attention机制 ...

- Effective Approaches to Attention-based Neural Machine Translation(Global和Local attention)

这篇论文主要是提出了Global attention 和 Local attention 这个论文有一个译文,不过我没细看 Effective Approaches to Attention-base ...

- 【转载 | 翻译】Visualizing A Neural Machine Translation Model(神经机器翻译模型NMT的可视化)

转载并翻译Jay Alammar的一篇博文:Visualizing A Neural Machine Translation Model (Mechanics of Seq2seq Models Wi ...

- [笔记] encoder-decoder NEURAL MACHINE TRANSLATION BY JOINTLY LEARNING TO ALIGN AND TRANSLATE

原文地址 :[1409.0473] Neural Machine Translation by Jointly Learning to Align and Translate (arxiv.org) ...

- Introduction to Neural Machine Translation - part 1

The Noise Channel Model \(p(e)\): the language Model \(p(f|e)\): the translation model where, \(e\): ...

- 论文阅读 | Robust Neural Machine Translation with Doubly Adversarial Inputs

(1)用对抗性的源实例攻击翻译模型; (2)使用对抗性目标输入来保护翻译模型,提高其对对抗性源输入的鲁棒性. 生成对抗输入:基于梯度 (平均损失) -> AdvGen 我们的工作处理由白盒N ...

随机推荐

- Codeforces 509E(思维)

...

- 设计模式(1)---Factory Pattern

针对的问题:定义一个创建对象的接口,让其子类自己决定实例化哪一个工厂类,工厂模式使其创建过程延迟到子类进行. 第一步:创建接口 //创建一个接口 public interface Shape { pu ...

- Ruby on rails初体验(三)

继体验一和体验二中的内容,此节将体验二中最开始的目标来实现,体验二中已经将部门添加的部分添加到了公司的show页面,剩下的部分是将部门列表也添加到公司的显示页面,整体思路和体验二中相同,但是还是会有点 ...

- fauxbar.bak

{"options": { "almostdone":"0", "backup_searchEngines":" ...

- mysql日常运维与参数调优

日常运维 DBA运维工作 日常 导数据,数据修改,表结构变更 加权限,问题处理 其它 数据库选型部署,设计,监控,备份,优化等 日常运维工作: 导数据及注意事项 数据修改及注意事项 表结构变更及注意事 ...

- recovery怎么刷机,recovery是什么意思

转自:http://www.3lian.com/edu/2012/04-11/25212.html Recovery是什么意思? recovery翻译过来就是“恢复”的意思,是开机后通过特殊按键组合( ...

- 配置和使用服务器Tomcat连接池

1.配置Tomcat6.0根目录\conf\context.xml <?xml version='1.0' encoding='utf-8'?> <!-- Licensed to t ...

- 【DQ冰淇淋】—— Babylon 冰淇淋三维互动营销项目总结

前言:在学习过Babylon.js基础之后,我上手的第一个网页端3D效果制作项目就是‘DQ冰淇淋’.这个小项目应用到了Babylon最基础的知识,既可以选味道,选点心,也可以旋转.倒置冰淇淋,互动起来 ...

- 【温故知新】——BABYLON.js学习之路·前辈经验(一)

前言:公司用BABYLON作为主要的前端引擎,同事们在长时间的项目实践中摸索到有关BABYLON的学习路径和问题解决方法,这里只作为温故知新. 一.快速学习BABYLON 1. 阅读Babylon[基 ...

- TP如何进行批量查询

public function getUserInfo($uid){ if(is_null($uid) || empty($uid)){return false;} if(is_arr ...