CentOS 7.4 + Hadoop 2.7.5 Installation, Configuration and Management (Pseudo-Distributed)

1. Planning

1.1. Machine List (Roles)

| NameNode | SecondaryNameNode | DataNodes |
|---|---|---|
| 192.168.1.80 | 192.168.1.80 | 192.168.1.80 |

1.2. Machine List (Hosts and Users)

| Machine IP | Hostname | Group/User |
|---|---|---|
| 192.168.1.80 | centoshadoop.smartmap.com | hadoop/hadoop |

2. Add a User

[root@centoshadoop ~]# useradd hadoop

[root@centoshadoop ~]# passwd hadoop

Changing password for user hadoop.

New password:

BAD PASSWORD: The password is shorter than 8 characters

Retype new password:

passwd: all authentication tokens updated successfully.

[root@centoshadoop ~]#

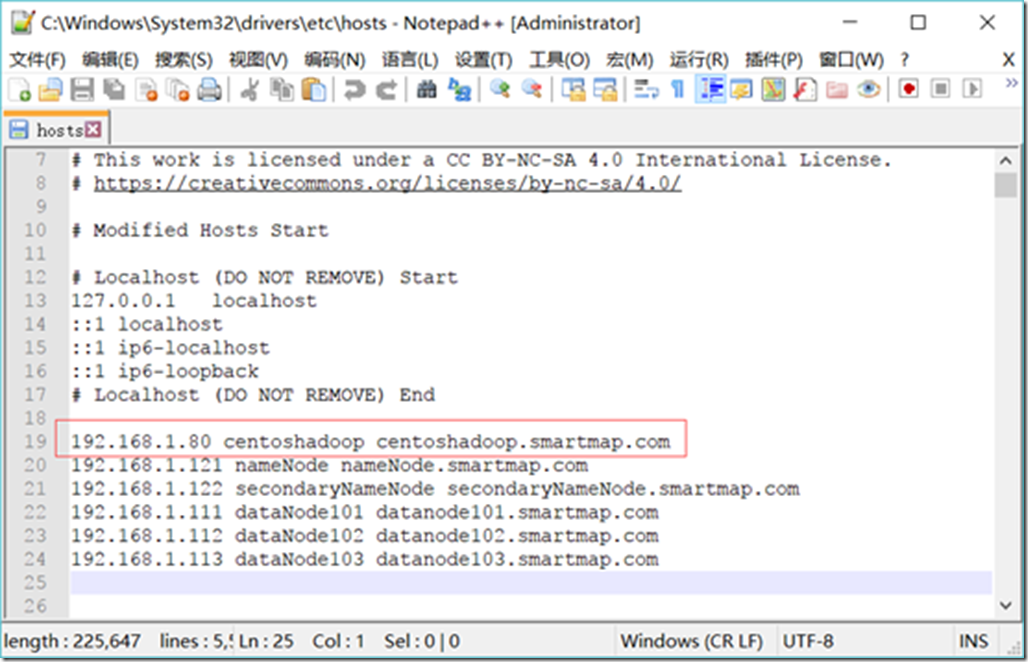

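3. Map the IP Address to the Hostname

[root@centoshadoop ~]# vi /etc/hosts

192.168.1.80 centoshadoop centoshadoop.smartmap.com

Add the same mapping to the hosts file on the Windows machine as well (the file is in C:\Windows\System32\drivers\etc) so that the hostname also resolves there.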
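If you script the setup instead of editing the file in vi, you can add the hosts entry idempotently, so re-running the script does not create duplicate lines. The following is a minimal sketch that assumes bash and awk. `ensure_hosts_entry` is a hypothetical helper name and is not part of CentOS or Hadoop:

```bash
#!/usr/bin/env bash
# Append "IP NAME [NAME...]" to a hosts-format file unless some line already
# starts with that IP. Intended for /etc/hosts (run as root); any path works.
# Usage: ensure_hosts_entry FILE IP NAME [NAME...]
ensure_hosts_entry() {
    local file="$1" ip="$2"
    shift 2
    # Compare the first field exactly, so 192.168.1.8 does not match 192.168.1.80.
    if [ -f "$file" ] && awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file"; then
        echo "entry for $ip already present in $file"
        return 0
    fi
    printf '%s %s\n' "$ip" "$*" >> "$file"
}
```

On this machine you would call it as root with `ensure_hosts_entry /etc/hosts 192.168.1.80 centoshadoop centoshadoop.smartmap.com`. The function only checks the IP column. If a line for the same IP already exists with different hostnames, the function leaves it untouched, and you must fix it by hand.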

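4. Passwordless SSH Login

4.1. Scope of Passwordless Login

Passwordless login must work with both the IP address and the hostname:

1) The NameNode can log in to every DataNode without a password

2) The SecondaryNameNode can log in to every DataNode without a password

3) The NameNode can log in to itself without a password

4) The SecondaryNameNode can log in to itself without a password

5) The NameNode can log in to the SecondaryNameNode without a password

6) The SecondaryNameNode can log in to the NameNode without a password

7) Each DataNode can log in to itself without a password

8) DataNodes do not need passwordless login to the NameNode, the SecondaryNameNode, or other DataNodes.

In this pseudo-distributed setup, all three roles run on 192.168.1.80. The requirements therefore reduce to one: the hadoop user must be able to log in to this machine without a password, using either the IP or the hostname.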

4.2. Configure Passwordless SSH

4.2.1. Prepare Keys on All Nodes

4.2.1.1. Create a Key Pair

[hadoop@centoshadoop ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Created directory '/home/hadoop/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:4hSyoWOuj5MS+ME0ukspvAbfowEbv8iL4ZhfFrETtns hadoop@centoshadoop.smartmap.com

The key's randomart image is:

+---[RSA 2048]----+

| |

| |

| * . |

| oo O . |

|+++.* o S |

|*B+. * . |

|=X+o+ E |

|%B+=o. |

|@X*. . |

+----[SHA256]-----+

[hadoop@centoshadoop ~]$ ls -la .ssh/

total 8

drwx------ 2 hadoop hadoop 38 Apr 16 21:00 .

drwx------ 3 hadoop hadoop 74 Apr 16 21:00 ..

-rw------- 1 hadoop hadoop 1675 Apr 16 21:00 id_rsa

-rw-r--r-- 1 hadoop hadoop 414 Apr 16 21:00 id_rsa.pub

[hadoop@centoshadoop ~]$

4.2.1.2. Create the File That Stores Authorized Public Keys

[hadoop@centoshadoop ~]$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[hadoop@centoshadoop ~]$ chmod 600 ~/.ssh/authorized_keys

[hadoop@centoshadoop ~]$ ls -la ~/.ssh/

total 12

drwx------ 2 hadoop hadoop 61 Apr 16 21:02 .

drwx------ 3 hadoop hadoop 74 Apr 16 21:00 ..

-rw------- 1 hadoop hadoop 414 Apr 16 21:02 authorized_keys

-rw------- 1 hadoop hadoop 1675 Apr 16 21:00 id_rsa

-rw-r--r-- 1 hadoop hadoop 414 Apr 16 21:00 id_rsa.pub

[hadoop@centoshadoop ~]$
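You can also run the key steps above without prompts: `ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa` creates the key pair with an empty passphrase. Appending the public key is the step most often repeated by accident. Below is a sketch of a re-runnable version that also applies the usual permissions. With its default StrictModes setting, sshd ignores keys in files that other users can write to. The function name `authorize_key` is hypothetical:

```bash
#!/usr/bin/env bash
# Add a public key to authorized_keys once, using the conventional
# permissions (~/.ssh 700, authorized_keys 600).
# Usage: authorize_key PUBKEY_FILE [SSH_DIR]
authorize_key() {
    local pub="$1" dir="${2:-$HOME/.ssh}"
    local keys="$dir/authorized_keys"
    [ -r "$pub" ] || { echo "cannot read $pub" >&2; return 1; }
    mkdir -p "$dir" && chmod 700 "$dir" || return 1
    touch "$keys" && chmod 600 "$keys" || return 1
    # Append only if this exact key line is not already present.
    grep -qxF "$(cat "$pub")" "$keys" || cat "$pub" >> "$keys"
}
```

Run it as the hadoop user: `authorize_key ~/.ssh/id_rsa.pub`.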

4.2.2. Verify

The first connection asks you to confirm the host key. The second connection logs in without any prompt:

[hadoop@centoshadoop ~]$ ssh hadoop@192.168.1.80

The authenticity of host '192.168.1.80 (192.168.1.80)' can't be established.

ECDSA key fingerprint is SHA256:lgN0eOtdLR2eqHh+fabe54DGpV08ZiWo9oWVS60aGzw.

ECDSA key fingerprint is MD5:28:c0:cf:21:35:29:3d:23:d3:62:ca:0e:82:7a:4b:af.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.1.80' (ECDSA) to the list of known hosts.

Last login: Mon Apr 16 21:00:08 2018 from 192.168.1.100

[hadoop@centoshadoop ~]$ exit

logout

Connection to 192.168.1.80 closed.

[hadoop@centoshadoop ~]$ ssh hadoop@192.168.1.80

Last login: Mon Apr 16 21:04:40 2018 from 192.168.1.80

[hadoop@centoshadoop ~]$ exit

logout

Connection to 192.168.1.80 closed.

[hadoop@centoshadoop ~]$

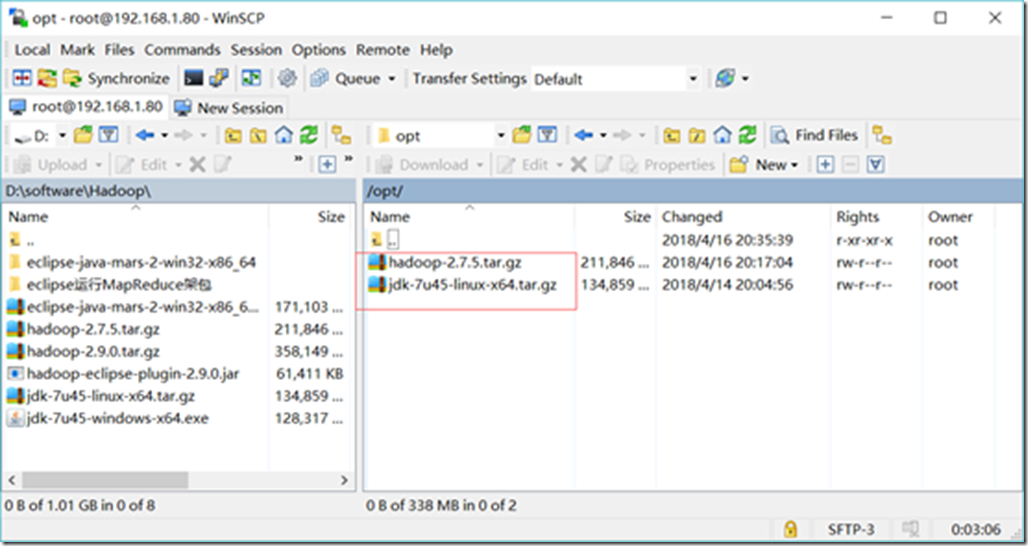
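The manual check above confirms only one address. Section 4.1 requires passwordless login by both IP and hostname, so a small loop can check every name at once. This sketch assumes OpenSSH. With `BatchMode=yes`, ssh fails instead of prompting. As a result, a host whose key you have not accepted yet also shows up as a failure. `check_passwordless_ssh` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Check that non-interactive SSH works to every given address.
# Prints "ok: HOST" or "FAILED: HOST" per address; returns 1 if any failed.
# Usage: check_passwordless_ssh HOST [HOST...]
check_passwordless_ssh() {
    local h failed=0
    for h in "$@"; do
        # BatchMode=yes: never prompt for a password or host-key confirmation.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$h" true </dev/null; then
            echo "ok: $h"
        else
            echo "FAILED: $h"
            failed=1
        fi
    done
    return "$failed"
}
```

For this setup, run `check_passwordless_ssh 192.168.1.80 centoshadoop centoshadoop.smartmap.com`. For any name reported as FAILED, connect to it once interactively to accept the host key, then run the check again.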

5. Upload the Software

Upload the downloaded JDK and Hadoop packages to the server.

As root, upload them to /opt/.

6. Install the JDK

6.1. Extract the JDK Package

[hadoop@centoshadoop ~]$ su - root

Password:

Last login: Mon Apr 16 20:41:23 CST 2018 from 192.168.1.100 on pts/0

[root@centoshadoop ~]# cd /opt/

[root@centoshadoop opt]# ll

total 346708

-rw-r--r-- 1 root root 216929574 Apr 16 20:17 hadoop-2.7.5.tar.gz

-rw-r--r-- 1 root root 138094686 Apr 14 20:04 jdk-7u45-linux-x64.tar.gz

[root@centoshadoop opt]# mkdir java

[root@centoshadoop opt]# tar -zxvf jdk-7u45-linux-x64.tar.gz

jdk1.7.0_45/

jdk1.7.0_45/db/

jdk1.7.0_45/db/lib/

……

jdk1.7.0_45/man/man1/javadoc.1

jdk1.7.0_45/THIRDPARTYLICENSEREADME.txt

jdk1.7.0_45/COPYRIGHT

[root@centoshadoop opt]# ls -la

total 346708

drwxr-xr-x. 4 root root 97 Apr 16 21:14 .

dr-xr-xr-x. 17 root root 244 Apr 16 20:35 ..

-rw-r--r-- 1 root root 216929574 Apr 16 20:17 hadoop-2.7.5.tar.gz

drwxr-xr-x 2 root root 6 Apr 16 21:13 java

drwxr-xr-x 8 10 143 233 Oct 8 2013 jdk1.7.0_45

-rw-r--r-- 1 root root 138094686 Apr 14 20:04 jdk-7u45-linux-x64.tar.gz

[root@centoshadoop opt]# mv jdk1.7.0_45/ java/

[root@centoshadoop opt]# ls -la

total 346708

drwxr-xr-x. 3 root root 78 Apr 16 21:17 .

dr-xr-xr-x. 17 root root 244 Apr 16 20:35 ..

-rw-r--r-- 1 root root 216929574 Apr 16 20:17 hadoop-2.7.5.tar.gz

drwxr-xr-x 3 root root 25 Apr 16 21:17 java

-rw-r--r-- 1 root root 138094686 Apr 14 20:04 jdk-7u45-linux-x64.tar.gz

[root@centoshadoop opt]# cd java/

[root@centoshadoop java]# ls -la

total 0

drwxr-xr-x 3 root root 25 Apr 16 21:17 .

drwxr-xr-x. 3 root root 78 Apr 16 21:17 ..

drwxr-xr-x 8 10 143 233 Oct 8 2013 jdk1.7.0_45

[root@centoshadoop java]# cd ..

[root@centoshadoop opt]#

6.2. Configure Environment Variables

[root@centoshadoop opt]# vi /etc/profile

Append the following lines to the end of the file:

export JAVA_HOME=/opt/java/jdk1.7.0_45

export JRE_HOME=/opt/java/jdk1.7.0_45/jre

export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$PATH

[root@centoshadoop opt]# source /etc/profile

[root@centoshadoop opt]# java -version

java version "1.7.0_45"

Java(TM) SE Runtime Environment (build 1.7.0_45-b18)

Java HotSpot(TM) 64-Bit Server VM (build 24.45-b08, mixed mode)

[root@centoshadoop opt]#

[root@centoshadoop opt]# rm -rf jdk-7u45-linux-x64.tar.gz

[root@centoshadoop opt]#
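Hadoop 2.7 requires Java 7 or later, so a setup script may need to check the installed version rather than rely on a visual check. The sketch below extracts the major version from `java -version` output, which the JDK prints on stderr. The helper name is hypothetical, and the sketch assumes the usual `version "..."` line format:

```bash
#!/usr/bin/env bash
# Read `java -version` output on stdin and print the major Java version:
# "1.7.0_45" -> 7, "1.8.0_161" -> 8, "11.0.2" -> 11.
java_major_version() {
    sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1 |
        awk -F. '{ print ($1 == "1") ? $2 : $1 }'
}
```

Example: `[ "$(java -version 2>&1 | java_major_version)" -ge 7 ] || echo "JDK too old for Hadoop 2.7"`.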

7. Install Hadoop and Configure Its Environment

7.1. Extract the Hadoop Package

[root@centoshadoop opt]# mkdir hadoop

[root@centoshadoop opt]# ls -la

total 211848

drwxr-xr-x. 4 root root 59 Apr 16 21:28 .

dr-xr-xr-x. 17 root root 244 Apr 16 20:35 ..

drwxr-xr-x 2 root root 6 Apr 16 21:28 hadoop

-rw-r--r-- 1 root root 216929574 Apr 16 20:17 hadoop-2.7.5.tar.gz

drwxr-xr-x 3 root root 25 Apr 16 21:17 java

[root@centoshadoop opt]# tar -zxvf hadoop-2.7.5.tar.gz

……

hadoop-2.7.5/share/doc/hadoop-project/hadoop-datajoin/

hadoop-2.7.5/share/doc/hadoop-project/hadoop-ant/

hadoop-2.7.5/share/doc/hadoop-project/hadoop-extras/

hadoop-2.7.5/share/doc/hadoop-project/hadoop-pipes/

hadoop-2.7.5/share/doc/hadoop-project/hadoop-openstack/

hadoop-2.7.5/share/doc/hadoop-project/hadoop-aws/

hadoop-2.7.5/share/doc/hadoop-project/hadoop-azure/

hadoop-2.7.5/share/doc/hadoop-project/hadoop-minicluster/

hadoop-2.7.5/share/doc/hadoop-project/hadoop-sls/

hadoop-2.7.5/share/doc/hadoop-project/hadoop-tools/

hadoop-2.7.5/share/doc/hadoop-project/hadoop-dist/

hadoop-2.7.5/share/doc/hadoop-project/

[root@centoshadoop opt]# ls -la

total 211848

drwxr-xr-x. 5 root root 79 Apr 16 21:31 .

dr-xr-xr-x. 17 root root 244 Apr 16 20:35 ..

drwxr-xr-x 2 root root 6 Apr 16 21:28 hadoop

drwxr-xr-x 9 20415 101 149 Dec 16 09:12 hadoop-2.7.5

-rw-r--r-- 1 root root 216929574 Apr 16 20:17 hadoop-2.7.5.tar.gz

drwxr-xr-x 3 root root 25 Apr 16 21:17 java

[root@centoshadoop opt]# mv hadoop-2.7.5/ hadoop/

[root@centoshadoop opt]# ls -la

total 211848

drwxr-xr-x. 4 root root 59 Apr 16 21:32 .

dr-xr-xr-x. 17 root root 244 Apr 16 20:35 ..

drwxr-xr-x 3 root root 26 Apr 16 21:32 hadoop

-rw-r--r-- 1 root root 216929574 Apr 16 20:17 hadoop-2.7.5.tar.gz

drwxr-xr-x 3 root root 25 Apr 16 21:17 java

[root@centoshadoop opt]# rm -rf hadoop-2.7.5.tar.gz

7.2. Change the Owner of the Hadoop Directory

[root@centoshadoop opt]# chown hadoop:hadoop -R hadoop/

[root@centoshadoop opt]#

7.3. Hadoop Environment Configuration

7.3.1. Add the Hadoop Installation Path to /etc/profile

[root@centoshadoop opt]# vi /etc/profile

Comment out the JDK lines added in 6.2 and replace them with the following block:

# export JAVA_HOME=/opt/java/jdk1.7.0_45

# export JRE_HOME=/opt/java/jdk1.7.0_45/jre

# export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

# export PATH=$JAVA_HOME/bin:$PATH

export JAVA_HOME=/opt/java/jdk1.7.0_45

export CLASSPATH=.:$JAVA_HOME/lib:$CLASSPATH

export HADOOP_HOME=/opt/hadoop/hadoop-2.7.5

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export LD_LIBRARY_PATH=$JAVA_HOME/jre/lib/amd64/server:/usr/local/lib:$HADOOP_HOME/lib/native

export JAVA_LIBRARY_PATH=$LD_LIBRARY_PATH:$JAVA_LIBRARY_PATH

[root@centoshadoop opt]# source /etc/profile

[root@centoshadoop opt]#
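On CentOS 7, /etc/profile itself recommends placing local additions in a script under /etc/profile.d/ instead of editing /etc/profile. As an alternative to the edit above, here is a sketch that writes the core variables from 7.3.1 into such a file. It covers only a subset of the variables: the LD_LIBRARY_PATH and JAVA_LIBRARY_PATH lines are left out. The function name and the target file name hadoop.sh are assumptions:

```bash
#!/usr/bin/env bash
# Write the JDK/Hadoop environment variables into a standalone shell file.
# Usage: write_hadoop_env FILE JAVA_HOME HADOOP_HOME
write_hadoop_env() {
    local file="$1" java_home="$2" hadoop_home="$3"
    # Unquoted heredoc: $java_home and $hadoop_home are expanded now, while the
    # escaped \$ references are written literally and resolved when sourced.
    cat > "$file" <<EOF
export JAVA_HOME=$java_home
export HADOOP_HOME=$hadoop_home
export HADOOP_MAPRED_HOME=\$HADOOP_HOME
export HADOOP_COMMON_HOME=\$HADOOP_HOME
export HADOOP_HDFS_HOME=\$HADOOP_HOME
export YARN_HOME=\$HADOOP_HOME
export HADOOP_CONF_DIR=\$HADOOP_HOME/etc/hadoop
export YARN_CONF_DIR=\$HADOOP_HOME/etc/hadoop
export CLASSPATH=.:\$JAVA_HOME/lib:\$CLASSPATH
export PATH=\$PATH:\$JAVA_HOME/bin:\$HADOOP_HOME/bin:\$HADOOP_HOME/sbin
EOF
}
```

As root, run `write_hadoop_env /etc/profile.d/hadoop.sh /opt/java/jdk1.7.0_45 /opt/hadoop/hadoop-2.7.5`. Then log in again or `source` the file.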

7.3.2. Create Directories

Switch back to the hadoop user and create the temporary, data, PID and log directories:

[root@centoshadoop opt]# exit

logout

[hadoop@centoshadoop ~]$ cd /opt/

[hadoop@centoshadoop opt]$ mkdir /opt/hadoop/tmp

[hadoop@centoshadoop opt]$ mkdir -p /opt/hadoop/data/hdfs/name

[hadoop@centoshadoop opt]$ mkdir -p /opt/hadoop/data/hdfs/data

[hadoop@centoshadoop opt]$ mkdir -p /opt/hadoop/data/hdfs/checkpoint

[hadoop@centoshadoop opt]$ mkdir -p /opt/hadoop/data/yarn/local

[hadoop@centoshadoop opt]$ mkdir -p /opt/hadoop/pid

[hadoop@centoshadoop opt]$ mkdir -p /opt/hadoop/log

[hadoop@centoshadoop opt]$
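The same directory layout can be created in one re-runnable function, because `mkdir -p` succeeds if a directory already exists. This is a sketch: the function name is hypothetical, and the base path defaults to /opt/hadoop as used above:

```bash
#!/usr/bin/env bash
# Create the Hadoop working directories under BASE (default /opt/hadoop).
# Usage: make_hadoop_dirs [BASE]
make_hadoop_dirs() {
    local base="${1:-/opt/hadoop}" d
    for d in tmp data/hdfs/name data/hdfs/data data/hdfs/checkpoint \
             data/yarn/local pid log; do
        mkdir -p "$base/$d" || return 1
    done
}
```

Run it as the hadoop user, after /opt/hadoop has been handed to that user in 7.2.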

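7.3.3. Configure hadoop-env.sh

[hadoop@centoshadoop opt]$ vi /opt/hadoop/hadoop-2.7.5/etc/hadoop/hadoop-env.sh

[hadoop@centoshadoop opt]$

Comment out the default JAVA_HOME line and set the following:

# export JAVA_HOME=${JAVA_HOME}

export JAVA_HOME=/opt/java/jdk1.7.0_45

export HADOOP_PID_DIR=/home/hadoop/pid

export HADOOP_LOG_DIR=/home/hadoop/log

JAVA_HOME is hard-coded because the start scripts launch the daemons over SSH, where the value set in /etc/profile may not be available.

Note that these PID and log paths point to /home/hadoop, not to the /opt/hadoop/pid and /opt/hadoop/log directories created in 7.3.2. The start-dfs.sh output in 8.1.2 confirms that /home/hadoop/log is the directory actually used. Either location works as long as the hadoop user can write to it, but pick one and use it consistently.

[hadoop@centoshadoop opt]$ source /etc/profile

[hadoop@centoshadoop opt]$ source /opt/hadoop/hadoop-2.7.5/etc/hadoop/hadoop-env.sh

[hadoop@centoshadoop opt]$ hadoop version

Hadoop 2.7.5

Subversion https://shv@git-wip-us.apache.org/repos/asf/hadoop.git -r 18065c2b6806ed4aa6a3187d77cbe21bb3dba075

Compiled by kshvachk on 2017-12-16T01:06Z

Compiled with protoc 2.5.0

From source with checksum 9f118f95f47043332d51891e37f736e9

This command was run using /opt/hadoop/hadoop-2.7.5/share/hadoop/common/hadoop-common-2.7.5.jar

[hadoop@centoshadoop opt]$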

7.3.4. Configure yarn-env.sh

[hadoop@centoshadoop opt]$ vi /opt/hadoop/hadoop-2.7.5/etc/hadoop/yarn-env.sh

[hadoop@centoshadoop opt]$

Comment out the sample JAVA_HOME line and point the variable at the installed JDK:

# export JAVA_HOME=/home/y/libexec/jdk1.6.0/

export JAVA_HOME=/opt/java/jdk1.7.0_45

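7.3.5. Configure etc/hadoop/core-site.xml

hadoop.tmp.dir is the base directory for Hadoop's temporary data. fs.defaultFS is the NameNode address that HDFS clients connect to.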

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/hadoop/tmp</value>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.1.80:9000</value>

</property>

</configuration>
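All four *-site.xml files share the same `<property><name>NAME</name><value>VALUE</value></property>` structure, so you can generate them from name=value pairs instead of editing them by hand. Here is a minimal sketch. `hadoop_conf_xml` is a hypothetical helper. It does no XML escaping, so it does not support values that contain `<`, `>` or `&`:

```bash
#!/usr/bin/env bash
# Print a Hadoop *-site.xml document built from NAME=VALUE arguments.
# Usage: hadoop_conf_xml NAME=VALUE [NAME=VALUE...] > core-site.xml
hadoop_conf_xml() {
    local pair
    echo '<?xml version="1.0" encoding="UTF-8"?>'
    echo '<configuration>'
    for pair in "$@"; do
        # Split on the first "=" only, so a value may itself contain "=".
        printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
            "${pair%%=*}" "${pair#*=}"
    done
    echo '</configuration>'
}
```

For example, `hadoop_conf_xml hadoop.tmp.dir=/opt/hadoop/tmp fs.defaultFS=hdfs://192.168.1.80:9000 > /opt/hadoop/hadoop-2.7.5/etc/hadoop/core-site.xml` reproduces the core-site.xml above. The command overwrites the whole file, including the license comment in the stock version.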

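7.3.6. Configure etc/hadoop/hdfs-site.xml

dfs.replication is 1 because there is only one DataNode. The three directory properties point at the directories created in 7.3.2.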

<configuration>

<property>

<name>dfs.namenode.http-address</name>

<value>192.168.1.80:50070</value>

</property>

<property>

<name>dfs.namenode.http-bind-host</name>

<value>192.168.1.80</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>192.168.1.80:50090</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/opt/hadoop/data/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/opt/hadoop/data/hdfs/data</value>

</property>

<property>

<name>dfs.namenode.checkpoint.dir</name>

<value>/opt/hadoop/data/hdfs/checkpoint</value>

</property>

</configuration>
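To double-check what a file actually contains, you can read a property back out of it. When Hadoop is on the PATH, `hdfs getconf -confKey dfs.replication` is the reliable way, since it reports the effective value, including defaults. The sketch below is a rough text-based alternative. It assumes that each `<name>` tag is written exactly as in the files above, with no whitespace inside the tags and no comments containing property tags. `get_conf_value` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Print the <value> of property NAME from a Hadoop *-site.xml file.
# Usage: get_conf_value FILE NAME
get_conf_value() {
    local file="$1" name="$2"
    # Join all lines, then break after every </property> so that each
    # property ends up on a line of its own; keep the first match.
    tr -d '\r\n' < "$file" \
        | sed 's#</property>#&\
#g' \
        | grep -F "<name>$name</name>" \
        | sed 's#.*<value>\([^<]*\)</value>.*#\1#' \
        | head -n 1
}
```

For example, `get_conf_value /opt/hadoop/hadoop-2.7.5/etc/hadoop/hdfs-site.xml dfs.replication` should print 1 for the file above.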

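7.3.7. Configure etc/hadoop/mapred-site.xml

The Hadoop 2.7.5 distribution ships this file only as mapred-site.xml.template. Copy the template to mapred-site.xml in the same directory, then edit the copy. Setting mapreduce.framework.name to yarn makes MapReduce jobs run on YARN.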

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

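7.3.8. Configure etc/hadoop/yarn-site.xml

yarn.nodemanager.aux-services=mapreduce_shuffle enables the shuffle service that MapReduce jobs need on the NodeManager.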

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>192.168.1.80</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/opt/hadoop/data/yarn/local</value>

</property>

</configuration>

7.3.9. Configure etc/hadoop/slaves

[hadoop@centoshadoop hadoop]$ vi /opt/hadoop/hadoop-2.7.5/etc/hadoop/slaves

[hadoop@centoshadoop hadoop]$

List the DataNode hosts, one per line. Here there is only one:

192.168.1.80

8. Start the Cluster

8.1. Start HDFS

8.1.1. Format the NameNode

Format the NameNode once, before the first start:

[hadoop@centoshadoop hadoop]$ hdfs namenode -format

18/04/16 22:24:38 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = centoshadoop.smartmap.com/192.168.1.80

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.7.5
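Running `hdfs namenode -format` again on a NameNode that already holds metadata wipes that metadata and generates a new clusterID. A DataNode whose data directory still carries the old clusterID then refuses to start, with an "Incompatible clusterIDs" error in its log. Here is a minimal guard. It assumes the name directory from hdfs-site.xml and that a formatted directory contains current/VERSION. The function names are hypothetical. `-nonInteractive` makes the format command abort instead of prompting if the directory already exists:

```bash
#!/usr/bin/env bash
# Return 0 if the NameNode metadata directory already looks formatted.
namenode_is_formatted() {
    [ -f "${1:-/opt/hadoop/data/hdfs/name}/current/VERSION" ]
}

# Format the NameNode only if it has not been formatted before.
# Usage: format_namenode_once [NAME_DIR]
format_namenode_once() {
    local name_dir="${1:-/opt/hadoop/data/hdfs/name}"
    if namenode_is_formatted "$name_dir"; then
        echo "NameNode already formatted ($name_dir); skipping" >&2
        return 0
    fi
    hdfs namenode -format -nonInteractive
}
```

Usage: `format_namenode_once /opt/hadoop/data/hdfs/name`.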

8.1.2. Start and Stop HDFS

[hadoop@centoshadoop hadoop]$ . /opt/hadoop/hadoop-2.7.5/sbin/start-dfs.sh

/usr/bin/which: invalid option -- 'b'

/usr/bin/which: invalid option -- 's'

/usr/bin/which: invalid option -- 'h'

Usage: /usr/bin/which [options] [--] COMMAND

[...]

Write the full path of COMMAND(s) to standard output.

Report bugs to <which-bugs@gnu.org>.

Starting namenodes on [centoshadoop]

The authenticity of host 'centoshadoop (192.168.1.80)' can't be established.

ECDSA key fingerprint is SHA256:lgN0eOtdLR2eqHh+fabe54DGpV08ZiWo9oWVS60aGzw.

ECDSA key fingerprint is MD5:28:c0:cf:21:35:29:3d:23:d3:62:ca:0e:82:7a:4b:af.

Are you sure you want to continue connecting (yes/no)? yes

centoshadoop: Warning: Permanently added 'centoshadoop' (ECDSA) to the list of known hosts.

centoshadoop: starting namenode, logging to /home/hadoop/log/hadoop-hadoop-namenode-centoshadoop.smartmap.com.out

192.168.1.80: starting datanode, logging to /home/hadoop/log/hadoop-hadoop-datanode-centoshadoop.smartmap.com.out

Starting secondary namenodes [0.0.0.0]

The authenticity of host '0.0.0.0 (0.0.0.0)' can't be established.

ECDSA key fingerprint is SHA256:lgN0eOtdLR2eqHh+fabe54DGpV08ZiWo9oWVS60aGzw.

ECDSA key fingerprint is MD5:28:c0:cf:21:35:29:3d:23:d3:62:ca:0e:82:7a:4b:af.

Are you sure you want to continue connecting (yes/no)? yes

0.0.0.0: Warning: Permanently added '0.0.0.0' (ECDSA) to the list of known hosts.

0.0.0.0: starting secondarynamenode, logging to /home/hadoop/log/hadoop-hadoop-secondarynamenode-centoshadoop.smartmap.com.out

[hadoop@centoshadoop hadoop]$ jps

8210 NameNode

8334 DataNode

8611 Jps

8495 SecondaryNameNode

[hadoop@centoshadoop hadoop]$

The /usr/bin/which errors come from starting the script with a leading ". ", which sources it into the login shell. A helper script that start-dfs.sh loads (libexec/hdfs-config.sh) runs which "$0". In a sourced script, $0 is "-bash", and which parses it as the options -b, -a, -s and -h. HDFS still starts, but you can avoid the errors by running the script directly, without the leading ". ": /opt/hadoop/hadoop-2.7.5/sbin/start-dfs.sh. The host-key prompts for centoshadoop and 0.0.0.0 appear only on the first start. To stop HDFS, run /opt/hadoop/hadoop-2.7.5/sbin/stop-dfs.sh.

8.2. Start YARN

[hadoop@centoshadoop opt]$ cd /opt/hadoop/hadoop-2.7.5

[hadoop@centoshadoop hadoop-2.7.5]$

[hadoop@centoshadoop hadoop-2.7.5]$ sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /opt/hadoop/hadoop-2.7.5/logs/yarn-hadoop-resourcemanager-centoshadoop.smartmap.com.out

192.168.1.80: starting nodemanager, logging to /opt/hadoop/hadoop-2.7.5/logs/yarn-hadoop-nodemanager-centoshadoop.smartmap.com.out

The YARN daemons write their logs to /opt/hadoop/hadoop-2.7.5/logs, because the HADOOP_LOG_DIR setting in hadoop-env.sh does not apply to them. To move these logs, set YARN_LOG_DIR in yarn-env.sh. After YARN has started, jps lists all five daemons:

[hadoop@centoshadoop hadoop-2.7.5]$

[hadoop@centoshadoop hadoop-2.7.5]$ jps

11478 NodeManager

11373 ResourceManager

10580 SecondaryNameNode

10413 DataNode

10283 NameNode

11792 Jps

[hadoop@centoshadoop hadoop-2.7.5]$
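After both start scripts have run, jps should show the five Hadoop daemons listed above. The sketch below reads jps output and names any daemon that is missing. `check_daemons` is hypothetical, and its daemon list assumes this single-node layout:

```bash
#!/usr/bin/env bash
# Read `jps` output on stdin and print "missing: NAME" for every expected
# daemon that is not running. Returns 0 if all are present, 1 otherwise.
check_daemons() {
    local running missing=0 d
    running="$(awk '{print $2}')"
    for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # -x: whole-line match, so "NameNode" does not match "SecondaryNameNode"
        if ! printf '%s\n' "$running" | grep -qx "$d"; then
            echo "missing: $d"
            missing=1
        fi
    done
    return "$missing"
}
```

Usage: `jps | check_daemons`. If a daemon is missing, check its log to find out why it stopped. In this setup, HDFS logs are under /home/hadoop/log and YARN logs are under /opt/hadoop/hadoop-2.7.5/logs.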

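To see the detailed cause behind a warning, such as the NativeCodeLoader message in the next step, set the root logger to DEBUG before running the command:

export HADOOP_ROOT_LOGGER=DEBUG,console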

8.3. Check Node Status

The hadoop dfsadmin form still works but is deprecated. As the output notes, hdfs dfsadmin -report is the current form.

[hadoop@centoshadoop hadoop-2.7.5]$ hadoop dfsadmin -report

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

18/04/16 23:17:14 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Configured Capacity: 38635831296 (35.98 GB)

Present Capacity: 37050920960 (34.51 GB)

DFS Remaining: 37050908672 (34.51 GB)

DFS Used: 12288 (12 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

-------------------------------------------------

Live datanodes (1):

Name: 192.168.1.80:50010 (centoshadoop)

Hostname: centoshadoop

Decommission Status : Normal

Configured Capacity: 38635831296 (35.98 GB)

DFS Used: 12288 (12 KB)

Non DFS Used: 1584910336 (1.48 GB)

DFS Remaining: 37050908672 (34.51 GB)

DFS Used%: 0.00%

DFS Remaining%: 95.90%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Mon Apr 16 23:17:13 CST 2018

[hadoop@centoshadoop hadoop-2.7.5]$

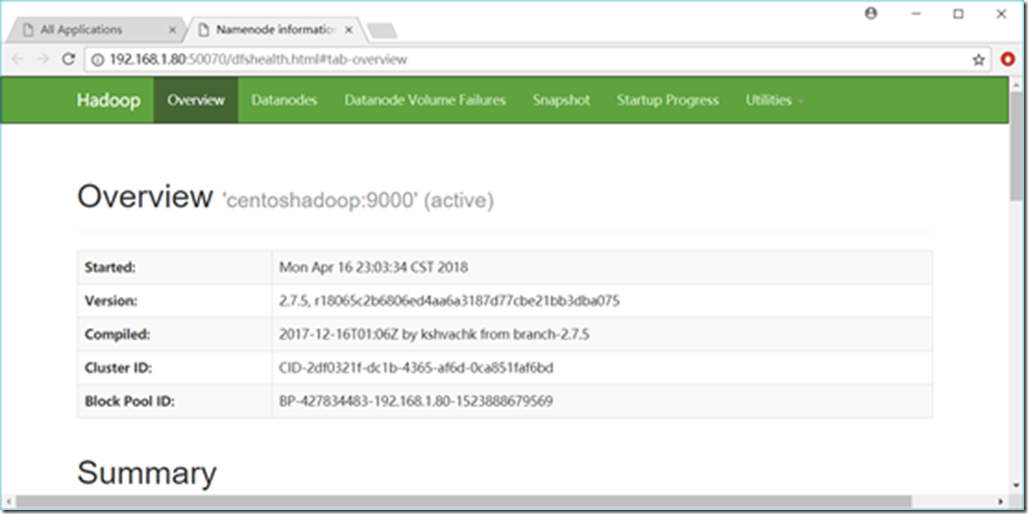

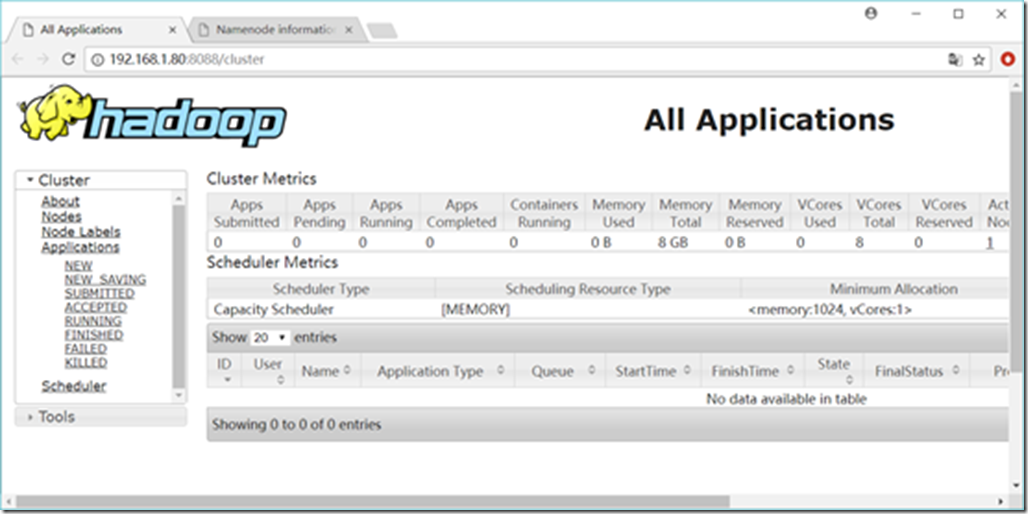
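When you script the startup, it helps to wait until the DataNode has registered before copying data into HDFS. The sketch below extracts the live DataNode count from the report. The helper name is hypothetical, and it relies on the `Live datanodes (N):` line format shown above:

```bash
#!/usr/bin/env bash
# Read `hdfs dfsadmin -report` output on stdin and print the number of live
# DataNodes (nothing if the line is absent).
live_datanodes() {
    sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p' | head -n 1
}
```

For the report above, `hdfs dfsadmin -report | live_datanodes` prints 1.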

8.4. View the Cluster in a Browser

HDFS NameNode web UI: http://192.168.1.80:50070/dfshealth.html#tab-overview

YARN ResourceManager web UI: http://192.168.1.80:8088/cluster

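9. Running the Bundled WordCount Example

Note: the transcripts in this section appear to have been captured on a separate Hadoop 2.9.0 cluster (prompt hostname namenode, ResourceManager at 192.168.1.121). This explains why they show 2.9.0 JAR names and a replication factor of 2. On this installation, the examples JAR is /opt/hadoop/hadoop-2.7.5/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.5.jar; use that path in the hadoop jar command in 9.3.

9.1. Locate the Program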

[hadoop@namenode sbin]$ cd /opt/hadoop/hadoop-2.7.5/share/hadoop/mapreduce/

[hadoop@namenode mapreduce]$ ll

total 5196

-rw-r--r-- 1 hadoop hadoop 571621 Nov 14 07:28 hadoop-mapreduce-client-app-2.9.0.jar

-rw-r--r-- 1 hadoop hadoop 787871 Nov 14 07:28 hadoop-mapreduce-client-common-2.9.0.jar

-rw-r--r-- 1 hadoop hadoop 1611701 Nov 14 07:28 hadoop-mapreduce-client-core-2.9.0.jar

-rw-r--r-- 1 hadoop hadoop 200628 Nov 14 07:28 hadoop-mapreduce-client-hs-2.9.0.jar

-rw-r--r-- 1 hadoop hadoop 32802 Nov 14 07:28 hadoop-mapreduce-client-hs-plugins-2.9.0.jar

-rw-r--r-- 1 hadoop hadoop 71360 Nov 14 07:28 hadoop-mapreduce-client-jobclient-2.9.0.jar

-rw-r--r-- 1 hadoop hadoop 1623508 Nov 14 07:28 hadoop-mapreduce-client-jobclient-2.9.0-tests.jar

-rw-r--r-- 1 hadoop hadoop 85175 Nov 14 07:28 hadoop-mapreduce-client-shuffle-2.9.0.jar

-rw-r--r-- 1 hadoop hadoop 303317 Nov 14 07:28 hadoop-mapreduce-examples-2.9.0.jar

drwxr-xr-x 2 hadoop hadoop 4096 Nov 14 07:28 jdiff

drwxr-xr-x 2 hadoop hadoop 4096 Nov 14 07:28 lib

drwxr-xr-x 2 hadoop hadoop 30 Nov 14 07:28 lib-examples

drwxr-xr-x 2 hadoop hadoop 4096 Nov 14 07:28 sources

[hadoop@namenode mapreduce]$

9.2. Prepare the Data

9.2.1. Create Data Directories in HDFS

[hadoop@namenode mapreduce]$ hadoop fs -mkdir -p /data/wordcount

[hadoop@namenode mapreduce]$ hadoop fs -mkdir -p /output/

[hadoop@namenode mapreduce]$

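The /data/wordcount directory holds the input file for Hadoop's bundled WordCount example, and the job writes its results to /output/wordcount. Only the parent directory /output is created here. The job itself creates /output/wordcount and fails if that directory already exists.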

9.2.2. Create Test Data

[hadoop@namenode mapreduce]$ vi /opt/hadoop/inputWord

Enter some test text. The file used here is listed in 9.2.5.

9.2.3. Upload the Data

[hadoop@namenode mapreduce]$ hadoop fs -put /opt/hadoop/inputWord /data/wordcount/

9.2.4. List the File

[hadoop@namenode mapreduce]$ hadoop fs -ls /data/wordcount/

Found 1 items

-rw-r--r-- 2 hadoop supergroup 241 2018-04-15 22:01 /data/wordcount/inputWord

9.2.5. View the File Contents

[hadoop@namenode mapreduce]$ hadoop fs -text /data/wordcount/inputWord

hello smartmap

hello gispathfinder

language c

language c++

language c#

language java

language python

language r

web javascript

web html

web css

gis arcgis

gis supermap

gis mapinfo

gis qgis

gis grass

gis sage

webgis leaflet

webgis openlayers

[hadoop@namenode mapreduce]$

9.3. Run the WordCount Example

[hadoop@namenode mapreduce]$ hadoop jar /opt/hadoop/hadoop-2.9.0/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.0.jar wordcount /data/wordcount /output/wordcount

18/04/15 22:10:08 INFO client.RMProxy: Connecting to ResourceManager at /192.168.1.121:8032

18/04/15 22:10:09 INFO input.FileInputFormat: Total input files to process : 1

18/04/15 22:10:09 INFO mapreduce.JobSubmitter: number of splits:1

18/04/15 22:10:09 INFO Configuration.deprecation: yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled

18/04/15 22:10:10 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1523794216379_0001

18/04/15 22:10:10 INFO impl.YarnClientImpl: Submitted application application_1523794216379_0001

18/04/15 22:10:10 INFO mapreduce.Job: The url to track the job: http://nameNode:8088/proxy/application_1523794216379_0001/

18/04/15 22:10:10 INFO mapreduce.Job: Running job: job_1523794216379_0001

18/04/15 22:10:20 INFO mapreduce.Job: Job job_1523794216379_0001 running in uber mode : false

18/04/15 22:10:20 INFO mapreduce.Job: map 0% reduce 0%

18/04/15 22:10:30 INFO mapreduce.Job: map 100% reduce 0%

18/04/15 22:10:40 INFO mapreduce.Job: map 100% reduce 100%

18/04/15 22:10:40 INFO mapreduce.Job: Job job_1523794216379_0001 completed successfully

18/04/15 22:10:40 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=305

FILE: Number of bytes written=404363

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=356

HDFS: Number of bytes written=203

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=7775

Total time spent by all reduces in occupied slots (ms)=6275

Total time spent by all map tasks (ms)=7775

Total time spent by all reduce tasks (ms)=6275

Total vcore-milliseconds taken by all map tasks=7775

Total vcore-milliseconds taken by all reduce tasks=6275

Total megabyte-milliseconds taken by all map tasks=7961600

Total megabyte-milliseconds taken by all reduce tasks=6425600

Map-Reduce Framework

Map input records=19

Map output records=38

Map output bytes=393

Map output materialized bytes=305

Input split bytes=115

Combine input records=38

Combine output records=24

Reduce input groups=24

Reduce shuffle bytes=305

Reduce input records=24

Reduce output records=24

Spilled Records=48

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=1685

CPU time spent (ms)=2810

Physical memory (bytes) snapshot=476082176

Virtual memory (bytes) snapshot=1810911232

Total committed heap usage (bytes)=317718528

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=241

File Output Format Counters

Bytes Written=203

[hadoop@namenode mapreduce]$
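MapReduce refuses to start a job whose output directory already exists. A second run of the example therefore fails until you remove /output/wordcount. Below is a sketch of a re-runnable wrapper around the commands from 9.2 and 9.3. `run_wordcount` is hypothetical. The `hadoop fs -mkdir -p`, `-put -f` and `-rm -r -f` options are standard in Hadoop 2.x:

```bash
#!/usr/bin/env bash
# Upload the input, clear old results, and submit the WordCount example.
# Usage: run_wordcount EXAMPLES_JAR LOCAL_INPUT [HDFS_IN_DIR] [HDFS_OUT_DIR]
run_wordcount() {
    local jar="$1" local_file="$2"
    local in_dir="${3:-/data/wordcount}" out_dir="${4:-/output/wordcount}"
    hadoop fs -mkdir -p "$in_dir" || return 1
    # -f overwrites the input file if a copy is already in HDFS
    hadoop fs -put -f "$local_file" "$in_dir/" || return 1
    # -r removes the old output recursively; -f suppresses the error if absent
    hadoop fs -rm -r -f "$out_dir" || return 1
    hadoop jar "$jar" wordcount "$in_dir" "$out_dir"
}
```

On this installation, run `run_wordcount /opt/hadoop/hadoop-2.7.5/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.5.jar /opt/hadoop/inputWord`. Note that `-rm -r -f` deletes the previous results without asking.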

9.4. View the Results

9.4.1. On the Server

[hadoop@namenode mapreduce]$ hadoop fs -ls /output/wordcount

Found 2 items

-rw-r--r-- 2 hadoop supergroup 0 2018-04-15 22:10 /output/wordcount/_SUCCESS

-rw-r--r-- 2 hadoop supergroup 203 2018-04-15 22:10 /output/wordcount/part-r-00000

[hadoop@namenode mapreduce]$ hadoop fs -text /output/wordcount/part-r-00000

arcgis 1

c 1

c# 1

c++ 1

css 1

gis 6

gispathfinder 1

grass 1

hello 2

html 1

java 1

javascript 1

language 6

leaflet 1

mapinfo 1

openlayers 1

python 1

qgis 1

r 1

sage 1

smartmap 1

supermap 1

web 3

webgis 2

[hadoop@namenode mapreduce]$

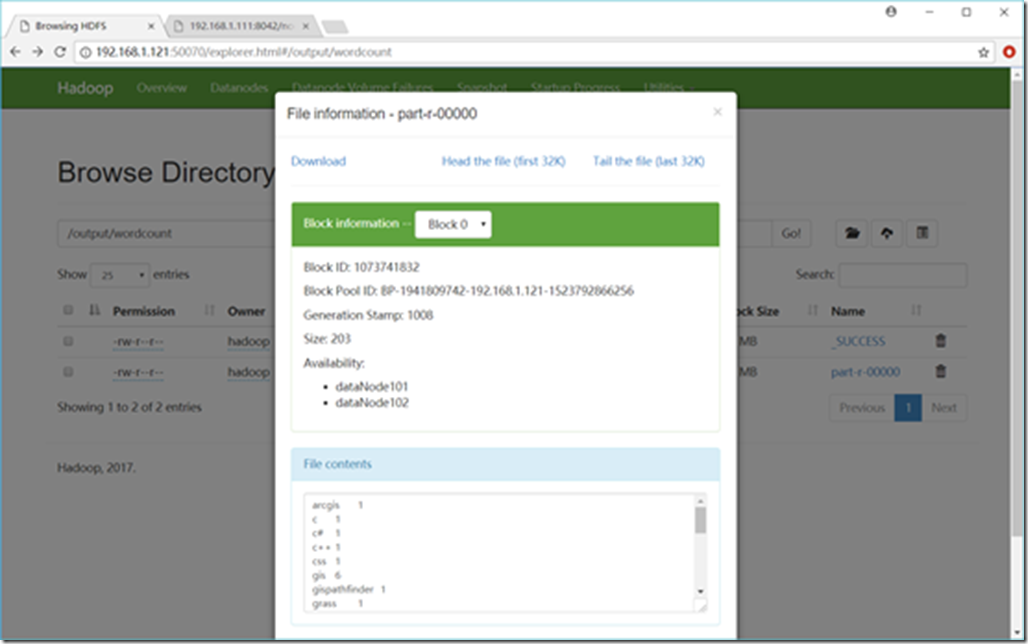
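For a small input like this one, you can cross-check the result locally with standard text tools. WordCount's mapper splits each line on whitespace, the reducer sums the counts per word, and the output keys are sorted in byte order. The pipeline below mirrors that behavior. It is a sketch for verification only, and the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Count words read on stdin and print "word<TAB>count", sorted in byte order
# (LC_ALL=C), which matches the key order of WordCount's part-r-00000.
wordcount_local() {
    tr -s ' \t\r\f' '\n\n\n\n' | grep -v '^$' | LC_ALL=C sort | uniq -c |
        awk '{ print $2 "\t" $1 }'
}
```

`wordcount_local < /opt/hadoop/inputWord` should print the same 24 lines as part-r-00000 above.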

9.4.2. In the Web UI

http://192.168.1.80:50070/explorer.html#/output/wordcount
