Image CAPTCHA recognition for letters, digits, and Chinese characters with CNN + LSTM + CTC

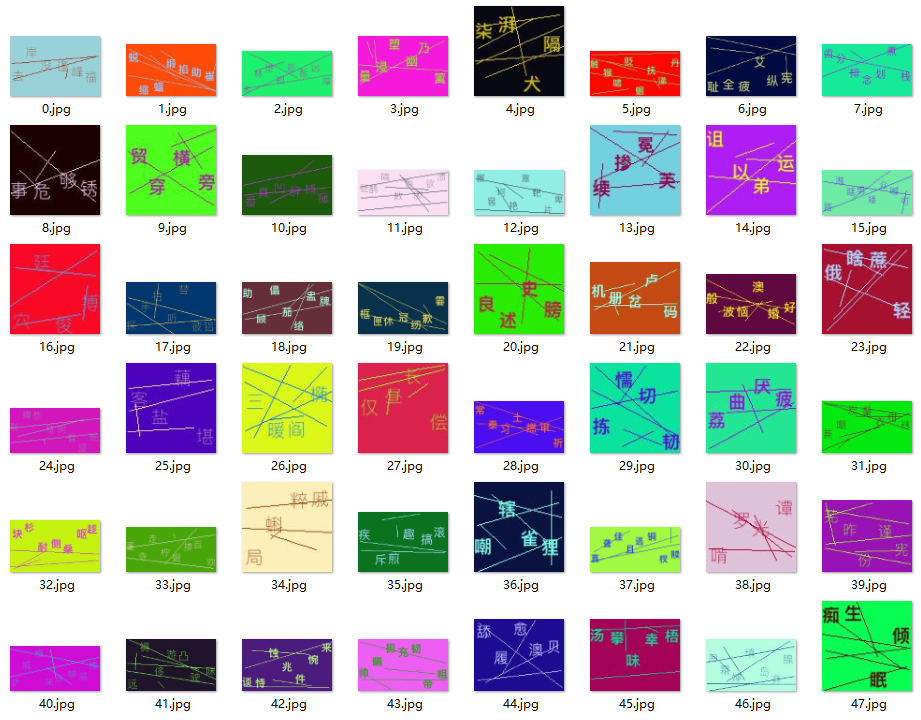

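The CAPTCHA images look like this:

Accuracy after two epochs of training; the entries shown at the top are the samples that were not recognized: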

config_demo.yaml

    System:
      GpuMemoryFraction: 0.7
      TrainSetPath: 'train/'
      TestSetPath: 'test/'
      ValSetPath: 'dev/'
      LabelRegex: '([\u4E00-\u9FA5]{4,8}).jpg'
      MaxTextLenth: 8
      IMG_W: 200
      IMG_H: 100
      ModelName: 'captcha2.h5'
      Alphabet: 'ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789'
    NeuralNet:
      RNNSize: 256
      Dropout: 0.25
    TrainParam:
      EarlyStoping:
        monitor: 'val_acc'
        patience: 10
        mode: 'auto'
        baseline: 0.02
      Epochs: 10
      BatchSize: 100
      TestBatchSize: 10
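To make two of the `System` keys more concrete, here is a minimal sketch of how `LabelRegex` could pull a label out of a file name and how `Alphabet` translates into a class count for CTC. The helper `extract_label` and the sample file names are assumptions made for illustration; the training script listed in this post reads its labels from `train.csv` and builds its alphabet from that file rather than from these keys.

```python
import re

# Values copied from config_demo.yaml
LABEL_REGEX = r'([\u4E00-\u9FA5]{4,8}).jpg'
ALPHABET = 'ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789'


def extract_label(filename, pattern=LABEL_REGEX):
    """Hypothetical helper: return the captured label, or None if the name does not match."""
    match = re.match(pattern, filename)
    return match.group(1) if match else None


# CTC needs one extra class for the blank symbol
NUM_CLASSES = len(ALPHABET) + 1

print(extract_label('验证码测试.jpg'))  # 验证码测试
print(extract_label('abcd.jpg'))        # None, because the pattern only accepts Chinese characters
print(NUM_CLASSES)                      # 63
```

Note that the dot before `jpg` is unescaped in the configured pattern, so it matches any character; writing `\.jpg` would be stricter.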

train.py

    # coding=utf-8
    """
    Convert three-channel images to grayscale for training.
    """
    import itertools
    import os
    import re
    import random
    import string
    from collections import Counter
    from os.path import join

    import yaml
    import cv2
    import numpy as np
    import tensorflow as tf
    from keras import backend as K
    from keras.callbacks import ModelCheckpoint, EarlyStopping, Callback
    from keras.layers import Input, Dense, Activation, Dropout, BatchNormalization, Reshape, Lambda
    from keras.layers.convolutional import Conv2D, MaxPooling2D
    from keras.layers.merge import add, concatenate
    from keras.layers.recurrent import GRU
    from keras.models import Model, load_model

    # safe_load works without the Loader argument that newer PyYAML requires for yaml.load
    with open('./config/config_demo.yaml', 'r', encoding='utf-8') as f:
        cfg_dict = yaml.safe_load(f)

    # TensorFlow 1.x session setup: allocate GPU memory on demand
    config = tf.ConfigProto()
    config.gpu_options.allow_growth = True
    session = tf.Session(config=config)
    K.set_session(session)

    TRAIN_SET_PTAH = cfg_dict['System']['TrainSetPath']
    # Note: validation reads from the training directory as well; every generator
    # below takes its file list from train.csv, so ValSetPath is never used.
    VALID_SET_PATH = cfg_dict['System']['TrainSetPath']
    TEST_SET_PATH = cfg_dict['System']['TestSetPath']
    IMG_W = cfg_dict['System']['IMG_W']
    IMG_H = cfg_dict['System']['IMG_H']
    MODEL_NAME = cfg_dict['System']['ModelName']
    LABEL_REGEX = cfg_dict['System']['LabelRegex']

    RNN_SIZE = cfg_dict['NeuralNet']['RNNSize']
    DROPOUT = cfg_dict['NeuralNet']['Dropout']

    MONITOR = cfg_dict['TrainParam']['EarlyStoping']['monitor']
    PATIENCE = cfg_dict['TrainParam']['EarlyStoping']['patience']
    MODE = cfg_dict['TrainParam']['EarlyStoping']['mode']
    BASELINE = cfg_dict['TrainParam']['EarlyStoping']['baseline']
    EPOCHS = cfg_dict['TrainParam']['Epochs']
    BATCH_SIZE = cfg_dict['TrainParam']['BatchSize']
    TEST_BATCH_SIZE = cfg_dict['TrainParam']['TestBatchSize']

    letters_dict = {}  # image file name -> label, padded with '_' to MAX_LEN
    MAX_LEN = 0


    def get_maxlen():
        """Return the longest label length in train.csv and store it in MAX_LEN."""
        global MAX_LEN
        maxlen = 0
        with open("train.csv", "r", encoding="utf-8") as csv_file:
            lines = csv_file.readlines()
        for line in lines:
            name, label = line.strip().split(",")
            if len(label) > maxlen:
                maxlen = len(label)
        MAX_LEN = maxlen
        return maxlen


    def get_letters():
        """Fill letters_dict from train.csv and return the alphabet as a string."""
        global letters_dict
        letters = ""
        with open("train.csv", "r", encoding="utf-8") as csv_file:
            lines = csv_file.readlines()
        maxlen = get_maxlen()
        for line in lines:
            name, label = line.strip().split(",")
            letters = letters + label
            if len(label) < maxlen:
                label = label + '_' * (maxlen - len(label))
            letters_dict[name] = label
        # Reuse a previously saved alphabet so class indices stay the same between runs
        # (the order of "".join(set(...)) is not stable across Python processes).
        if os.path.exists("letters.txt"):
            with open("letters.txt", "r", encoding="utf-8") as letters_file:
                return letters_file.read()
        return "".join(set(letters))


    letters = get_letters()
    with open("letters.txt", "w", encoding="utf-8") as f_W:
        f_W.write("".join(letters))
    class_num = len(letters) + 1  # plus 1 for blank
    print('Letters:', ''.join(letters))
    print("letters_num:", class_num)


    def labels_to_text(labels):
        # Index len(letters) is the blank / padding class and maps to nothing
        return ''.join([letters[int(x)] if int(x) != len(letters) else '' for x in labels])


    def text_to_labels(text):
        # Characters outside the alphabet (including the '_' padding) map to the blank index
        return [letters.find(x) if letters.find(x) > -1 else len(letters) for x in text]


    def is_valid_str(s):
        for ch in s:
            if ch not in letters:
                return False
        return True


    class TextImageGenerator:

        def __init__(self,
                     dirpath,
                     tag,
                     img_w, img_h,
                     batch_size,
                     downsample_factor,
                     ):
            global letters_dict
            self.img_h = img_h
            self.img_w = img_w
            self.batch_size = batch_size
            self.downsample_factor = downsample_factor
            self.letters_dict = letters_dict
            self.n = len(self.letters_dict)
            self.indexes = list(range(self.n))
            self.cur_index = 0
            self.imgs = np.zeros((self.n, self.img_h, self.img_w))
            self.texts = []

            for i, (img_filepath, text) in enumerate(self.letters_dict.items()):
                img_filepath = dirpath + img_filepath
                if i == 0:
                    # Kept from the original: the first path is hard-coded, presumably to
                    # work around a malformed first entry (such as a BOM) in train.csv.
                    img_filepath = "train/0.jpg"
                img = cv2.imread(img_filepath)
                if img is None:
                    raise FileNotFoundError(img_filepath)
                img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)  # cv2 loads images in BGR order
                img = cv2.resize(img, (self.img_w, self.img_h))
                img = img.astype(np.float32)
                img /= 255
                self.imgs[i, :, :] = img
                self.texts.append(text)
            print(len(self.texts), len(self.imgs), self.n)

        @staticmethod
        def get_output_size():
            return len(letters) + 1

        def next_sample(self):  # return one image and its label per call
            self.cur_index += 1
            if self.cur_index >= self.n:
                self.cur_index = 0
                random.shuffle(self.indexes)
            return self.imgs[self.indexes[self.cur_index]], self.texts[self.indexes[self.cur_index]]

        def next_batch(self):
            while True:
                # width and height are backwards from typical Keras convention
                # because width is the time dimension when it gets fed into the RNN
                if K.image_data_format() == 'channels_first':
                    X_data = np.ones([self.batch_size, 1, self.img_w, self.img_h])
                else:
                    X_data = np.ones([self.batch_size, self.img_w, self.img_h, 1])
                Y_data = np.ones([self.batch_size, MAX_LEN])
                # The first two time steps are dropped in ctc_lambda_func, hence the "- 2"
                input_length = np.ones((self.batch_size, 1)) * (self.img_w // self.downsample_factor - 2)
                label_length = np.zeros((self.batch_size, 1))
                source_str = []

                for i in range(self.batch_size):
                    img, text = self.next_sample()
                    img = img.T
                    if K.image_data_format() == 'channels_first':
                        img = np.expand_dims(img, 0)  # add a channel dimension
                    else:
                        img = np.expand_dims(img, -1)
                    X_data[i] = img
                    Y_data[i] = text_to_labels(text)
                    source_str.append(text)
                    text = text.replace("_", "")  # important step: strip the padding
                    label_length[i] = len(text)

                inputs = {
                    'the_input': X_data,
                    'the_labels': Y_data,
                    'input_length': input_length,
                    'label_length': label_length,
                    # 'source_str': source_str
                }
                outputs = {'ctc': np.zeros([self.batch_size])}
                yield (inputs, outputs)


    # Loss and train functions, network architecture

    def ctc_lambda_func(args):  # CTC is a loss function for sequence outputs
        y_pred, labels, input_length, label_length = args
        # the 2 is critical here since the first couple outputs of the RNN
        # tend to be garbage:
        y_pred = y_pred[:, 2:, :]
        return K.ctc_batch_cost(labels, y_pred, input_length, label_length)


    downsample_factor = 4


    def train(img_w=IMG_W, img_h=IMG_H, dropout=DROPOUT, batch_size=BATCH_SIZE, rnn_size=RNN_SIZE):
        # Input Parameters
        # Network parameters
        conv_filters = 16
        kernel_size = (3, 3)
        pool_size = 2
        time_dense_size = 32

        if K.image_data_format() == 'channels_first':
            input_shape = (1, img_w, img_h)
        else:
            input_shape = (img_w, img_h, 1)

        global downsample_factor
        downsample_factor = pool_size ** 2  # two 2x2 max-pooling layers
        tiger_train = TextImageGenerator(TRAIN_SET_PTAH, 'train', img_w, img_h, batch_size, downsample_factor)
        tiger_val = TextImageGenerator(VALID_SET_PATH, 'val', img_w, img_h, batch_size, downsample_factor)

        act = 'relu'
        input_data = Input(name='the_input', shape=input_shape, dtype='float32')
        inner = Conv2D(conv_filters, kernel_size, padding='same',
                       activation=None, kernel_initializer='he_normal',
                       name='conv1')(input_data)
        inner = BatchNormalization()(inner)  # add BN
        inner = Activation(act)(inner)

        inner = MaxPooling2D(pool_size=(pool_size, pool_size), name='max1')(inner)
        inner = Conv2D(conv_filters, kernel_size, padding='same',
                       activation=None, kernel_initializer='he_normal',
                       name='conv2')(inner)
        inner = BatchNormalization()(inner)  # add BN
        inner = Activation(act)(inner)

        inner = MaxPooling2D(pool_size=(pool_size, pool_size), name='max2')(inner)

        conv_to_rnn_dims = (img_w // (pool_size ** 2), (img_h // (pool_size ** 2)) * conv_filters)
        inner = Reshape(target_shape=conv_to_rnn_dims, name='reshape')(inner)

        # cuts down input size going into RNN:
        inner = Dense(time_dense_size, activation=None, name='dense1')(inner)
        inner = BatchNormalization()(inner)  # add BN
        inner = Activation(act)(inner)
        if dropout:
            inner = Dropout(dropout)(inner)  # guard against overfitting

        # Two layers of bidirectional GRUs
        # GRU seems to work as well, if not better than LSTM:
        gru_1 = GRU(rnn_size, return_sequences=True, kernel_initializer='he_normal', name='gru1')(inner)
        gru_1b = GRU(rnn_size, return_sequences=True, go_backwards=True, kernel_initializer='he_normal',
                     name='gru1_b')(inner)
        gru1_merged = add([gru_1, gru_1b])
        gru_2 = GRU(rnn_size, return_sequences=True, kernel_initializer='he_normal', name='gru2')(gru1_merged)
        gru_2b = GRU(rnn_size, return_sequences=True, go_backwards=True, kernel_initializer='he_normal',
                     name='gru2_b')(gru1_merged)

        inner = concatenate([gru_2, gru_2b])

        if dropout:
            inner = Dropout(dropout)(inner)  # guard against overfitting

        # transforms RNN output to character activations:
        inner = Dense(tiger_train.get_output_size(), kernel_initializer='he_normal',
                      name='dense2')(inner)
        y_pred = Activation('softmax', name='softmax')(inner)
        base_model = Model(inputs=input_data, outputs=y_pred)
        base_model.summary()

        labels = Input(name='the_labels', shape=[MAX_LEN], dtype='float32')
        input_length = Input(name='input_length', shape=[1], dtype='int64')
        label_length = Input(name='label_length', shape=[1], dtype='int64')
        # Keras doesn't currently support loss funcs with extra parameters
        # so CTC loss is implemented in a lambda layer
        loss_out = Lambda(ctc_lambda_func, output_shape=(1,), name='ctc')(
            [y_pred, labels, input_length, label_length])

        model = Model(inputs=[input_data, labels, input_length, label_length], outputs=loss_out)
        # the loss calc occurs elsewhere, so use a dummy lambda func for the loss
        model.compile(loss={'ctc': lambda y_true, y_pred: y_pred}, optimizer='adadelta')

        # Note: the model is compiled without metrics, so a monitor such as 'val_acc' is
        # probably absent from the logs; Keras then only warns and never stops early.
        earlystoping = EarlyStopping(monitor=MONITOR, patience=PATIENCE, verbose=1, mode=MODE, baseline=BASELINE)
        os.makedirs('./tmp', exist_ok=True)  # the checkpoint directory must exist
        train_model_path = './tmp/train_' + MODEL_NAME
        checkpointer = ModelCheckpoint(filepath=train_model_path,
                                       verbose=1,
                                       save_best_only=True)

        # Resume from an earlier checkpoint if one exists
        if os.path.exists(train_model_path):
            model.load_weights(train_model_path)
            print('load model weights:%s' % train_model_path)

        evaluator = Evaluate(model)
        # Note: every step consumes one batch, so steps_per_epoch = n passes over the data
        # roughly batch_size times per epoch; n // batch_size would give a single pass.
        # initial_epoch=1 also means that only EPOCHS - 1 epochs actually run.
        model.fit_generator(generator=tiger_train.next_batch(),
                            steps_per_epoch=tiger_train.n,
                            epochs=EPOCHS,
                            initial_epoch=1,
                            validation_data=tiger_val.next_batch(),
                            validation_steps=tiger_val.n,
                            callbacks=[checkpointer, earlystoping, evaluator])

        print('----train end----')


    # For a real OCR application, this should be beam search with a dictionary
    # and language model. For this example, best path is sufficient.

    def decode_batch(out):
        """Greedy (best-path) CTC decoding of a batch of softmax outputs."""
        ret = []
        for j in range(out.shape[0]):
            # Skip the first two time steps, as in ctc_lambda_func
            out_best = list(np.argmax(out[j, 2:], 1))
            # Collapse consecutive repeats, then drop the blank class
            out_best = [k for k, g in itertools.groupby(out_best)]
            outstr = ''
            for c in out_best:
                if c < len(letters):
                    outstr += letters[c]
            ret.append(outstr)
        return ret


    class Evaluate(Callback):
        def __init__(self, model):
            super().__init__()
            self.accs = []
            self.model = model

        def on_epoch_end(self, epoch, logs=None):
            acc = evaluate(self.model)
            self.accs.append(acc)


    # Test on validation images
    def evaluate(model):
        global downsample_factor
        tiger_test = TextImageGenerator(VALID_SET_PATH, 'test', IMG_W, IMG_H, TEST_BATCH_SIZE, downsample_factor)

        # Build a prediction-only model from the image input to the softmax output
        net_inp = model.get_layer(name='the_input').input
        net_out = model.get_layer(name='softmax').output
        predict_model = Model(inputs=net_inp, outputs=net_out)

        equalsIgnoreCaseNum = 0.00
        equalsNum = 0.00
        totalNum = 0.00
        # next_batch() never ends on its own, so the loop stops after 10000 samples
        for inp_value, _ in tiger_test.next_batch():
            batch_size = inp_value['the_input'].shape[0]
            X_data = inp_value['the_input']

            net_out_value = predict_model.predict(X_data)
            pred_texts = decode_batch(net_out_value)
            labels = inp_value['the_labels']
            texts = []
            for label in labels:
                text = labels_to_text(label)
                texts.append(text)

            for i in range(batch_size):
                totalNum += 1
                if pred_texts[i] == texts[i]:
                    equalsNum += 1
                if pred_texts[i].lower() == texts[i].lower():
                    equalsIgnoreCaseNum += 1
                else:
                    # Print every sample that was not recognized
                    print('Predict: %s ---> Label: %s' % (pred_texts[i], texts[i]))
            if totalNum >= 10000:
                break
        print('---Result---')
        print('Test num: %d, accuracy: %.5f, ignoreCase accuracy: %.5f' % (
            totalNum, equalsNum / totalNum, equalsIgnoreCaseNum / totalNum))
        return equalsIgnoreCaseNum / totalNum


    if __name__ == '__main__':
        train()
        test = True
        if test:
            model_path = './tmp/train_' + MODEL_NAME
            model = load_model(model_path, compile=False)
            evaluate(model)
        print('----End----')
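The decoding step in `decode_batch` is easier to follow on a tiny example. The sketch below is a pure-Python illustration of greedy (best-path) CTC decoding, not the script's own code: the function name `greedy_ctc_decode`, the two-letter alphabet, and the probability table are made up for the example. It also shows where the number of time steps comes from, using the `IMG_W: 200` setting and the two 2x2 pooling layers.

```python
import itertools


def greedy_ctc_decode(probs, alphabet):
    """Best-path decoding; the class index len(alphabet) is the CTC blank."""
    blank = len(alphabet)
    # 1. Pick the most likely class at every time step
    best_path = [max(range(len(row)), key=row.__getitem__) for row in probs]
    # 2. Merge consecutive repeats
    collapsed = [k for k, _ in itertools.groupby(best_path)]
    # 3. Drop blanks and map the remaining indices to characters
    return ''.join(alphabet[k] for k in collapsed if k != blank)


alphabet = "ab"  # illustrative alphabet, so index 2 is the blank
probs = [
    [0.8, 0.1, 0.1],  # a
    [0.7, 0.2, 0.1],  # a again, merged with the previous step
    [0.1, 0.1, 0.8],  # blank, which separates the two a's
    [0.6, 0.3, 0.1],  # a
    [0.1, 0.8, 0.1],  # b
]
decoded = greedy_ctc_decode(probs, alphabet)
print(decoded)  # aab

# Time steps seen by CTC: width 200, downsampled by 2 * 2, minus the 2 discarded steps
time_steps = 200 // (2 ** 2) - 2
print(time_steps)  # 48
```

The blank is what lets CTC emit the same character twice in a row: without the blank step in the middle, the three `a` steps would collapse into a single `a`.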

interface_testset.py

    import itertools
    import os
    import string

    import yaml
    from tqdm import tqdm
    import cv2
    import numpy as np
    import tensorflow as tf
    from keras import backend as K
    from keras.models import Model, load_model

    # safe_load works without the Loader argument that newer PyYAML requires for yaml.load
    with open('./config/config_demo.yaml', 'r', encoding='utf-8') as f:
        cfg_dict = yaml.safe_load(f)

    config = tf.ConfigProto()
    config.gpu_options.allow_growth = True
    session = tf.Session(config=config)
    K.set_session(session)

    MODEL_NAME = cfg_dict['System']['ModelName']

    # Decode with the alphabet that train.py saved to letters.txt, so class indices
    # match the trained model; fall back to the original hard-coded alphabet otherwise.
    if os.path.exists("letters.txt"):
        with open("letters.txt", "r", encoding="utf-8") as letters_file:
            letters = letters_file.read()
    else:
        letters = string.ascii_uppercase + string.ascii_lowercase + string.digits


    def decode_batch(out):
        """Greedy (best-path) CTC decoding of a batch of softmax outputs."""
        ret = []
        for j in range(out.shape[0]):
            out_best = list(np.argmax(out[j, 2:], 1))
            out_best = [k for k, g in itertools.groupby(out_best)]
            outstr = ''
            for c in out_best:
                if c < len(letters):
                    outstr += letters[c]
            ret.append(outstr)
        return ret


    def get_x_data(img_data, img_w, img_h):
        # cv2.imread returns BGR, so convert the same way as in training
        img = cv2.cvtColor(img_data, cv2.COLOR_BGR2GRAY)
        img = cv2.resize(img, (img_w, img_h))
        img = img.astype(np.float32)
        img /= 255
        batch_size = 1
        if K.image_data_format() == 'channels_first':
            X_data = np.ones([batch_size, 1, img_w, img_h])
        else:
            X_data = np.ones([batch_size, img_w, img_h, 1])
        img = img.T  # width becomes the time dimension
        if K.image_data_format() == 'channels_first':
            img = np.expand_dims(img, 0)
        else:
            img = np.expand_dims(img, -1)
        X_data[0] = img
        return X_data


    # Predict on the test set and write the answers to answer.csv
    def interface(datapath="./testset", img_w=200, img_h=100):
        model_path = './tmp/train_' + MODEL_NAME
        model = load_model(model_path, compile=False)

        net_inp = model.get_layer(name='the_input').input
        net_out = model.get_layer(name='softmax').output
        predict_model = Model(inputs=net_inp, outputs=net_out)

        print("Starting prediction; results:")
        listdir = os.listdir(datapath)

        # Mode "w" clears answer.csv; the original opened it with "a" and called
        # truncate(), which does not empty a file opened for appending.
        with open("answer.csv", "w", encoding="utf-8") as save_file:
            # Assumes the test images are named 0.jpg, 1.jpg, ... without gaps
            bar = tqdm(range(len(listdir)), total=len(listdir))
            for idx in bar:
                img_data = cv2.imread(datapath + "/" + str(idx) + ".jpg")
                X_data = get_x_data(img_data, img_w, img_h)
                net_out_value = predict_model.predict(X_data)
                pred_texts = decode_batch(net_out_value)
                # print(str(idx) + ".jpg" + "\t", pred_texts[0])
                save_file.write(str(idx) + "," + pred_texts[0] + "\r\n")


    if __name__ == '__main__':
        interface(datapath="./testset")
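The prediction loop expects the test images to be named `0.jpg`, `1.jpg`, and so on with no gaps, and counts every entry in the directory. If that does not hold, one option is to collect the numbered files explicitly. The helper below is a hypothetical sketch (the name `numbered_jpgs` is not part of the original script) that keeps only `<number>.jpg` names and sorts them numerically instead of alphabetically.

```python
import re


def numbered_jpgs(filenames):
    """Return the '<number>.jpg' names from filenames, sorted by their number."""
    pairs = []
    for name in filenames:
        match = re.fullmatch(r'(\d+)\.jpg', name)
        if match:
            pairs.append((int(match.group(1)), name))
    return [name for _, name in sorted(pairs)]


# Example directory listing; in the script this would come from os.listdir(datapath)
files = numbered_jpgs(['10.jpg', '2.jpg', 'notes.txt', '0.jpg'])
print(files)  # ['0.jpg', '2.jpg', '10.jpg']
```

Iterating over such a list instead of `range(len(listdir))` would skip stray files and avoid passing a missing image to `cv2.cvtColor`.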
